Please check the detailed information in the "INSTRUCTIONS" section below.

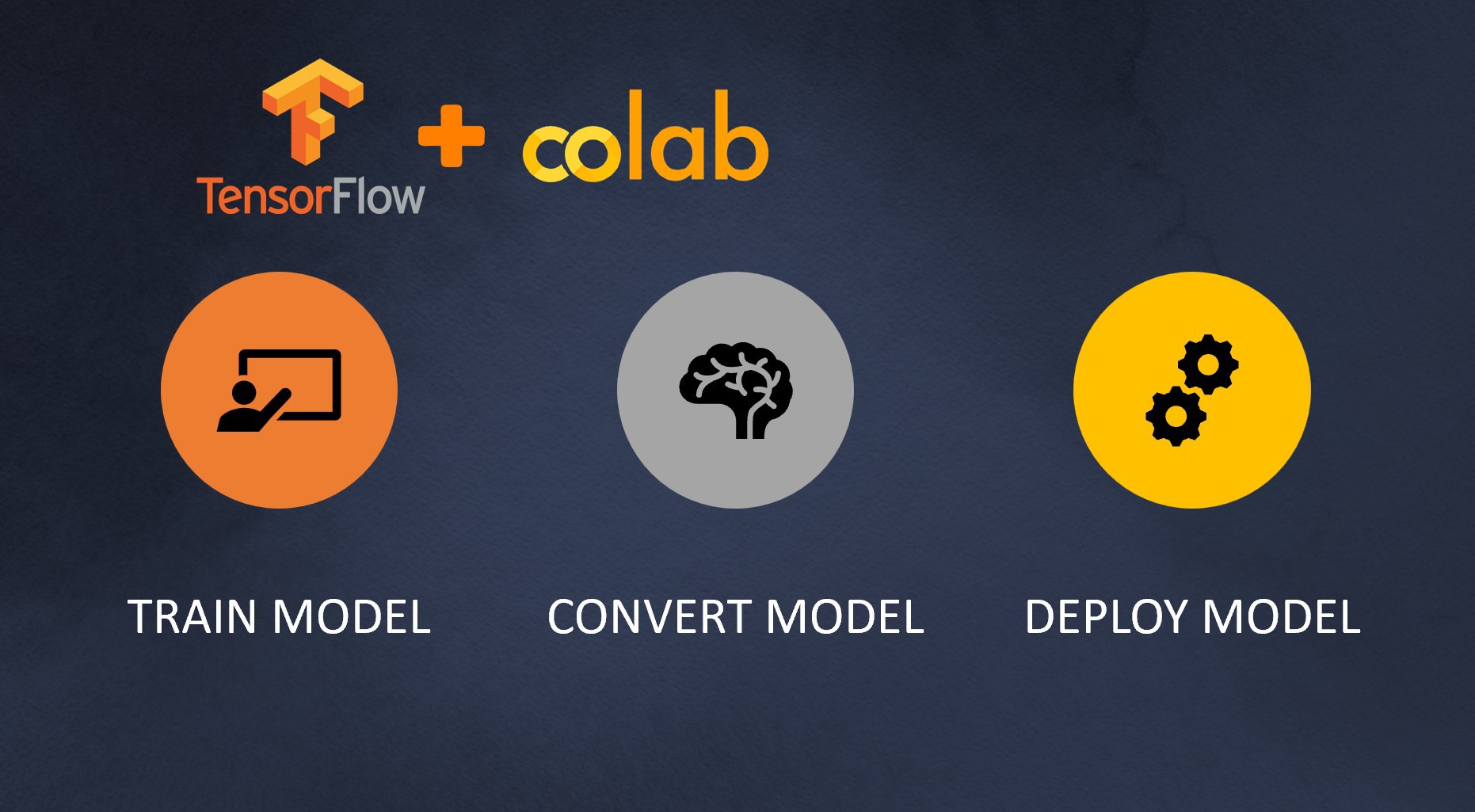

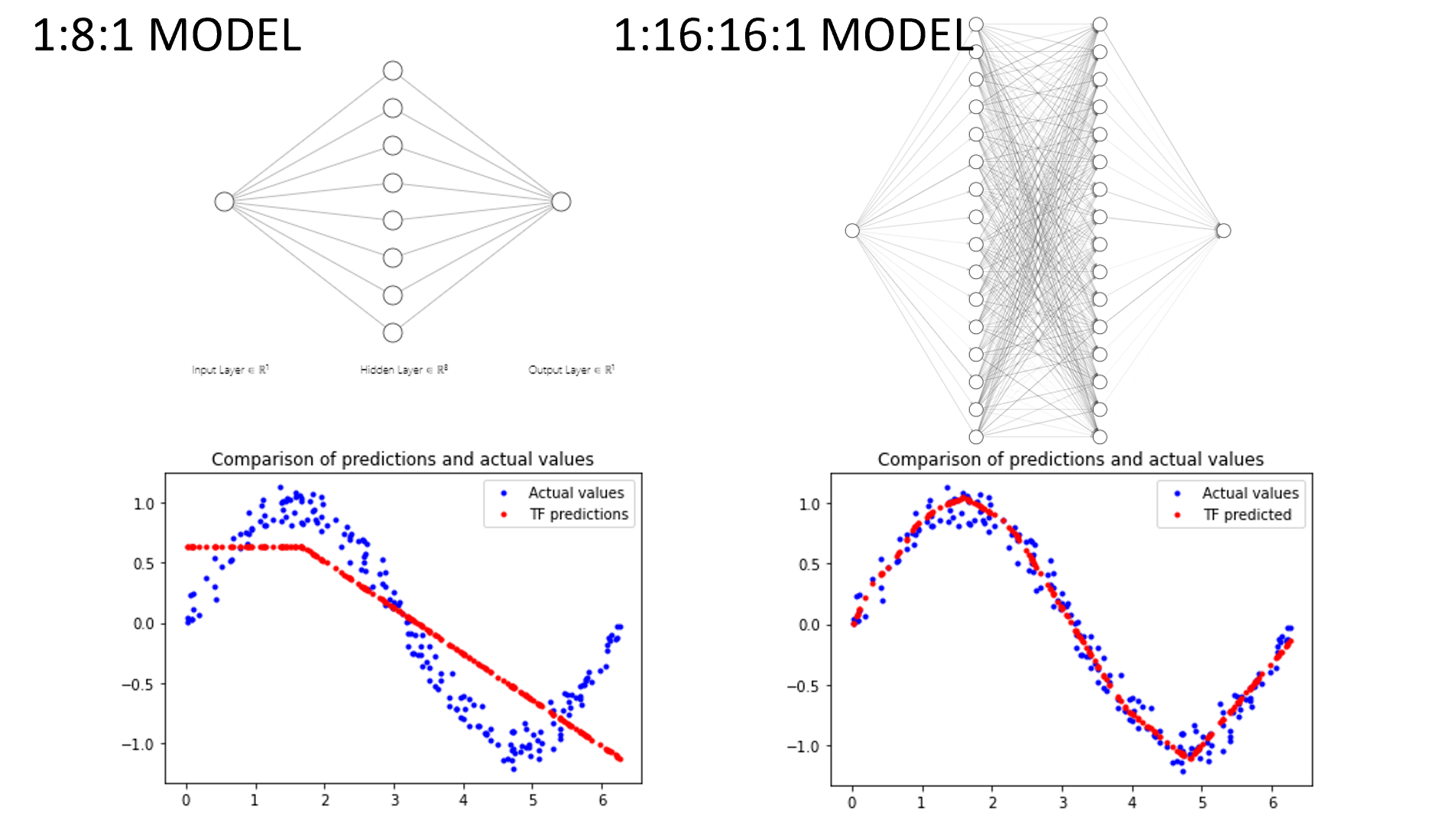

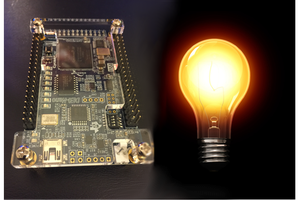

AMB21/22/23 TensorFlow Lite - Hello World

This project demonstrates how to take a simple machine learning model trained with Google TensorFlow and deploy it to an AMB21/22/23 board.

Splendide_Mendax

andrew.powell

RAMKUMAR R
