This technique uses TinyML to recognize gestures robustly. In the interest of reaching as many developers as possible, I won’t assume any understanding of the field.

💡 What We’re Building

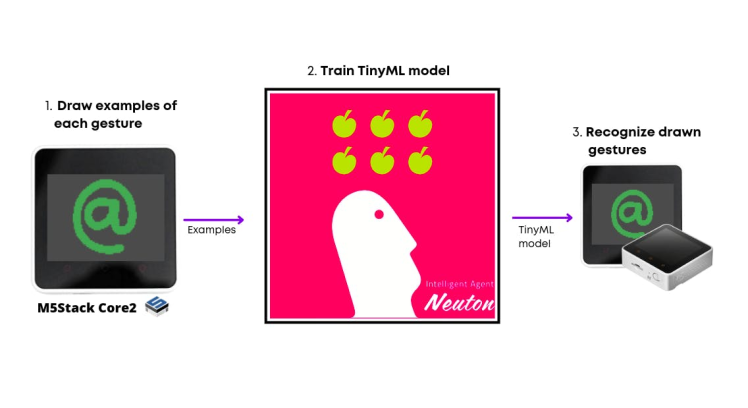

By the end of the tutorial, we’ll have a setup that allows us to draw on-screen gestures and recognize them with high accuracy using TinyML. The components involved are:

- A device to collect some examples of each gesture (check marks, digits, letters, special symbols, etc.).

- A TinyML platform to train a model on the collected data so it recognizes drawn gestures, and to deploy that model onto small MCUs.

- An application on the computer that records the user’s strokes on the screen and uses the TinyML inference algorithm to figure out which application, if any, the gesture should open.

📌 Why draw and use TinyML?

It has been a decade since touch screens became ubiquitous in phones and laptops, but we still generally interact with apps using only minor variations of a few gestures: tapping, panning, zooming, and rotating. It’s tempting to try to handcraft an algorithm for each gesture you intend to use on your touchscreen device. But imagine what can go wrong with more complex gestures, perhaps with multiple strokes, that all have to be distinguished from each other.

Existing techniques: The state-of-the-art for complex gesture recognition on mobile devices seems to be an algorithm called $P (http://depts.washington.edu/madlab/proj/dollar/pdollar.html). $P is the newest in the “dollar family” of gesture recognizers developed by researchers at the University of Washington.

$P’s main advantage is that all the code needed to make it work is short and simple.

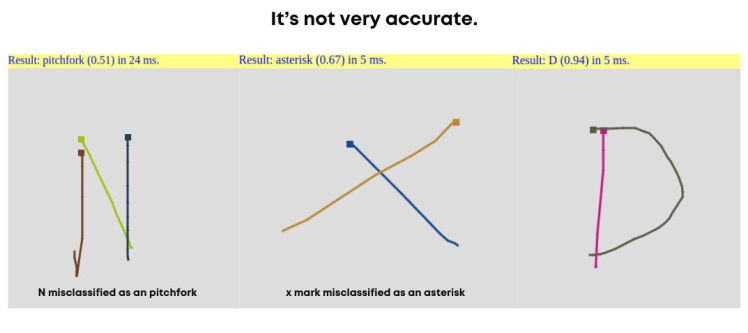

$P is not very accurate

Another limitation of $P is its inability to extract high-level features, which are sometimes the only way of detecting a gesture.

🔥 My approach using TinyML Neural Networks

A robust and flexible approach to detecting complex gestures is the use of machine learning. There are a number of ways to do this. I tried out the simplest reasonable one I could think of:

- Track the user’s gesture and translate it to fit inside a fixed-size box at the screen resolution.

- Store the locations of the touched pixels in a buffer (each pixel’s location is flattened into a single index, L = y * screen width + x, where x is the column and y is the row).

- Use the pixel locations buffer as input to a Neural Network (NN).
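The first two steps above can be sketched in a few lines of Python. This is only a rough illustration, not the code used in this project: the function names `fit_to_box` and `to_pixel_indices` are made up for the example, and scaling the stroke uniformly (so its aspect ratio is preserved) and dropping consecutive duplicate pixels are my own assumptions.

```python
from typing import List, Tuple


def fit_to_box(points: List[Tuple[float, float]], width: int, height: int) -> List[Tuple[int, int]]:
    """Translate and uniformly scale a stroke so it fits a width x height pixel grid.

    Assumption: the aspect ratio is preserved (one scale factor for both axes).
    """
    if not points:
        return []
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    min_x, min_y = min(xs), min(ys)
    # Largest extent of the stroke; fall back to 1.0 for a single tap
    # so we never divide by zero.
    span = max(max(xs) - min_x, max(ys) - min_y) or 1.0
    scale = (min(width, height) - 1) / span
    return [
        (int(round((x - min_x) * scale)), int(round((y - min_y) * scale)))
        for x, y in points
    ]


def to_pixel_indices(pixels: List[Tuple[int, int]], width: int) -> List[int]:
    """Flatten (x, y) pixels to L = y * width + x, skipping consecutive repeats."""
    indices: List[int] = []
    for x, y in pixels:
        location = y * width + x
        if not indices or indices[-1] != location:
            indices.append(location)
    return indices


# Hypothetical stroke in raw touch coordinates, mapped onto an 11 x 11 grid.
stroke = [(100.0, 50.0), (110.0, 50.0), (110.0, 60.0)]
pixels = fit_to_box(stroke, width=11, height=11)
buffer = to_pixel_indices(pixels, width=11)
print(buffer)  # [0, 10, 120]
```

A network expects a fixed number of inputs, so in practice the buffer would also need to be padded or truncated to a fixed length. How that is done here is not shown, so I have left it out of the sketch.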

This converts the problem into a classification and pattern recognition problem, which NNs solve extremely well. In short, without NNs it’s much harder than you think to write code that explicitly detects, for example, a stroke the user made in the shape of a digit or symbol.

👽 What’s a Machine Learning Algorithm?

A machine learning algorithm learns from a dataset in order to make inferences given incomplete information about other data.

In our case, the data are strokes made on the screen by the user and their associated gesture classes (“alphabets”, “symbols”, etc.). What we want to make inferences about are new strokes made by a user for which we don’t know the gesture class (incomplete information).

⭐ Allowing an algorithm to learn from data is called “training”. The resulting inference machine that models the data is aptly called a “model”.
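To make “training” and “model” concrete, here is a deliberately tiny toy example. It is not a neural network and not what this project uses: it is a nearest-centroid classifier, chosen only because it fits in a few lines. The names `train` and `infer`, the two-number feature vectors, and the gesture labels are all made up for illustration.

```python
from collections import defaultdict
from math import dist


def train(samples):
    """'Training': average the feature vectors of each class into one centroid.

    The returned dict (label -> centroid) is the 'model'.
    """
    sums = {}
    counts = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = list(features)
        else:
            sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def infer(model, features):
    """'Inference': pick the class whose centroid is closest to the new sample."""
    return min(model, key=lambda label: dist(model[label], features))


# Labeled examples: (features, gesture class).
samples = [
    ([0.0, 0.0], "check"),
    ([0.2, 0.0], "check"),
    ([1.0, 1.0], "circle"),
    ([0.8, 1.0], "circle"),
]
model = train(samples)

# A new sample whose class we don't know (the "incomplete information").
print(infer(model, [0.1, 0.1]))  # check
```

A neural network follows the same two-phase pattern; it just learns a far more flexible mapping from input to class than one average per class.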

💾 Step 1: Making the Data Set

Like any machine learning algorithm, my network needed examples (drawn gestures) to learn from. To make the data as realistic as possible, I wrote data collection code for inputting and maintaining a data set of gestures on the same touch screen where the network would eventually be used.
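As a rough idea of what such collection code might do, the sketch below appends one labeled gesture per row to a comma-separated file and reads the rows back. The row layout (label first, then the pixel locations), the file name, and the helper names `append_sample` and `load_samples` are assumptions for illustration, not the actual format used here.

```python
import csv


def append_sample(path, label, pixel_indices):
    """Append one gesture as a CSV row: label, then its pixel locations."""
    with open(path, "a", newline="") as f:
        csv.writer(f).writerow([label, *pixel_indices])


def load_samples(path):
    """Read the rows back as (label, [pixel locations]) pairs."""
    samples = []
    with open(path, newline="") as f:
        for row in csv.reader(f):
            if row:  # skip blank lines
                samples.append((row[0], [int(value) for value in row[1:]]))
    return samples
```

Calling `append_sample("gestures.csv", "check", [0, 10, 120])` after each drawn gesture would grow the data set one row at a time, and `load_samples("gestures.csv")` would return it ready for training.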

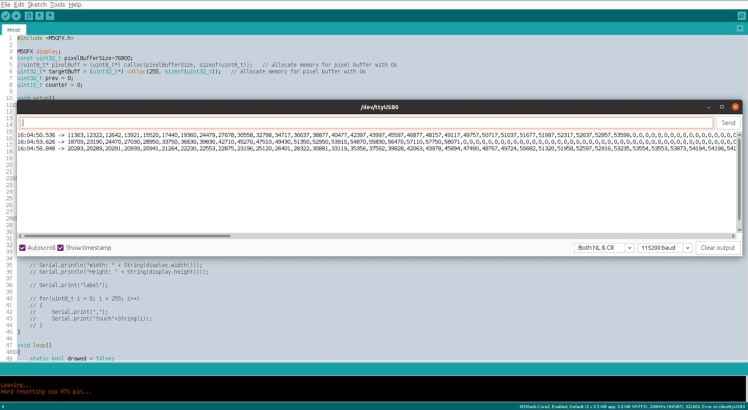

Comma-separated data to be stored in CSV format for training

To understand the data set collection, we will need some knowledge of pixels. In simple terms, a pixel can be identified by a pair of integers providing the column number and the row number. In our case, we are using...

Sumit

alex.miller