This simple example can be extended in several ways and applied to more complex gesture recognition cases, such as map navigation and route creation, TV interface control when playing games or switching channels, or control of children's toys by hand movements.

In practice, replacing mechanical pushbuttons with a gesture-controlled interface is an excellent way to:

- add value and advanced features to your device

- improve your product’s performance and reliability

- increase customer appeal

Brief Task Overview

Handwritten digit recognition with models trained on the MNIST dataset is a popular “Hello World” project for deep learning because it is simple to build a network that achieves over 90% accuracy on it. There are many open-source implementations of MNIST models on the Internet, making it a well-documented starting point for machine learning beginners.

Deep Learning models usually require a considerable amount of resources for inference, but we are interested in running neural networks on low-power embedded systems with limited available resources.

In my case, MNIST digit recognition will be performed by a Neuton TinyML model, with a GUI added to increase usability. Model allocation, input and output processing, and inference will be handled by the Neuton C SDK and custom code written specifically for this example.

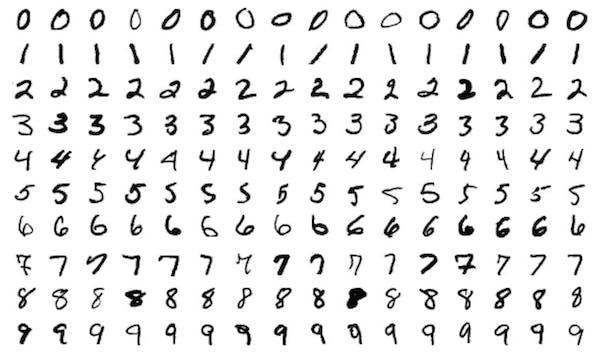

A Look at the MNIST Dataset

The dataset used in this article consists of 60,000 training and 10,000 testing examples of centered grayscale images of handwritten digits. Each sample has a resolution of 28x28 pixels.

The samples were collected from high-school students and Census Bureau employees in the US, so the dataset contains mostly examples of numbers as they are written in North America. For European-style numbers, a different dataset has to be used. Convolutional neural networks typically give the best result when used with this dataset, and even simple networks can achieve high accuracy.

The data is available on Kaggle: https://www.kaggle.com/competitions/digit-recognizer/data

Dataset Description

The data files train.csv and test.csv contain grayscale images of hand-drawn digits, from 0 to 9.

Each image is 28 pixels in height and 28 pixels in width, 784 pixels in total. Each pixel has a single pixel value associated with it, indicating the lightness or darkness of that pixel, with higher numbers meaning darker. This pixel value is an integer between 0 and 255, inclusive.

Each pixel column in the training set has a name like pixelx, where x is an integer between 0 and 783, inclusive. To locate this pixel on the image, suppose that we have decomposed x as x = i * 28 + j, where i and j are integers between 0 and 27, inclusive. Then pixelx is located on row i and column j of a 28 x 28 matrix (indexing from zero).
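The decomposition x = i * 28 + j can be checked with a few lines of Python. This is only an illustrative sketch: the helper names `pixel_position` and `pixel_index` are made up for this example and are not part of the dataset or of any library.

```python
WIDTH = 28  # image width and height in pixels, per the dataset description


def pixel_position(x: int) -> tuple[int, int]:
    """Return (row i, column j) for the column named pixel<x>."""
    if not 0 <= x < WIDTH * WIDTH:
        raise ValueError(f"pixel index {x} is outside 0..{WIDTH * WIDTH - 1}")
    # divmod gives the quotient (row) and remainder (column) of x / 28
    return divmod(x, WIDTH)


def pixel_index(i: int, j: int) -> int:
    """Inverse mapping: row i and column j back to the flat index x."""
    return i * WIDTH + j


if __name__ == "__main__":
    for x in (0, 27, 28, 783):
        i, j = pixel_position(x)
        print(f"pixel{x} -> row {i}, column {j}")
```

For example, pixel28 is the first pixel of the second row (row 1, column 0), and pixel783 is the bottom-right corner (row 27, column 27).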

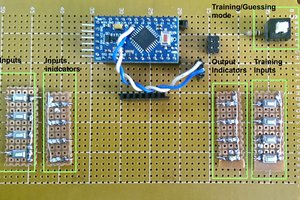

Application Functionality

The application is controlled through a GUI displayed on a touch-sensitive TFT LCD. The GUI, as shown, includes a touch-based input area for writing digits, an output area for displaying the results of inference, and two buttons: one for running the inference and the other for clearing the input and output areas. The application also prints the result and the confidence of the prediction to standard output, which can be read with a terminal program such as PuTTY listening on the associated COM port while the board is connected to the PC.

Procedure

Training MNIST model on Neuton Platform

To skip grayscale conversions in the original Kaggle dataset, pixels of the main color, regardless of what it is, are considered white (1) and everything else black (0). I modified the original MNIST dataset to get rid of grayscale values using this Python script.

import csv

import numpy as np

f = open(...
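The original script is cut off in this excerpt, so the following is only a rough sketch of the same idea, not the author's code. It assumes the Kaggle layout (a header row, then one row per image with the label in the first column followed by the pixel values) and assumes that any non-zero pixel counts as "ink" and becomes 1. The function names `binarize_row` and `binarize_csv` are hypothetical, and it uses only the standard `csv` module rather than NumPy to stay self-contained.

```python
import csv
import io


def binarize_row(row):
    """Keep the label (first column) and map every pixel value to "0" or "1"."""
    label, pixels = row[0], row[1:]
    # Assumption: any non-zero grayscale value is treated as part of the digit.
    return [label] + ["1" if int(p) > 0 else "0" for p in pixels]


def binarize_csv(src, dst):
    """Copy a train.csv-style file from src to dst with binarized pixels."""
    reader = csv.reader(src)
    writer = csv.writer(dst, lineterminator="\n")
    writer.writerow(next(reader))  # copy the header row unchanged
    for row in reader:
        writer.writerow(binarize_row(row))


# Tiny in-memory demonstration with three pixel columns instead of 784.
sample = io.StringIO("label,pixel0,pixel1,pixel2\n7,0,128,255\n")
out = io.StringIO()
binarize_csv(sample, out)
print(out.getvalue())
```

To process the real files, the same function can be called with two objects returned by `open(..., newline="")`, one for reading and one for writing. A different threshold (for example 127 instead of 0) is an equally reasonable choice and only changes the comparison in `binarize_row`.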

