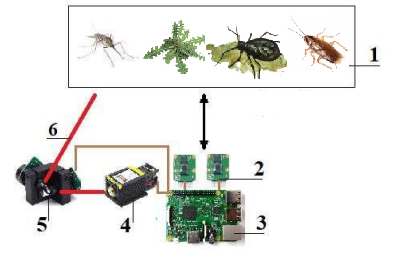

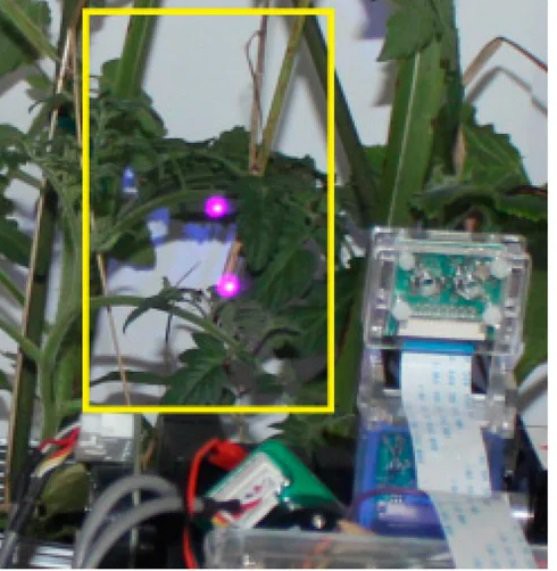

To detect the X, Y coordinates, I initially used Haar cascades on a Raspberry Pi and later switched to yolov4-tiny on a Jetson Nano. For the Z coordinate, I used stereo vision in OpenCV.

Then the required values for the mirror angles are calculated.

The Raspberry Pi/Jetson Nano sends commands for the galvanometer over SPI to an MCP4922 DAC. Electrical scheme (here). The analog signal from the MCP4922 goes to an amplifier, which produces a bipolar signal. In the end, we have -12 V to +12 V for controlling the positions of the mirrors.
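As an illustration of the SPI command, here is a minimal sketch of how a 16-bit write word for the MCP4922 can be assembled. The bit layout follows the MCP4922 datasheet (channel select, buffer, gain, shutdown, then 12 data bits). The function name and the default settings are assumptions for this example, not the code used in this repository.

```python
def mcp4922_word(value, channel=0, buffered=False, gain_1x=True, active=True):
    """Build the two SPI bytes of an MCP4922 write command.

    Bit 15: channel (0 = DAC A, 1 = DAC B)
    Bit 14: input buffer control (1 = buffered)
    Bit 13: gain select (1 = 1x, 0 = 2x)
    Bit 12: shutdown control (1 = output active)
    Bits 11-0: 12-bit data value
    """
    if not 0 <= value <= 0x0FFF:
        raise ValueError("value must fit in 12 bits (0..4095)")
    if channel not in (0, 1):
        raise ValueError("channel must be 0 (DAC A) or 1 (DAC B)")
    word = (
        (channel << 15)
        | (int(buffered) << 14)
        | (int(gain_1x) << 13)
        | (int(active) << 12)
        | value
    )
    return [word >> 8, word & 0xFF]  # high byte first


# Mid-scale on DAC A and full-scale on DAC B.
# Which channel drives which mirror is an assumption here.
x_bytes = mcp4922_word(2048, channel=0)
y_bytes = mcp4922_word(4095, channel=1)
print([hex(b) for b in x_bytes], [hex(b) for b in y_bytes])
```

On the board itself, the two bytes would typically be sent with an SPI library, for example `spidev` and its `xfer2` method. That part is left out so the sketch runs without hardware.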

The principle of operation

The single-board computer processes the digital signal from the camera, determines the position of the object, and transmits a digital signal to the analog board (3), where a digital-to-analog converter converts it to the 0-5 V range. A board with an operational amplifier turns this into a bipolar voltage, which feeds the galvanometer motor driver boards (4); from there the signal goes to the galvanometers (7). The galvanometers use mirrors to change the direction of the laser (6). The system is powered by the power supply (5). The cameras (2) determine the distance to the object. In short, the cameras detect mosquitoes and pass the data on to the galvanometer, which sets the mirrors in the correct position, and then the laser turns on.
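The signal chain can be illustrated with a little arithmetic. The sketch below assumes a 12-bit DAC with a 5 V reference and an amplifier stage that maps 0-5 V linearly onto -12 V to +12 V. The linear mapping and all names are assumptions for illustration; the real gain and offset depend on the amplifier board.

```python
DAC_BITS = 12
DAC_VREF = 5.0                 # volts, the 0-5 V DAC range from the text
V_MIN, V_MAX = -12.0, 12.0     # volts, the bipolar range from the text


def dac_code_to_volts(code):
    """Ideal DAC output voltage for a 12-bit code (Vref * code / 4096)."""
    if not 0 <= code < 2 ** DAC_BITS:
        raise ValueError("code must be in 0..4095")
    return DAC_VREF * code / 2 ** DAC_BITS


def amplified_volts(v_dac):
    """Assumed linear mapping of 0-5 V onto the bipolar output range."""
    return V_MIN + (V_MAX - V_MIN) * v_dac / DAC_VREF


for code in (0, 2048, 4095):
    v = dac_code_to_volts(code)
    print(code, round(v, 3), round(amplified_volts(v), 3))
```

Under these assumptions, mid-scale code 2048 gives 2.5 V at the DAC and 0 V at the amplifier output, which corresponds to the mirror's center position.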

Don't use the power laser!

The main factor limiting the development of this technology is the danger that the laser may damage the eyes. The laser can hit a blood vessel and clog it, it can hit the blind spot, where nerves from all over the eye go to the brain, or it can burn out a line of "pixels". The damaged retina can then begin to flake off, which leads to complete and irreversible loss of vision. This is especially dangerous because a person may not notice the initial damage from a laser hit: there are no pain receptors there, and the brain fills in objects in the damaged areas (remapping of dead pixels). Only when the damaged area becomes large enough does the person start to notice that some objects are not visible.

We can develop additional safety systems, such as human detection, audio sensors, etc. But in any case, we cannot make the installation 100% safe, since a laser beam can be reflected and damage the eye of a person who is outside the device's field of view, even at a long distance. Therefore, this technology should not be used at home.

My strong recommendation: don't use a power laser! I recommend making a device that tracks an object with a safe laser pointer.

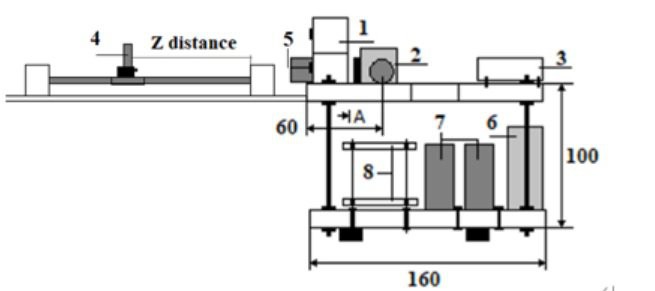

Dimensions

1 - PI cameras, 2 - galvanometer, 3 - Jetson nano, 4 - adjusting the position to the object, 5 - laser device, 6 - power supply, 7 - galvanometer driver boards, 8 - analog conversion boards

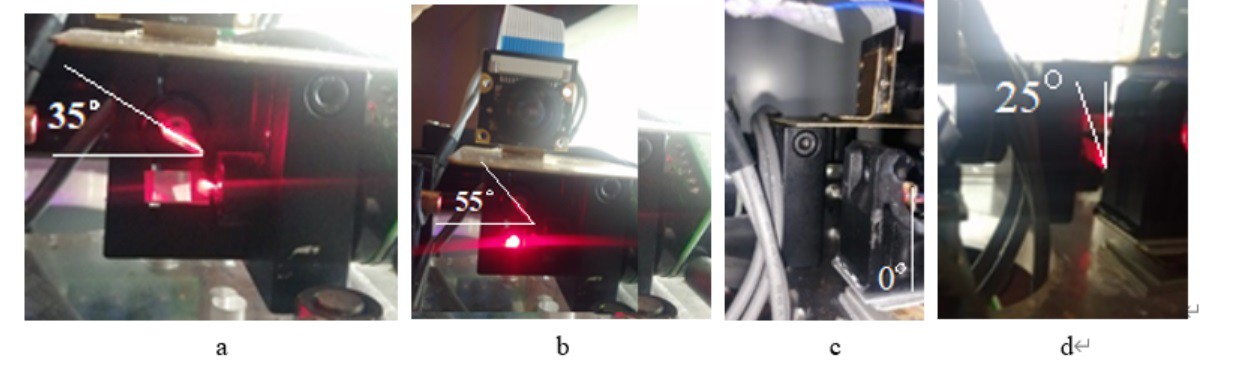

Galvanometer setting

In practice, the maximum deflection angle of the mirrors is set at the factory, but it must be checked before use. For example, according to the documentation, our galvanometer had a step width of 30, but as it turned out, we have only 2.

Maximum and minimum positions of galvanometer mirrors:

a - lower position - 35° for x mirror;

b - upper position - 55° for x mirror;

c - lower position - 0° for y mirror;

d - upper position - 25° for y mirror;

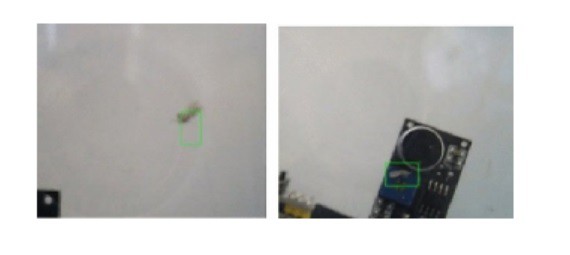

Determining the coordinates of an object

X,Y - coordinate

Z-coordinate

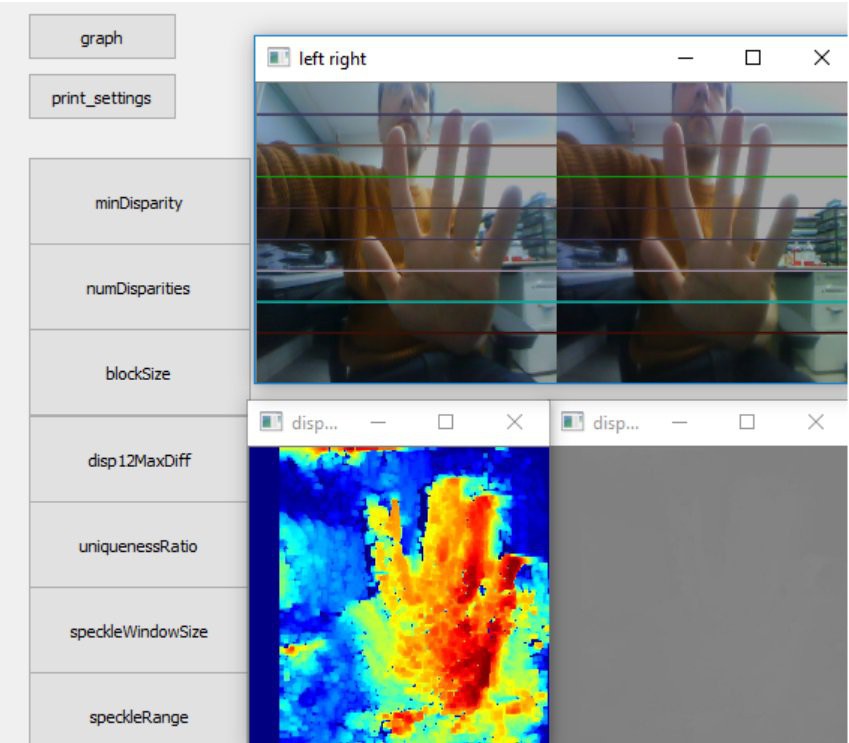

We created a GUI; the source is here.

Computer vision determines the position of the object in the X, Y plane, and its ROI is taken from that position. Then I use stereo vision to compute a depth map, and for the given ROI we calculate the average pixel value with NumPy's np.average, which allows us to calculate the distance to the object.
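A minimal sketch of the ROI averaging step is shown below. The ROI format `(x, y, w, h)`, the function names, and the camera parameters are assumptions for illustration. The conversion to distance uses the common pinhole stereo relation Z = f * B / d, which may differ from the calibration used in this project.

```python
import numpy as np


def roi_mean(stereo_map, roi):
    """Average the map values inside roi = (x, y, w, h) with np.average."""
    x, y, w, h = roi
    patch = stereo_map[y:y + h, x:x + w]
    if patch.size == 0:
        raise ValueError("ROI lies outside the map")
    return float(np.average(patch))


def disparity_to_distance(disparity_px, focal_px, baseline_m):
    """Pinhole stereo model: Z = f * B / d (assumed, not project-specific)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Synthetic 4x4 map standing in for a real disparity map.
demo_map = np.arange(16, dtype=float).reshape(4, 4)
mean_value = roi_mean(demo_map, (1, 1, 2, 2))  # averages 5, 6, 9, 10
print(mean_value)

# focal_px and baseline_m are hypothetical calibration values.
print(disparity_to_distance(20.0, focal_px=700.0, baseline_m=0.1))
```

Averaging over the ROI rather than reading a single pixel smooths out noise in the map, at the cost of mixing in background pixels when the ROI is larger than the object.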

You can find more details in the preprint Low-Cost Stereovision System (Disparity Map) For Few Dollars.

Determining the angle of the galvanometer mirror

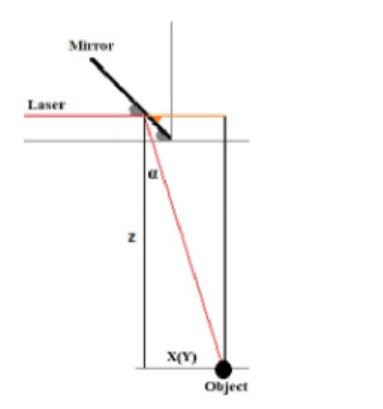

angle of galvanometer mirror theory

The laser beam obeys the laws of optics, so, depending on the design of the galvanometer, the required mirror tilt angle α can be calculated with geometric formulas. In our case, it follows from the tangent of α, which equals the ratio of the opposite side, X (or Y, the position calculated by deep learning), to the adjacent side, Z (calculated by stereo vision).
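The relation tan α = X / Z (or Y / Z) can be written as a short function. This is a sketch under the assumption that X (or Y) and Z are in the same units and measured from the mirror; the function name is hypothetical.

```python
import math


def mirror_angle_deg(offset, distance):
    """Angle alpha in degrees with tan(alpha) = offset / distance.

    offset   - X or Y position of the object (same units as distance)
    distance - Z distance to the object, must be positive
    """
    if distance <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(math.atan2(offset, distance))


# An object 0.5 m to the side at 1.0 m distance.
print(round(mirror_angle_deg(0.5, 1.0), 2))
```

Whether α is applied directly as the mirror rotation depends on the galvanometer design: by the law of reflection, rotating a mirror by some angle turns the reflected beam by twice that angle.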

We need more FPS

FPS is a real problem for single-board computers. For one object, the Jetson reached the following results with the Yolov4-tiny model.

Framework

- with Keras: 4-5 FPS
- with Darknet: 12-15 FPS
- with Darknet TensorRT: 24-27 FPS
- with Darknet DeepStream: 23-26 FPS
- with tkDNN: 30-35 FPS

You can find more details in the paper published on arXiv: [Increasing FPS for single board computers and embedded computers in 2021 (Jetson nano and YOVOv4-tiny). Practice and review](https://arxiv.org/abs/2107.12148)

Demonstrations

In this video, a laser (the red point) tries to catch a yellow LED. This is the adjustment process; in practice, the yellow LED could be a mosquito and the red laser could be a powerful laser.


In the video below, you can see how YoloV4-tiny detects a cockroach, after which the laser turns on and a signal is sent to the galvanometer to set the position of the mirror. With a more powerful laser, the efficiency is much higher, but such a video cannot be shot because the light is too bright.

![Detected a cockroach](https://github.com/Ildaron/Laser_control/blob/master/Supplementary%20files/coac1.bmp)

Security questions

An additional device - a security module that will turn off the laser:

- Use additional cameras to detect people.

- Use audio sensors to capture voices and noise.

- Block the laser beam mechanically.

- Use a thermal camera and turn the laser off if any heating is detected; this may also help protect against fire and overheating.

- Teach the system to detect reflections of the laser from glass or other mirror-like surfaces (for example, by switching on a low-power laser as a check before turning on the power laser).
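The checks listed above could be combined into a single interlock that enables the laser only when every sensor reports a clear state. The sketch below is purely illustrative: the field names and the idea of four boolean inputs are assumptions, and, as noted earlier, no such module makes the installation 100% safe.

```python
from dataclasses import dataclass


@dataclass
class SafetyInputs:
    """Hypothetical sensor flags; True means a hazard was detected."""
    person_detected: bool       # additional cameras
    sound_detected: bool        # audio sensors
    heat_detected: bool         # thermal camera
    reflection_detected: bool   # low-power test shot saw a reflection


def laser_allowed(inputs: SafetyInputs) -> bool:
    """Allow the laser only if no hazard flag is set (fail-closed)."""
    return not (
        inputs.person_detected
        or inputs.sound_detected
        or inputs.heat_detected
        or inputs.reflection_detected
    )


clear = SafetyInputs(False, False, False, False)
voice = SafetyInputs(False, True, False, False)
print(laser_allowed(clear), laser_allowed(voice))  # True False
```

Treating any single flag as a reason to keep the laser off keeps the logic simple and errs on the side of not firing.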

Discussion and Future work

We can try to light up the room so that the mosquito stands out against a bright background, and then use OpenCV functions such as inRange, or Haar cascades, to detect the object. Against a bright background, mosquitoes will be detected without problems. This approach suits low-power single-board computers: Raspberry Pi, Orange Pi, Banana Pi, etc.
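The bright-background idea can be sketched with plain NumPy: pixels darker than a threshold are treated as the object, and their centroid gives the X, Y position. This is a stand-in for a single-channel cv2.inRange mask, not the project's detector; the threshold of 60 and the function name are assumptions.

```python
import numpy as np


def dark_object_centroid(gray, max_value=60):
    """Return the (x, y) centroid of pixels <= max_value, or None.

    gray      - 2D uint8 image with a bright background
    max_value - hypothetical brightness threshold for "dark" pixels
    """
    mask = gray <= max_value
    if not mask.any():
        return None
    ys, xs = np.nonzero(mask)
    return float(xs.mean()), float(ys.mean())


# Synthetic frame: white background with a small dark blob.
frame = np.full((10, 10), 255, dtype=np.uint8)
frame[4:6, 2:4] = 10
print(dark_object_centroid(frame))  # (2.5, 4.5)
```

A single global threshold like this only works when the lighting is even; with several dark objects in the frame, the mask would first need to be split into connected regions.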

For the Jetson Nano, we can use yolov4-tiny, which reaches 30-35 FPS with the tkDNN library.

Research laser effect

Use the lowest laser power possible. The laser should burn the wings of mosquitoes but be safe for the eyes. This requires research into laser power, laser wavelength, and their effectiveness against mosquitoes. For safety, the lower the power, the better.

Remote control

Laser control from a stationary computer. An IP camera installed next to the laser only transmits video to the computer; the computer analyzes it on a powerful processor or video card and sends the coordinates for the laser back via Wi-Fi. In this case, we can use very powerful computing hardware.

PCB boards

Build the device entirely on our own electronic boards. The current galvanometer is made for laser shows and changes position 20,000 times per second, which is why its motor drivers are so large and powerful. It would be useful to make a small PCB that changes the position of the laser only 200 times per second. The end result would be, so to speak, a pocket version.

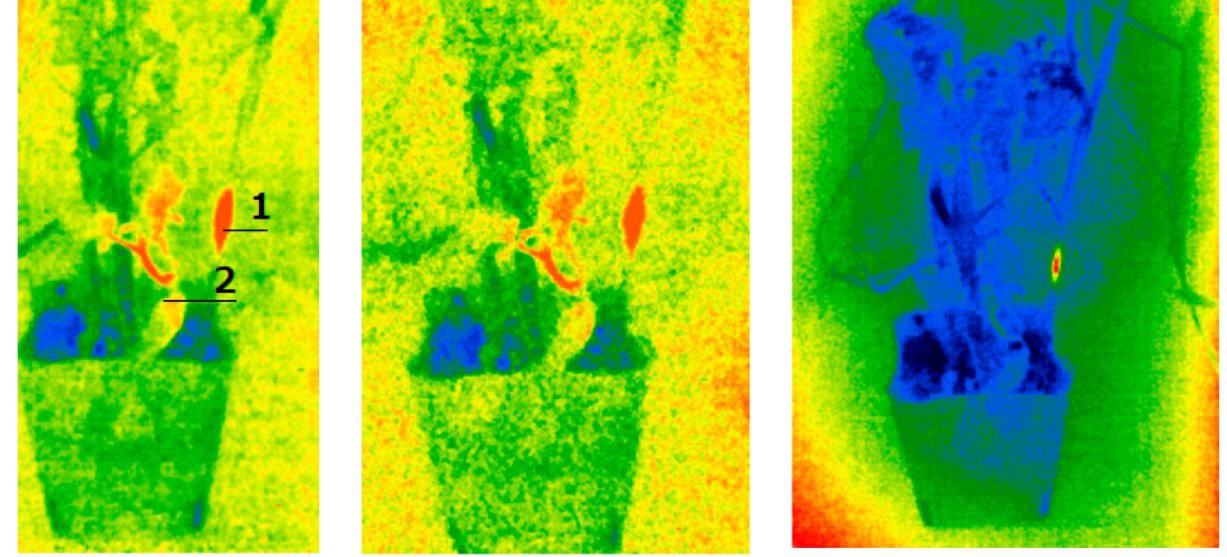

Laser for weed control

infrared image

Result of laser works

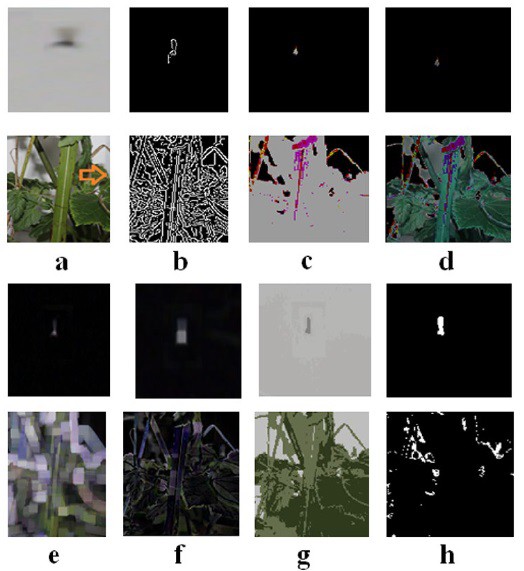

Image processing for mosquito detection

Image processing operations: a - received image with a mosquito; b - edge detection by cv2.Canny(img, 10, 500); c - image thresholding by cv2.threshold(img, 127, 155, cv2.THRESH_BINARY_INV); d - image thresholding by cv2.threshold(img, 127, 155, cv2.THRESH_TOZERO_INV); e - morphological transformation by cv2.morphologyEx(img, cv2.MORPH_BLACKHAT, kernel1); f - morphological transformation by cv2.morphologyEx(img, cv2.MORPH_GRADIENT, kernel2); g - segmentation by cv2.kmeans(pixel_values, k, None, criteria, 10, cv2.KMEANS_RANDOM_CENTERS); h - color detection by cv2.inRange(hsv, h_min, h_max), where, for the upper row, the range runs from 0, 0, 19 to 128, 35, 168, and for the lower row, from 23, 3, 128 to 65, 44, 180.

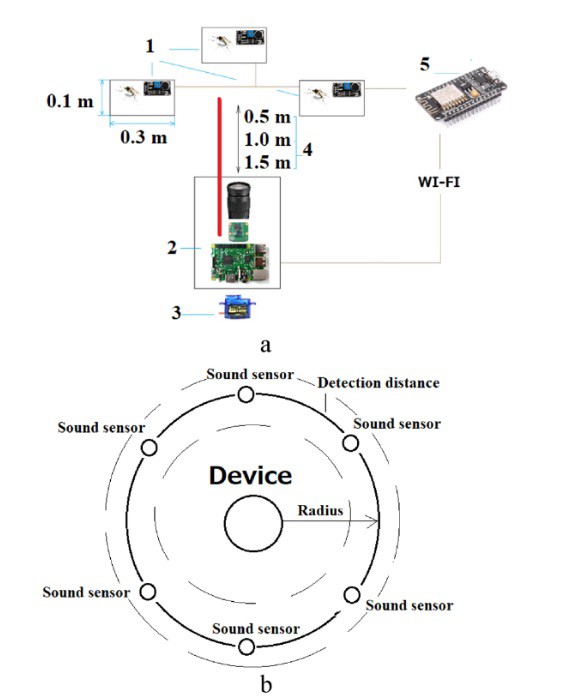

Audio sensors can also be used

a: 1 - three boxes with mosquitoes, 2 - laser machine with the telephoto lens, 3 - servomotor for changing the position on the x-axis, 4 - the distance between the camera and the boxes, changed from 0.5 to 1.5 m, and 5 - laser, 15 W, 450 nm wavelength; b: schematic representation of the control area, where the radius is the length of the control area

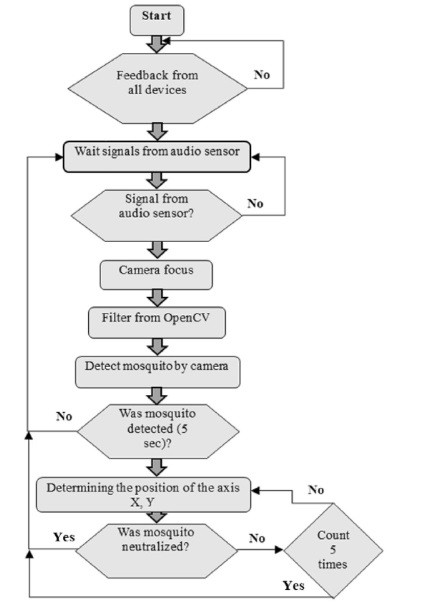

Algorithm with audio sensors

Publication and Citation

- Rakhmatulin, I. (2021). Machine vision for low-cost remote control of mosquitoes by power laser. Journal of Real-Time Image Processing

available [here](https://www.researchgate.net/publication/349226713_Machine_vision_for_low-cost_remote_control_of_mosquitoes_by_power_laser) https://doi.org/10.1007/s11554-021-01079-x

- Rakhmatulin I, Andreasen C. (2020). A Concept of a Compact and Inexpensive Device for Controlling Weeds with Laser Beams. Agronomy

available [here](https://www.mdpi.com/2073-4395/10/10/1616) https://doi.org/10.3390/agronomy10101616

- Rakhmatulin I, Kamilaris A, Andreasen C. Deep Neural Networks to Detect Weeds from Crops in Agricultural Environments in Real-Time: A Review. Remote Sensing. 2021; 13(21):4486. https://doi.org/10.3390/rs13214486

Ildar Rakhmatulin
