Navigation

The title tag system is explained here, and the table is updated when a change occurs. Notable logs have bold L# text.

Preface

[2022 - Sep 26]

Ideally, #SecSavr Suspense [gd0105] can actually be built, works as intended, and is used to manufacture a solution. Custom, likely high-density PCBs and fancy optics certainly aren't within my budget otherwise.

Even if that happens, it'll still be the most advanced project idea I've thought of.

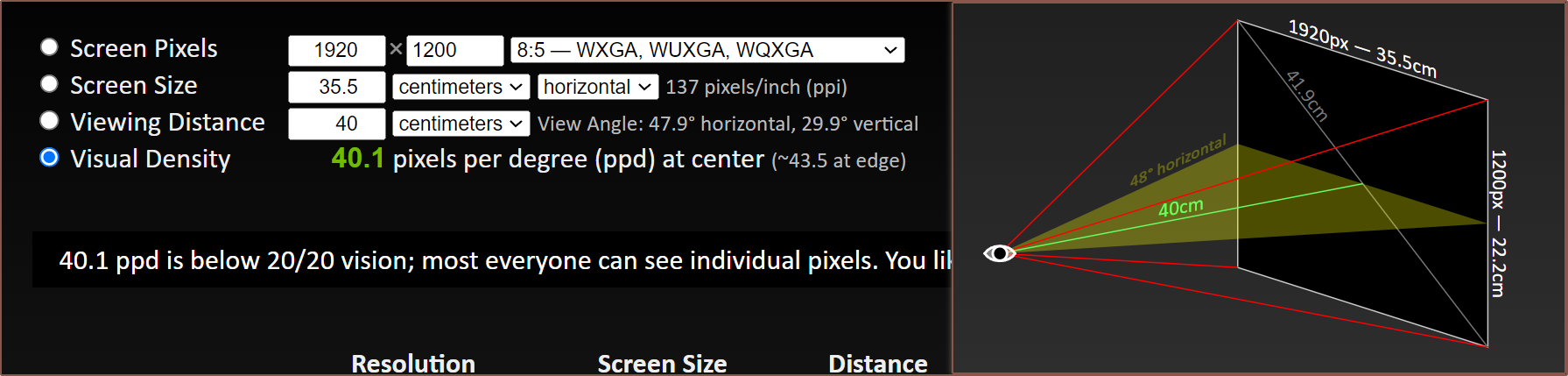

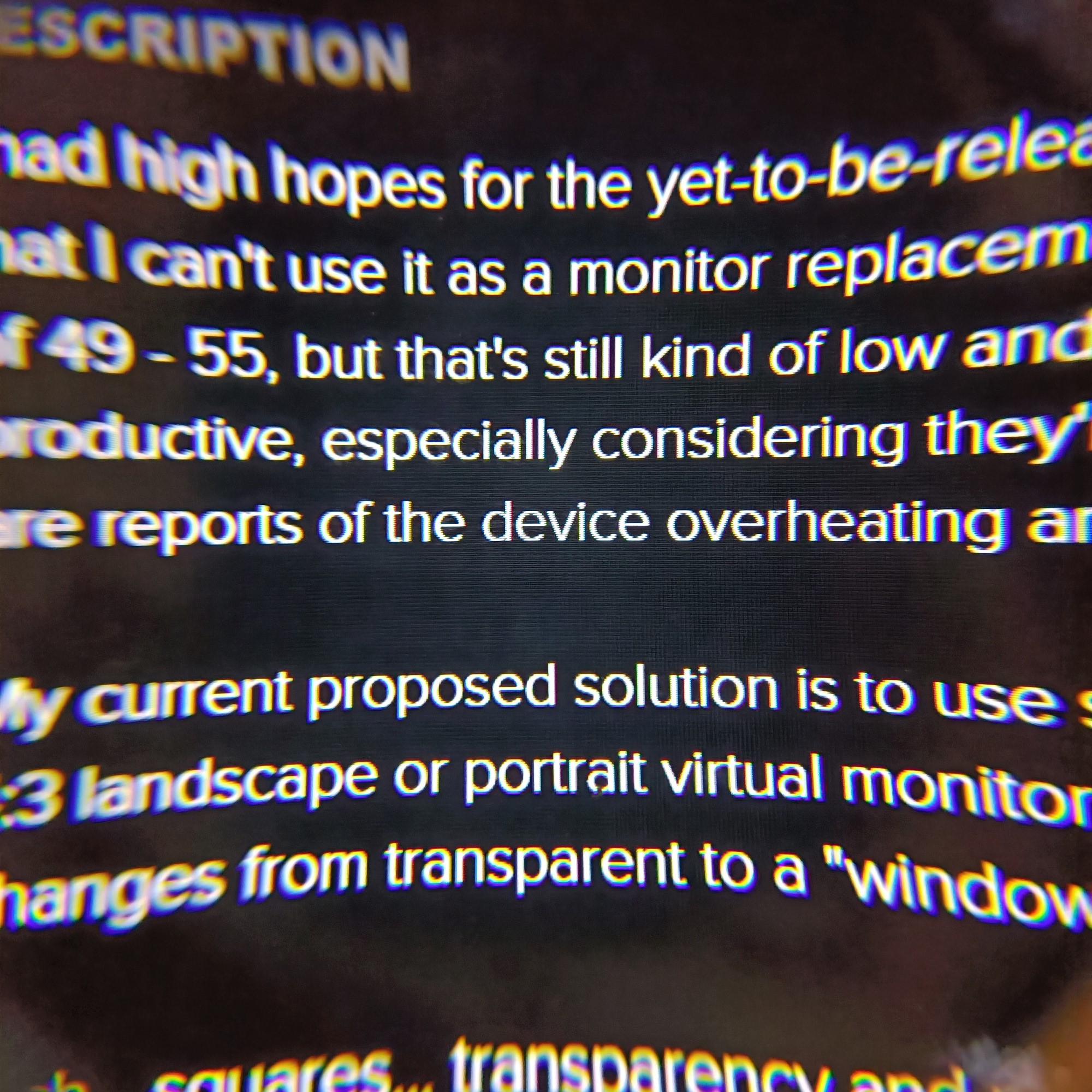

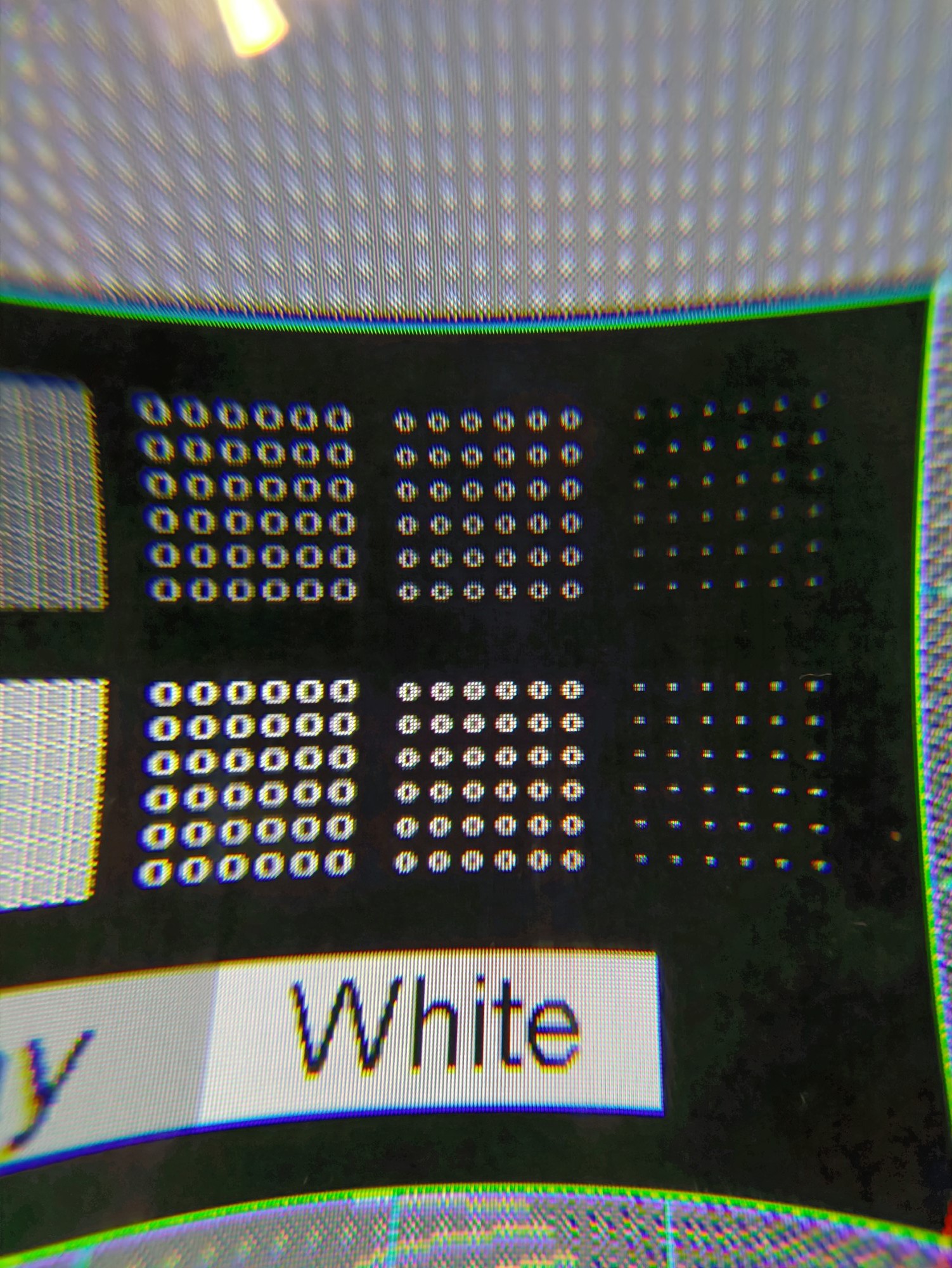

This project idea started yesterday, when I found out that a 1080p, 15.6" screen 60 cm from my eyes works out to 60 pixels per degree (ppd). I can't comfortably look at that resolution for more than a few seconds before wishing for a higher one. I also predict that 1440p (same screen and distance) exceeds my eyes' resolution, since I can't see any additional detail when using 150% scaling on my 4K screen for #Teti [gd0022] unless I move closer.
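As a sanity check on these figures, here is a minimal Python sketch of the pixels-per-degree arithmetic. It assumes a flat 16:9 screen viewed head-on and averages over the full width (on a flat screen, pixels near the edges subtend slightly less angle than those in the centre); the function name `pixels_per_degree` is just illustrative.

```python
import math

def pixels_per_degree(diagonal_in, res_w, res_h, distance_cm):
    """Average horizontal pixels per degree for a flat screen viewed head-on."""
    aspect = res_w / res_h
    # Physical screen width from the diagonal (inches -> cm) and aspect ratio
    width_cm = diagonal_in * 2.54 * aspect / math.hypot(aspect, 1)
    # Horizontal angle the whole screen covers at this viewing distance
    fov_deg = 2 * math.degrees(math.atan((width_cm / 2) / distance_cm))
    # Spread the horizontal pixels evenly over that angle
    return res_w / fov_deg

ppd_1080 = pixels_per_degree(15.6, 1920, 1080, 60)
ppd_1440 = pixels_per_degree(15.6, 2560, 1440, 60)
print(f'1080p, 15.6" at 60 cm: {ppd_1080:.1f} ppd')
print(f'1440p, 15.6" at 60 cm: {ppd_1440:.1f} ppd')
```

With these inputs it gives roughly 60 ppd for 1080p and roughly 80 ppd for 1440p, which is where the 60 to 80 ppd range comes from.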

Thus, my limit is somewhere between 60 and 80 ppd (1440p on the same screen works out to about 80 ppd). I was hoping that the upcoming Pimax 12K would be the headset that lets me work in virtual labs and offices, but it would need 24K displays for that! By my calculation, 35 ppd is equivalent to 720p HD on a 17.3" laptop screen 60 cm away.
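The 24K and 720p figures can be reproduced with the same arithmetic. This is a rough sketch under two assumptions: the Pimax 12K delivers about 35 ppd (as the paragraph above implies), and its "12K" rating scales linearly with horizontal pixel count at an unchanged field of view. The helper `required_k` is a hypothetical name for illustration.

```python
import math

def pixels_per_degree(diagonal_in, res_w, res_h, distance_cm):
    """Average horizontal pixels per degree for a flat screen viewed head-on."""
    aspect = res_w / res_h
    # Physical screen width from the diagonal (inches -> cm) and aspect ratio
    width_cm = diagonal_in * 2.54 * aspect / math.hypot(aspect, 1)
    # Horizontal angle the whole screen covers at this viewing distance
    fov_deg = 2 * math.degrees(math.atan((width_cm / 2) / distance_cm))
    return res_w / fov_deg

def required_k(current_k, current_ppd, target_ppd):
    """Horizontal 'K' rating needed to reach target_ppd at an unchanged field of view."""
    # With the optics (and so the FOV) fixed, ppd scales linearly with pixel count
    return current_k * target_ppd / current_ppd

laptop_ppd = pixels_per_degree(17.3, 1280, 720, 60)
print(f'720p, 17.3" at 60 cm: {laptop_ppd:.1f} ppd')

for target in (60, 70, 80):
    print(f"{target} ppd would need about {required_k(12, 35, target):.1f}K")
```

Under those assumptions the 720p laptop comparison lands at about 36 ppd, close to the 35 ppd figure, and a midpoint target of 70 ppd needs exactly double the current rating, i.e. 24K.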

Among devices priced at or below the Pimax 12K, the closest options are the newest AR glasses, such as the Nreal Air, VITURE One, Dream Glass Flow and TQSKY T1, the best of which reaches 55 ppd. (Huh. That's a lot of new AR glasses that actually look good this year.) These all use dual 1080p screens, so hopefully I'd just have to wait until a manufacturer makes a 1440p screen so that one of these startups...
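Whether a 1440p panel would be enough can be checked the same way. A minimal sketch, assuming the glasses keep their current optics and field of view so that ppd scales with horizontal pixel count; `scaled_ppd` is a hypothetical helper name:

```python
def scaled_ppd(current_ppd, current_width_px, new_width_px):
    # Same optics and field of view: ppd grows in proportion to horizontal pixels
    return current_ppd * new_width_px / current_width_px

# Best current AR glasses: about 55 ppd from a 1920-pixel-wide panel per eye
ppd_1440 = scaled_ppd(55, 1920, 2560)
print(f"Dual 1440p at the same FOV: about {ppd_1440:.0f} ppd")
```

That comes to about 73 ppd, inside the 60 to 80 ppd range estimated earlier.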

kelvinA


I think I've found

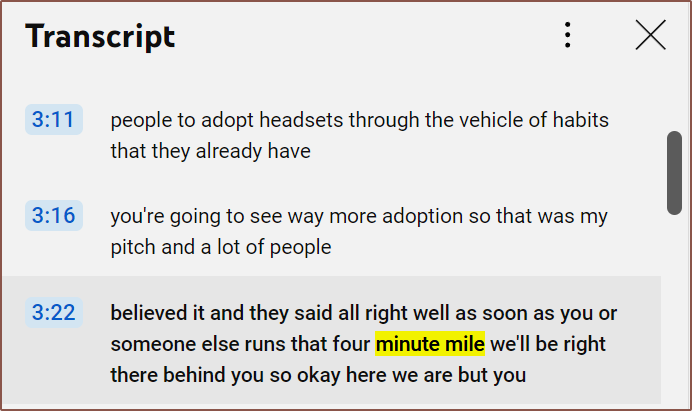

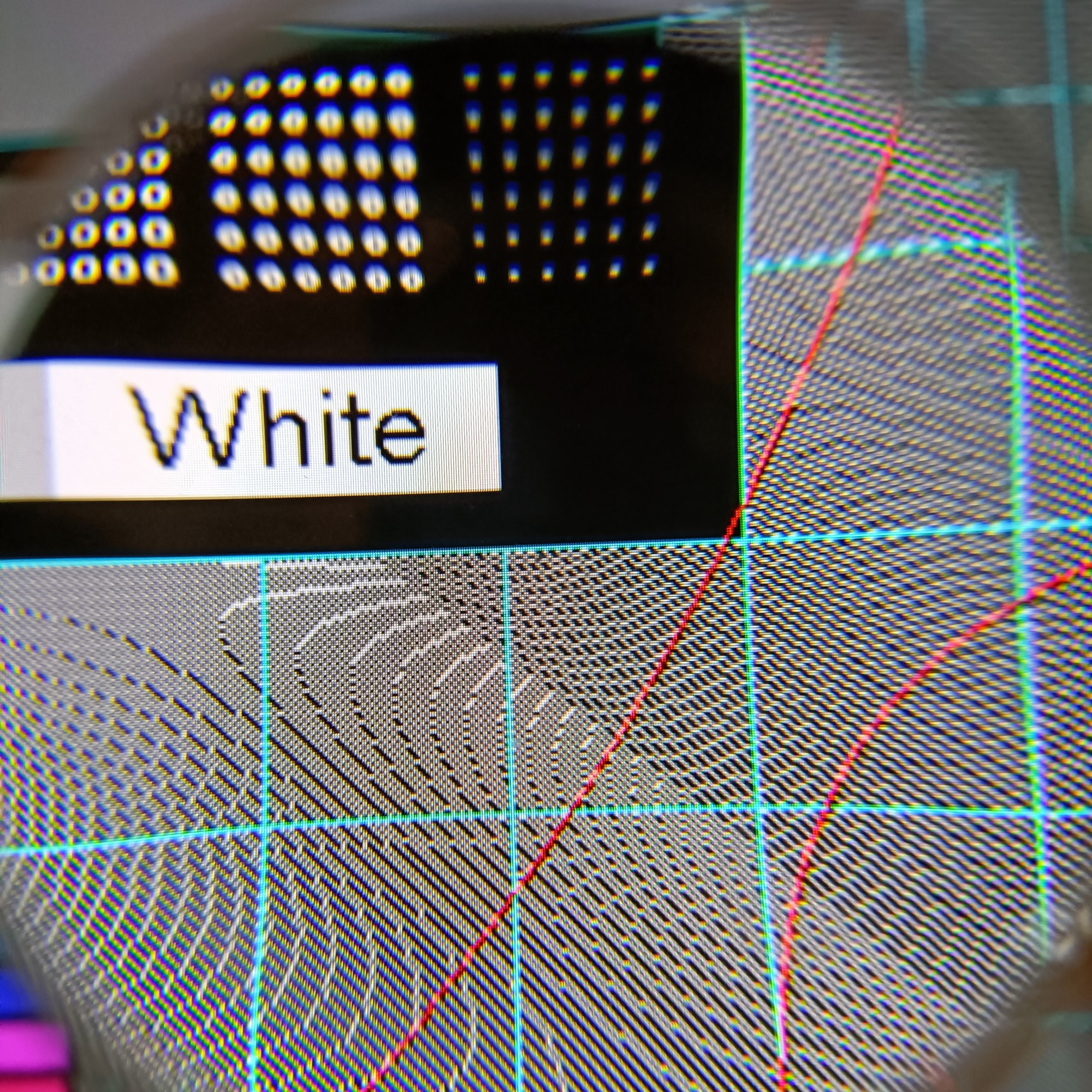

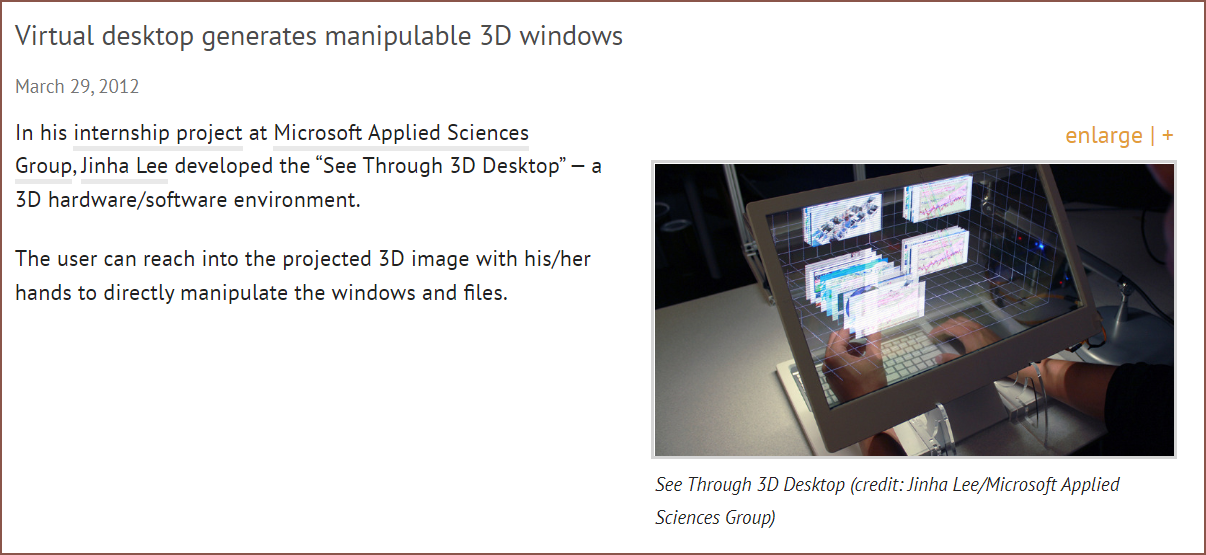

Now I'm watching the video below, and have already learned something eye-opening: everything in Windows is actually made of windows, including buttons and status bars.
