So, I have two main, not-so-straightforward things to solve: configuring the TFT display and setting up the voice recognition.

To get this display to work, a hardware (device tree) overlay needs to be added, which sets up a framebuffer on it (with quite a bad frame rate, but that's not that important here). After that, to use the display for Xorg (the GUI), an additional piece of software called fbcp is needed, which basically copies the image from the Xorg session to the framebuffer that is shown on the screen. The simplest way to get all of this is to just use the SD card image provided by Waveshare. The downside is that its OS version is not very recent.
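
To make the framebuffer-copying idea more concrete, here is a rough Python sketch of a single copy step: read one frame from a source framebuffer and scale it (nearest neighbour) into the TFT's framebuffer. It is only a conceptual illustration, not how fbcp itself works (fbcp captures the display through the Raspberry Pi's VideoCore dispmanx API rather than reading `/dev/fb0`). The device paths, the 16 bpp RGB565 pixel format without row padding, and the resolutions used in `copy_once` are assumptions, and both function names are hypothetical.

```python
def scale_rgb565(src: bytes, src_w: int, src_h: int, dst_w: int, dst_h: int) -> bytes:
    """Nearest-neighbour rescale of a packed RGB565 frame (2 bytes per pixel)."""
    if len(src) != src_w * src_h * 2:
        raise ValueError("source buffer size does not match its dimensions")
    out = bytearray(dst_w * dst_h * 2)
    for y in range(dst_h):
        sy = y * src_h // dst_h  # source row that maps onto this destination row
        row_base = sy * src_w
        for x in range(dst_w):
            sx = x * src_w // dst_w  # source column for this destination column
            s = (row_base + sx) * 2
            d = (y * dst_w + x) * 2
            out[d:d + 2] = src[s:s + 2]
    return bytes(out)


def copy_once(src_dev="/dev/fb0", dst_dev="/dev/fb1",
              src_size=(640, 480), dst_size=(480, 320)):
    """Copy one frame between framebuffers (assumed paths, sizes and RGB565 format)."""
    sw, sh = src_size
    dw, dh = dst_size
    with open(src_dev, "rb") as f:
        frame = f.read(sw * sh * 2)
    # "r+b" writes into the device without trying to truncate it
    with open(dst_dev, "r+b") as f:
        f.write(scale_rgb565(frame, sw, sh, dw, dh))
```

A per-pixel Python loop like this is far too slow for continuous copying; it only shows the data flow from one framebuffer to the other.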

For the voice recognition, I initially wanted to use something as simple as possible: there's no need to actually recognize the speech, just to compare the sound with one stored locally (remember voice dialing on old Nokia phones?). Unfortunately, I couldn't find anything like that (edit: there was Snowboy hotword detection a few years ago, and I later found a few other hotword detection libraries). Building it from scratch probably wouldn't take too long, but it would take more time than I wanted to spend on this. So I decided to go with voice2json (https://voice2json.org/), which can use a few different backends (both online and local) and handles a lot of the boring stuff. Unfortunately, after installation it complained that the libc was too old... So I tried to upgrade the OS, which, after a lot of time spent on it, broke the Xorg configuration for the display.
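
Comparing a new recording against a stored one is template matching, and one classic way to compare two utterances spoken at different speeds is dynamic time warping (DTW). A minimal sketch, assuming each recording has already been turned into a sequence of feature vectors (for example per-frame spectral features; that extraction step is not shown). `dtw_distance` and `best_match` are hypothetical helpers, not part of any library mentioned here.

```python
import math
from typing import Dict, Sequence

Features = Sequence[Sequence[float]]  # one feature vector per audio frame


def dtw_distance(a: Features, b: Features) -> float:
    """Classic dynamic time warping distance between two feature sequences."""
    n, m = len(a), len(b)
    # cost[i][j] = cheapest cumulative cost of aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])  # Euclidean distance between frames
            # extend the best of: skip a frame in a, skip a frame in b, or match both
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def best_match(sample: Features, templates: Dict[str, Features]) -> str:
    """Return the name of the stored template closest to the sample."""
    return min(templates, key=lambda name: dtw_distance(sample, templates[name]))
```

In practice you would also reject a sample whose best distance exceeds some threshold, otherwise every sound gets mapped to the nearest stored command.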

The second attempt was to start with a fresh OS image and then configure the display. Basic framebuffer support was easy to set up (basically: clone https://github.com/swkim01/waveshare-dtoverlays, copy the relevant files, enable the overlay, and it worked), but fbcp kept crashing with a segmentation fault.
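
On Raspberry Pi OS, an overlay copied into `/boot/overlays` is enabled with a `dtoverlay=` line in `/boot/config.txt`. A small sketch that adds that line only if it isn't already there; the overlay name is whatever the copied `.dtbo` file is called, the config path may differ between OS releases, and `add_dtoverlay`/`enable_overlay` are hypothetical helpers.

```python
from pathlib import Path


def add_dtoverlay(config_text: str, overlay: str) -> str:
    """Return config.txt contents with a dtoverlay line, adding it only if missing."""
    line = f"dtoverlay={overlay}"
    existing = [ln.strip() for ln in config_text.splitlines()]
    if line in existing:
        return config_text  # already enabled, leave the file unchanged
    if config_text and not config_text.endswith("\n"):
        config_text += "\n"
    return config_text + line + "\n"


def enable_overlay(overlay: str, config_path: str = "/boot/config.txt") -> None:
    """Rewrite the (assumed) config.txt path in place; needs root."""
    path = Path(config_path)
    path.write_text(add_dtoverlay(path.read_text(), overlay))
```

For example, `enable_overlay("waveshare35a")` (a hypothetical overlay name) run as root, followed by a reboot.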

After some time spent thinking about dropping the whole project, I decided to go back to the Waveshare image and try something else for voice recognition.

While still on the latest OS image, I found the SpeechRecognition Python module (https://pypi.org/project/SpeechRecognition/) and tested it. It (partially) worked: the sound coming from the microphone was quite noisy, but after disabling the microphone's automatic gain control in alsamixer and yelling a bit louder at the microphone, it managed to recognize a phrase. Unfortunately, offline recognition turned out to be really slow. An online backend, however, gave a relatively fast response, so I decided to drop the offline-only requirement.
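
Since the deciding factor was response time, a small helper that runs the same recording through several backends and times each one makes that comparison explicit. A sketch with a hypothetical `time_backends` helper: with the SpeechRecognition module, the backends could be bound methods of a `Recognizer` such as `recognize_google` (online) and `recognize_sphinx` (offline, requires PocketSphinx), with the audio coming from `Recognizer.listen()`. Here they are plain callables, so the sketch runs without the module.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


def time_backends(
    audio: Any, backends: Dict[str, Callable[[Any], str]]
) -> Dict[str, Tuple[Optional[str], float]]:
    """Run one recording through each backend; return {name: (text or None, seconds)}."""
    results = {}
    for name, recognize in backends.items():
        start = time.perf_counter()
        try:
            text = recognize(audio)
        except Exception:
            # A failed or unintelligible recognition is recorded as None
            # (SpeechRecognition raises e.g. UnknownValueError or RequestError).
            text = None
        results[name] = (text, time.perf_counter() - start)
    return results
```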

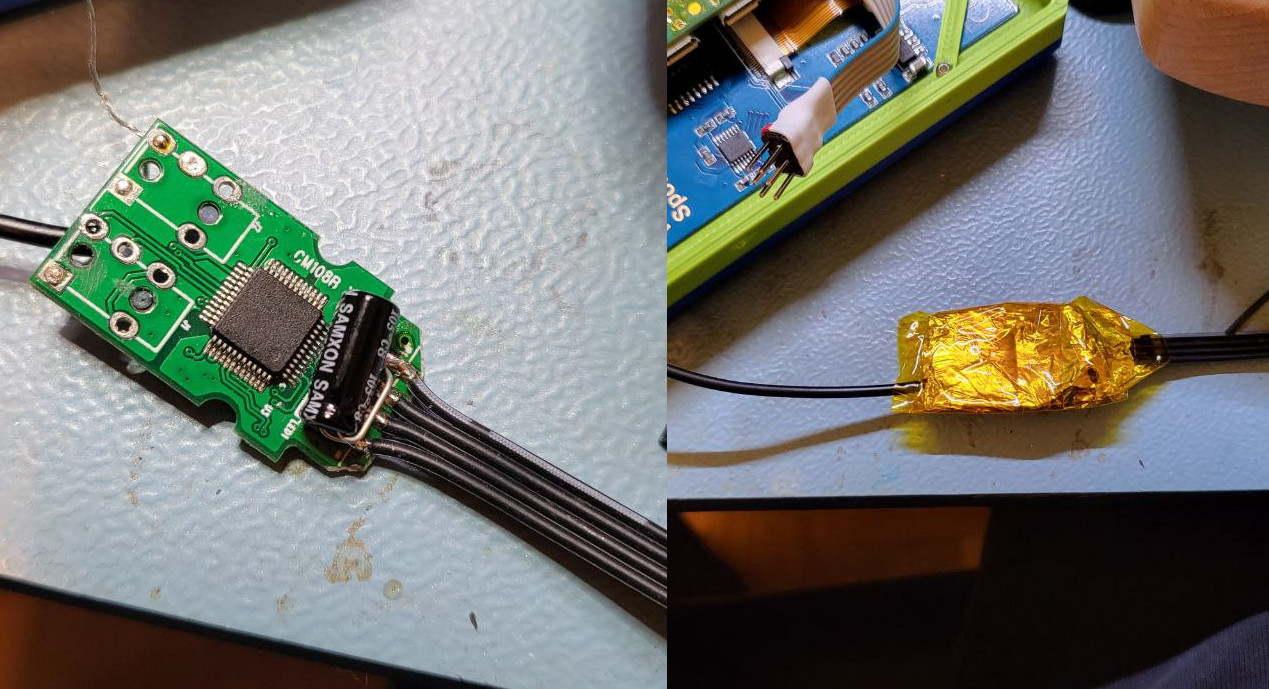

The other problem was the noisy microphone signal, probably caused by the power supply and other noise sources. Adding a 100 µF capacitor to the USB sound card board, removing its connectors to make it smaller, and shielding it by wrapping it in grounded aluminum tape turned out to be a good solution, and the noise level dropped significantly.
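
To put a number on a noise drop like this, one option is to record a few seconds of silence before and after the modification and compare the RMS level. A sketch, assuming raw mono 16-bit little-endian PCM such as `arecord -f S16_LE -c 1 -t raw` produces; `rms_dbfs` and `rms_dbfs_file` are hypothetical helpers.

```python
import math
import struct

FULL_SCALE = 32768  # magnitude of the most negative 16-bit sample


def rms_dbfs(pcm: bytes) -> float:
    """RMS level of mono S16_LE PCM in dBFS (0 dBFS = full scale, silence = -inf)."""
    n = len(pcm) // 2  # a trailing odd byte, if any, is ignored
    if n == 0:
        return -math.inf
    samples = struct.unpack(f"<{n}h", pcm[: n * 2])  # little-endian signed 16-bit
    mean_square = sum(s * s for s in samples) / n
    if mean_square == 0:
        return -math.inf
    return 20 * math.log10(math.sqrt(mean_square) / FULL_SCALE)


def rms_dbfs_file(path: str) -> float:
    """Convenience wrapper for a raw recording saved by arecord."""
    with open(path, "rb") as f:
        return rms_dbfs(f.read())
```

A lower (more negative) value means a quieter noise floor; recording both takes with the same mixer settings keeps the comparison fair.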

After I switched back to the OS image provided by Waveshare, I realized that SpeechRecognition required a newer Python. That was easily solved (with some 30-60 minutes of compile time) by building Python 3.9 from source (here's one of the guides: https://tecadmin.net/how-to-install-python-3-9-on-debian-9/).

I also managed to combine arecord with a custom Python script to display the microphone waveform on the I2C screen (the code is available in the repository).
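
The arecord-plus-script approach can be sketched like this: arecord writes raw samples to stdout, and the script reads fixed-size frames from stdin, reduces each frame to one peak value per screen column, and hands those to the display. This is not the code from the repository; the 128x64 resolution, the frame size, and the `waveform_columns`/`main` names are assumptions, and since the display driver isn't specified here, the sketch prints a text bar chart where the drawing call would go.

```python
import struct
import sys
from typing import List, Sequence

WIDTH, HEIGHT = 128, 64  # assumed resolution of the I2C display
FRAME_SAMPLES = 1024     # samples per screen refresh (assumed)


def waveform_columns(samples: Sequence[int], width: int = WIDTH, height: int = HEIGHT) -> List[int]:
    """Split samples into `width` chunks; return each chunk's peak as a bar height in pixels."""
    if not samples:
        return [0] * width
    chunk = max(1, len(samples) // width)
    cols = []
    for x in range(width):
        part = samples[x * chunk:(x + 1) * chunk]
        peak = max((abs(s) for s in part), default=0)
        cols.append(min(height, peak * height // 32768))  # scale 16-bit peak to pixels
    return cols


def main() -> None:
    """Read S16_LE mono frames from stdin until the stream ends."""
    while True:
        raw = sys.stdin.buffer.read(FRAME_SAMPLES * 2)
        if len(raw) < FRAME_SAMPLES * 2:
            break
        samples = struct.unpack(f"<{FRAME_SAMPLES}h", raw)
        cols = waveform_columns(samples)
        # Placeholder for the (unspecified) display driver: draw one bar per column.
        print("".join(" .:-=+*#%@"[c * 9 // HEIGHT] for c in cols))
```

Run it with something like `arecord -f S16_LE -r 16000 -c 1 -t raw | python3 waveform.py`, where `waveform.py` (a hypothetical file name) holds the code above followed by a `main()` call.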

That was enough software for now.

Igor Brkic
