Each of TJ's motors is paired with a positional encoder. By reading these encoders, we can sense the position of TJ's arms, head, eyes and ears.

In this demo, we'll start by activating the motor that controls TJ's eye blinking and ear wiggling loop. Then, we will read the state of each of the two GPIO pins connected to the positional encoder associated with that motor. Finally, we'll evaluate the readings to find the position of TJ's eyes and ears.

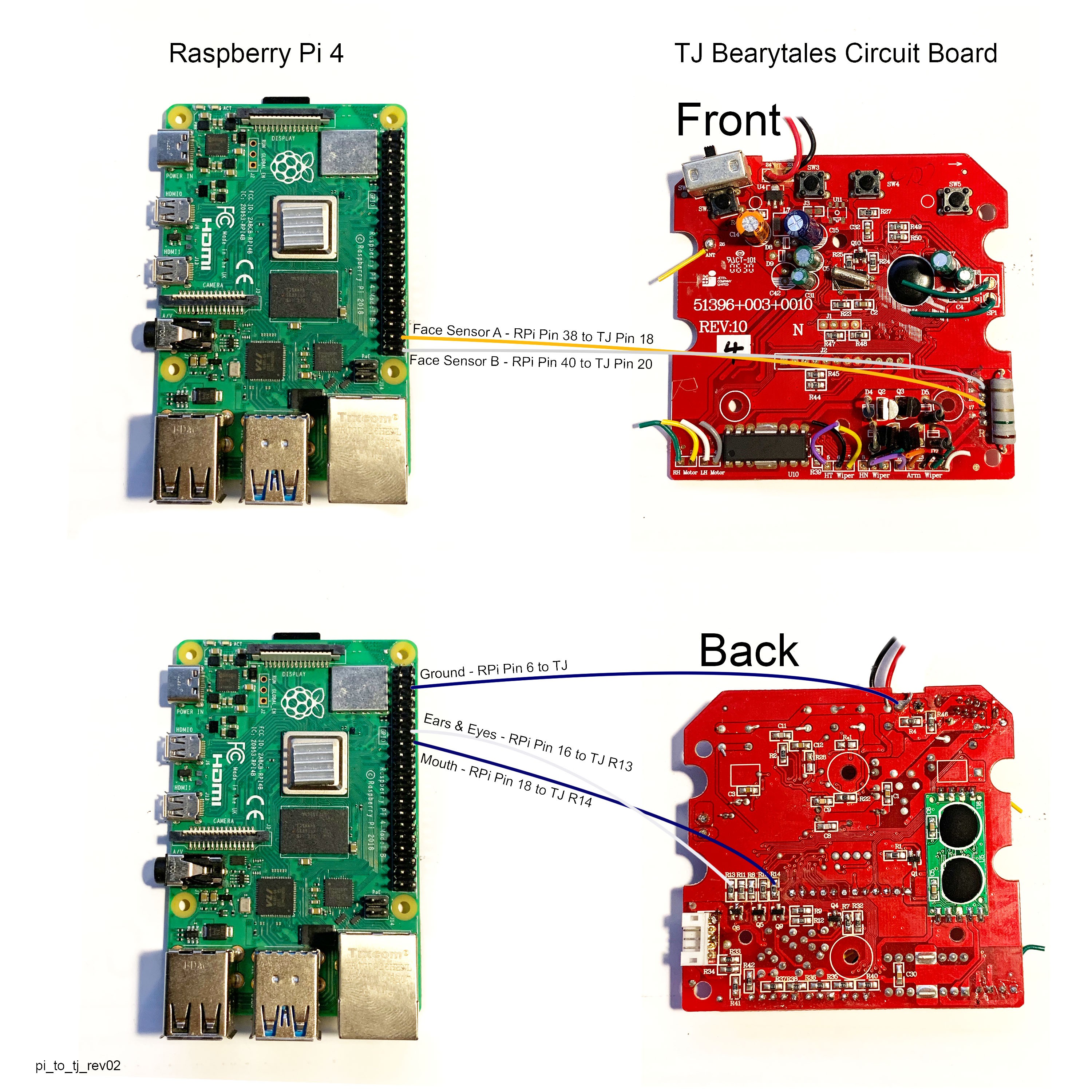

First, though, we had to solder two wires to TJ's positional encoder on the front of the board. We then attached the wires to GPIO pins on the Raspberry Pi (physical pins 38 and 40 in the code below).

We wrote the following code to read the value of the positional encoder associated with TJ's eye blinking and ear wiggling loop.

```python
# Script to test one of the positional encoders on TJ Bearytales
# Copyright 2023 Steph & Jack Nelson
# SPDX-License-Identifier: MIT
# https://hackaday.io/project/187602-tj-bearytales-rewritten

# Import libraries
import time
import RPi.GPIO as GPIO

# Set the GPIO numbering mode to BOARD
GPIO.setmode(GPIO.BOARD)

# MotorC:
earsEyes = 16
mouth = 18

# Set up the GPIO pins for the sensors
# Face Sensors
faceSensorA = 38
faceSensorB = 40

# Set up all pins we are using to control motors as outputs and set them low
for motorPin in [earsEyes, mouth]:
    GPIO.setup(motorPin, GPIO.OUT)
    GPIO.output(motorPin, GPIO.LOW)

# Set up all pins we are using to read sensors as inputs with pull-ups enabled
for sensorPin in [faceSensorA, faceSensorB]:
    GPIO.setup(sensorPin, GPIO.IN, pull_up_down=GPIO.PUD_UP)

try:
    # Start the eye blinking and ear wiggling loop
    GPIO.output(earsEyes, GPIO.HIGH)
    while True:
        # Read the state of the GPIO pins
        pinStateA = GPIO.input(faceSensorA)
        pinStateB = GPIO.input(faceSensorB)

        # Map the two readings to a position
        if pinStateA and not pinStateB:
            status = 'Eyes Opening'
        elif pinStateA and pinStateB:
            status = 'Eyes Open, Ears Wiggling'
        elif not pinStateA and pinStateB:
            status = 'Eyes Closing'
        else:
            status = 'Eyes Shut, Ears Still'

        print(f'SensorA: {bool(pinStateA)} \tsensorB: {bool(pinStateB)} \tPosition:{status} ', end='\r', flush=True)
        time.sleep(.01)
except KeyboardInterrupt:
    # Ctrl+C ends the test
    pass
finally:
    # Ensure all motors are off
    for motorPin in [earsEyes, mouth]:
        GPIO.output(motorPin, GPIO.LOW)
    # Clean up GPIO resources
    GPIO.cleanup()
    print('Cleanup Done.\n\n~~Thanks for playing!~~')
```
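The chain of `if` statements in the script can also be written as a lookup table keyed on the two pin readings. The sketch below is a minimal, hardware-free illustration of that idea. `POSITIONS` and `decode_position` are names introduced here for illustration and are not part of our script; the labels are copied from it.

```python
# Hypothetical helper, not part of the original script: maps the two
# encoder readings (A, B) to the position labels used in the test.
POSITIONS = {
    (True, False): 'Eyes Opening',
    (True, True): 'Eyes Open, Ears Wiggling',
    (False, True): 'Eyes Closing',
    (False, False): 'Eyes Shut, Ears Still',
}


def decode_position(pin_state_a, pin_state_b):
    """Return the position label for a pair of encoder pin readings."""
    # GPIO.input() returns 0 or 1, so normalise to bool before the lookup
    return POSITIONS[(bool(pin_state_a), bool(pin_state_b))]


if __name__ == '__main__':
    # Print the label for every combination of the two pins
    for a, b in [(1, 0), (1, 1), (0, 1), (0, 0)]:
        print(a, b, decode_position(a, b))
```

Keeping the mapping in one dictionary makes it easier to reuse the same loop for another sensor pair by swapping in a different table.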

In this video you can see the test running, and in slow motion you can see the different states of TJ's eyes and ears, and the value reported by the positional encoder.

The test shows that the eyes-open state corresponds to the 'true true' encoder state, and the eyes-closed state corresponds to the 'false false' encoder state. These pins have not been debounced, so the readings are somewhat noisy. You can run the same test on each of the three sensor pairs to work out which positions correspond to which encoder states.
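One way to tame the noise is to debounce in software: only accept a new encoder state once it has been read several times in a row. The sketch below is one possible approach, not something we ran on TJ. The `Debouncer` class and its `threshold` of 3 samples are assumptions made for illustration, and the right threshold would need tuning against the real hardware.

```python
class Debouncer:
    """Hypothetical helper: accept a new state only after it has been
    seen `threshold` times in a row."""

    def __init__(self, initial, threshold=3):
        self.stable = initial      # last accepted (debounced) state
        self.candidate = initial   # most recent raw state under consideration
        self.count = 0             # consecutive samples matching the candidate
        self.threshold = threshold

    def update(self, raw):
        """Feed one raw sample and return the current debounced state."""
        if raw == self.stable:
            # Reading agrees with the accepted state: drop any pending change
            self.candidate = raw
            self.count = 0
            return self.stable
        if raw == self.candidate:
            self.count += 1
        else:
            # A different new state: start counting from this sample
            self.candidate = raw
            self.count = 1
        if self.count >= self.threshold:
            self.stable = raw
            self.count = 0
        return self.stable


if __name__ == '__main__':
    # Simulated (A, B) readings containing a one-sample glitch
    debouncer = Debouncer(initial=(False, False), threshold=3)
    samples = [(True, False), (False, False),
               (True, False), (True, False), (True, False)]
    for sample in samples:
        print(sample, '->', debouncer.update(sample))
```

In the test loop, this would mean passing `(bool(pinStateA), bool(pinStateB))` to `update()` on every iteration and decoding the returned pair instead of the raw one. With the 10 ms sleep in the loop, a threshold of 3 requires a state to hold for roughly 30 ms before it is reported.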

Mx. Jack Nelson
