Update: Random thoughts on potential explanations to be explored (at the bottom).

-----------------

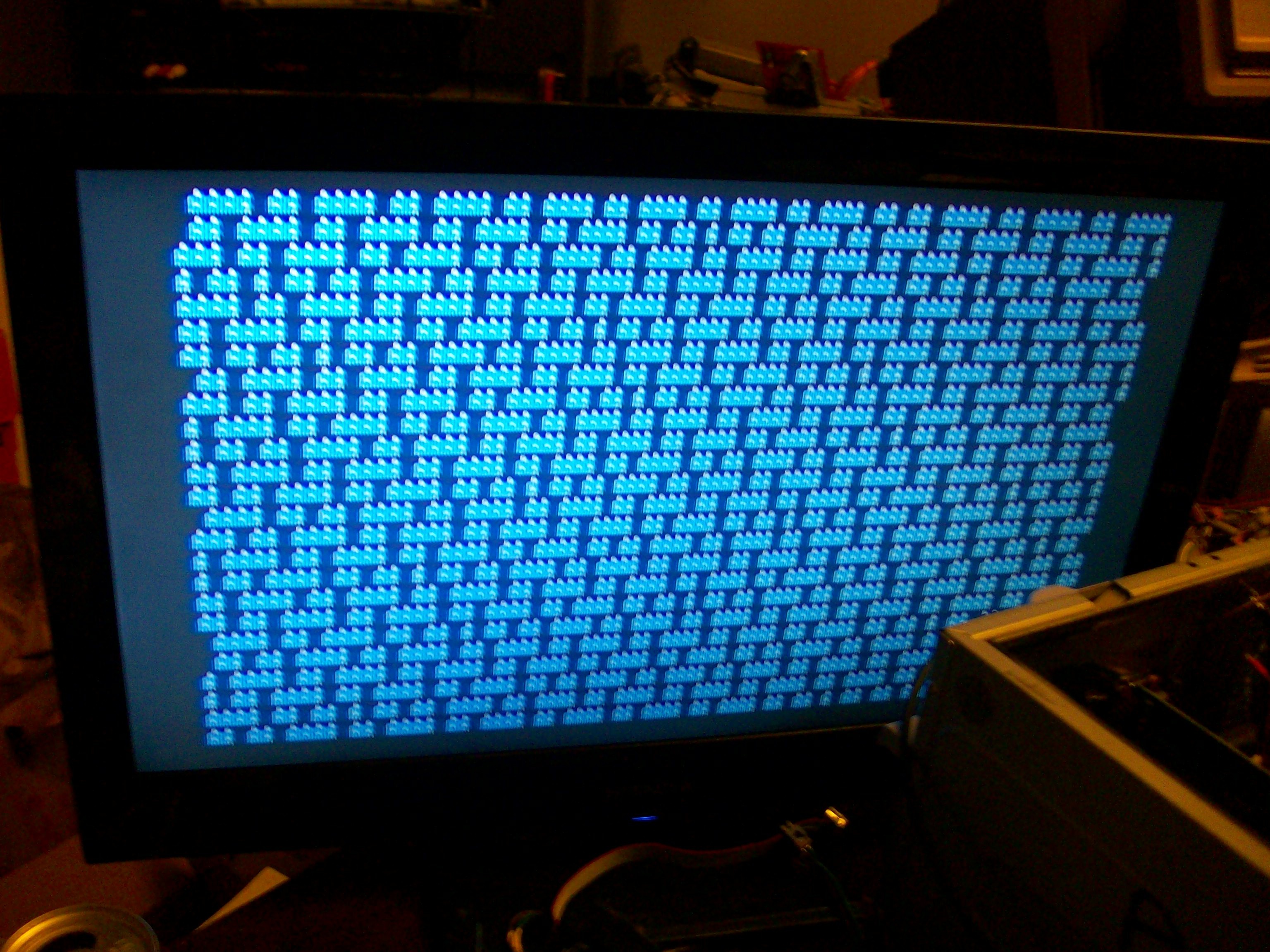

WE HAVE CGA! -ish...

So, technically, it's supposed to be all A's... but what canya do...? Looks kinda cool, a bit like a video game town. Or a wall of bricks, maybe Legos... maybe I'll try red on gray.

There seems to be a somewhat regular pattern, maybe having to do with my bus-timing not being 100%. (almost speaks to me of 'beating' in the physics sense, which would make more sense if my AVR clock and the Bus clock weren't synchronized). Though, the DRAM verifies are still giving only one error in 640KB... So, maybe the ISA CGA card has slightly different bus-timing requirements.

Overall, this is pretty exciting... My old-school ATmega8515 8-bit AVR sitting in an 8088 CPU socket, driving an ISA CGA card...

----------

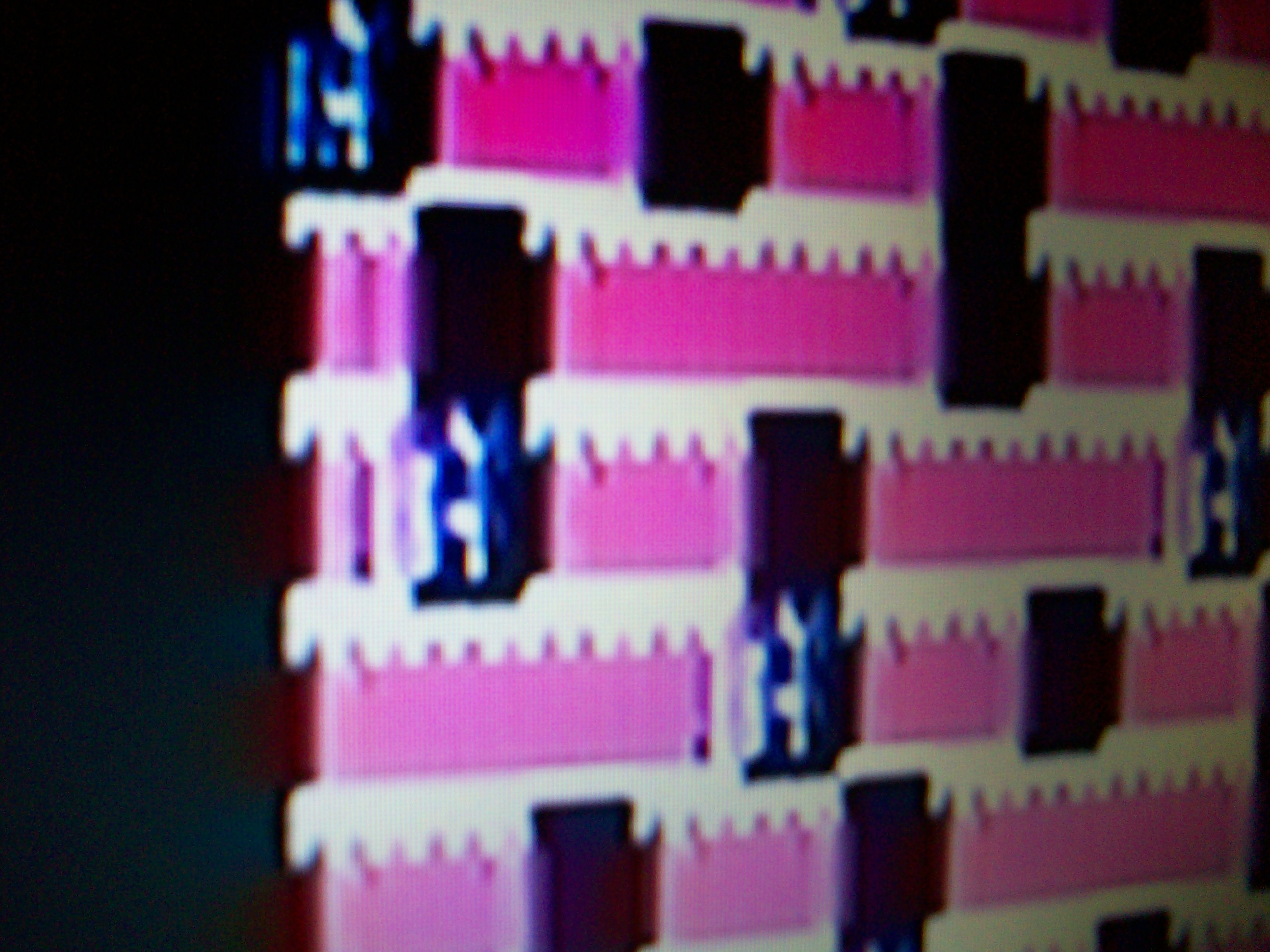

Haha! All I changed was the background/foreground color attributes...

This looks real cool.

Herein we've got numerous factors at play...

My CGA card is known for being a bit flaky... (see previous logs) Combine that with an LCD, rather than a CRT, and there are some other interesting effects...

Now we've added a flaky bus-interface...

But how can this pattern possibly be explained? What I programmed, again, is a full screen of nothing but the letter A in red on a background of white.
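For reference, here's a minimal sketch of what "a full screen of A in red on white" means in terms of bytes. It assumes the 80x25 text mode and a plain byte buffer standing in for the card's memory at 0xB8000; on the AVR each store would really be a bus write cycle. The names `cga_attr` and `cga_fill` are made up for illustration and aren't from my actual code.

```c
#include <stdint.h>
#include <stddef.h>

#define CGA_COLS 80   /* assumption: 80x25 text mode */
#define CGA_ROWS 25
#define CGA_CELLS ((size_t)CGA_COLS * CGA_ROWS)

/* Pack a text-mode attribute byte: bits 0-3 are the foreground color,
   bits 4-6 the background. Bit 7 (blink or bright background, depending
   on the mode register) is left at 0 here. */
static uint8_t cga_attr(uint8_t fg, uint8_t bg)
{
    return (uint8_t)(((bg & 0x07) << 4) | (fg & 0x0F));
}

/* Fill every character cell: character code at the even address,
   attribute at the odd address right after it.
   vram is a stand-in for the card's memory (hypothetical helper). */
static void cga_fill(uint8_t *vram, char ch, uint8_t attr)
{
    for (size_t cell = 0; cell < CGA_CELLS; cell++) {
        vram[2 * cell]     = (uint8_t)ch;
        vram[2 * cell + 1] = attr;
    }
}
```

With red = 4 and white (light gray) = 7, `cga_attr(4, 7)` gives 0x74, so the whole screen should just be the byte pair 0x41, 0x74 repeated 2000 times.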

Perty durn cool, though.

--------

Hah, fewer factors at-play than I thought...

Though, the LCD definitely seems to be syncing differently on different colors, whereas the CRT has pretty sharp vertical edges.

---------------------------------------

UPDATE: Thoughts On Why:

Frankly, I'm a bit excited by the weirdness of this. Realistically, it's coming up with much more interesting visuals than I would. (See the next log)

I copied most of my CGA-initialization from the PC/XT BIOS Assembly-Listing (converting it to C). (I'll throw that file up in the files section.) But there are definitely some x86 instructions I don't understand, and parsing it was a bit of a mind-bender for me; for some of it I just threw up my arms and made assumptions about what the goal was. So it's plausible I missed some initialization-stuff. Also, I skipped over some parts, like the bit that clears the memory. (What a weird idea that there's a machine-instruction that can load a value to 16384 memory locations!)

Aside from that, it seems parts of the initialization are interspersed in numerous locations across dozens of pages of otherwise unrelated assembly. So, it's even more likely I've missed something. Also, I didn't have the patience to stare at even more assembly to implement the remaining INT10 (video-I/O) functions... After I finally got initialization coded-up, I just started writing data to the VRAM.
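The memory-clearing instruction in question is presumably `REP STOSW`, which stores AX at ES:DI, bumps DI by two, and repeats CX times. A rough C equivalent might look like the sketch below. The function name and the flat `mem` array (standing in for the segment ES points at) are my own inventions, and it assumes the direction flag is clear so DI counts upward.

```c
#include <stdint.h>

/* Rough C equivalent of x86 "REP STOSW" with the direction flag clear:
   store the 16-bit value ax at offset di, advance di by 2, and repeat
   cx times. mem stands in for the segment addressed by ES and must
   cover every offset that gets written (hypothetical helper). */
static void rep_stosw(uint8_t *mem, uint16_t di, uint16_t ax, uint16_t cx)
{
    while (cx != 0) {
        mem[di]                 = (uint8_t)(ax & 0xFF);  /* low byte first: little-endian */
        mem[(uint16_t)(di + 1)] = (uint8_t)(ax >> 8);
        di = (uint16_t)(di + 2);
        cx--;
    }
}
```

So clearing 16384 memory locations would be 8192 word stores, e.g. `rep_stosw(vram, 0, 0x0720, 8192)`. The fill value 0x0720 (a space with attribute 7) is my assumption of a sensible text-mode blank, not something I've checked against the listing.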

Also, I vaguely recall something about a portion of the memory being used for the character-set above 0x7f, so I should look into that.

There's also some info 'round the web regarding the card's registers. I didn't look hard, but get the impression the majority of those aren't low-level enough to explain the *very initial* initialization-process... those I found seem to presume the card's already initialized (and you wish to reconfigure it). Though, I'm willing to bet that low-level info is out there, and probably in greater-depth than ever, what with the decades, and the demoscene.

Also interesting to note: I broke the DRAM write/verify routine up a little bit: instead of writing all 640K then reading it all back (which took about 4 seconds), it now handles that in 256 chunks; that way I can do some "realtime" stuff in the meantime... And... Now the DRAM shows no verification-failures! So, at least as far as that goes, interfacing with the bus is pretty reliable. Earlier verification-failures must've [still] been refresh-related.

Though, as I found out a log or two ago, different types of memories (and I/O devices) respond differently to the "typical" memory-bus: DRAMs apparently latch their writes on the falling-edge of /WE (?!), whereas SRAMs usually latch on the rising-edge. So, the 8088's strict bus timings may very well be designed to handle a variety of different cases that my timing doesn't quite match-up with.
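The chunked write/verify mentioned above could be sketched roughly like this: one call handles one chunk, so the main loop can do other things (including refresh) in between. This isn't my actual routine. The struct, the test pattern, and the plain array standing in for DRAM are all assumptions for illustration, and the chunk size is a parameter since "256 chunks" can be read more than one way.

```c
#include <stdint.h>
#include <stddef.h>

/* State for an incremental memory test (all names hypothetical). */
typedef struct {
    uint8_t *mem;         /* stand-in for DRAM behind the bus */
    size_t size;          /* total bytes to test */
    size_t chunk;         /* bytes handled per call */
    size_t next;          /* start address of the next chunk */
    unsigned long errors; /* verification failures so far */
} memtest_t;

/* Address-dependent pattern, so a stuck or shorted address line tends to show up. */
static uint8_t test_pattern(size_t addr)
{
    return (uint8_t)(addr ^ (addr >> 8));
}

/* Write one chunk, then read it back and count mismatches.
   Returns 1 while chunks remain, 0 once the whole range is done. */
static int memtest_step(memtest_t *t)
{
    size_t end = t->next + t->chunk;
    if (end > t->size)
        end = t->size;

    for (size_t a = t->next; a < end; a++)
        t->mem[a] = test_pattern(a);

    for (size_t a = t->next; a < end; a++)
        if (t->mem[a] != test_pattern(a))
            t->errors++;

    t->next = end;
    return t->next < t->size;
}
```

The main loop would call `memtest_step()` once per pass, doing its "realtime" work between calls, and look at `errors` once it returns 0.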

It's also quite plausible the CGA card is flaky... The images it displays are definitely weird (illegible in most cases, even in DOS), and its ... OH MAN I bet I got it (I'll come back to that). The card was quite corroded, requiring quite a bit of cleanup/reflow. It's entirely plausible I have some DRAM address/data lines shorted or traces that've been mostly-degraded.

"OH MAN, I bet I got it": It "looks a bit like beating," right...? And as part of the effort getting the card cleaned-up and functioning, I installed a separate crystal oscillator on-board, separate from the ISA's clock. Seems odd to me there was a place for a separate crystal, if it requires the timing to match the system-bus, but that'd sure explain the "beating."

Also, now that I think about it, there's a specific register to read-back, indicating that it's OK to write to the VRAM; the BIOS pays attention to that in some of its routines (which were too many and too long for me to translate to C). I *thought* that was only to prevent the "snow" effect, which occurs when you write a memory location at the same time the raster-drawing tries to read it. But maybe there's more to it than that. Hmmmm....
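For what it's worth, the register in question is the CGA status register at I/O port 0x3DA, where bit 0 set means the card isn't fetching from display memory at the moment. A polling wait along those lines might look like the sketch below. The port read goes through a function pointer so it can be tried on a host; `cga_wait_safe`, the timeout, and the `fake_io_read` stand-in are hypothetical and not lifted from the BIOS listing.

```c
#include <stdint.h>

#define CGA_STATUS_PORT 0x3DA
#define CGA_STATUS_SAFE 0x01   /* bit 0: display not fetching, OK to touch VRAM */

typedef uint8_t (*io_read_fn)(uint16_t port);

/* Wait for the START of a safe window: first let any window already in
   progress end, then wait for the bit to go high again.
   Returns 1 if a window was found, 0 if max_polls ran out. */
static int cga_wait_safe(io_read_fn io_read, unsigned max_polls)
{
    while (max_polls && (io_read(CGA_STATUS_PORT) & CGA_STATUS_SAFE))
        max_polls--;
    while (max_polls && !(io_read(CGA_STATUS_PORT) & CGA_STATUS_SAFE))
        max_polls--;
    return max_polls != 0;
}

/* Host-side stand-in for the real port read: replays a canned sequence. */
static const uint8_t fake_seq[] = { 0x01, 0x01, 0x00, 0x00, 0x01 };
static unsigned fake_pos;

static uint8_t fake_io_read(uint16_t port)
{
    (void)port;
    uint8_t v = fake_seq[fake_pos % (sizeof fake_seq / sizeof fake_seq[0])];
    fake_pos++;
    return v;
}
```

Waiting for the bit to drop before waiting for it to rise is meant to catch the beginning of a window rather than its tail end, so the write that follows has the whole window to finish in.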

------------

As far as write/verify to the VRAM, I hadn't tried that before posting this log. I've since added a "counter" displayed in the upper-left corner of the screen, indicating the attribute-settings used... (It was *hard* finding attributes that would display clearly! But that was a problem with DOS and my BIOS-Extension, as well)... But, in implementing that, it definitely had some issue displaying the number. First I tried writing each character 10 times, but it'd overwrite some with spaces (interestingly, almost always spaces.... maybe 0xff from pull-resistors? hmmm), and sometimes different attributes. So, then, for those important characters I did write/verify loops until verified correctly. Not quite read-back of the whole shebang, but a test (and no indication of how many times it takes to get right...)
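The write/verify loop for those important characters could be sketched like this, with the addition of returning the attempt count, which is exactly the information I said I didn't have. The bus access goes through function pointers, and the "flaky card" here is a made-up host-side stand-in that swallows the first two writes; none of these names come from my real code.

```c
#include <stdint.h>

typedef void    (*bus_write_fn)(uint32_t addr, uint8_t value);
typedef uint8_t (*bus_read_fn)(uint32_t addr);

/* Write a byte and read it back, retrying until it sticks.
   Returns the number of attempts used (1..max_tries),
   or 0 if it never verified. */
static unsigned write_verified(bus_write_fn wr, bus_read_fn rd,
                               uint32_t addr, uint8_t value,
                               unsigned max_tries)
{
    for (unsigned attempt = 1; attempt <= max_tries; attempt++) {
        wr(addr, value);
        if (rd(addr) == value)
            return attempt;
    }
    return 0;
}

/* Host-side stand-in for a flaky card: the first two writes
   land as a space (0x20) instead of the requested value. */
static uint8_t  fake_cell;
static unsigned fake_writes;

static void fake_write(uint32_t addr, uint8_t value)
{
    (void)addr;
    fake_writes++;
    fake_cell = (fake_writes <= 2) ? 0x20 : value;
}

static uint8_t fake_read(uint32_t addr)
{
    (void)addr;
    return fake_cell;
}
```

Logging the returned count per cell would give at least a crude map of how often, and where, writes go missing.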

And... no... My bright-idea regarding "beating" doesn't make as much sense... as I thought... Because it works with DOS (despite being illegible).

Eric Hertz


Discussions


What happens when you try to read back video RAM to the AVR? I wonder if you are writing to video memory properly, but it's not displaying because the CGA card needs some software setup. Are there registers on the card you need to set up? I don't know much about CGA, but I know VGA cards need a bit of initialization code. Usually the VGA BIOS is the first thing you see when you boot a PC, even before the memory test.


Thanks for that, I've updated this log with ramblings in response ;)

In short, you're probably on to something.

And, yahknow, now that you mention it... ISA-cards' ROMs are a great example of a reason to implement the x86 instruction-set... Those and drivers. Hmmm... (Though, source-code for drivers is findable for most devices, e.g. in the Linux kernel. Probably not so much for ROMs).

(My first computer was a Macintosh 68040 which had a 486-based "DOS Compatibility Card"... always found it pretty interesting that that 486 could access the Macintosh's Floppy drive/controller (and hard-disk images!) before the x86 OS was loaded... Hadn't even thought of the fact the thing could run in a window or in full-screen mode. Guess they must've had a pretty customized BIOS! Edit: Even weirder, it could *share* RAM with the 68040... How did that work?!)


Cool :-)

The ISA bus was well known as the most inconsistent "industry standard" ever. I guess that the timing assumptions (4.77MHz fixed frequency) can lead to interesting simulation problems.


Ah hah! Interesting.

I'm getting some really interesting results, here... Kinda makes me wonder how many of these are repeatable, and if-so, whether e.g. game-developers might've made use of oddities like these. I could imagine simulating these things would be darn-near impossible without knowing the original inside-and-out... and not at the specification-level, but the actual physical-world-level.


I can imagine. If the original IBM PC developers would have had access to your 8088 emulation they might have come up with something more predictable after playing with some parameters ;-)

By the way, this reminds me of the first I2C bit-banging code I wrote two decades ago: I unintentionally violated the 400kHz timing limits at a 300kHz bit rate by not ensuring 50% duty cycle at all times.


Interesting... what was the result...? Would've thought I2C to be pretty well-defined... to the extent that even bit-banged with crazy-duty-cycles under 400kHz, would work...

I've looked at some OS-level I2C implementations with support for old monitors... It's amazing the tremendous amount of code they implemented to support a monitor or two that most people would never encounter, just because it didn't meet I2C specs. And the fact they did bit-banging on those ports, despite the fact those ports were GPIOs *and* dedicated I2C controllers. Didn't make sense to me, but I spose if some devices don't follow the specs 100%, it could. Thing is, things like that aren't *that* difficult to define: latch on an edge, the end! Right? OTOH, kinda reminds me of endianness... One might think these things were set-in-stone, but there's always room for interpretation. Similar with my experiences with SDRAM; there's some base-level of communication that *has* to work, to use those DIMMs in a PC... but the actual implementation of that communication is entirely up to the designers' understanding.

BTW: your username is hard to _at_ ;)


About the I2C: it turned out that some devices don't care about the time of one bit cycle. No, they insist on the timing from one rising to the next falling edge or vice versa: "1µs means 500kHz, and I don't care if the total bit time is 3µs - balance the timing or bust". And here we don't even talk about the complications associated with multi-master, or bit-stretching. Yes, it's worth the trouble of using certified I2C µC peripherals, even if the C code for I2C bit-banging is a quick Internet search away.

The world of interface specification is full of endianness ambiguities. I remember that in the J1939 alarm signaling protocol the endianness can be declared, and one of the alternatives basically says "it's complicated" ;-)

Hehe you're right, when I chose my user name I didn't think of @ addressing. I try to follow the discussions in which I participated. If you don't get a reply please send me a PM :-)


Weird about that I2C device. I've only implemented a handful of I2C devices, so thankfully haven't run into any like that. But good to know about for future endeavors! That'd be frustrating to figure out.

Reminds me, again, of (PC-100-era, not DDR) SDRAM-ambiguities I've recently learnt about. Certain internal state-transitions need to happen between cycles, so I think most devices do internal-stuff by using the falling-edge, while they do I/O on the rising-edge. OTOH, some devices, apparently, don't do it that way (which I'd've thought the most obvious!), and (I'm totally hypothesizing) instead rely on some sort of PLL-like clock-resyncing/multiplying system to handle internal tasks. The end-effect being that some devices don't really care what clock-input you give 'em, it could be 5% duty-cycle as long as it meets the setup/hold requirements, or could even be completely bit-banged with whatever arbitrary signal one chooses. But the ones based on PLLs require darn-near 50% duty-cycles and stable clock frequencies. Both meet the generic specs, but one's a lot nicer to hack! Besides that, the other system is significantly more complicated... why not use the signals already available? And, of course, that level of detail usually isn't in the datasheets. They often seem to cough-up the generic specs when it comes to timings like those.


Actually, it's better this way... I'm not particularly creative in the artistic sense... If I had a perfectly-functional video-interface, I dunno what I'd do with it.


Sweet!

Can you have it print "Bad command or file name"? I think that was burned into my monitor phosphors in the 8088 days :-)


LOL, awesome!

Did you see the update? I think it'd probably come out more like "Banned comma or find aim"
