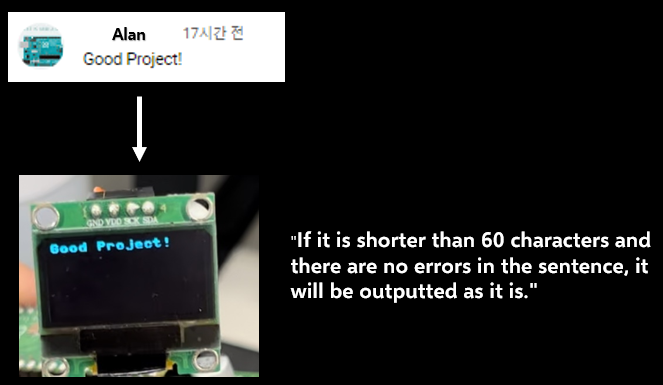

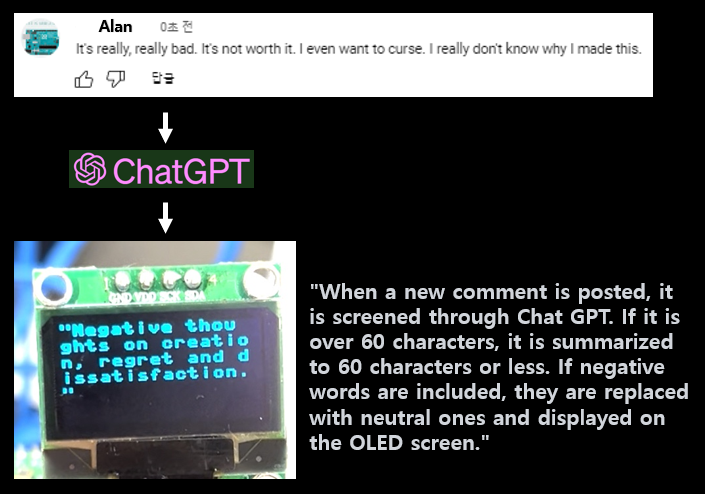

"Although there will be more information following, let me provide the operation explanation first."

"Let's get started. I'm not very fluent in English, so it may be difficult to understand. Also, there were a lot of debugging while writing the code, so there may be some unnecessary code included in the process."

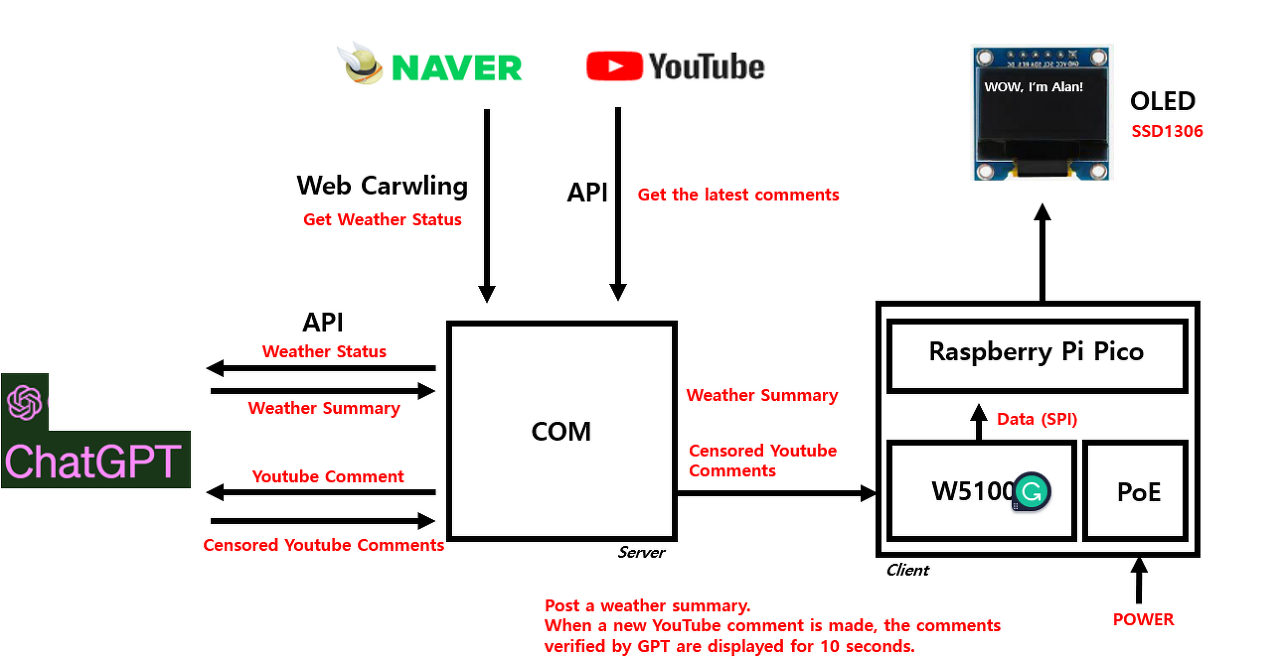

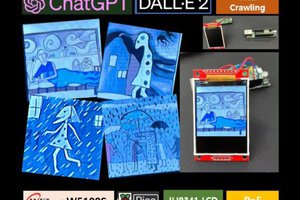

As the title suggests, this project fetches YouTube comments and weather information in real time and displays them on OLEDs.

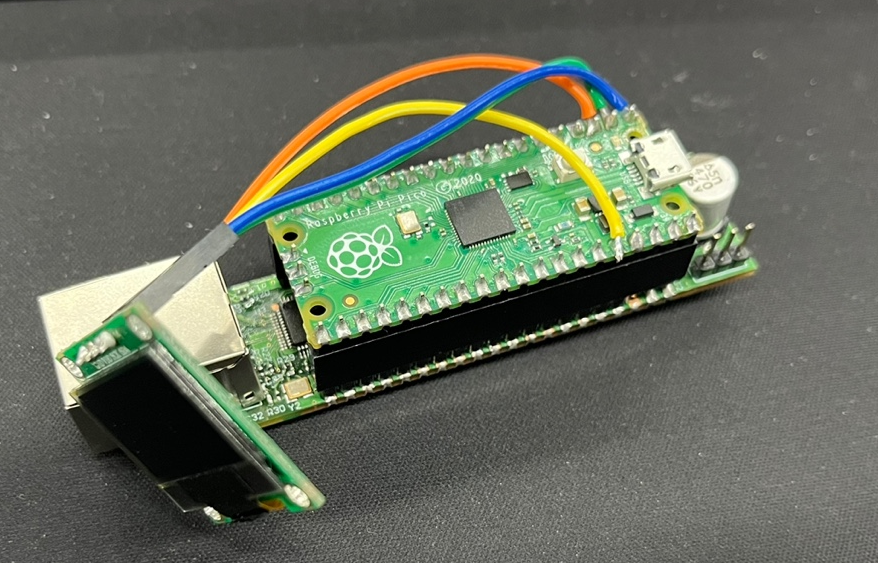

Let me introduce the hardware first.

First, the body of the device is a Raspberry Pi Pico board.

I chose it because I wanted a compact design. (It's also relatively simple.)

Communication goes over Ethernet. I used a W5100S + PoE board that I made myself. If you're curious, please refer to the link below!

The OLEDs are very cheap and simple SSD1306 modules. They communicate over SPI.

Once everything is connected, it's small and simple like this.

Start

Now that the hardware connection is complete, let's start designing the software.

I want to show weather information on the OLED. And when there is a new comment on a YouTube video that I posted, I want to show it on the OLED for 10 seconds. A device like this would be fun and useful sitting in front of the computer.
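The display rule described here (weather by default, a new comment for 10 seconds) can be sketched in plain Python. This is only an illustration of the idea, not the project's actual code: the class name `DisplayScheduler`, its methods, and the injectable `clock` parameter are all hypothetical.

```python
import time

COMMENT_HOLD_SECONDS = 10  # from the text: a new comment stays on screen for 10 seconds


class DisplayScheduler:
    """Hypothetical helper: weather by default, a new comment for a limited time."""

    def __init__(self, hold_seconds=COMMENT_HOLD_SECONDS, clock=time.monotonic):
        self.hold_seconds = hold_seconds
        self.clock = clock  # injectable so the logic can be tested without waiting
        self.weather = ""
        self._comment = None
        self._comment_until = 0.0

    def set_weather(self, text):
        """Remember the latest weather line."""
        self.weather = text

    def show_comment(self, text):
        """Start showing a comment; it expires hold_seconds from now."""
        self._comment = text
        self._comment_until = self.clock() + self.hold_seconds

    def current_text(self):
        """Return the text that should be on the display right now."""
        if self._comment is not None and self.clock() < self._comment_until:
            return self._comment
        self._comment = None  # the comment has expired (or there never was one)
        return self.weather
```

A main loop would call `current_text()` on every refresh and send the result to the OLED. Passing a fake clock instead of `time.monotonic` makes the timing easy to check on a PC.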

The simplified block diagram below shows the overall flow.

Web Crawling

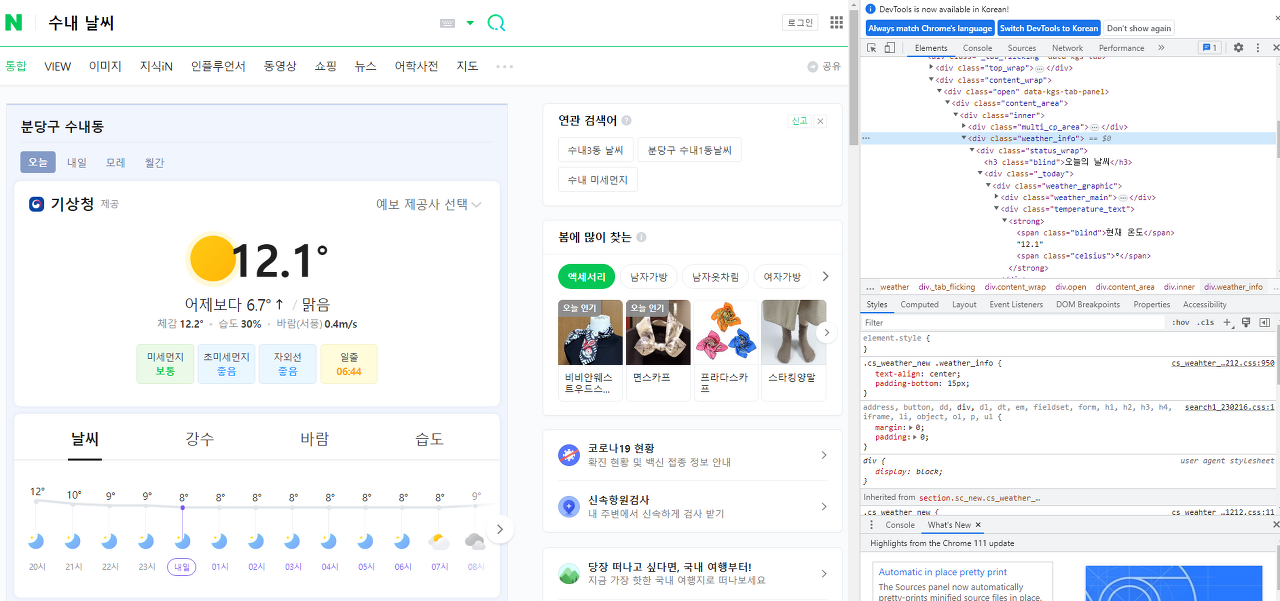

First, the weather information. Rather than showing plain weather data, I want it read out in a fun way, as a weathercaster would, so I decided to use the GPT API for that. GPT does not currently provide real-time data, so I decided to get the weather from Naver by web crawling.

I implemented it in Python, which is relatively lightweight and easy to work with.

from bs4 import BeautifulSoup

def getweather() :

html = requests.get('http://search.naver.com/search.naver?query=수내+날씨')

soup = BeautifulSoup(html.text, 'html.parser')

global weather

weather = ''

address = "Bundang Sunae"

weather += address + '*'

weather_data = soup.find('div', {'class': 'weather_info'})

# Current Temperature

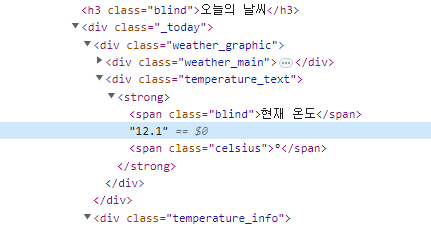

temperature = (str(weather_data.find('div', {'class': 'temperature_text'}).text.strip()[5:])[:-1])

weather += temperature + '*'

# Weather Status

weatherStatus = weather_data.find('span', {'class': 'weather before_slash'}).text

if weatherStatus == '맑음':

weatherPrint = 'Sunny'

elif '흐림':

weatherPrint = 'Cloud'

weather += weatherPrint

WebCrawling can be done simply with a package called BeautifulSoup.

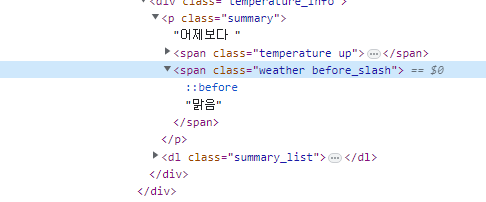

<Screen that appears when searching for weather in the water on the Naver site>

Let me go over the crawling briefly.

In the script, the temperature is read from the element with the class temperature_text.

The weather status is read from the span with the class weather before_slash.

I wanted to read the status in English, but I couldn't find it on the page, so I just hardcoded the translation...
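The two parsing steps just described can be tried without any network access. The sketch below makes two assumptions: that the text inside temperature_text looks like `현재 온도12.3°` (which is what the `[5:]` and `[:-1]` slices in the script suggest), and that a lookup table is an acceptable replacement for the hardcoded if/elif. The names `STATUS_EN`, `translate_status`, and `extract_temperature` are hypothetical, and only the first two table entries come from the script.

```python
# Hypothetical lookup table. "맑음" and "흐림" are taken from the script;
# the other entries are assumptions added for illustration.
STATUS_EN = {
    "맑음": "Sunny",
    "흐림": "Cloud",
    "비": "Rain",
    "눈": "Snow",
}


def translate_status(status_ko):
    """Translate a Korean weather status, falling back to the original text."""
    status_ko = status_ko.strip()
    return STATUS_EN.get(status_ko, status_ko)


def extract_temperature(raw_text):
    """Mirror the script's slicing: drop the 5-character label and the last character."""
    # Assumed input shape: "현재 온도12.3°" -> "12.3"
    return raw_text.strip()[5:][:-1]
```

With a table, supporting another status only means adding one entry, and an unknown status is passed through unchanged. Note that the slicing depends on the exact page text, so it would need adjusting if Naver changes the label.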

And when you run this, it will look like the following.

It outputs temperature and weather like this.
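The script packs its result into one string with `*` between the address, the temperature, and the status. Whatever consumes that string has to split it again. A minimal sketch follows, assuming the temperature part is a plain number such as `12.3`. `WeatherReport` and `parse_weather` are hypothetical names.

```python
from typing import NamedTuple


class WeatherReport(NamedTuple):
    """Hypothetical container for the three '*'-separated fields."""
    address: str
    temperature: float
    status: str


def parse_weather(line, sep="*"):
    """Split 'address*temperature*status' into a WeatherReport.

    Raises ValueError if a field is missing or the temperature is not a number.
    """
    address, temperature, status = line.split(sep, 2)
    return WeatherReport(address, float(temperature), status)
```

For example, `parse_weather("Bundang Sunae*12.3*Sunny")` gives a report whose `temperature` is the float `12.3`.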

YouTube API

Next, I will read the latest comments using the YouTube API.

    import pandas  # for handling the comment data
    from googleapiclient.discovery import build  # Google API client

The build function is imported from the googleapiclient.discovery package (part of google-api-python-client), and the pandas package is used to hold the comment data.

pandas is a convenient package for working with tabular data like this.

    def getYoutubecomments(youtubeID):
        comments = list()
        # 'Youtube_Key' is a placeholder for your own API key
        api_obj = build('youtube', 'v3', developerKey='Youtube_Key')
        response = api_obj.commentThreads().list(part='snippet,replies', videoId=youtubeID, maxResults=100).execute()

        global df
        while response:
            for item in response['items']:
                # Top-level comment: text, author, publish time, like count
                comment = item['snippet']['topLevelComment']['snippet']
                comments.append(
                    [comment['textDisplay'], comment['authorDisplayName'], comment['publishedAt'], comment['likeCount']])

                if item['snippet']['totalReplyCount'] > 0:
                    for reply_item in item['replies']['comments']:

...
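Each row collected above has the form `[textDisplay, authorDisplayName, publishedAt, likeCount]`. One possible way to decide whether a new comment has arrived is to remember the newest `publishedAt` seen so far. This is a sketch of that idea and not necessarily how the project does it. `NewCommentDetector` and the helper functions are hypothetical, and the timestamps are assumed to be ISO 8601 strings such as `2023-04-01T12:34:56Z`.

```python
from datetime import datetime


def parse_published_at(value):
    """Parse an ISO 8601 timestamp; 'Z' is rewritten for older Python versions."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def newest_comment(rows):
    """Return the row with the latest publishedAt (index 2), or None if empty."""
    if not rows:
        return None
    return max(rows, key=lambda row: parse_published_at(row[2]))


class NewCommentDetector:
    """Hypothetical helper that reports a comment only the first time it is seen."""

    def __init__(self):
        self._last_seen = None

    def check(self, rows):
        """Return the newest row if it is newer than anything seen before, else None."""
        newest = newest_comment(rows)
        if newest is None:
            return None
        published = parse_published_at(newest[2])
        if self._last_seen is not None and published <= self._last_seen:
            return None
        first_poll = self._last_seen is None
        self._last_seen = published
        # The first poll only sets the baseline, so old comments are not shown as new.
        return None if first_poll else newest
```

Calling `check()` after every poll returns a row only when something newer has appeared, and that row can then be shown on the OLED.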
