The Tiny Basic programming language dates back to the era when the first home and hobby microcomputers powered by 8-bit microprocessors started to appear.

Given the ROM and RAM size limits (2kB each) and the CPU used (8080), I chose this version of Tiny Basic:

;*************************************************************

;

; TINY BASIC FOR INTEL 8080

; VERSION 2.0

; BY LI-CHEN WANG

; MODIFIED AND TRANSLATED

; TO INTEL MNEMONICS

; BY ROGER RAUSKOLB

; 10 OCTOBER,1976

; @COPYLEFT

; ALL WRONGS RESERVED

;

;*************************************************************

I took the Tiny Basic source code from the CPUville site (by Donn Stewart, who has many other great retro-computing resources there) and made two modifications:

(1) changed from Intel 8251 UART to Motorola 6850 ACIA (I/O port locations and control / status register bits):

;--- definitions for Intel 8251 UART ------

;UART_DATA EQU 2H

;UART_CTRL EQU 3H

;UART_STATUS EQU 3H

;UART_TX_EMPTY EQU 1H

;UART_RX_FULL EQU 2H

;UART_INIT1 EQU 4EH ;1 STOP, NO PARITY, 8 DATA BITS, 16x CLOCK

;UART_INIT2 EQU 37H ;EH IR RTS ER SBRK RxE DTR TxE (RTS, ERROR RESET, ENABLE RX, DTR, ENABLE TX)

;--- definitions for Motorola 6850 ACIA ---

UART_DATA EQU 11H

UART_CTRL EQU 10H

UART_STATUS EQU 10H

UART_TX_EMPTY EQU 2H

UART_RX_FULL EQU 1H

UART_INIT1 EQU 03H ; reset

UART_INIT2 EQU 10H ; 8N1, divide clock by 1

;
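As a rough illustration of how the two status masks are typically used, here is a minimal Python sketch of polled character I/O. The constants are copied from the 6850 definitions above; the `FakeAcia` class and the `put_char` / `get_char` helpers are hypothetical stand-ins invented for this example, not the routines in the Tiny Basic source.

```python
# Constants copied from the 6850 definitions above.
UART_DATA = 0x11
UART_STATUS = 0x10
UART_TX_EMPTY = 0x02
UART_RX_FULL = 0x01


class FakeAcia:
    """Hypothetical stand-in for the port hardware, for illustration only."""

    def __init__(self, incoming=b""):
        self.incoming = list(incoming)  # bytes waiting to be received
        self.sent = []                  # bytes written to the data port
        self.tx_busy = 0                # status polls left until TX is ready

    def port_in(self, port):
        if port == UART_STATUS:
            status = 0
            if self.incoming:
                status |= UART_RX_FULL
            if self.tx_busy == 0:
                status |= UART_TX_EMPTY
            else:
                self.tx_busy -= 1
            return status
        if port == UART_DATA:
            return self.incoming.pop(0)
        raise ValueError(f"unmapped port {port:#x}")

    def port_out(self, port, value):
        if port != UART_DATA:
            raise ValueError(f"unmapped port {port:#x}")
        self.sent.append(value & 0xFF)
        self.tx_busy = 2  # pretend the transmitter is busy for two polls


def put_char(dev, ch):
    """Spin until the transmit register is empty, then write one byte."""
    while not (dev.port_in(UART_STATUS) & UART_TX_EMPTY):
        pass
    dev.port_out(UART_DATA, ch)


def get_char(dev):
    """Spin until a byte has been received, then read it."""
    while not (dev.port_in(UART_STATUS) & UART_RX_FULL):
        pass
    return dev.port_in(UART_DATA)
```

Switching from the 8251 to the 6850 swaps the two mask values and moves the ports, so code written against the symbolic names like this needs no other change.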

(2) fixed the "overflow on change sign" bug

When changing the sign of a 16-bit two's complement integer, there are two cases in which the MSB stays the same: 8000H (-32768) negates to 8000H, which is an overflow error, and 0000H negates to 0000H, which is not an error. The original code did not handle the second case and treated it as an overflow, which caused the HOW? error message when I first ran the benchmark program. The original routine is listed first, followed by the fixed version:

;

CHGSGN: MOV A,H ;*** CHGSGN ***

PUSH PSW

CMA ;CHANGE SIGN OF HL

MOV H,A

MOV A,L

CMA

MOV L,A

INX H

POP PSW

XRA H

JP QHOW

MOV A,B ;AND ALSO FLIP B

XRI 80H

MOV B,A

RET

;

CHGSGN: MOV A,H ;*** CHGSGN ***

PUSH PSW

CMA ;CHANGE SIGN OF HL

MOV H,A

MOV A,L

CMA

MOV L,A

INX H

POP PSW

XRA H

JM FLIPB ;OK, OLD AND NEW SIGNS ARE DIFFERENT

MOV A,H

ORA L

JNZ QHOW ;ERROR IF -(-32768)

FLIPB: MOV A,B ;AND ALSO FLIP B

XRI 80H

MOV B,A

RET
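The difference between the two routines can be checked exhaustively with a small model. The following Python sketch is only an approximation of the assembly: it models the HL negation and the decision to jump to QHOW, and ignores the flipping of register B. The function names are made up for this illustration.

```python
def negate16(hl):
    """CMA on H and L followed by INX H: two's complement of a 16-bit value."""
    return (~hl + 1) & 0xFFFF


def sign_unchanged(old, new):
    """True when bit 15 is the same before and after the negation."""
    return ((old ^ new) & 0x8000) == 0


def chgsgn_original(hl):
    """Return (result, how_error) following the original routine (JP QHOW)."""
    new = negate16(hl)
    return new, sign_unchanged(hl, new)


def chgsgn_fixed(hl):
    """Return (result, how_error) following the fixed routine."""
    new = negate16(hl)
    if not sign_unchanged(hl, new):  # JM FLIPB: signs differ, all good
        return new, False
    return new, new != 0             # MOV A,H / ORA L / JNZ QHOW


# Collect every 16-bit input that each version reports as an error.
original_errors = [v for v in range(0x10000) if chgsgn_original(v)[1]]
fixed_errors = [v for v in range(0x10000) if chgsgn_fixed(v)[1]]
print([hex(v) for v in original_errors])  # ['0x0', '0x8000']
print([hex(v) for v in fixed_errors])     # ['0x8000']
```

In this model the original version rejects both 0000H and 8000H, while the fixed version rejects only 8000H.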

To assemble the source into a binary I used the zmac cross-assembler with the -8 command line flag, which selects 8080-style mnemonics instead of the default Z80-style ones. Note that the VHDL project in ISE 14.7, which produces the .bin file that is downloaded to the FPGA, uses the assembler's .hex output rather than its .bin output (the .bin would be used when programming EPROMs, for example). This process is described in a separate project log.

Running and benchmarking

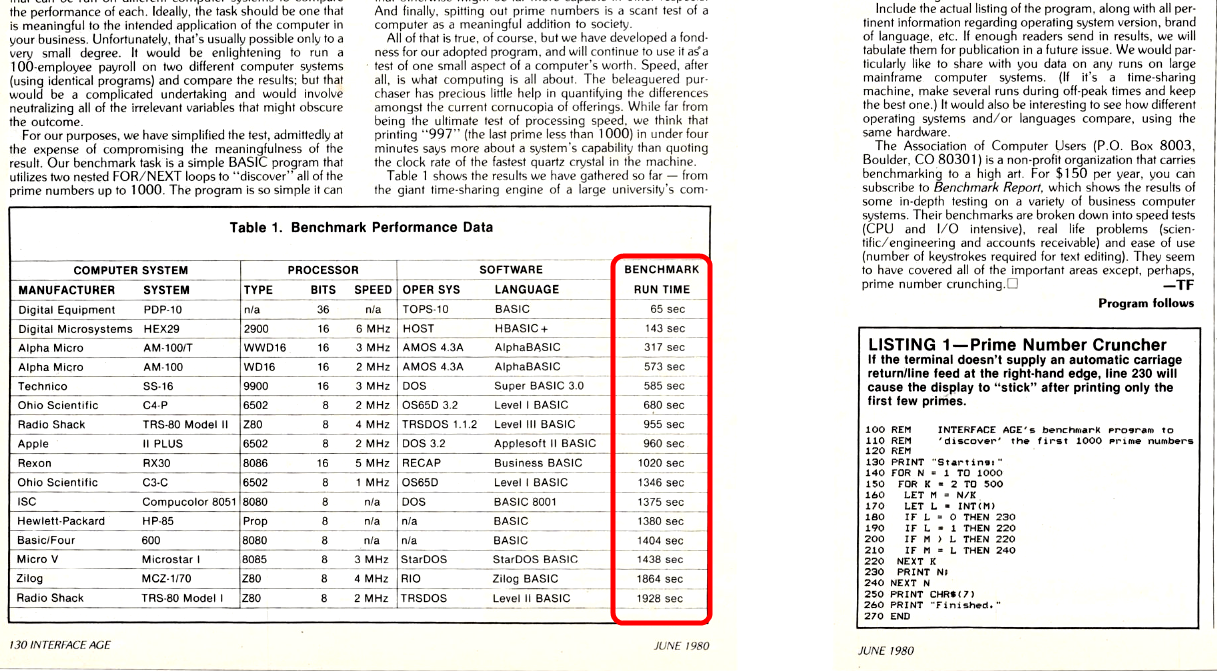

To test Tiny Basic, I used the benchmark program proposed in a June 1980 Interface Age magazine article: a very simple algorithm that looks for the prime numbers up to 1000. Only two minor modifications were needed: replacing INT(), which Tiny Basic lacks because it does not support floating-point numbers, with integer arithmetic, and commenting out CHR$(7) (which on many ANSI-compatible terminals would sound the "bell" beep).

100 REM -------------------------------------

101 REM Simple benchmark - find primes < 1000

103 REM -------------------------------------

104 REM https://archive.org/details/InterfaceAge198006/page/n131/mode/2up

110 REM -------------------------------------

130 PRINT "Starting."

140 FOR N = 1 TO 1000

150 FOR K = 2 TO 500

160 LET L = N/K

170 LET M = N-K*L

180 IF L = 0 GOTO 230

190 IF L = 1 GOTO 220

200 IF M > L GOTO 220

210 IF M = L GOTO 240

220 NEXT K

230 PRINT N;

240 NEXT N

250 REM PRINT CHR$(7)

260 PRINT "Finished."

270 STOP
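To make the control flow of the listing easier to follow, here is a Python transcription. It is a sketch, not the program that was timed. With `literal=True` it follows the listing line by line, where line 210 compares the remainder M with the quotient L. With `literal=False` it tests for a zero remainder instead; this is an assumption about what the INT()-based original meant, where a float quotient equal to its integer part signals exact divisibility. The function name and the `literal` parameter are invented for this example.

```python
def benchmark(n_max=1000, k_max=500, literal=True):
    """Return the list of values the program would print."""
    printed = []
    for n in range(1, n_max + 1):        # 140 FOR N = 1 TO 1000
        keep = True
        for k in range(2, k_max + 1):    # 150 FOR K = 2 TO 500
            l = n // k                   # 160 integer quotient
            m = n - k * l                # 170 remainder
            if l == 0:                   # 180 K is larger than N: print N
                break
            if l == 1:                   # 190 try the next K
                continue
            if m > l:                    # 200 try the next K
                continue
            skip = (m == l) if literal else (m == 0)
            if skip:                     # 210 jump to NEXT N without printing
                keep = False
                break
        if keep:
            printed.append(n)            # 230 PRINT N
    return printed


as_listed = benchmark(literal=True)
by_remainder = benchmark(literal=False)
print(as_listed[:8])       # [1, 2, 3, 4, 5, 6, 7, 9]
print(by_remainder[:6])    # [1, 2, 3, 5, 7, 11]
print(len(by_remainder))   # 169
```

The two variants print different values: the literal transcription prints 4 and skips 8, while the zero-remainder variant prints 1 followed by the 168 primes below 1000. Both run the same nested loops, but the number of iterations differs, so the timings below apply to the listing as written.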

Results running at different CPU clock frequencies:

104 REM https://archive.org/details/InterfaceAge198006/page/n131/mode/2up

105 REM SW210  CPU (MHz)  Time (m:s)  Time (s)

106 REM 100    01.5625    52m23       3143

107 REM 101    03.1250    26m12       1572

108 REM 110    06.2500    13m06       786

109 REM 111    25.0000    3m17        197

110 REM -------------------------------------

The execution time scales almost exactly inversely with the CPU clock frequency. This is expected because serial I/O is used sparingly and the memory interface never adds wait cycles; it always keeps up with the CPU, which was not the case in many real computers of that era. An 8080 running at the 25MHz "warp speed" is comparable to another exotic processor of the era, the bit-sliced HEX-29 (AMD's "showcase design" introduced in the classic bit-slice design cookbook) at 6MHz, while at the more realistic 3.125MHz it is comparable to a 3MHz 8085-based computer of the era (the Intel 8080A was rated for a maximum clock frequency of 2MHz).
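One quick way to check the scaling is to multiply each clock frequency by its run time: if the run time depends only on the clock, the product (the total number of clock cycles, in millions) should be nearly constant. The Python sketch below uses only the four measurements from the table above; the variable names are arbitrary.

```python
# (CPU clock in MHz, run time in seconds) from the results table.
runs = [(1.5625, 3143), (3.125, 1572), (6.25, 786), (25.0, 197)]

# MHz * s = millions of clock cycles spent on the whole benchmark.
megacycles = [mhz * seconds for mhz, seconds in runs]
spread = (max(megacycles) - min(megacycles)) / min(megacycles)

for (mhz, seconds), mc in zip(runs, megacycles):
    print(f"{mhz:8.4f} MHz  {seconds:5d} s  {mc:9.2f} Mcycles")
print(f"relative spread: {spread:.2%}")  # relative spread: 0.29%
```

All four runs come out at roughly 4.9 billion clock cycles, with the products differing by less than 0.3%. The timings were recorded to the nearest second, which limits the precision most for the 197 s run.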

(image from LALU (lookup ALU CPU))

zpekic
