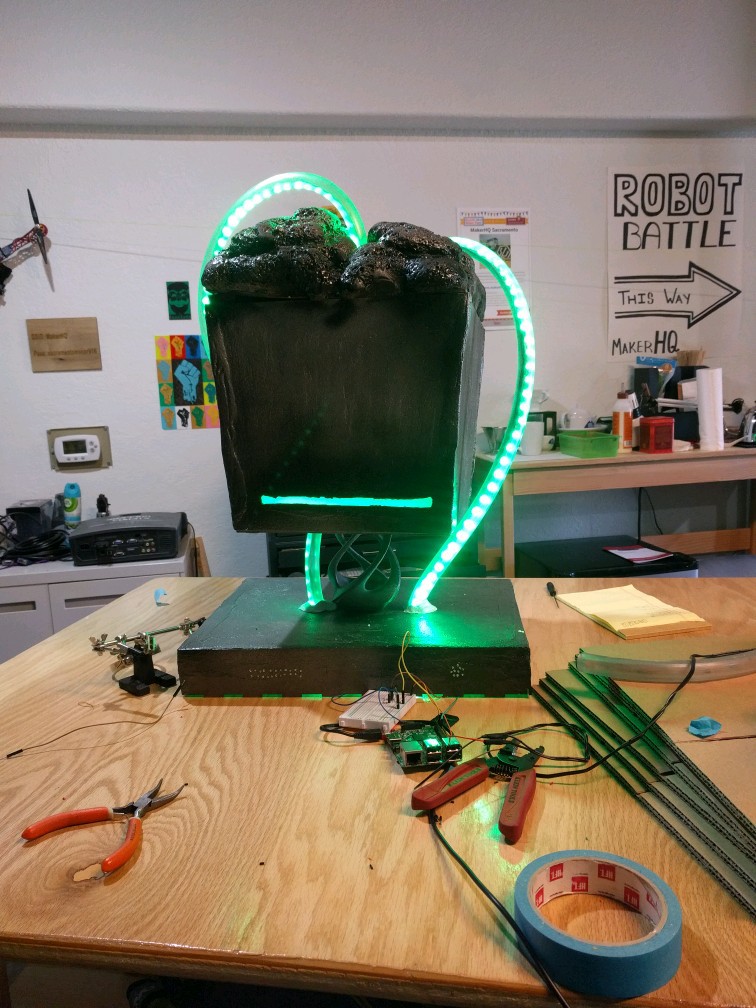

Deep Thought is your normal, somewhat creepy talking brain that gives you every answer you will ever need!

This project uses speech recognition, NeoPixel LED strips, and a Raspberry Pi to build the beginnings of our own home-baked, all-knowing personal assistant. Who needs Alexa when you have Deep Thought?

The video above shows our prototype, in which Deep Thought recognizes certain spoken patterns and responds with the matching phrases from The Hitchhiker's Guide to the Galaxy. We hope to add more functionality to Deep Thought in the coming weeks and give him a permanent role in our makerspace!
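For anyone curious how the pattern-to-phrase step might look, here is a minimal sketch in Python. It is not our actual prototype code: the `RESPONSES` table, the `FALLBACK` line, and the `respond` function are all hypothetical names made up for illustration, and it assumes the speech recognizer has already turned the audio into a plain text transcript.

```python
import re

# Hypothetical pattern table: each entry pairs a regex with the phrase
# Deep Thought should speak when the transcript matches it.
RESPONSES = [
    (
        re.compile(
            r"\banswer\b.*\blife\b.*\buniverse\b.*\beverything\b",
            re.IGNORECASE,
        ),
        "Forty-two.",
    ),
    (
        re.compile(r"\bhow long\b", re.IGNORECASE),
        "Seven and a half million years.",
    ),
]

# Hypothetical reply used when no pattern matches.
FALLBACK = "I'll have to think about that."


def respond(transcript: str) -> str:
    """Return the phrase for the first pattern found in the transcript."""
    for pattern, reply in RESPONSES:
        if pattern.search(transcript):
            return reply
    return FALLBACK


if __name__ == "__main__":
    print(respond("What is the answer to life, the universe, and everything?"))
```

Keeping the patterns in a simple list means new questions can be added without touching the matching logic, and the first match wins, so more specific patterns should come first.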

Richard Julian

Arya

Mike

RodolpheH

jason.gullickson