Point Cloud Kinematics

There's actually a lot more to it than meets the eye as far as information is concerned, but it's embedded and bloody hard to get to, because it's several layers of integrals, each with its own meta-information. I've touched on Cloud Theory before, and used it to solve many problems including this one, but giving a cloud structure requires a bit of extra work mathematically.

Our universe, being atomic, relies on embedded information to give it form. Look at a piece of shiny metal: it's pretty flat and solid, but zoom in with a microscope and you see hills and valleys, great rifts in the surface, and it doesn't even look flat any more.

Zoom in further with a scanning electron microscope and you begin to see order - regular patterns as the atoms themselves stack in polyhedral forms.

If you could zoom in further you'd see very little, because the components of an atom are so small even a single photon can't bounce off them. In fact they are so small they only exist because they are there, and they are only 'there' because of boundaries formed by opposing forces creating an event horizon - a point at which an electron, for example, is considered part of an atom or not. It's an orbital system much like the solar system: its size is governed by the mass within it, which is the sum of all the orbiting parts. That in turn governs where it can be in a structure, and the structure's material behaviour relies upon it as meta-information.

To describe a material mathematically, you then also have to supply information about how it is built - much as an architect supplies meta-information to a builder by using a standard brick size. Without this information the building won't be to scale, not even the scale written on the plan. And yet that information does not appear on the plan. Brick size is meta: information that describes information.

A cloud is a special type of information. It contains no data. It IS data, as a unit, but it is formed solely of meta-information. Each particle in the cloud is only there because another refers to it, so a cloud either exists or it doesn't as an entity, and is only an entity when it contains information. It is self-referential, so all the elements refer only to other elements within the set, and it doesn't have a root like a tree of information does.
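As a rough illustration of that self-referential property, here is a minimal sketch that checks whether a set of references is closed in this sense. The function name `is_closed_cloud` and the toy word list are made up for the example and are not part of the robot code.

```python
def is_closed_cloud(refs):
    """Return True if every element refers only to members of the set
    and every element is referred to by at least one other member."""
    members = set(refs)
    referenced = set()
    for name, targets in refs.items():
        for target in targets:
            if target not in members:
                return False          # reference points outside the cloud
            if target != name:
                referenced.add(target)
    return referenced == members      # nothing is left as an unreferenced root


# Toy "dictionary": each word is described only by other words in the set.
toy = {
    "big": ["large"],
    "large": ["big", "size"],
    "size": ["big", "large"],
}
print(is_closed_cloud(toy))

# A tree-like structure fails the test: nothing refers back to "a".
print(is_closed_cloud({"a": ["b"], "b": []}))
```

The second call fails because "a" behaves like a root, which is exactly what a cloud in this sense doesn't have.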

A neural network is a good example of this type of information, as is a complete dictionary. Every word in the language has a meaning which is described by other words, each of which is also described. Reading a dictionary as a hypertext document can be done, although you'd visit words like 'to', 'and' and 'the' rather a few times before you had accessed every word in it at least once. You could draw this map of hops from word to word, and that drawing is the meta-map for the language, its syntax embedded in the list of words. Given wholesale to a computer, it enables clever tricks like Siri, which isn't very intelligent even though it understands sentence construction and the meaning of the words within a phrase. There's more: context, which supplies information not even contained in the words. Structure...

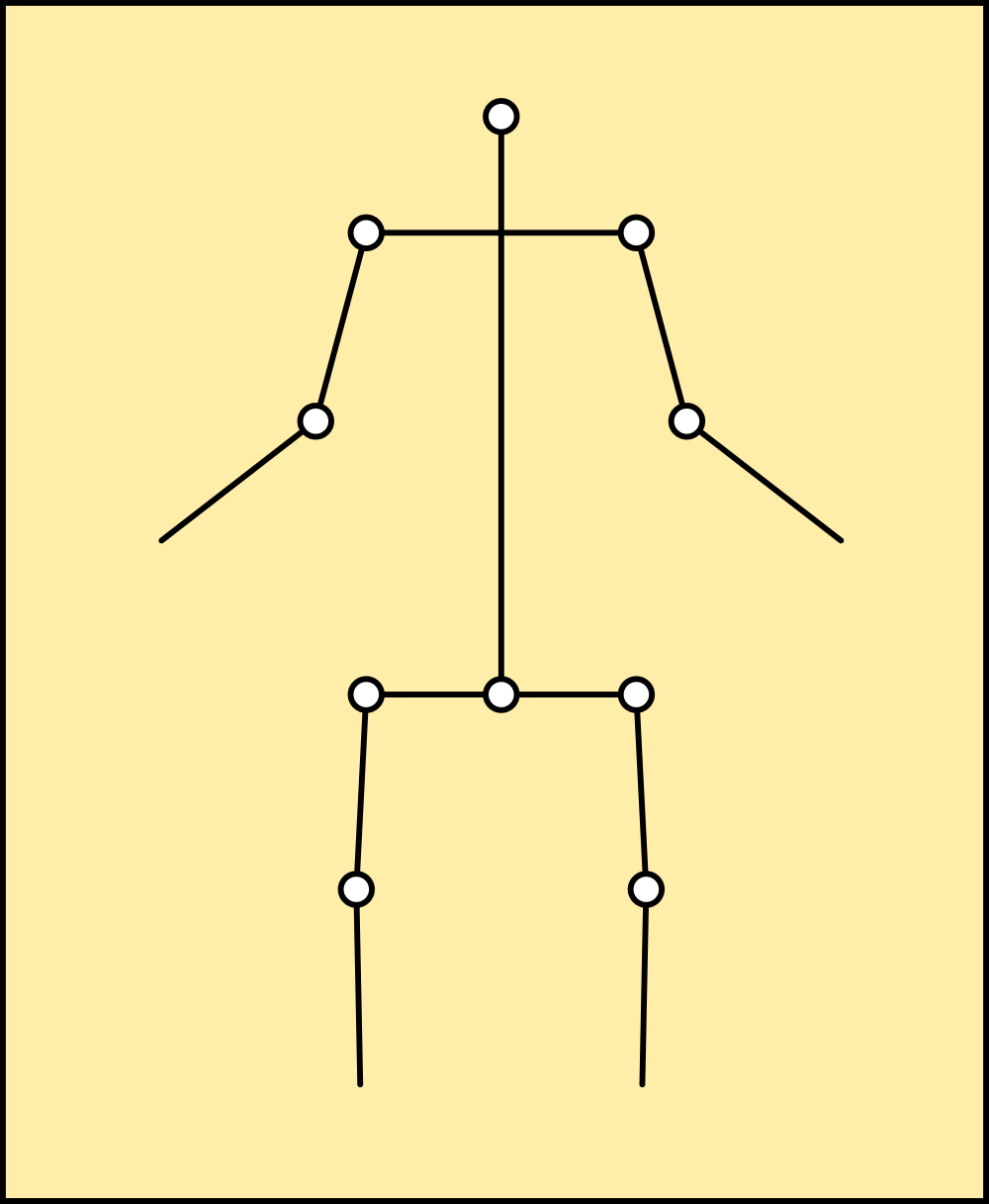

This meta-information is why I've applied cloud theory to robotics, and so far it has covered language processing, visual recognition and now balance. Even though the maths to create it is complicated, cloud-based analysis of the surface of the robot is a lot simpler than the trigonometry required to calculate the physics as well.

But it's not all obvious...

I first tried to create a framework for the parts to hang off of and immediately ran into trouble with Gimballing. I figured it would be a simple task to assign a series of coordinates from which I could obtain angle and radius information, modify it, and then write it back to the framework coordinates.

This works, and hangs the parts off correctly using the axes to offset each part. To begin with, I just stacked them on top of each other, and then rebuilt that part of the calculation to find the angle the part is at, which worked until I hit the body servos. Working upwards from the foot on the floor, the first servo rotates in the Y axis to tilt the robot back and forwards, and the next tilts it left and right. Above that is another back-and-forwards servo, and above that another left-right tilt. This allows the body to remain parallel to the foot and locate above it in any position within a small circle, so to turn I added a servo to swing the leg around its axis. This is the one that causes all the trouble, because it changes a left-right tilt into a back-forward tilt as far as the body is concerned, and worse, reverses the other leg completely so a back-forward tilt is a forward-back one.

This is called Gimballing, and it's a pain in the arse.
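A minimal sketch of the effect, using plain rotation formulas rather than the robot's own code. It assumes Z is vertical and that a tilt about X moves the top of the leg along Y. The helper names `rot_x` and `rot_z` are invented for the illustration.

```python
import math


def rot_x(p, deg):
    """Rotate point p about the X axis by deg degrees."""
    x, y, z = p
    a = math.radians(deg)
    return (x, y * math.cos(a) - z * math.sin(a), y * math.sin(a) + z * math.cos(a))


def rot_z(p, deg):
    """Rotate point p about the Z (vertical) axis by deg degrees."""
    x, y, z = p
    a = math.radians(deg)
    return (x * math.cos(a) - y * math.sin(a), x * math.sin(a) + y * math.cos(a), z)


tip = (0.0, 0.0, 1.0)        # a point one unit up the leg
tilted = rot_x(tip, 10)      # the same 10 degree servo tilt every time

# Swing the leg around the vertical axis and see where the tilt ends up.
for swing in (0, 90, 180):
    x, y, z = rot_z(tilted, swing)
    print(swing, round(x, 3), round(y, 3), round(z, 3))
```

With no swing the tilt shows up as an offset along Y. At 90 degrees the same servo movement shows up along X instead, and at 180 degrees it is back on Y with the sign reversed, which is the behaviour described above.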

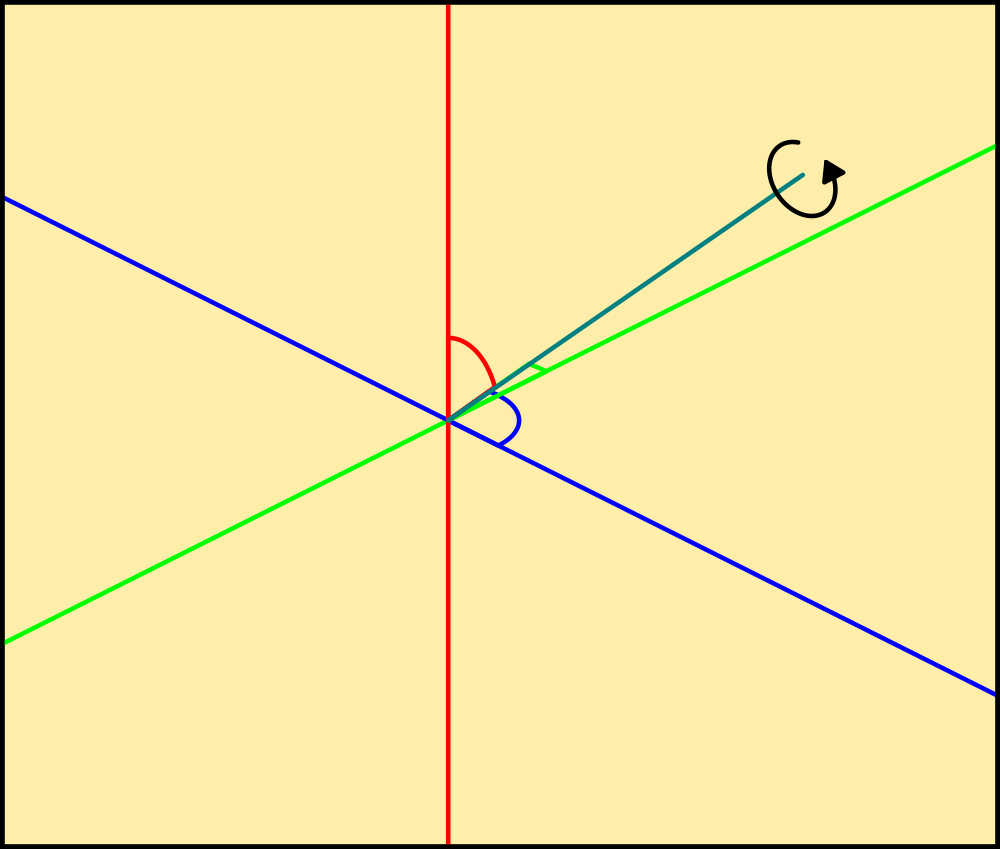

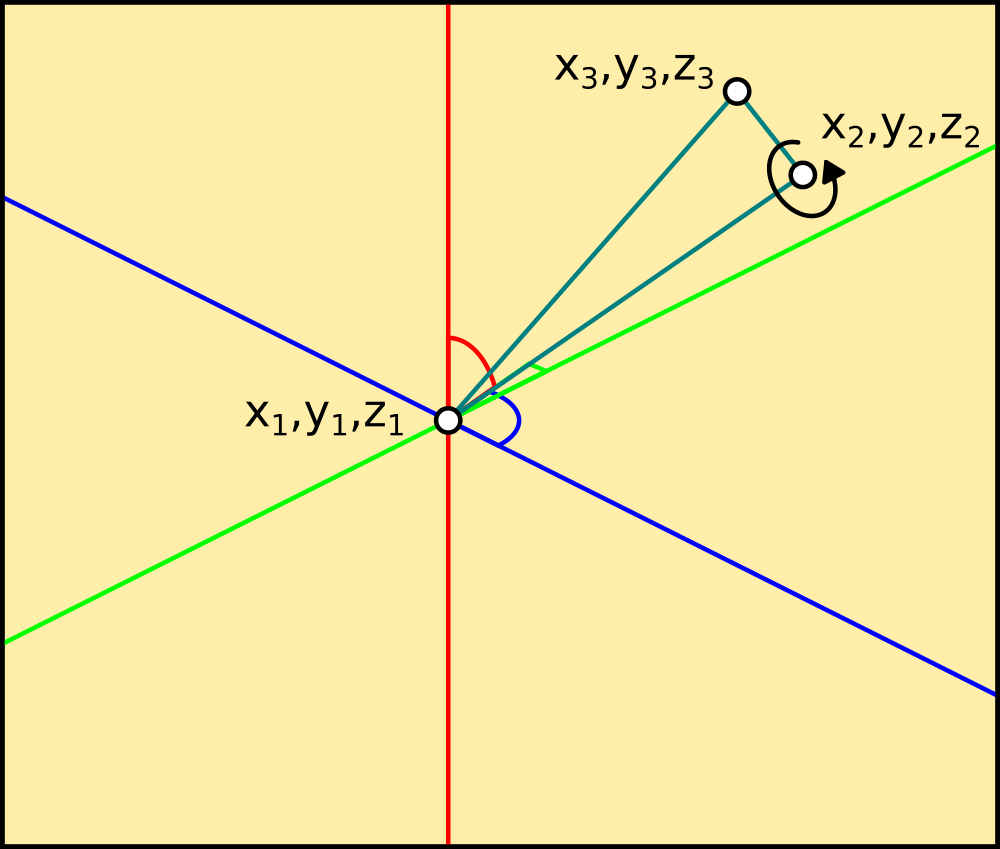

The above drawing illustrates this neatly, with X in green, Y in blue and Z in red. The actual angles of the cyan line are specified from the centre as offsets from the axis, so looking along the red line, green and blue are a cross and the cyan line rotates around it. The same applies with each axis, but it doesn't specify rotation around the axis of the cyan line itself. It's just a line. It needs to have at least another dimension, width or height, before I can tell which way up it is as well as which way it's pointing. That gives it an area, a plane surface that contains information about the axis of its edge, which a single line doesn't have.

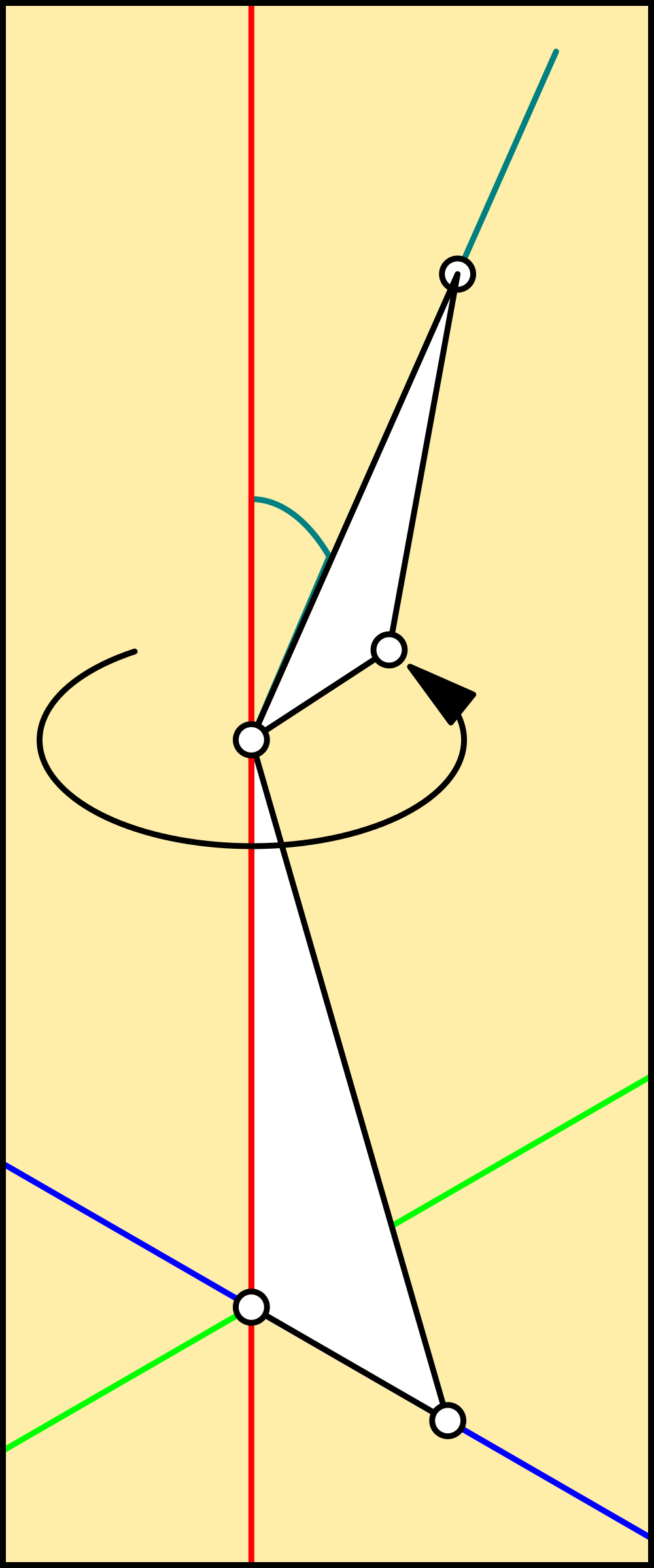

So, each of the joints in the robot has coordinates like this: (XYZ), (XYZ), (XYZ), which each specify a corner of a right triangle. The Hypotenuse points at the origin at an angle oblique to the axis which runs along its bottom edge, and the right angle rotates around it, giving it dimension that I can use to locate the top surface of the part - and therefore keep it pointing in the right direction. Cartesian coordinates translate and rotate with respect to each other, so the rotation is done using Pythagorean maths rather than Euclidean, which takes no account of world coordinates like this.
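To show why three points are enough where a line isn't, here is a small sketch that turns a triangle's corners into a direction plus an 'up' vector. It uses a standard cross product and is not taken from the robot code. The names `frame_from_triangle`, `corner`, `along` and `across` are made up for the example.

```python
import math


def sub(a, b):
    """Component-wise a - b."""
    return tuple(ai - bi for ai, bi in zip(a, b))


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(v):
    """Scale v to length 1."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def frame_from_triangle(corner, along, across):
    """corner is the right-angle vertex; along and across are the other two."""
    forward = unit(sub(along, corner))    # which way the part points
    side = unit(sub(across, corner))      # the second edge gives it width
    up = unit(cross(forward, side))       # normal to the triangle's plane
    return forward, side, up


# Two triangles sharing the same line, rolled a quarter turn around it.
a = frame_from_triangle((0, 0, 0), (0, 0, 2), (1, 0, 0))
b = frame_from_triangle((0, 0, 0), (0, 0, 2), (0, 1, 0))
print(a[0] == b[0], a[2], b[2])
```

Both triangles point the same way, but their 'up' vectors differ, which is the extra information the single cyan line was missing.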

While it isn't immediately apparent, the vertex of the right triangle is the locus for the part, which points at the locus of the next. It is done this way because the very first right triangle sits with its Opposite on the origin pointing North, and the Adjacent and Hypotenuse point upwards to meet at the next vertex, which points at right angles to articulate the joint around the Opposite's axis. This continues up the limb, through the body and out to the free foot.

Assigning a weight to each of those nodes and averaging it across the X-Y plane is enough to balance the entire structure without calculating the limb angles or computing the entire mesh. Using the full mesh works with even more accuracy, though, and would be needed with another pair of limbs not in contact with the ground, or with non-symmetrical parts. Technically this is cloud sampling rather than cloud analysis, but the end result is the same.
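The weighted average itself is only a couple of lines. This is a minimal sketch with made-up node positions and weights. The name `balance_point` and the sample numbers are assumptions for illustration only.

```python
def balance_point(nodes):
    """nodes is a list of ((x, y, z), weight) pairs.

    Returns the weighted average position in the X-Y plane; z is ignored
    because only the point's position over the floor matters for balance.
    """
    total = sum(w for _, w in nodes)
    bx = sum(p[0] * w for p, w in nodes) / total
    by = sum(p[1] * w for p, w in nodes) / total
    return bx, by


# Hypothetical nodes: a heavy foot at the origin and two lighter joints above it.
nodes = [
    ((0.0, 0.0, 0.0), 2.0),
    ((4.0, 0.0, 10.0), 1.0),
    ((2.0, 3.0, 20.0), 1.0),
]
print(balance_point(nodes))
```

If that point sits over the foot that is on the floor, the structure should stay up. If it drifts outside, a servo needs to move to pull it back.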

This is all it takes to balance a model using Point Cloud Kinematics.

```python
import pygame

# coords, mesh, axes, origins, rotate(), qspoly(), renderise(), display(),
# screen, cx, cy, xa, ya, quit and chg are all set up elsewhere in the project.

while not quit:                                        # loop forever
    model = []                                         # store the model's coords as a cloud
    xaverage = []                                      # stores all the x coordinates for averaging
    yaverage = []                                      # and all the y coordinates
    for n in range(len(coords)):
        model.append([0, 0, 0])                        # place all points at the centre
    for n in range(len(mesh) - 1, -1, -1):             # begin at the free foot and work down
        for m in mesh[n]:                              # pick up the right coordinates for the part
            for c in m[0]:                             # all 4 corners of all the polygons
                model[c] = coords[c]                   # transfer them to the model
        for c in range(len(model)):                    # now go through the model points
            x, y, z = model[c]                         # one by one
            s = 0                                      # clear last offset position
            if n > 0:                                  # if not on the floor
                r = axes[n][0][1][0] + str(axes[n][0][1][1])   # pick up the joint's actual position
                x, y, z = rotate(x, y, z, r)           # rotate the part around the origin
                s = origins[n][2] - origins[n - 1][2]  # move the part +z so it matches the axis
            model[c] = x, y, z + s                     # of the next part below it
    for n in model:
        xaverage.append(n[0])                          # store the modified coords for averaging
        yaverage.append(n[1])
    balance = [sum(xaverage) / len(xaverage), sum(yaverage) / len(yaverage)]   # bang, that's the centre
    if int(balance[0]) > 1:                            # line up the ankle servo
        axes[1][0][1][1] = axes[1][0][1][1] + 1
        chg = True
    if int(balance[0]) < 0:
        axes[1][0][1][1] = axes[1][0][1][1] - 1
        chg = True
    if chg:                                            # display the model
        pygame.draw.rect(screen, (255, 255, 192), ((0, 0), (1024, 768)))      # clear the screen
        rend = qspoly(renderise(mesh, model, (cx, cy), 'z' + str(ya) + ' x' + str(xa), True))   # build the render
        display(rend, 'z' + str(ya) + ' x' + str(xa))
        chg = False
```
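The `rotate()` helper isn't shown in this log. Going only by how it is called, with an axis letter joined to an angle such as `'y10'`, a hypothetical stand-in could look like the sketch below. The use of degrees, the sign conventions and the error handling are all assumptions, and the real function may well differ.

```python
import math


def rotate(x, y, z, r):
    """Hypothetical stand-in: r is an axis letter followed by an angle, e.g. 'y10'.

    The angle is assumed to be in degrees and the point is rotated about
    that axis through the origin.
    """
    axis, a = r[0].lower(), math.radians(float(r[1:]))
    c, s = math.cos(a), math.sin(a)
    if axis == 'x':
        return x, y * c - z * s, y * s + z * c
    if axis == 'y':
        return x * c + z * s, y, -x * s + z * c
    if axis == 'z':
        return x * c - y * s, x * s + y * c, z
    raise ValueError("unknown axis in rotation string: " + r)


# A point one unit up, tilted 10 degrees about the Y axis.
print(rotate(0.0, 0.0, 1.0, 'y10'))
```

Keeping the axis and angle together in one string matches the way the loop builds `r` from the `axes` table, so the same value can be passed straight through.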

Morning.Star
