(under construction)

So, the cat-detector was tested in better lighting conditions! Remember, we are building a cat-detector to test out image classification using TensorFlow on the Pi, and to investigate some of our future directions for elephant detection too. For instance, we can test out tweeting our labelled cat images and asking Twitter users to add their own labels (i.e. the names of the cats). That gives us labelled data for supervised learning. Much of this post will cover that.

1. Let's see what happened with the cat-detector in better lighting conditions!

Oh no! Well, to be fair, the largest component of the image is actually very similar to a prayer rug!

Prayer rug again!

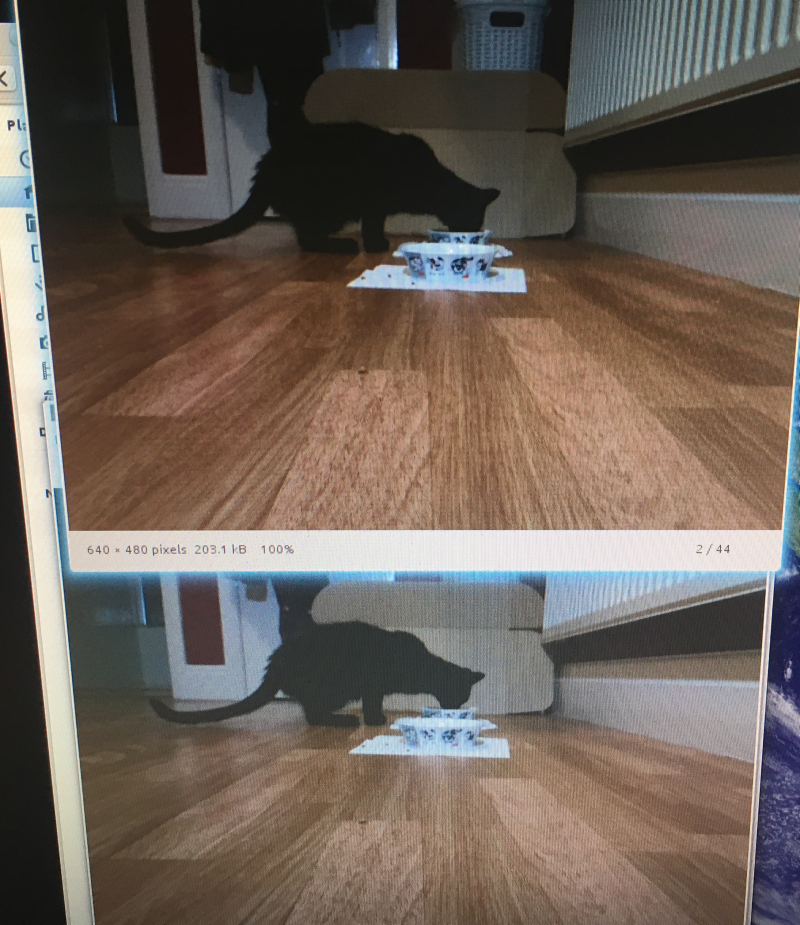

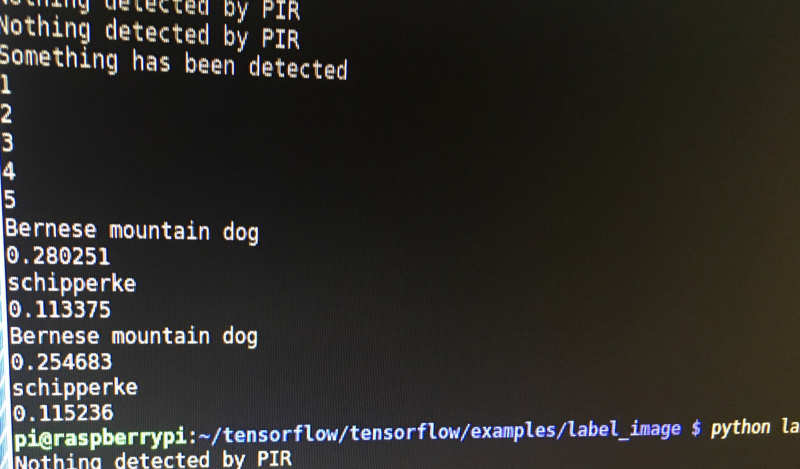

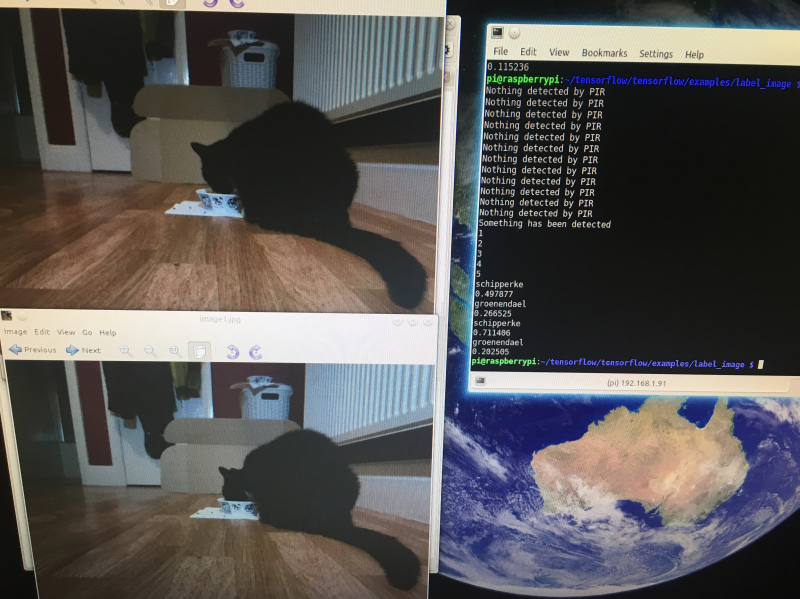

Here the cat-detector camera is elevated about 10cm off the floor. At least it got an animal this time. And LaLa's ears do resemble the shape of a miniature pinscher's ears, to be fair!

Here are some more results. Not very good really! The top guess was a Bernese mountain dog!

2. What can we do to improve the accuracy of our cat-detector?

Honestly, I don't think we can acquire much better images of the cats than these at their feeding station! They are not going to come and pose for the camera! So what can we do then?

As I was saying earlier, we can start working on our own cat-detector to detect individual cats (e.g. LaLa and Po). You can see how hard it would be based on the images above (Po is first, then LaLa). They look really similar! Maybe we could look at fixing a camera so it gets their faces when they are eating out of the bowl? That would be useful if we wanted to apply the cat-detector to entry control (i.e. cat doors) too!

So:

-- Fix camera so it can acquire images of cat faces when they are eating

-- Acquire up to 1000 cat face images for each cat. Well, let's get 200 each and go with that first. So just have a Pi taking images when the PIR gets triggered and label them LaLa or Po manually.

-- Then we can go about training a CNN (convolutional neural network) using some of the kernels from the Kaggle Dogs Vs. Cats Redux for inspiration -> https://www.kaggle.com/c/dogs-vs-cats-redux-kernels-edition/kernels

I know some of them use Keras e.g. https://blog.keras.io/building-powerful-image-classification-models-using-very-little-data.html

-- Then we can try out our new cat-detector!
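Before any training, the manually labelled images need splitting into training and validation sets. Here is a minimal sketch of that step. The function name `split_by_label`, the 20% validation fraction, and the `data/train/<label>/` and `data/validation/<label>/` layout are my assumptions (one sub-folder per class is a common layout for Keras-style directory loaders); it only plans the destination paths and does not move any files.

```python
import random


def split_by_label(files_by_label, val_fraction=0.2, seed=0):
    """Plan a train/validation split, returning {filename: destination path}.

    files_by_label maps a label (e.g. "LaLa") to a list of image filenames.
    The folder layout used here is an assumption, not something from the post.
    """
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    layout = {}
    for label, files in files_by_label.items():
        shuffled = sorted(files)
        rng.shuffle(shuffled)
        n_val = round(len(shuffled) * val_fraction)
        for i, name in enumerate(shuffled):
            subset = "validation" if i < n_val else "train"
            layout[name] = "data/{}/{}/{}".format(subset, label, name)
    return layout


# Hypothetical filenames: 200 images per cat, as planned above
files = {
    "LaLa": ["lala_{:03d}.jpg".format(i) for i in range(200)],
    "Po": ["po_{:03d}.jpg".format(i) for i in range(200)],
}
layout = split_by_label(files)
```

With 200 images per cat and a 20% validation fraction, that would leave 160 training and 40 validation images for each cat.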

3. Anyway, let's add code to tweet out the results from the cat-detector now!

Here's the general code we need to add:

from twython import Twython

C_KEY = ''

C_SECRET = ''

A_TOKEN = ''

A_SECRET = ''

twitter = Twython(C_KEY, C_SECRET, A_TOKEN, A_SECRET)

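#upload the image first, then attach its media_id to the status update

photo = open('image1.jpg', 'rb')

response = twitter.upload_media(media=photo)

photo.close()

#message is the status text, built from the detector results as described below

twitter.update_status(status=message, media_ids=[response['media_id']])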

You can obtain your C_KEY, C_SECRET, A_TOKEN, and A_SECRET from twitter. Here's how to do that: https://twython.readthedocs.io/en/latest/usage/starting_out.html

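So next we put our detector results in the message string variable (status=message). This needs to be defined before the update_status call. Note that top_result is a number, so it has to be converted to a string first:

message = "I have spotted: " + top_label + " (" + str(top_result) + ")"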
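Here is a small sketch of building that message as a helper function. The name `format_message` and the two-decimal rounding are my own choices, and I am assuming `top_result` is a confidence score that converts cleanly to a float.

```python
def format_message(top_label, top_result):
    """Build the tweet text from the detector's top label and its score."""
    # Assumption: top_result is a numeric confidence (plain or NumPy float)
    return "I have spotted: {} ({:.2f})".format(top_label, float(top_result))


message = format_message("tabby", 0.8731)
print(message)  # prints: I have spotted: tabby (0.87)
```

Rounding the score keeps the tweet short and avoids posting a long run of decimal places.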

Ok, so we are good to go with Twitter now! Now we could try the idea of asking Twitter users to add their own labels to the images. We could ask them to reply with #catdetector #lala if the image was of LaLa (large black and white cat), and ask them to reply with #catdetector #po if the image was of Po (small black cat)!

** so I will hang back on this next bit, and just try labelling them myself first, since I don't have a VPS ready/code ready.

The entire idea of this is to test the approach to supervised learning I envisaged for elephants. So in this case, I off-load the work of labelling images to Twitter users. They reply to cat images with the hashtags above. Then I associate these replies with the given cat images and store them in a database. Then, when I have enough, I will go ahead and retrain InceptionV3 with two new classes: PoCat and LaLaCat! I'll do some of it manually to start, then we can automate the entire process.

3. Getting the replies to our cat images!

So, I have only found out how to do this with Tweepy so far. But I'm sure we can do the same with Twython later. What we are doing is streaming realtime tweets, and filtering them for the hashtags we mentioned. Note that we don't do our data analysis on the Raspberry Pi, we do it on another machine, probably a virtual server! The steps are:

-- Create a stream listener

import tweepy

#override tweepy.StreamListener to add logic to on_status

#(this is the Tweepy 3.x API; StreamListener was merged into tweepy.Stream in Tweepy 4.0)

class MyStreamListener(tweepy.StreamListener):

    def on_status(self, status):

        print(status.text)

-- Create stream

#api is assumed to be an authenticated tweepy.API instance created beforehand

myStreamListener = MyStreamListener()

myStream = tweepy.Stream(auth=api.auth, listener=myStreamListener)

-- Start the stream with filters

myStream.filter(track=['#catdetector'])

See the Tweepy docs here: https://github.com/tweepy/tweepy/blob/master/docs/streaming_how_to.rst

4. Analysis of the tweets we get back

Ok, so we should be getting all the tweets coming in with the #catdetector hashtag on them! So the data we get for each tweet is as follows: https://developer.twitter.com/en/docs/tweets/data-dictionary/overview/tweet-object

So we need to analyse the tweets based on what we find in there:

in_reply_to_status_id (Int64), e.g. "in_reply_to_status_id":114749583439036416

So that will give us which cat image the tweet is replying to!

text (String), e.g. "text":" it was a cat and it was #Po #catdetector "

This is the actual UTF-8 text of the status update. So we can parse this string for the hashtag specifying the cat that was in the image!

So we go ahead and link each tweet to a specific cat image, then parse the string of the tweet status update to see if it contained #LaLa or #Po
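As a rough sketch of that parsing step, the function below takes a tweet as a plain dict with the two fields above and returns the ID of the image tweet plus the cat label. The names `parse_reply` and `KNOWN_CATS` are hypothetical, and so is the rule of ignoring replies that name no cat or both cats.

```python
import re

# Lower-cased hashtag -> label we want to store (hypothetical mapping)
KNOWN_CATS = {"lala": "LaLa", "po": "Po"}
HASHTAG_RE = re.compile(r"#(\w+)")


def parse_reply(tweet):
    """Return (image_tweet_id, cat_label), or None if the tweet is unusable."""
    replied_to = tweet.get("in_reply_to_status_id")
    if replied_to is None:
        return None  # not a reply, so we cannot link it to an image
    # Compare hashtags case-insensitively, so #Po and #po both count
    tags = {tag.lower() for tag in HASHTAG_RE.findall(tweet.get("text", ""))}
    if "catdetector" not in tags:
        return None
    cats = [KNOWN_CATS[tag] for tag in tags if tag in KNOWN_CATS]
    if len(cats) != 1:
        return None  # no cat named, or both named: too ambiguous to use
    return replied_to, cats[0]


example = {
    "in_reply_to_status_id": 114749583439036416,
    "text": " it was a cat and it was #Po #catdetector ",
}
print(parse_reply(example))  # prints: (114749583439036416, 'Po')
```

In the stream listener, the same function could be applied to the tweet data inside on_status, once it has been turned into a dict.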

5. Storing the data

Now we can put the data into a relational database like SQLite. We will be doing all this in real time, as we get the tweets from the stream and analyse them.

import dataset

db = dataset.connect("sqlite:///cat_id.db")

So what's going into the database?

We want:

- a link to the cat image that was in the tweet that twitter user replied to (from in_reply_to_status_id)

- how the twitter user labelled our cat (parsed from text i.e. the status update)
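To make those two fields concrete, here is a minimal sketch using Python's built-in sqlite3 module rather than the dataset library, just so it runs with no extra installs. The table name `labels`, the column names, and the helper functions are all my own assumptions. I also store the ID of the reply itself, so that the same reply cannot be counted twice.

```python
import sqlite3


def open_db(path=":memory:"):
    """Open the database and create the (hypothetical) labels table."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS labels ("
        " reply_id INTEGER PRIMARY KEY,"      # ID of the reply tweet
        " image_tweet_id INTEGER NOT NULL,"   # from in_reply_to_status_id
        " label TEXT NOT NULL)"               # e.g. 'LaLa' or 'Po'
    )
    return conn


def store_label(conn, reply_id, image_tweet_id, label):
    """Store one reply; a reply_id we have already seen is ignored."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR IGNORE INTO labels VALUES (?, ?, ?)",
            (reply_id, image_tweet_id, label),
        )


def label_counts(conn, image_tweet_id):
    """Return {label: number of replies} for one cat image."""
    rows = conn.execute(
        "SELECT label, COUNT(*) FROM labels"
        " WHERE image_tweet_id = ? GROUP BY label",
        (image_tweet_id,),
    )
    return dict(rows.fetchall())


conn = open_db()
store_label(conn, 1, 100, "Po")
store_label(conn, 2, 100, "Po")
store_label(conn, 3, 100, "LaLa")
counts = label_counts(conn, 100)
```

Counting the labels per image like this would let us see how far users agree on which cat it was before the image goes into a training set.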

----

Anyway, I am a long way from completing this bit! I got a lot of useful info from https://www.dataquest.io/blog/streaming-data-python/

Neil K. Sheridan
