For a machine to harvest a crop, it needs to be able to see and identify that crop. After putting together an algorithm to train a model, I found that the Raspberry Pi I'm using over remote desktop doesn't get along well with training. In fact, it crashes partway through. I decided it would be better to train the model on my laptop and run the trained model on the Raspberry Pi.

Python did not seem to be designed with Windows in mind, and SciPy turned out to be very difficult to install. I ended up installing WinPython 3.5.2, which has SciPy preinstalled, and it was easy to add TensorFlow and TFLearn after that.

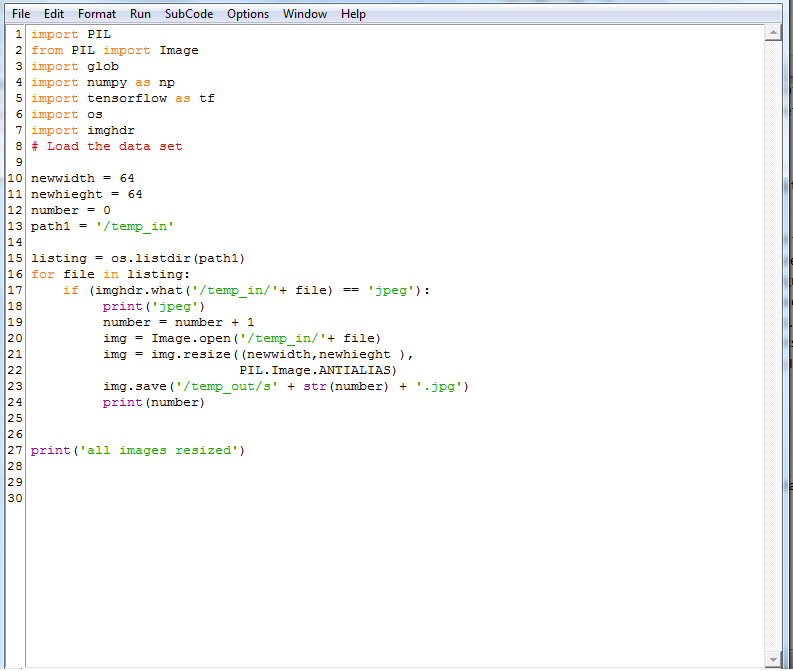

Next, I wanted to be able to use my own dataset. I decided a strawberry would be a good target to start with. It would be very tedious to search for and individually save the thousands of images needed to train a model. Luckily, I found a Google Chrome extension called Fatkun Batch Download Image, and it worked great for saving the images. Next, I needed to resize them all to the same size. I started with a model based on CIFAR-10, with 32x32x3 images. After testing, I had better luck with a larger image size of 64x64x3. I wrote a short program to resize the images for me. It not only resizes the images but also counts them as it goes, so I know how many images I have. After the strawberry images, I also loaded additional images, such as dogs, cats and birds, as “non-strawberry” images.
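The resize program itself is not reproduced in this log, so here is a minimal sketch of what a resize-and-count step could look like. It is not the original program: it assumes the Pillow library is installed, and the function name `resize_images` and the output file naming are made up for illustration.

```python
import os
from glob import glob

from PIL import Image  # Pillow; an assumption, the original tool is not shown


def resize_images(src_dir, dst_dir, size=64):
    """Resize every .jpg in src_dir to size x size RGB and save it in dst_dir.

    Returns the number of images written.
    """
    if not os.path.isdir(dst_dir):
        os.makedirs(dst_dir)
    count = 0
    for path in sorted(glob(os.path.join(src_dir, '*.jpg'))):
        try:
            with Image.open(path) as img:
                # Convert to RGB so greyscale or palette images get 3 channels
                resized = img.convert('RGB').resize((size, size))
        except (IOError, OSError):
            # Skip files that are not readable images
            print('skipped', path)
            continue
        # Hypothetical naming scheme: 00000.jpg, 00001.jpg, ...
        resized.save(os.path.join(dst_dir, '%05d.jpg' % count))
        count += 1
    return count
```

Calling something like `resize_images('strawberry_raw', 'strawberry_64_sm')` (both folder names are placeholders) would return the image count, which can then be printed.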

Below is the algorithm I’m using to train the model. It works but still needs some work. I loaded a few strawberry pictures in a test folder to test the model. It does identify most of the images as strawberries. It is a good start.
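For a sense of scale, the layer sizes of the network in the training script can be worked out by hand. The sketch below does that arithmetic in plain Python. It assumes what I understand to be TFLearn's defaults ('same' padding and stride 1 for `conv_2d`, and a stride equal to the kernel size for `max_pool_2d`). The helper names are made up for illustration and are not part of TFLearn. Dropout is left out because it has no weights and does not change the shape.

```python
def conv_same(shape, n_filters, k):
    """k x k convolution, 'same' padding, stride 1: height and width are kept."""
    h, w, c = shape
    params = k * k * c * n_filters + n_filters  # weights plus biases
    return (h, w, n_filters), params


def pool(shape, k):
    """Max pooling with stride k: height and width shrink by k (rounded up)."""
    h, w, c = shape
    return (-(-h // k), -(-w // k), c), 0


def dense(shape, n_units):
    """Fully-connected layer on the flattened input."""
    n_in = 1
    for d in shape:
        n_in *= d
    return (n_units,), n_in * n_units + n_units


def summarize(input_shape=(64, 64, 3)):
    """Walk the layers in the same order as the training script."""
    layers = [
        ('conv 32', lambda s: conv_same(s, 32, 3)),
        ('max pool', lambda s: pool(s, 2)),
        ('conv 64', lambda s: conv_same(s, 64, 3)),
        ('conv 64', lambda s: conv_same(s, 64, 3)),
        ('max pool', lambda s: pool(s, 2)),
        ('fc 1024', lambda s: dense(s, 1024)),
        ('fc 2', lambda s: dense(s, 2)),
    ]
    shape, total, rows = input_shape, 0, []
    for name, layer in layers:
        shape, params = layer(shape)
        total += params
        rows.append((name, shape, params))
    return rows, total


rows, total = summarize()
for name, shape, params in rows:
    print('%-10s %-16s %10d' % (name, shape, params))
print('total parameters:', total)
print('size as 32-bit floats (bytes):', total * 4)
```

Under these assumptions nearly all of the parameters sit in the first fully-connected layer, so shrinking that layer or adding another pooling step would be an obvious way to lighten the model for a small machine.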

from __future__ import division, print_function, absolute_import

import os
from glob import glob

import numpy as np
import scipy
from scipy.misc import imresize  # removed in SciPy 1.3; works with the older SciPy used here
from skimage import io  # io.imread reads each image file

try:
    from sklearn.model_selection import train_test_split  # scikit-learn 0.18 and later
except ImportError:
    from sklearn.cross_validation import train_test_split  # older scikit-learn releases

import tflearn
from tflearn.data_utils import shuffle, to_categorical
from tflearn.layers.core import input_data, dropout, fully_connected
from tflearn.layers.conv import conv_2d, max_pool_2d
from tflearn.layers.estimator import regression
from tflearn.data_preprocessing import ImagePreprocessing
from tflearn.data_augmentation import ImageAugmentation
from tflearn.metrics import Accuracy

# Load the data set
strawberry_files = sorted(glob('/strawberry_64_sm/*.jpg'))
nonstrawberry_files = sorted(glob('/non_strawberry/*.jpg'))
n_files = len(strawberry_files) + len(nonstrawberry_files)
print(n_files)

size_image = 64
allX = np.zeros((n_files, size_image, size_image, 3), dtype='float64')
ally = np.zeros(n_files)
count = 0

# Label 0 = strawberry
for f in strawberry_files:
    try:
        img = io.imread(f)
        new_img = imresize(img, (size_image, size_image, 3))
        allX[count] = np.array(new_img)
        ally[count] = 0
        count += 1
    except Exception:
        # Skip files that cannot be read or resized
        continue

# Label 1 = non-strawberry
for f in nonstrawberry_files:
    try:
        img = io.imread(f)
        new_img = imresize(img, (size_image, size_image, 3))
        allX[count] = np.array(new_img)
        ally[count] = 1
        count += 1
    except Exception:
        continue

# Drop the unused all-zero rows left behind by skipped files
allX = allX[:count]
ally = ally[:count]

# Test-train split: hold out 10% of the images for validation
X, X_test, Y, Y_test = train_test_split(allX, ally, test_size=0.1, random_state=42)

# One-hot encode the labels (two classes)
Y = to_categorical(Y, 2)
Y_test = to_categorical(Y_test, 2)

###################################
# Image transformations
###################################

# Normalisation of images
img_prep = ImagePreprocessing()
img_prep.add_featurewise_zero_center()
img_prep.add_featurewise_stdnorm()

# Create extra synthetic training data by flipping & rotating images
img_aug = ImageAugmentation()
img_aug.add_random_flip_leftright()
img_aug.add_random_rotation(max_angle=25.)

###################################
# Define the network architecture
###################################

# Input is a 64x64 image with 3 color channels
network = input_data(shape=[None, 64, 64, 3],
                     data_preprocessing=img_prep,
                     data_augmentation=img_aug)

print('step 1 start')
# Step 1: Convolution (32 filters, 3x3)
network = conv_2d(network, 32, 3, activation='relu')

print('step 2 start')
# Step 2: Max pooling
network = max_pool_2d(network, 2)

print('step 3 start')
# Step 3: Convolution (64 filters, 3x3)
network = conv_2d(network, 64, 3, activation='relu')

print('step 4 start')
# Step 4: Convolution (64 filters, 3x3)
network = conv_2d(network, 64, 3, activation='relu')

print('step 5 start')
# Step 5: Max pooling
network = max_pool_2d(network, 2)

print('step 6 start')
# Step 6: Fully-connected 1024 node neural network
network = fully_connected(network, 1024, activation='relu')

print('step 7 start')
# Step 7: Dropout
network = dropout(network, 0.5)

print('step 8 start')
# Step 8: Fully-connected neural network with two outputs
network = fully_connected(network, 2, activation='softmax')

# Tell tflearn how to train the network
acc = Accuracy(name="Accuracy")
network = regression(network, optimizer='adam',
                     loss='categorical_crossentropy',
                     learning_rate=0.001, metric=acc)

# Training
model = tflearn.DNN(network, checkpoint_path='strawberry.tflearn',
                    max_checkpoints=3, tensorboard_verbose=0)

# Train for 100 epochs
model.fit(X, Y, validation_set=(X_test, Y_test), batch_size=100,
          n_epoch=100, run_id='strawberry', snapshot_epoch=False, show_metric=True)

# Save the model's weights
model.save('strawberry')
print("Network trained and saved")

# Reload the weights to test the model
model.load('./strawberry')
print('strawberry model reloaded')

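# Load the test images and run the model against them
import scipy.misc
import scipy.ndimage  # imread and imresize were removed from later SciPy releases

for image in glob("/test_image/*.jpg"):
    print(image)
    img = scipy.ndimage.imread(image, mode="RGB")
    img = scipy.misc.imresize(img, (64, 64),
                              interp="bicubic").astype(np.float32, casting='unsafe')
    # Output is [P(strawberry), P(non-strawberry)], matching labels 0 and 1 above
    print(model.predict([img]))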
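The script prints the raw softmax output for each test image. Since strawberries were labelled 0 and everything else 1 during loading, a small helper can turn that output into a readable answer. This is just a sketch: `describe_prediction` and `LABELS` are hypothetical names, and the probabilities in the example are made up, not real results.

```python
# Index 0 and 1 match the labels assigned when the data set was loaded
LABELS = ('strawberry', 'non-strawberry')


def describe_prediction(probs, labels=LABELS):
    """Return (label, confidence) for one row of softmax output."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best], float(probs[best])


# Example with a made-up output row, not a real result from the model
label, confidence = describe_prediction([0.91, 0.09])
print(label, confidence)
```

In the test loop this could be used as `describe_prediction(model.predict([img])[0])`.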

Dennis

Discussions

I've tried using a PixyCam

http://charmedlabs.com/default/pixy-cmucam5/

But it is very lighting dependent so would suffer badly in strong sunlight. Also, it only recognises colours and not shapes at all.

Your solution is the way to go!

Thanks! I've been working with USB cams. Most do not do well in bright sunlight (they white out). I have 2 new cameras I got off of eBay for a dollar each. I've tested them in bright sunlight and so far so good. I'm also working on saving a few images as the model is identifying objects so the robot will retain its model during down times. In theory it should get better as time goes by.

Nice work!

Thanks! We can also use the same technology on the Weedinator!

That would be fantastic!
