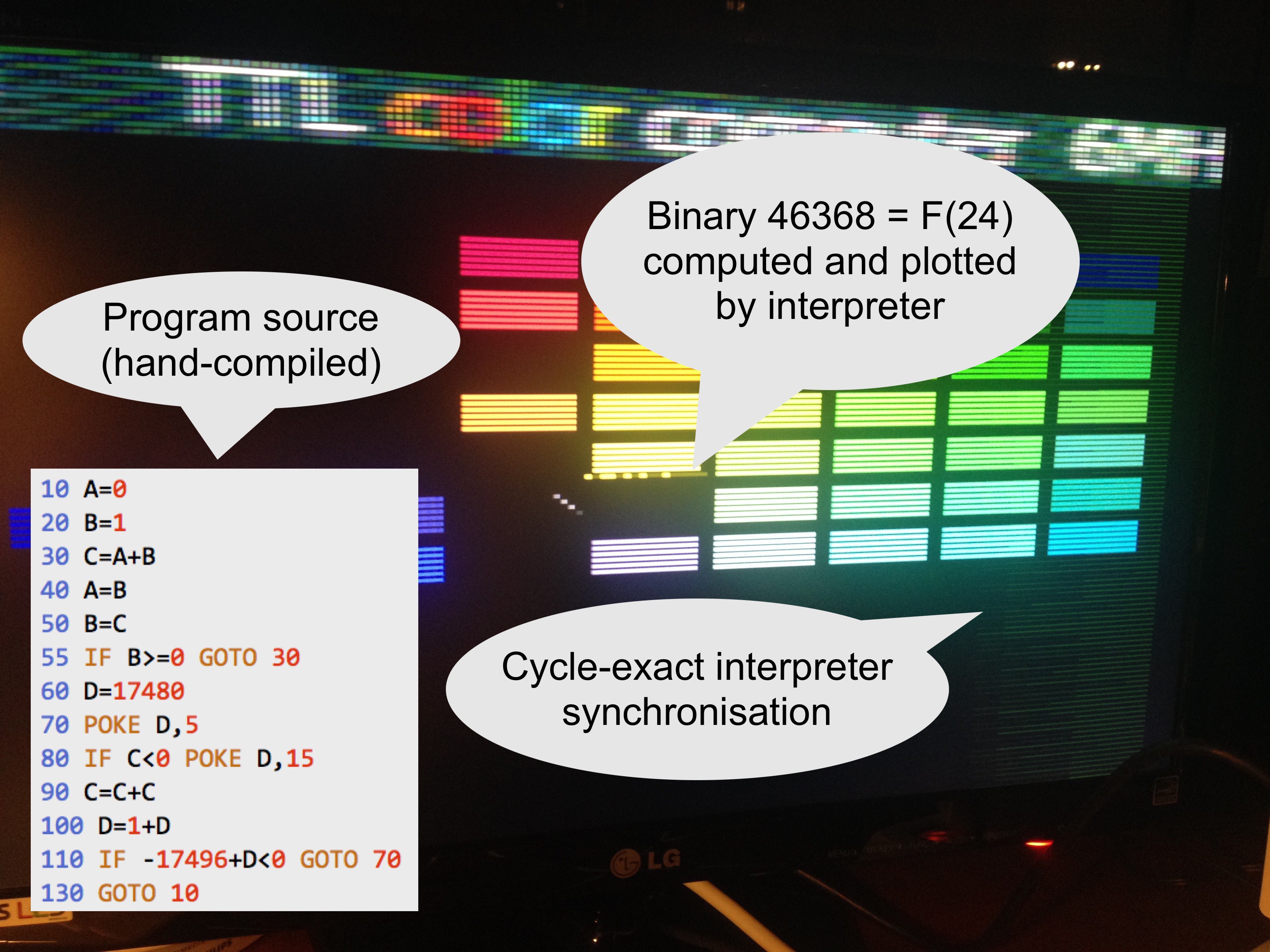

This photo captures the inner application interpreter's first sign of life. Very early this morning, or late last night, the virtual CPU ran its first program. It calculated the largest 16-bit Fibonacci number to be 1011010100100000 (46368 in decimal) and plotted it in the middle of the screen. The video loop was still playing the balls and bricks game, unaware of what was happening, although the ball sometimes bounces off the 16-bit result.
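To double-check the value on screen, here is a host-side sketch in C. It is not the BASIC program or the hand-compiled interpreter code, neither of which appears in this log. It simply iterates Fibonacci numbers in 32-bit arithmetic, keeps the last one that still fits in 16 bits, and formats it as a binary string. The function names `largest_fib16` and `to_binary16` are hypothetical.

```c
#include <stdint.h>

/* Return the largest Fibonacci number that fits in an unsigned 16-bit word. */
static uint16_t largest_fib16(void)
{
    uint32_t a = 0, b = 1;          /* two consecutive Fibonacci numbers */
    while (b <= UINT16_MAX) {       /* advance while b still fits in 16 bits */
        uint32_t next = a + b;
        a = b;
        b = next;
    }
    return (uint16_t)a;             /* a is the last value <= 65535 */
}

/* Write the 16 bits of v, most significant bit first, into buf (17 bytes). */
static void to_binary16(uint16_t v, char buf[17])
{
    for (int i = 0; i < 16; i++)
        buf[i] = (v & (0x8000u >> i)) ? '1' : '0';
    buf[16] = '\0';
}
```

`largest_fib16()` returns 46368, and `to_binary16` turns that into 1011010100100000, the pattern plotted on screen.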

The interpreter is the virtual CPU that makes it possible for mere mortals to write applications without worrying about the arcane video timing requirements.

For this test I hand-compiled the BASIC program into interpreter code and preloaded it into RAM. Shown here is the interpreter running during every 4th visible VGA scan line. It dispatches instructions and keeps track of their duration until it runs out of time before the next sync pulse. It can't stream pixels at the same time, so these lines render black. I don't mind the bonus retro look at all.
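The dispatch scheme described above is a time-sliced loop: keep executing virtual instructions while the remaining cycle budget still covers the slowest one, then hand control back. Below is a minimal C sketch of that idea. It assumes a hypothetical three-opcode virtual CPU (`OP_NOP`, `OP_INC`, `OP_ADDW`) with made-up costs inside the 14 to 28 cycle range; the real interpreter's opcodes, encoding and exact costs are not shown in this log.

```c
#include <stdint.h>

/* Hypothetical opcodes and cycle costs, for illustration only. */
enum { OP_NOP, OP_INC, OP_ADDW, OP_COUNT };
static const int op_cost[OP_COUNT] = { 14, 16, 28 };
#define MAX_COST 28   /* worst-case instruction length in this sketch */

typedef struct {
    uint16_t pc;      /* virtual program counter */
    uint16_t acc;     /* virtual 16-bit accumulator */
} VCpu;

/* Run instructions while at least MAX_COST cycles remain in the slot.
   Returns the leftover cycles, which the caller would burn before
   returning to the video loop. The program wraps around at len. */
static int run_slice(VCpu *cpu, const uint8_t *prog, uint16_t len, int budget)
{
    while (budget >= MAX_COST) {          /* room for even the slowest op? */
        uint8_t op = prog[cpu->pc];
        if (op >= OP_COUNT) op = OP_NOP;  /* treat unknown bytes as NOP */
        cpu->pc = (uint16_t)((cpu->pc + 1) % len);
        if (op == OP_INC) {
            cpu->acc++;
        } else if (op == OP_ADDW) {       /* operand is the next byte */
            cpu->acc = (uint16_t)(cpu->acc + prog[cpu->pc]);
            cpu->pc = (uint16_t)((cpu->pc + 1) % len);
        }
        budget -= op_cost[op];
    }
    return budget;
}
```

For example, the program `{OP_INC, OP_ADDW, 5}` with a 100-cycle budget executes INC, ADDW, INC, ADDW, leaves the accumulator at 12 and returns 12 unused cycles. Comparing against the worst-case cost is the conservative choice; a finer variant would check each instruction's own cost after decoding it.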

With this the system undergoes quite a metamorphosis:

- The TTL computer: 8-bit, planar RAM address space, RISC, Harvard architecture, ROM programmable

- The inner virtual CPU: 16-bit, (mostly) linear address space, CISC, von Neumann architecture, RAM programmable

A typical interpreter instruction takes between 14 and 28 clock cycles. The slowest is 'ADDW', the 16-bit addition. This timing includes advancing the (virtual) program counter and checking the elapsed time before the video logic must take back control. It also needs a couple of branches and operations to figure out the carry between the low byte and the high byte. That is the price you pay for not having a status register. But is that slow? Let's compare this with 16-bit addition on the MOS 6502, which looks like this:

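```asm
CLC        ; 2 cycles: clear carry before the low-byte add
LDA $10    ; 3 cycles: low byte of first operand (zero page)
ADC $20    ; 3 cycles: add low byte of second operand
STA $30    ; 3 cycles: store low byte of result
LDA $11    ; 3 cycles: high byte of first operand
ADC $21    ; 3 cycles: add high byte of second operand plus carry
STA $31    ; 3 cycles: store high byte of result
           ; total 20 cycles, or 20 µsec at 1 MHz
```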

The TTL computer executes its equivalent ADDW instruction in 28 cycles / 6.25 MHz ≈ 4.5 µsec.
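The carry problem mentioned earlier can be illustrated in C. Without a status register, the carry out of the low byte has to be reconstructed from the data itself. The sketch below uses one well-known bit trick: it derives the carry from bit 7 of both low bytes and of their truncated 8-bit sum. It only illustrates the idea and is not necessarily the branch sequence the ROM uses; `addw8` is a hypothetical name.

```c
#include <stdint.h>

/* 16-bit add built only from 8-bit operations, with no carry flag.
   The carry out of the low byte is recovered from bit 7 of the two
   operands and of their truncated 8-bit sum. */
static uint16_t addw8(uint16_t x, uint16_t y)
{
    uint8_t xl = x & 0xFF, yl = y & 0xFF;   /* low bytes */
    uint8_t xh = x >> 8,   yh = y >> 8;     /* high bytes */

    uint8_t lo = (uint8_t)(xl + yl);        /* 8-bit wraparound sum */

    /* Carry out of bit 7: both MSBs set, or exactly one set and the
       sum's MSB clear (meaning a carry came into bit 7). */
    uint8_t carry = (uint8_t)(((xl & yl) | ((xl | yl) & (uint8_t)~lo)) >> 7);

    uint8_t hi = (uint8_t)(xh + yh + carry);
    return (uint16_t)((hi << 8) | lo);
}
```

With 17711 and 28657 as inputs, the last Fibonacci step before the result shown on screen, `addw8` returns 46368.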

We should be able to get roughly 60k virtual instructions per second while the screen is active, or 300k per second with the screen off. So I believe the interpreter's raw speed is quite on par with the microprocessors of the day. The system itself of course loses effective speed because its hardware multiplexes the function of multiple components: CPU, video chip and audio chip are all handled by the same parts. To make things worse, the computer "wastes" most of its time redrawing every pixel row 3 times and maintaining a modern 60Hz frame rate. The PAL or NTSC signals of the day were 4 times less demanding than even the easiest VGA mode.
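The timing figures in the last few paragraphs are simple cycle arithmetic, reproduced in the small C sketch below. It assumes the 6502 runs at 1 MHz (which the 20 µsec figure implies) and an average of 21 cycles per virtual instruction, the midpoint of the 14 to 28 range. The 20% share of CPU time for the interpreter while the screen is active is back-solved from the 60k and 300k figures, not measured. The function names are hypothetical.

```c
/* Time for one operation in microseconds: cycles divided by clock in MHz. */
static double time_us(double cycles, double clock_mhz)
{
    return cycles / clock_mhz;
}

/* Virtual instructions per second, given the clock, an assumed average
   instruction length in cycles, and the fraction of time (0..1) that the
   interpreter gets to run. */
static double vinstr_per_sec(double clock_hz, double avg_cycles, double share)
{
    return clock_hz / avg_cycles * share;
}
```

`time_us(20, 1.0)` gives 20 µs for the 6502 sequence and `time_us(28, 6.25)` gives 4.48 µs for ADDW, roughly 4.5 times faster. `vinstr_per_sec(6.25e6, 21, 1.0)` comes to about 297,600 per second with the screen off, and with a share of 0.2 it drops to about 59,500.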

The next step on the software front is finding a good notation for the source language.

On the hardware side, there is some progress on a nice enclosure. I hope to have a preview soon.

Marcel van Kervinck
