Today, digital computers are everywhere: not only on our desktops, but also in the palm of our hand as smartphones, or behind the dashboards of our cars. However, this situation is quite recent: the first personal computers appeared in the late 1970s, mobile phones at the turn of the century, and smartphones only 10 years ago with the first iPhone. This acceleration is remarkable, given that computers themselves only appeared around WW II.

But as far back as history can be traced, humankind has used aids to represent or count items such as livestock or grain: first fingers, then stones, rods, the abacus and numerous other devices.

Other specialized devices (such as the astrolabe, the Antikythera mechanism or clock towers) were invented to compute astronomical positions or to aid navigation.

Eventually, more general purpose devices were built in order to perform arbitrary sets of arithmetic or logical operations, like slide rules or (electro-)mechanical calculators.

Although some of these machines (like Jacquard's loom, which used punched cards to control the pattern being woven) introduced the notion of programmability, they cannot be considered computers, since that programmability applied to machine tools and not to general arithmetic or logical operations. The term "computer" itself has been in use since the early 17th century, when it designated human computers: people performing long and tedious mathematical calculations.

The first general-purpose computer would have been the Analytical Engine, proposed by Charles Babbage in 1834:

Source: Bruno Barral (ByB) CC BY-SA 2.5, via Wikimedia Commons

But unfortunately, this computer was never completed.

Instead, at the turn of the 20th century, many computing machines were based on a direct mapping of values to continuous physical phenomena such as electrical, mechanical or hydraulic quantities, because this approach was less complex. However, because of limited measurement accuracy and the inherent random jitter added by various uncontrolled phenomena, processes could not be reliably repeated with exactly the same result on these analog computers.

In contrast, digital computers (from Latin digitus, finger) use sets of discrete symbols to represent values. Because of this indirect mapping, their results are repeatable and are not degraded by limited accuracy or random noise.
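The difference can be illustrated with a small simulation. This is only a sketch under assumed numbers: the function name `relay`, the 100 stages and the ±0.05 jitter are hypothetical choices made for the example, not measurements of any real machine. An "analog" value is copied through a chain of noisy stages and drifts, while a "digital" value is snapped back to the nearest symbol (0 or 1) at every stage and survives unchanged as long as the jitter stays below half the distance between symbols.

```python
import random


def relay(value, stages, noise, restore):
    """Copy a value through `stages` noisy steps (hypothetical model).

    When `restore` is True, the value is re-quantized to the nearest
    discrete symbol after every step, as a digital circuit would do.
    """
    for _ in range(stages):
        value += random.uniform(-noise, noise)  # uncontrolled jitter
        if restore:
            value = float(round(value))  # snap back to 0.0 or 1.0
    return value


random.seed(0)  # fixed seed so the run is repeatable

# Analog: the quantity itself carries the value, so errors accumulate.
analog = relay(0.7, stages=100, noise=0.05, restore=False)

# Digital: each symbol is regenerated at every stage, so errors do not accumulate.
bits = [1, 0, 1, 1]
digital = [relay(bit, stages=100, noise=0.05, restore=True) for bit in bits]

print("analog :", analog)   # close to 0.7, but not exactly 0.7
print("digital:", digital)  # exactly the original symbols
```

The digital chain stays exact only because the assumed jitter (0.05) is smaller than the decision margin (0.5); with larger noise, symbols would eventually flip as well.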

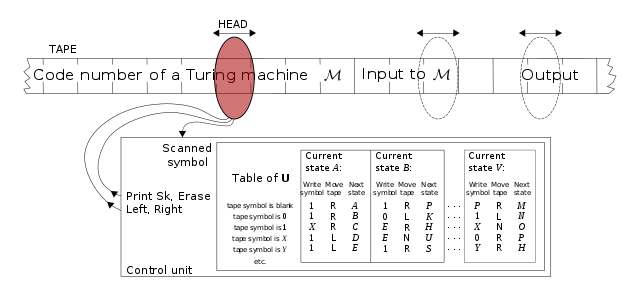

It was only in 1936, in his famous paper "On Computable Numbers, with an Application to the Entscheidungsproblem", that Alan Turing first described a class of digital computing machines now known as Turing machines. Although rather odd by today's standards, a Turing machine can be considered as having 3 distinct elements:

- an infinite tape, divided into a sequence of squares, each carrying a symbol from a finite alphabet

- a read/write head that can read the symbol contained in one single square of the tape at a time, write a new symbol there and move one square to the left or to the right

- a control element, which is a device with a finite number of internal states

At any given time, the next operation of the Turing machine is determined by:

- the control element's current state

- the symbol read by the read/write head

The operation consists of 3 parts:

- print a new symbol in the current square on the tape (it may be the same as the symbol just read)

- put the control element into a new state (which may be the same as the previous state)

- move the read/write head one square to the left or to the right

Before the machine starts, a finite part of the tape is filled with a starting sequence of symbols, the remainder of the tape is left blank (each square containing a special blank symbol), and the read/write head is placed at a particular starting square on the tape. The Turing machine then computes by following its rules of operation.
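The description above can be sketched in a few lines of Python. This is a minimal illustration, not Turing's own formalism: the names `run_turing_machine`, `INCREMENT` and `BLANK`, the `"halt"` state and the step limit are all assumptions introduced for the example. The rule table maps (current state, symbol read) to (symbol to print, head move, next state), which mirrors the three-part operation listed above, and the sample machine adds 1 to a binary number.

```python
from collections import defaultdict

BLANK = "_"  # assumed notation for the special blank symbol


def run_turing_machine(rules, tape, state, head=0, halt_state="halt",
                       max_steps=10_000):
    """Run a single-tape machine and return the non-blank part of the tape.

    `rules` maps (state, read_symbol) -> (write_symbol, move, next_state),
    where move is "L" or "R".
    """
    # A defaultdict stands in for the infinite tape:
    # any square never written to reads as blank.
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write                 # 1. print a symbol
        head += 1 if move == "R" else -1    # 3. move one square (2. was the state change)
        steps += 1
    used = [i for i, symbol in cells.items() if symbol != BLANK]
    if not used:
        return ""
    return "".join(cells[i] for i in range(min(used), max(used) + 1))


# Hypothetical example machine: binary increment.
# "right" walks to the end of the number, "carry" propagates the +1 leftwards.
INCREMENT = {
    ("right", "0"): ("0", "R", "right"),
    ("right", "1"): ("1", "R", "right"),
    ("right", BLANK): (BLANK, "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", BLANK): ("1", "L", "halt"),
}

print(run_turing_machine(INCREMENT, "1011", "right"))  # 1011 (11) + 1 -> 1100 (12)
```

The step limit is a practical safeguard only: an abstract Turing machine has no such bound and may run forever.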

Source: wvbailey (Own work) GFDL or CC BY-SA 3.0, via Wikimedia Commons

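Despite its singular construction, the Turing machine model captures for the first time the notions of:

- algorithm: a self-contained sequence of actions to be performed, encoded in the control element's internal states

- sequential memory, in the form of its infinite tape

- processor: the machine controls all data manipulation, just as the processor does in a digital computer

In his paper, Turing also demonstrated that it is possible to design a Universal Turing Machine (UTM) that can act like any particular Turing machine. A description of that machine, written in a certain code, is placed on the tape of the UTM together with the machine's starting sequence; the UTM then imitates the operation of the particular Turing machine. This reduces the problem of simulating every particular Turing machine to the problem of simulating a single UTM.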

In his paper, Turing also demonstrated that it is possible to design a Universal Turing Machine (UTM) that can act like any particular Turing machine when supplied with a description of that machine placed on the tape of the UTM using a certain code and starting sequence of the particular Turing machine, in which case the UTM will imitate the operation of the particular Turing machine. This reduces the problem of simulating all particular Turing machines to the problem of simulating only any UTM.

Source: By Cbuckley (Own work) GFDL or CC-BY-SA-3.0, via Wikimedia Commons

Thus, the UTM may be considered the first implementation of an interpretive routine or, alternatively, the first stored-program computer, where programs not only operate on numbers but are themselves represented as numbers.
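The idea that a machine's description is just data, and ultimately a number, can be sketched as follows. This is an illustration under loudly stated assumptions: the text encoding used here is made up for the example and is not the code Turing defined in his paper, the names `encode`, `decode`, `universal` and `FLIP` are hypothetical, and the interpreter is an ordinary Python function rather than a Turing machine itself. State names and symbols must not contain `,` or `;`, and the rule table must not be empty.

```python
def encode(rules):
    """Serialize a rule table to text, then to one (large) integer."""
    text = ";".join(",".join(key + value) for key, value in sorted(rules.items()))
    return int.from_bytes(text.encode("ascii"), "big")


def decode(number):
    """Recover the rule table from its integer description."""
    size = (number.bit_length() + 7) // 8
    text = number.to_bytes(size, "big").decode("ascii")
    rules = {}
    for entry in text.split(";"):
        state, read, write, move, next_state = entry.split(",")
        rules[(state, read)] = (write, move, next_state)
    return rules


def universal(description, tape, state, blank="_", halt_state="halt",
              max_steps=10_000):
    """One fixed interpreter that imitates whichever machine is described."""
    rules = decode(description)
    cells = dict(enumerate(tape))
    head = 0
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        write, move, state = rules[(state, cells.get(head, blank))]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    return "".join(cells[i] for i in sorted(cells)).strip(blank)


# Hypothetical example machine: invert every bit, halt at the first blank.
FLIP = {
    ("scan", "0"): ("1", "R", "scan"),
    ("scan", "1"): ("0", "R", "scan"),
    ("scan", "_"): ("_", "R", "halt"),
}

description = encode(FLIP)   # the "program" is now a single integer
print(universal(description, "1001", "scan"))
```

Passing a different integer to `universal` makes the same interpreter behave as a different machine, which is the sense in which one universal machine stands in for all particular ones.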

However, Turing machines are not limited to modeling computers; rather, they are intended to model abstract computation itself. For example, a Turing machine can perfectly well model a human carrying out a computation. Historically, computers, which compute only on their (fixed) internal storage, were developed only later. But apart from the limitations imposed by their finite memory, modern computers are said to be Turing-complete, i.e. their ability to execute algorithms is equivalent to that of a Universal Turing Machine.

In conclusion, modern computers differ from abstract Turing machines mainly in that they have only a finite memory capacity, but they share the ability to follow an algorithm using a processing unit and a memory that stores both data and instructions. For practical purposes, real-world computers also add data input/output capabilities in order to be useful for an application.

Squonk42
