The second and last post on computer history...

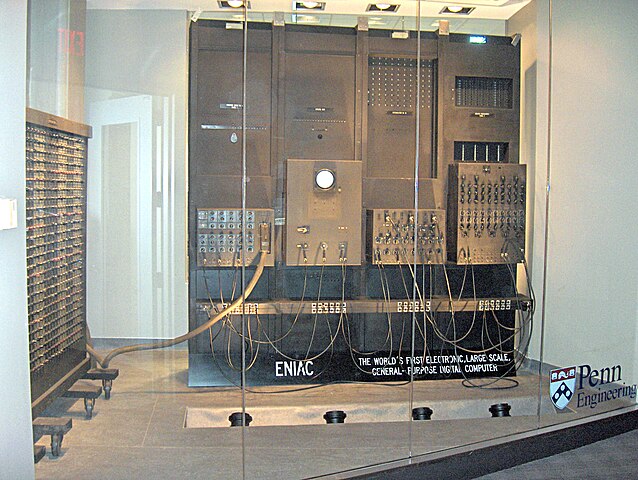

For a long time, the ENIAC was considered the first physical digital computer, but this view no longer holds.

Source: TexasDex at English Wikipedia, GFDL or CC-BY-SA-3.0, via Wikimedia Commons

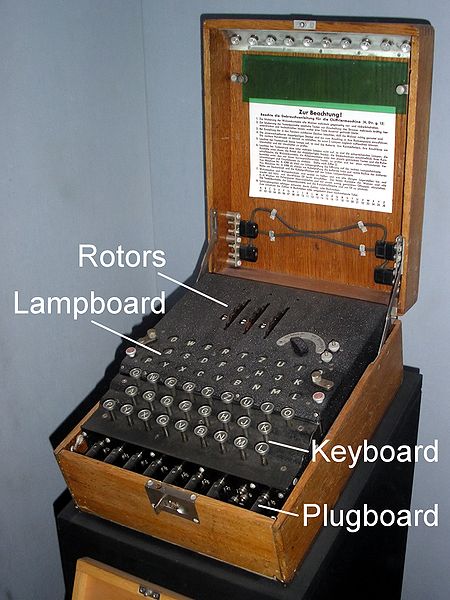

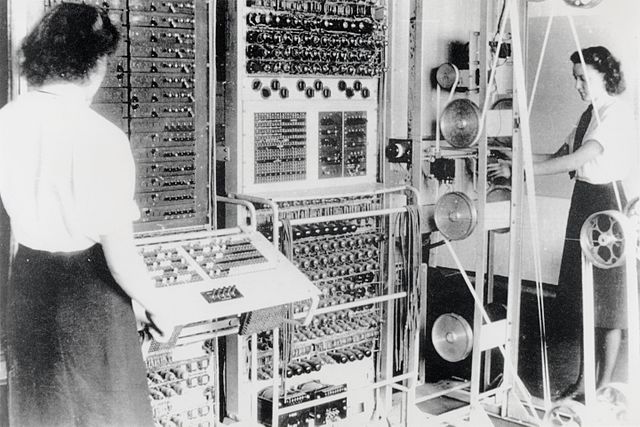

It was not until the 1970s that it was acknowledged that electronic computation had been used successfully during WWII by the Government Code and Cypher School (GC&CS) at Bletchley Park, where Turing played a major role by designing the electromechanical Bombe used to speed up the breaking of the German Enigma machine.

Source: Karsten Sperling, http://spiff.de/photo

And it was not until 1983 that details on the hardware of the Colossus I machine, used later during the war (February 1944), were declassified. Other details were kept secret until 1986, and even today some documents remain classified.

Source: See page for author [Public domain], via Wikimedia Commons

This machine was used to decipher messages from the Lorenz cipher machine used by the German High Command. Turing was involved at least through some of the methods used to perform wheel-breaking, and also by recommending Tommy Flowers (with whom he had worked on the Bombe) to Max Newman, if not through some other, as yet undocumented, involvement.

To add to this complexity, Turing also had contacts as early as 1935-1938, during his Princeton years, with John von Neumann, who later worked on the ENIAC and the EDVAC during his research for the first atomic bomb.

Source: See page for author [Public domain], via Wikimedia Commons

Because of the secrecy around the Bletchley Park operations, and later because of the revelation of Turing's homosexuality and his death, it is very difficult to find out the truth regarding the first physical digital computer: for a long time, heroes and wise generals on the battlefield were considered a better image of victory in WWII than mathematicians in a dark room...

F.H. Hinsley, official historian of GC&CS, has estimated that "the war in Europe was shortened by at least two years as a result of the signals intelligence operation carried out at Bletchley Park". But this may well be an underestimate: if you consider that the Lorenz cipher was routinely cracked in July 1942 (the first battle of El Alamein was 1-27 July 1942), when for the first time the German troops were defeated, and that the Mark I Colossus started its operation in February 1944 (just before D-Day), it may well be that it actually changed the course of the war.

Even worse, Turing's personal situation led to a strange rewriting of some parts of History: amnesia, minimization of his role, if not complete re-attribution of credit.

But neither the Colossus nor the ENIAC can actually be considered the first physical digital computer: both stored programs differently from numbers and, in this regard, were just massive electronic calculating machines.
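To make the stored-program distinction concrete, here is a minimal sketch of a toy machine in which instructions and numbers share a single memory. The opcodes (`LOAD`, `ADD`, `STORE`, `HALT`), the two-cell instruction layout and the `run` function are all invented for illustration; they are assumptions and do not reproduce the instruction set of any historical machine.

```python
# Hypothetical toy machine: opcodes and memory layout are invented for
# illustration and do not match any historical computer.
LOAD, ADD, STORE, HALT = range(4)


def run(memory, max_steps=1000):
    """Run a program that lives in the same list as the data it works on."""
    acc = 0  # single accumulator register
    pc = 0   # program counter: address of the next instruction
    for _ in range(max_steps):
        op, arg = memory[pc], memory[pc + 1]  # fetch opcode and operand address
        pc += 2
        if op == LOAD:
            acc = memory[arg]
        elif op == ADD:
            acc += memory[arg]
        elif op == STORE:
            memory[arg] = acc  # may overwrite data *or* an instruction
        elif op == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode {op}")
    raise RuntimeError("step limit reached")


# Cells 0-7 hold the program, cells 8-10 hold the numbers.
add_program = [LOAD, 8, ADD, 9, STORE, 10, HALT, 0, 20, 22, 0]
add_result = run(list(add_program))[10]

# Because instructions are ordinary numbers, a program can rewrite itself:
# the first STORE patches the operand of the ADD instruction (cell 5).
patching_program = [LOAD, 10, STORE, 5, ADD, 0, STORE, 12, HALT, 0, 11, 30, 0]
patched_result = run(list(patching_program))[12]

print(add_result, patched_result)
```

The second program only works because the machine fetches its instructions from writable memory. On a machine whose program is set up with plugs, switches or tape, that kind of self-modification, and more generally loading a new program as data, is not available.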

The first specification of an electronic stored-program general-purpose digital computer is von Neumann's "First Draft of a Report on the EDVAC" (dated 30 June 1945), which contained little engineering detail. It was followed shortly by Turing's "Proposal for Development in the Mathematics Division of an Automatic Computing Engine (ACE)" (dated October-December 1945), which provided a relatively more complete specification.

However, the EDVAC was only completed 6 years later (and not by von Neumann), and Turing left the National Physical Laboratory (NPL) in London in 1948, before a small pilot model of the Automatic Computing Engine was completed in May 1950. He joined Max Newman in September 1948 at the Royal Society Computing Machine Laboratory at Manchester University.

This is where the Manchester Small-Scale Experimental Machine (SSEM), nicknamed Baby, ran its first program on 21 June 1948 and became the world's first stored-program computer. It was built by Frederic C. Williams, Tom Kilburn and Geoff Tootill, who reported it in a letter to the journal Nature published in September 1948.

Source: By Parrot of Doom (Own work) [CC BY-SA 3.0 or GFDL], via Wikimedia Commons

This prototype machine quickly led to the construction of the more practical Manchester Mark 1 computer, which was operational in April 1949.

In turn, it led to the development of the Ferranti Mark 1 computer, considered the world's first commercially available general-purpose computer, in February 1951.

However, Turing's shadow still floats over the Manchester computers, since Williams is explicit concerning Turing's role and gives something of the flavor of the explanation that he and Kilburn received:

'Tom Kilburn and I knew nothing about computers, but a lot about circuits. Professor Newman and Mr A.M. Turing in the Mathematics Department knew a lot about computers and substantially nothing about electronics. They took us by the hand and explained how numbers could live in houses with addresses and how if they did they could be kept track of during a calculation.' (Williams, F.C. 'Early Computers at Manchester University' The Radio and Electronic Engineer, 45 (1975): 327-331, p. 328.)

Turing did not write the first program to run on the Manchester Baby, but the third, which carried out long division. He later developed an optimized algorithm to search for Mersenne primes, known as the "Mersenne Express", for the Manchester Mark 1 computer.
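The post does not describe how the "Mersenne Express" worked, so the following is not Turing's routine. It is only a minimal sketch of the standard Lucas-Lehmer test, the textbook way to check whether 2^p - 1 is prime, to give an idea of the kind of computation involved. The function names and the search bound of 130 are assumptions chosen for illustration.

```python
def is_prime(n: int) -> bool:
    """Trial division, good enough for the small exponents tried here."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def is_mersenne_prime(p: int) -> bool:
    """Lucas-Lehmer test: decide whether 2**p - 1 is prime, for a prime p."""
    if p == 2:
        return True  # 2**2 - 1 = 3 is prime; the recurrence needs odd p
    m = (1 << p) - 1  # the Mersenne number 2**p - 1
    s = 4
    for _ in range(p - 2):
        s = (s * s - 2) % m  # s_(k+1) = s_k**2 - 2 (mod m)
    return s == 0


# Exponents p below an arbitrary bound for which 2**p - 1 is prime.
mersenne_exponents = [p for p in range(2, 130)
                      if is_prime(p) and is_mersenne_prime(p)]
print(mersenne_exponents)
```

The appeal of this test on an early machine is that it needs only squaring, subtraction and a remainder, repeated p - 2 times, on numbers about as large as 2^p - 1 itself.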

Squonk42

Discussions

Court rulings are not generally used by historians to establish scientific precedence. This is covered in the IEEE paper "What Does It Mean To Be The First Computer?". I liked this paper; it is by Michael R. Williams. He has some other papers on computer history, and perhaps he can send you a copy. The paper really should be outside of the IEEE paywall, I think, and as an IEEE past president the author might have some pull for getting it released.

The same sort of situation is happening today in biology with the CRISPR patents. The scientific precedence is different from the result of the patent litigation because of legal details.

Yes, I don't like scientific publications that are behind a paywall either; I think it goes against the prevalent concept of sharing knowledge. Not that I am against paying a fee, but the amounts generally requested are way too high: I really feel like I am paying for their cocktail crackers.

The situation for CRISPR looks even worse, as what is patented are the principles of life.

As for our comparatively innocuous computers, I think that the stored-program condition should prevail, and everything below it considered as just glorified (huge) automatic calculators.

I am neither a lawyer nor a historian, but as an engineer I just try to understand the roots of computer science as clearly as possible. Being neither an American nor a British (or German) citizen, and having no acquaintance with any of the players or any of their ideas outside the computer field, I try to stay on purely technical grounds.

Nevertheless, I must admit that discovering these early machines is really interesting, not only from a theoretical and technological perspective, but also for the historical context around them.

Following the "first to publish" rule, Turing must be credited for his independent solution to the mathematical "decidability problem", based on the notion of computable numbers and a notional computing machine known today as the Turing machine. In the same seminal 1937 paper, Turing was also the first to publish a machine where instructions are stored as data, with his universal Turing machine.

However, because of the secrecy around cryptography during WWII and classified research on the atomic bomb, as well as Turing's personal situation, the "first to publish" rule is difficult to apply: Turing's ACE report of 1946 was only formally published in... 1986! (A.M. Turing's ACE Report of 1946 and Other Papers, edited by B.E. Carpenter and R.W. Doran, MIT Press, 1986, ISBN 0-262-03114-0).

Von Neumann should probably be credited for the concept of Random Access Memory in his EDVAC paper, although this "First Draft" report was never formally published either. It also raised a lot of controversy, because the respective roles of von Neumann, ENIAC inventors John Mauchly and J. Presper Eckert, and maybe others at the University of Pennsylvania's Moore School of Electrical Engineering are not clearly specified.

The publication order is therefore difficult to follow: even though formally unpublished, these papers circulated among a group of early Anglo-American mathematicians and electronic engineers who largely shared ideas, as can be observed between the EDVAC and ACE papers.

Thus, the "first to run" rule seems in this case more appropriate for early computers, as this can be traced back more easily. It is also a proof that the concept is indeed applicable and working.

AFAICT, the flip-flop was invented by William Henry Eccles and Frank Wilfred Jordan, in the patent "Improvements in ionic relays" British patent number: GB 148582 (filed: 21 June 1918; published: 5 August 1920) https://worldwide.espacenet.com/publicationDetails/originalDocument?CC=GB&NR=148582&KC=&FT=E.

Apparently, the AND-gate was invented by Nikola Tesla: "System of Signaling" https://teslauniverse.com/nikola-tesla/patents/us-patent-725605-system-signaling and "Method of Signaling" https://teslauniverse.com/nikola-tesla/patents/us-patent-723188-method-signaling, filed 16 July 1900, published 14 April 1903 and 17 March 1903, respectively. However, during the review period, the US Patent Office told Tesla that another patent application for a similar concept had been received from Reginald Fessenden, but Tesla's claims were eventually supported.

But a gate or flip-flop does not make a calculator, and not quite a computer...

I found a good reference "What Does It Mean To Be The First Computer?" but it is behind a paywall. Here are a few quotes of interest:

"Historians are not known for providing definitive answers and, when faced with a particular machine will never call it the first, but will always hedge their bets by saying something like:

Project yyyy was the first mechanical, analog, automatic, non-programmable, fully operational, calculating machine available in country xxxxx.

If you add enough adjectives, anything can be described as being first. However such an answer (which usually avoids even using the term computer) will seldom satisfy any particular group of people who would really like their machine to be called first."

And later...

"I think it is fair to say that most people would equate a computer to a device that actually had a stored program as its control mechanism. That is the one major design factor that allows the modern computer to perform such a varied set of tasks with little or no time being spent in reconfiguring the control system. The origin of the stored program concept is quite clear – it was from the ENIAC group at the Moore School. What is not clear is who actually came up with the idea in the first place. The usual name associated with it is John von Neumann. Although von Neumann made many contributions to the organization and development of computers, he is certainly not the person who originated the stored program concept – it was being discussed, and even written about, before von Neumann became involved."

The first published paper I found of the type I was thinking about is here: The Electronic Numerical Integrator and Computer (ENIAC), H. H. Goldstine and Adele Goldstine, Mathematical Tables and Other Aids to Computation, Vol. 2, No. 15 (Jul., 1946), pp. 97-110.

ENIAC was put through its paces for the press in 1946 by the US authorities as the first computer (I even read that it was used during WWII to decode German ciphers, which is just plain wrong!). This situation lasted until the Honeywell, Inc. v. Sperry Rand Corp., et al. 180 USPQ 673 (D. Minn. 1973) case, where the court invalidated U.S. Patent 3,120,606 for ENIAC, applied for in 1947 and granted only in 1964. The ruling put the invention of the electronic digital computer in the public domain while at the same time providing legal recognition to Atanasoff-Berry as the inventors of the first electronic digital computer. Fortunately, both Pascal's calculator and Babbage's analytical engine were invented before patents even existed, or they could have rewound History until then :-)

The precedence of the ENIAC as the first computer has been further challenged since the existence of the Colossus I machine was disclosed in 1976, with the Zuse Z3 computer adding yet another contender...

But from a strict engineering point of view, the Atanasoff-Berry machine was not even Turing complete, and the Colossi, Zuse Z3 and ENIAC were not stored-program computers (at least not until 1948 for the ENIAC, when it was improved by adding a primitive read-only stored programming mechanism).

Since Turing proved in 1936 that his "universal computing machine" would be capable of performing any conceivable mathematical computation if it were representable as an algorithm, stored-program capability should be considered as the minimum condition for a machine to be considered as a computer.

Thus:

In any case, the ENIAC cannot be considered as the first computer, at least since 1973.

The tradition of the priority rule in science gives credit for "first" to "first to publish" and not "first to build", "first to deploy" or "first to sell". It would be great to have PDFs of the first publications describing each of the original general-purpose computers. I would like to read them in published order and decide for myself!

The priority rule makes it look more like PhD scientists invent things instead of engineers or mathematicians, though. Which was the case for computers?

Before that, who published the first reliable gate and flip-flop schematics? What was different about them?

Actually, everything since the 1945 EDVAC and ACE designs (pipelining, parallelism, cache, virtual memory and vector processing) has been a technological rather than a fundamental revolution. As for the RISC architecture, the ACE design, with its 11 simple instructions and 32 orthogonal registers, is not far from RISC principles.

This is a fascinating era... But I'm happy we're now far from it!
