The first motivation that predated the early computers was to automate arithmetic calculation algorithms, so it is no wonder if an arithmetic unit is at the heart of the processor and the computer itself.

But besides calculation, algorithms can also perform data processing (including validation, sorting, summarization, aggregation, analysis, reporting, classification, etc.) and automated reasoning, which both require formal mathematical logic instead of ambiguous rhetoric, syllogism or philosophy in order to be fully automated.

This is why the arithmetic unit is always completed with a logic unit to form the Arithmetic and Logic Unit (ALU) as the central part of the processor and computer.

Arithmetic

Formally, arithmetic is the branch of mathematics that studies numbers, and especially the properties of the traditional operations between them—addition, subtraction, multiplication and division. But of course, this greatly depends on how the number are represented. Even if today the main numeral system is the Hindu–Arabic one, this has not always been the case.

There have been numeral systems using 2, 3, 4, 5, 6, 8, 10, 12, 16, 20 or 60 as a base. BTW, duodecimal (base 12) is still used today for counting hours and in music, whereas sexagesimal (base 60) is also used for measuring time, angles and geographic coordinates, mainly because of their superior highly composite number property, that makes them a more convenient number system for computing fractions than most other number systems.

But only considering the common decimal (base 10) numeral system, the positional notation where each symbol represents an order of magnitude ("ones place", "tens place", "hundreds place", etc.) was only introduced in Europe by Leonardo Fibonacci in 1202 in his Liber Abaci, but is in wide use only since much later (since the middle of the 16th century), replacing the Roman numeral system (which is still used today in certain contexts such as years) because of its easier arithmetic rules.

If handling digits having 10 possible symbols or values is still possible using mechanical devices with wheels, gears and teeth or cogs, this approach is difficult to transpose to electro-mechanical relays (their contact can only be in 2 different states: open or closed) or fully electronic vacuum tubes or solid-state transistors. Even if vacuum tubes and transistors can be used in analog circuits to represent an infinity of continuous values, we already saw in Part 1 that digital computing was a much better solution.

The idea of using 2 bands of analog values as far apart as possible from one another to provide the best immunity against glitches leads to consider using a binary (base 2) numeral system with positional notation, as devised by Gottfried Wilhelm Leibniz in "Explication de l’arithmétique binaire, qui se sert des seuls caractères O et I avec des remarques sur son utilité et sur ce qu’elle donne le sens des anciennes figures chinoises de Fohy" in 1703 (translation into English here).

Except for a few esoteric machines, all computers today are using binary numbers extensively, although not all arithmetic operations are supported in hardware, as the most complex ones (multiplication, division and square root) can be carried out by a software algorithm using additions/subtractions and left/right number positional shifts.

The positional left shift on a binary number is equivalent to adding a number to itself, whereas the positional right shift on a binary number can (expensively) be obtained by repeatedly incrementing a counter once while another counter is incremented twice and matched against the binary number to shift positionally right. Thus, left/right number positional shifts can be obtained from additions only and are not strictly required to perform all arithmetic operations.

And because of the general rules of arithmetic, all operations on numbers can be decomposed into a series of operations on 2 numbers called operands, producing a single result.

Moreover, as the method of complements which was used in many mechanical calculators as an alternative to running the gears backwards can be transposed to other digital techniques to perform subtraction, implementing an arithmetic unit can thus be limited to having a 2-operand binary number subtractor only, as x+y can be computed as -(-x-y)!

Logic

Whereas arithmetic deals with specified numbers, algebra introduces quantities without fixed values, known as variables.

Formally, the Boolean algebra is a branch of algebra in which the values of variables are the truth values true and false, as formalized by George Boole in his two famous books "The Mathematical Analysis of Logic" (1847) and "An Investigation of the Laws of Thought on Which are Founded the Mathematical Theories of Logic and Probabilities" (1854).. It is a symbolic method of investigating logical relationships (or propositions) where no limitation has been placed on the number of elements. From Edward Huntington’s postulates (fourth set, given on page 280) published in 1933, it can be demonstrated that in every Boolean algebra there are two specific elements: the identity elements. Hence, any Boolean algebra has at least two elements.

In his 1937 paper "A Symbolic Analysis of Relay and Switching Circuits", Claude Shannon implemented a two-element Boolean algebra with a circuit of switches, where a switch is defined a device that can be placed in either one of two stable positions: off or on. These positions can just as well be designated 0 and 1 (or the reverse). For this reason, the two-element Boolean algebra has been called switching algebra. Basically, his paper demonstrates that this two-valued switching algebra is isomorphic with propositional logic as defined by George Boole. Hence, whatever terminology, operations, and techniques are used in logic can be applied to Boolean algebra, and vice versa, to the point that today switching algebra and Boolean algebra are often used interchangeably.

It is thus perfectly valid to use a binary digit to represent a logic condition, and use the Boolean algebra to perform logic operations that are part of algorithms.

Bitwise Operations

As just demonstrated above, a single binary digit or bit can be used to represent a logic condition, and multiple bits can be used to represent a binary number. But multiple bits can also be considered as a collection of single bits at the level of their individual bits, on which the Boolean (or switching) algebra applies too. Such Boolean operations on collections of bits (NOT, AND, OR, XOR) are called bitwise operations, and these are often completed by other operations on these sets of bits in the form of positional shifts or rotations, which we found previously useful for some arithmetic operations.

Switching algebra was introduced in terms of two binary operations (AND and OR) and one unary operation (NOT). Every switching expression is solely made up of variables connected by various combinations of these three operators, which leads to the concept of functionally complete or universal set of Boolean operations. It follows that the set of operations {AND, OR, NOT} is universal. It can be demonstrated that the reduced sets [AND, NOT] and [OR, NOT] are universal, too. Going further, the reduced sets [NAND] and [NOR] are universal, meaning that the only required Boolean operations that are necessary to implement all Boolean operations are the NAND or NOR operations. However, as bitwise operations are not difficult to implement in hardware and are often used in computations, a broader set of operations ( generally [NOT, AND, OR, XOR]) is available in all modern processors for optimization purposes.

Symbols and Characters

Additionally, multiple bits may be used to represent a symbol or a text character for example, but it is then purely a usage convention based on e a specific encoding (such as ASCII or UTF-8) and does not involve any particular calculation or interpretation from the point of view of the processor.

Putting it all together

So far, so good: an ALU could be implemented with only an n-bit subtractor and either NAND or NOR logic operations.

Actually, we could optimize it further to only an n-bit subtractor and a NOT logic operation if we do not consider bitwise-operations, and we would obtain an ALU similar to the one used in h the first computer, the Manchester Mark I, or to the ongoing 8-bit TTL CPU Hackaday project Hackaday project!

Being even more extreme, we could argue that the n-bit subtractor could be implemented from logic operations (refer to the last example in Shannon's paper above) and the ALU could then be limited to a succession of NAND or NOR logic operations.

Then, as a NAND or NOR logic operation can be simulated by addressing 4 consecutive memory locations containing the equivalent truth table (1, 1, 1, 0) or (1, 0, 0, 0), the ALU could then be limited to... a single memory register, as demonstrated by Raùl Rojas in his 2010 paper "The smallest Arithmetic Logic Unit"!

Although this is an interesting result for abstract machine theory, real-world computer implement a larger set of hardware primitive functions to obtain a better efficiency:

- CLR: clear all bits

- ADD: arithmetic addition

- SUB: arithmetic subtraction

- NOT: 1's complement bitwise logic negation

- AND: bitwise logical and

- OR: bitwise logical or

- XOR: bitwise logical exclusive or

- at least one of ASL or LSL: arithmetic or logical bitwise shift left

- at least one of ASR or LSR: :arithmetic or logical bitwise shift right

This primitive operation set is often completed by derived operations, such as:

- NEG: arithmetic 2's complement, i.e. performing NOT and ADDing 1

- INC: arithmetic increment by 1

- DEC: arithmetic decrement by 1

- ROL: logical bitwise rotate left

- ROR: logical bitwise rotate right

Very often, arithmetic and rotations operations are derived in variants that include an input carry bit:

- ADC: arithmetic addition with carry

- SBC: arithmetic subtraction with carry (or borrow)

- RLC: logical bitwise rotate left through carry

- RRC: logical bitwise rotate right through carry

At the expense of a large increase in complexity, modern processor also include hardware-based signed and unsigned multiplication and division operations, and processing of non-integer numbers using floating -point arithmetic in a specialized hardware floating-point unit (FPU).

For our educational purpose, we will limit ourselves to a simple n-bit integer ALU only without hardware-based arithmetic multiplication or division that can be emulated in software.

Condition Flags

In order to give algorithms the ability to behave differently, based on the result of previous calculations, the ALU must at least provide a single-bit storage (or flag) for a simple logic condition.

Theoretically, only a single condition is necessary, and the most obvious condition is nullity, i.e. a condition obtained when the last arithmetic calculation resulted in a null (zero) result. It is very common to call this condition flag Z, and to add some additional significant ones:

- Z: the last arithmetic operation resulted in a null (zero) result

- N: the last arithmetic operation resulted in a negative result (i.e. a value with its most significant bit set to 1)

- C: the last arithmetic operation generated an output carry

- V: the last arithmetic operation generated a signed number overflow (i.e. when the carry input and output for the most significant bit is different, resulting in a 2's complement wrap around)

Whenever applicable, these flags are often updated for logical bitwise operations, in order for algorithms to test bitwise conditions too.

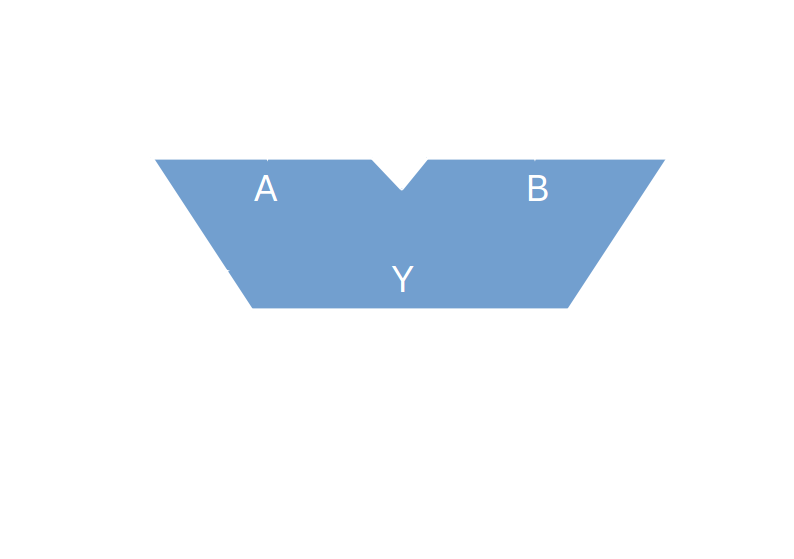

As the ALU takes 2 integer operands and produces 1 single integer result, with the operation to perform encoded into an opcode, and that it also depends on input status flags and generates some output status flags, it is often represented as a V-shaped block like this:

Squonk42

Squonk42

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.