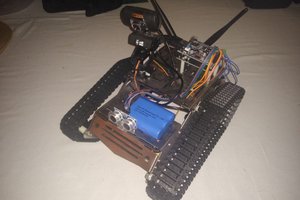

To control the robot I use my L1VM together with some libraries written in C. The main control program is written in Brackets, my own programming language.

The motor driver is connected to the serial port of the Raspberry Pi.

With OpenCV, the two webcam pictures are scanned for matching features that appear in both. A nearby object appears at a different position in each picture. The control program uses this difference (the disparity) to calculate the object's distance. The goal is to avoid driving into obstacles.
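The general principle behind this is the standard stereo-vision relation. With rectified images from two cameras mounted side by side, the distance Z to a point is Z = f * B / d. Here f is the focal length in pixels, B is the baseline (the distance between the camera centres), and d is the disparity, meaning the difference in x position of the same keypoint in the two images. Below is a minimal C sketch that assumes rectified images. The function name `stereo_distance_mm` and its parameters are hypothetical. This is not the author's code, since the author uses a calibration instead (see the reply below).

```c
#include <assert.h>

/* Pinhole stereo model (sketch, assumes rectified side-by-side cameras):
 *   Z = focal_px * baseline_mm / disparity
 * focal_px    : focal length in pixels (assumed equal for both cameras)
 * baseline_mm : distance between the two camera centres in mm
 * x_left_px, x_right_px : x coordinate of the same keypoint in each image
 * Returns the distance in mm, or -1.0 when the disparity is not positive
 * (point at infinity or a bad keypoint match). */
double stereo_distance_mm(double focal_px, double baseline_mm,
                          double x_left_px, double x_right_px)
{
    assert(focal_px > 0.0 && baseline_mm > 0.0);

    /* A nearer object shifts further between the two pictures. */
    double disparity = x_left_px - x_right_px;
    if (disparity <= 0.0)
        return -1.0;

    return focal_px * baseline_mm / disparity;
}
```

Take some illustrative numbers (not measured values): a focal length of 700 px, a 60 mm baseline, and a keypoint at x = 420 px in the left image and x = 210 px in the right. With these, `stereo_distance_mm(700.0, 60.0, 420.0, 210.0)` returns 200 mm.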

jay-t

BTom

Petar Crnjak

Sinclair Gurny

Yes, I use the OpenCV library. It provides an algorithm to find keypoints in both pictures. The function that calculates the distances is my own code. I used a "calibration" method to calculate distances from 50 mm up to 250 mm. Everything farther away is filtered out. I may extend the calibration to greater distances later.
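A calibration like the one described can be sketched as a lookup table of measured (disparity, distance) pairs, with linear interpolation between neighbouring entries. This is a minimal sketch under several assumptions. The `CalibPoint` type, the `calibrated_distance_mm` function and the table values are hypothetical placeholders, not the author's measurements. Rejecting disparities outside the table (nearer than 50 mm as well as farther than 250 mm) is also an assumption here.

```c
#include <assert.h>
#include <stddef.h>

/* One calibration point: disparity in pixels measured at a known distance in mm. */
typedef struct {
    double disparity_px;
    double distance_mm;
} CalibPoint;

/* PLACEHOLDER table, sorted by decreasing disparity (increasing distance).
 * Illustrative values only; replace them with your own measurements. */
static const CalibPoint calib[] = {
    {240.0,  50.0},
    {120.0, 100.0},
    { 80.0, 150.0},
    { 60.0, 200.0},
    { 48.0, 250.0},
};

/* Linear interpolation between neighbouring calibration points.
 * Returns -1.0 if the disparity lies outside the calibrated range,
 * i.e. the object is nearer than the first or farther than the last entry. */
double calibrated_distance_mm(const CalibPoint *table, size_t n, double disparity_px)
{
    assert(n >= 2);

    /* Filter out anything outside the calibrated range. */
    if (disparity_px > table[0].disparity_px ||
        disparity_px < table[n - 1].disparity_px)
        return -1.0;

    for (size_t i = 0; i + 1 < n; i++) {
        const CalibPoint *a = &table[i];
        const CalibPoint *b = &table[i + 1];
        if (disparity_px <= a->disparity_px && disparity_px >= b->disparity_px) {
            /* t = 0 at point a, t = 1 at point b */
            double t = (a->disparity_px - disparity_px) /
                       (a->disparity_px - b->disparity_px);
            return a->distance_mm + t * (b->distance_mm - a->distance_mm);
        }
    }
    return -1.0; /* not reached for a correctly sorted table */
}
```

Returning -1.0 as a sentinel keeps the filtering step simple: the control program can skip any keypoint for which the lookup fails. With the placeholder table, a disparity of 100 px falls halfway between the 100 mm and 150 mm entries and gives 125 mm.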