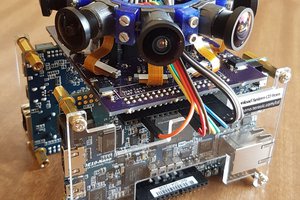

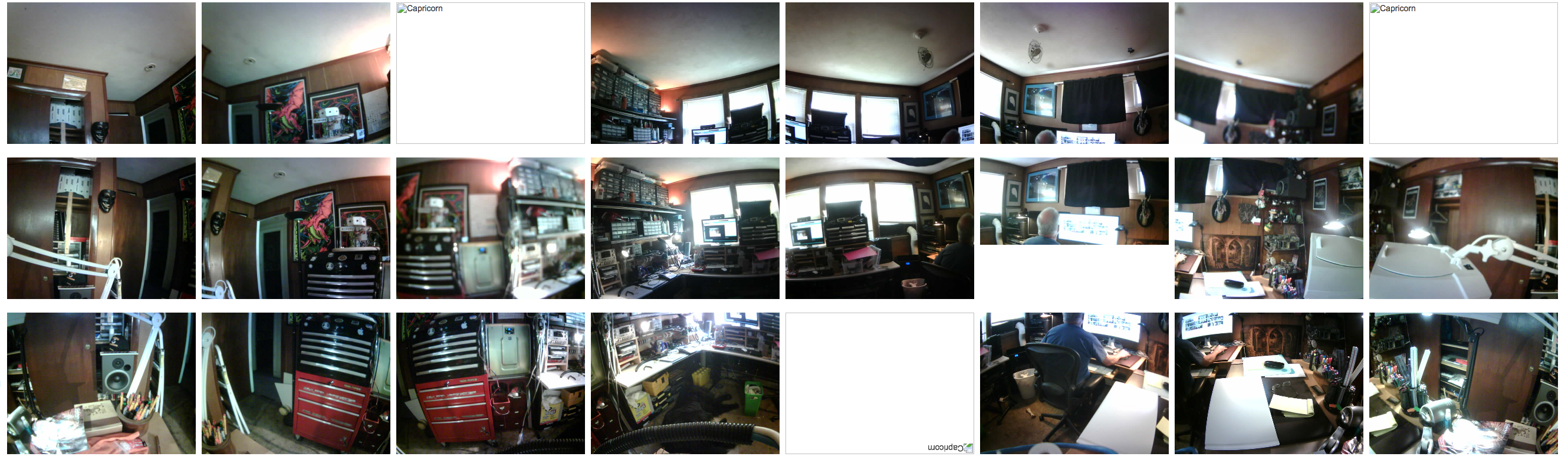

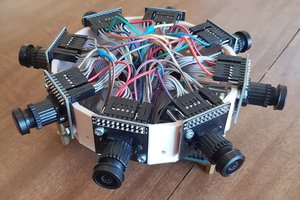

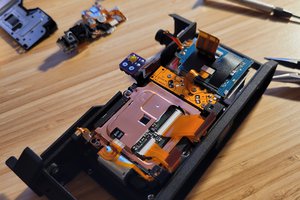

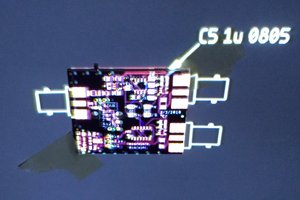

The system uses cameras supplied by Arducam and a custom 3D-printed enclosure. The full rig has 26 cameras arranged in a rhombicuboctahedron. Twenty-four of the cameras are grouped into 3 banks (rings) of 8, and each bank is controlled by a BeagleBone Black. These rings provide 45-degree look-down, look-up, and equatorial views. The last two cameras are controlled by a fourth BeagleBone, which also orchestrates data flow into the Jetson. The Linux device tree has been modified to support dual I2C/SPI buses, and a C++ program handles camera interaction and provides a basic REST interface for sending commands and retrieving data. An NVIDIA Jetson fuses all of the camera feeds into a data model based on a hypersphere, which is then delivered to AWS for cloud processing.
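
The 26-camera count and the 3 x 8 + 2 grouping follow from the geometry of the solid. The sketch below is an idealized model, not the project's code: it assumes one camera per face, pointing along the outward face normal, with the vertical axis running through the two polar faces. The function names and bank labels are hypothetical.

```python
import itertools
import math


def face_normals():
    """Unit outward normals of the 26 faces of a rhombicuboctahedron.

    The face directions of the ideal solid are all non-zero vectors with
    components in {-1, 0, 1}: 6 axis squares, 12 edge squares, 8 triangles.
    """
    normals = []
    for v in itertools.product((-1, 0, 1), repeat=3):
        if v == (0, 0, 0):
            continue  # the zero vector is not a direction
        length = math.sqrt(sum(c * c for c in v))
        normals.append(tuple(c / length for c in v))
    return normals


def group_into_banks(normals, eps=1e-9):
    """Split normals into three rings of 8 plus the 2 polar faces."""
    banks = {"look_up": [], "equatorial": [], "look_down": [], "polar": []}
    for n in normals:
        z = n[2]
        if abs(z) > 1.0 - eps:
            banks["polar"].append(n)       # straight up / straight down
        elif z > eps:
            banks["look_up"].append(n)
        elif z < -eps:
            banks["look_down"].append(n)
        else:
            banks["equatorial"].append(n)
    return banks


def elevation_deg(normal):
    """Elevation of a unit normal above the equatorial plane, in degrees."""
    return math.degrees(math.asin(max(-1.0, min(1.0, normal[2]))))


if __name__ == "__main__":
    banks = group_into_banks(face_normals())
    for name, members in banks.items():
        elevations = sorted({round(elevation_deg(n), 2) for n in members})
        print(name, len(members), elevations)
```

In this ideal model each tilted ring mixes four square faces at 45 degrees of elevation with four triangular faces at about 35.26 degrees; the printed enclosure may orient its cameras differently.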

The current status: V1 of the equatorial ring hardware is complete and operational, the ring driver software running on the BeagleBone is operational, and the image fusion system is operational in that it can process all linear integration solutions to establish optimal linear overlap. Current work focuses on the final non-Euclidean math that handles the non-linear parts of the final image fusion, and on constructing the hypersphere data model. That model lets the system track both the overlapping fields of view and the fact that, although the views overlap, each camera sees the overlap region at its own unique incidence angle.
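
The point about unique incidence angles can be illustrated with a small geometric sketch. This is not the project's fusion math or its hypersphere model; it only shows that a single world direction inside an overlap region arrives at a different off-axis angle in each camera that sees it. The camera names, the 35-degree half field of view, and the helper functions are all assumptions made for illustration.

```python
import math


def unit(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def incidence_angle_deg(direction, axis):
    """Angle in degrees between a viewing direction and a camera's optical axis."""
    d, a = unit(direction), unit(axis)
    dot = sum(x * y for x, y in zip(d, a))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def cameras_seeing(direction, axes, half_fov_deg):
    """Map each camera whose (assumed conical) field of view contains
    the direction to that camera's incidence angle."""
    hits = {}
    for name, axis in axes.items():
        angle = incidence_angle_deg(direction, axis)
        if angle <= half_fov_deg:
            hits[name] = angle
    return hits


if __name__ == "__main__":
    # Two adjacent equatorial cameras, 45 degrees apart (hypothetical names).
    axes = {"cam_a": (1.0, 0.0, 0.0), "cam_b": (1.0, 1.0, 0.0)}
    azimuth = math.radians(15.0)
    ray = (math.cos(azimuth), math.sin(azimuth), 0.0)
    for name, angle in cameras_seeing(ray, axes, half_fov_deg=35.0).items():
        print(f"{name}: {angle:.1f} deg off-axis")
```

Here the same ray lies 15 degrees off the axis of one camera and 30 degrees off the axis of its neighbor, so any data model that merges the two views has to keep both angles rather than a single shared one.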

Mark Mullin

Kevin Kadooka

Ted Yapo
