You will find here the details, explanations and specifications for a file format called 3RGB:

- Supports 24bpp RGB (no palette, only "continuous signals")

- Multiple levels of features and compression, each level adds a simple feature that the encoder and decoder can accept or ignore.

- Works with square tiles (8×8 pixels initially, now 4×4)

- Each tile can be encoded with absolute or relative values (used for delta-pictures in animations)

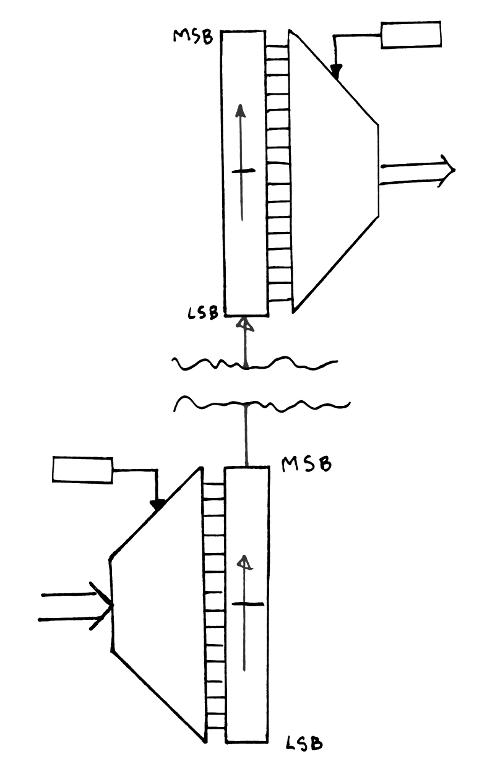

- Hierarchical organisation with min/max boundaries of each tile stored in separate 3R-encoded arrays

- Prediction filter : Paeth or none.

- scan orders : normal raster (for direct encoding), BitShuffle (when using 3R encoding) or some other pattern for increased locality...

- Uses the 3R residue compaction algorithm to reduce the size of filtered blocks

- Uses a Colorspace that is inspired by 3R to reduce the size of the pixels

The requirements so far are not for a solid, stable, monolithic file format like JPEG, GIF or PNG, but rather a toolchest of simple, efficient techniques that can be added progressively to save a bit more bits each time. Depending on the application, one feature can be enabled if it doesn't add much work and yields significant gains. This allows us to design the file/stream format step by step, starting from the most basic and trivial features, providing us with a functioning framework to develop more sophisticated features and evaluate their benefits.

Note that the purposes are compactness and speed of the decoder, rather than high compression ratio. The CODEC tries to grab all the low-hanging fruits to maximise the gains with the least efforts. In particular, the decoder should be as simple as possible and easy to implement, with a small and efficient digital circuit.

Logs:

1. Colorspace

2. Chunking

3. Recursive definition of a 2D signal

4. Tiling

5. Phase-out encoder/decoder

6. Another case of combinatorial dilemma

7. Colorspace decomposition (episode 2: the big swap)

8. Basic algorithm for one block

9. Design of the phase-out code

10. Extension to alpha channel

11. Phase-out in C

12. Block sizes and other considerations...

.

Yann Guidon / YGDES

Yann Guidon / YGDES

Just found more references about Hilbert's Curve and the Gray code...

http://binvis.io/

https://corte.si/posts/visualisation/binvis/index.html

https://corte.si/posts/visualisation/entropy/index.html

https://corte.si/posts/code/hilbert/portrait/index.html

https://www.grant-trebbin.com/2017/03/calculating-hilbert-curve-coordinates.html

...

Oh, and there are 2 variants : Hilbert curve and Moore curve :-D