-

YouTube video!

10/20/2017 at 17:20

Hurray, new filter working

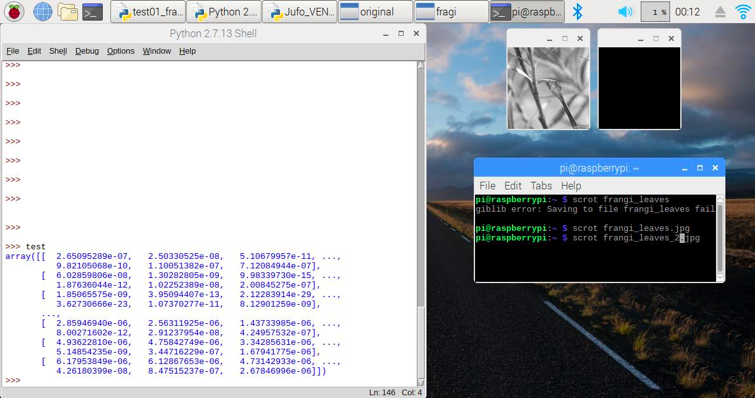

10/20/2017 at 12:59

Strangely enough, a detailed analysis of the problem helped 😉: any conversion of the filter results into a displayable picture converts the image to 8-bit grayscale (uint8). To find out why this left us with a black image, we printed out the arrays containing the filter results:

As you can see, the array contains very, very small values (e.g. on the order of 1e-6 down to 1e-23), and when values between 0 and 1 are scaled to the range from 0 to 255, these tiny numbers simply stay zero.

Just out of curiosity, we tried what would happen if we simply multiplied this array by a huge factor, e.g. 2,500,000, and we got our filtered results 😊!
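The effect can be reproduced with a few lines of NumPy. The sketch below uses made-up values in the range we observed; it is not the Venenfinder code, and the helper name `to_uint8_minmax` is hypothetical. It shows why the usual scaling to 0..255 produces a black image, and how min-max normalization, as an alternative to a hand-picked factor, stretches whatever range the filter returns to the full 8-bit range:

```python
import numpy as np

def to_uint8_minmax(response):
    """Stretch the actual min..max range of a float image to 0..255 (uint8)."""
    lo, hi = float(response.min()), float(response.max())
    if hi <= lo:  # constant image: nothing to stretch
        return np.zeros(response.shape, dtype=np.uint8)
    scaled = (response - lo) / (hi - lo) * 255.0
    return np.round(scaled).astype(np.uint8)

# Made-up Frangi-like output: tiny positive values between 1e-23 and 1e-6
response = np.array([[1e-23, 1e-15],
                     [5e-7, 1e-6]])

naive = (response * 255).astype(np.uint8)   # every pixel truncates to 0: black image
stretched = to_uint8_minmax(response)       # weakest response -> 0, strongest -> 255
```

A fixed factor works as long as the filter output stays in a similar range from frame to frame; normalizing each frame avoids choosing the factor by hand. In OpenCV, `cv2.normalize(src, None, 0, 255, cv2.NORM_MINMAX, dtype=cv2.CV_8U)` should do the same job.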

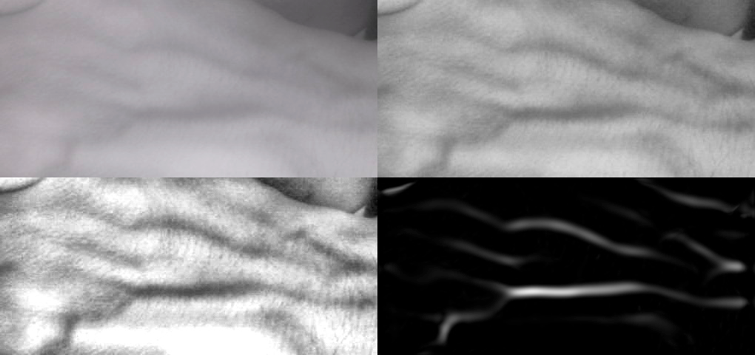

This image shows the original NIR camera image (upper left) and the first and second stage of image enhancement by global and region-specific level adjustment (CLAHE filter). The last picture (lower right) shows the Frangi filter result, and you can see that only the major veins are left.

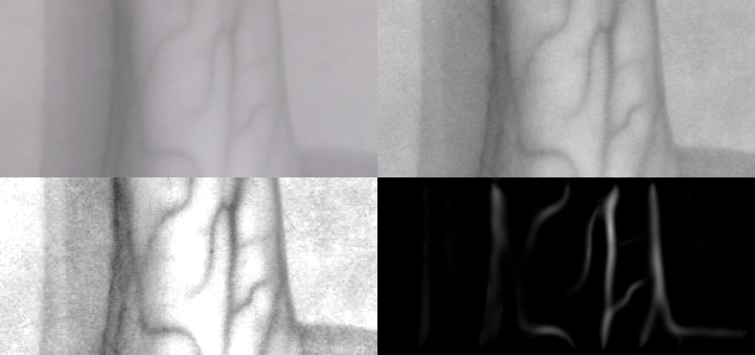

Another try with the arm instead of the back of the hand delivers the same astounding results:

The veins are clearly marked, and since only the veins are left, the Frangi filter result could even be processed further, e.g. by finding contours…
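One way to get from the filter image to individual veins, sketched here under assumptions: normalize the Frangi output to 0..1, threshold it, and group the remaining pixels into 8-connected segments, dropping tiny specks as noise. On the Pi, OpenCV's `findContours` would be the natural tool (note that it returns three values in OpenCV 3.x but two in 4.x); the plain Python/NumPy version below only illustrates the idea. `vein_segments`, the threshold of 0.5 and `min_pixels=5` are hypothetical choices that would need tuning:

```python
from collections import deque
import numpy as np

def vein_segments(vesselness, threshold=0.5, min_pixels=5):
    """Group pixels of a normalized vesselness map (0..1) into 8-connected segments.

    Returns one list of (row, col) pixels per segment; segments smaller than
    min_pixels are treated as noise and dropped.
    """
    mask = vesselness >= threshold
    seen = np.zeros(mask.shape, dtype=bool)
    rows, cols = mask.shape
    segments = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r, c] or seen[r, c]:
                continue
            # Breadth-first flood fill starting at this seed pixel
            queue = deque([(r, c)])
            seen[r, c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny, nx] and not seen[ny, nx]):
                            seen[ny, nx] = True
                            queue.append((ny, nx))
            if len(pixels) >= min_pixels:
                segments.append(pixels)
    return segments
```

For example, `len(vein_segments(v))` would give the number of vein segments found in a normalized filter image `v`, and each segment's pixel list could be used to draw it back onto the video.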

So, to make a long story short: yes, the Frangi filter is a major contribution to vein detection and could make future versions of our Venenfinder even more powerful.

Thanks to Dr. Halimeh from the Coagulation Centre in Duisburg, we were able to compare an earlier prototype with a professional medical vein detection system – and even at that stage, both delivered comparable results concerning the detection depth of veins.

The only drawback at the moment is processing speed, because the Frangi filter really slows things down – so this is just the starting point for future analysis, coding and hacking 😉…

-

New features

10/20/2017 at 12:55

Researching how to further improve our Venenfinder, we came across the Frangi filter, which is used for detecting and isolating vessel-like structures (tree branches, blood vessels), for example the filigree structures of retinal blood vessels. Rather than looking for edges, it analyses the local curvature of the image brightness (the eigenvalues of the Hessian matrix) at several scales to find tube-shaped structures.

It is named after Alejandro F. Frangi, who developed this multi-scale filter for vessel enhancement together with colleagues in 1998.
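The core of the filter fits in a few lines. After smoothing the image with a Gaussian of width σ, it takes the Hessian (the matrix of second derivatives, scaled by σ²) at every pixel and its two eigenvalues, ordered so that |λ1| ≤ |λ2|. Along a vessel the brightness barely bends (λ1 ≈ 0), across it the brightness bends strongly (|λ2| large), while a blob bends in both directions. The sketch below scores this for a single scale following the formula in Frangi et al. (1998); the full filter repeats it for several σ and keeps the maximum, which is omitted here. The function name and the example values `beta=0.5` and `c=15` are assumptions, not scikit-image's code:

```python
import numpy as np

def vesselness_2d(hxx, hxy, hyy, beta=0.5, c=15.0, dark_ridges=True):
    """Single-scale 2D vesselness after Frangi et al. (1998).

    hxx, hxy, hyy are Hessian entries of the smoothed image (scalars or arrays).
    dark_ridges=True looks for dark vessels on a brighter background, which is
    how veins appear in the NIR image.
    """
    hxx, hxy, hyy = (np.asarray(a, dtype=float) for a in (hxx, hxy, hyy))
    # Eigenvalues of the symmetric 2x2 matrix [[hxx, hxy], [hxy, hyy]]
    half_trace = (hxx + hyy) / 2.0
    root = np.sqrt(((hxx - hyy) / 2.0) ** 2 + hxy ** 2)
    mu_a, mu_b = half_trace + root, half_trace - root
    # Order them so that |l1| <= |l2|
    swap = np.abs(mu_a) > np.abs(mu_b)
    l1 = np.where(swap, mu_b, mu_a)
    l2 = np.where(swap, mu_a, mu_b)
    # R_B ("blobness"): ~0 for lines, ~1 for blobs; S: overall structure strength
    with np.errstate(divide="ignore", invalid="ignore"):
        rb = np.where(l2 != 0, l1 / l2, 0.0)
    s = np.sqrt(l1 ** 2 + l2 ** 2)
    v = np.exp(-rb ** 2 / (2 * beta ** 2)) * (1.0 - np.exp(-s ** 2 / (2 * c ** 2)))
    # A dark line is a brightness valley, so the curvature across it is positive
    wrong_sign = l2 < 0 if dark_ridges else l2 > 0
    return np.where(wrong_sign, 0.0, v)
```

A pixel on a vertical dark line (`hxx=0, hxy=0, hyy=40`) scores close to 1, a blob (`hxx=hyy=40`) scores low, and flat regions score 0, which is why the filter output consists of little more than the veins.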

Luckily there is a Python implementation 😊: it is part of the scikit-image library. However, you have to compile scikit-image from source, because the Frangi filter was only introduced in version 0.13, while 0.12 is the latest version you can get via apt-get install.

As explained in the previous post, we simply could not get this filter to install/compile in the virtual environments, so we went for a clean install of Raspbian Stretch and OpenCV 3.3 without any virtual environments to get the desired image processing libraries.

We opted for the latest version, 0.14dev. As described in the documentation, you need to run the following commands to install the dependencies, get the source code and compile it:

    sudo apt-get install python-matplotlib python-numpy python-pil python-scipy
    sudo apt-get install build-essential cython
    git clone https://github.com/scikit-image/scikit-image.git
    cd scikit-image
    pip install -e .

If everything is working fine, you can test it in Python:

python

Then try to import it and get the version number:

>>> import skimage

>>> skimage.__version__

and your Pi should return:

'0.14dev'
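Because `frangi` only exists from scikit-image 0.13 on, a script can check the installed version before relying on it. A minimal sketch; `has_frangi` is a hypothetical helper, not part of scikit-image:

```python
import re

def has_frangi(version):
    """True if a scikit-image version string is 0.13 or newer (first release with frangi)."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError("unrecognised version string: %r" % (version,))
    return (int(match.group(1)), int(match.group(2))) >= (0, 13)
```

On the Pi you would call `has_frangi(skimage.__version__)`. Alternatively, `hasattr(skimage.filters, "frangi")` after `import skimage.filters` checks for the function directly and avoids parsing version strings.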

Then we tried the new filter with a simple static image, some leaflets and … it did not work ☹

We always got a black image containing the Frangi filter results, no matter what.

-

Software Update: Raspbian Stretch and OpenCV 3.3

10/20/2017 at 12:48

Build log: adapting to the new Raspbian Stretch and OpenCV 3.3

We started from scratch, mostly following Adrian’s superb tutorial.

In a nutshell, you need a couple of commands to get the necessary packages and to install OpenCV (and later scikit-image) from source:

sudo raspi-config

Following the advice in the forum, we changed the swap size accordingly and rebooted afterwards, right after the reboot needed to expand the root file system. Then we followed Adrian’s blog, but again did not install the virtual environments, because in all our other tests we could not get Python 3 to work with the scikit-image filters and cv2.

Before doing anything else, change an important setting to make compiling with 4 cores possible: edit /etc/dphys-swapfile, change CONF_SWAPSIZE to 2048 and reboot. You can then run make -j4 (tip from Stephen Hayes on Adrian’s blog).

    sudo apt-get update && sudo apt-get upgrade
    sudo apt-get install build-essential cmake pkg-config
    sudo apt-get install libjpeg-dev libtiff5-dev libjasper-dev libpng12-dev
    sudo apt-get install libavcodec-dev libavformat-dev libswscale-dev libv4l-dev
    sudo apt-get install libxvidcore-dev libx264-dev
    sudo apt-get install libgtk2.0-dev libgtk-3-dev
    sudo apt-get install libatlas-base-dev gfortran
    sudo apt-get install python2.7-dev python3-dev
    cd ~
    wget -O opencv.zip https://github.com/Itseez/opencv/archive/3.3.0.zip
    unzip opencv.zip
    wget -O opencv_contrib.zip https://github.com/Itseez/opencv_contrib/archive/3.3.0.zip
    unzip opencv_contrib.zip
    wget https://bootstrap.pypa.io/get-pip.py
    sudo python get-pip.py
    pip install numpy
    cd ~/opencv-3.3.0/
    mkdir build
    cd build
    cmake -D CMAKE_BUILD_TYPE=RELEASE \
        -D CMAKE_INSTALL_PREFIX=/usr/local \
        -D INSTALL_PYTHON_EXAMPLES=ON \
        -D OPENCV_EXTRA_MODULES_PATH=~/opencv_contrib-3.3.0/modules \
        -D BUILD_EXAMPLES=ON ..
    make -j4
    sudo make install
    sudo ldconfig

Now you should be able to test that OpenCV is successfully installed:

    python

Your Pi should “answer” with:

    Python 2.7.13 (default, Jan 19 2017, 14:48:08)
    [GCC 6.3.0 20170124] on linux2
    Type "help", "copyright", "credits" or "license" for more information.
    >>>

Now check that OpenCV can be imported (nothing should happen, just a new prompt):

    >>> import cv2

Then ask for the version:

    >>> cv2.__version__

Your Pi should give you:

    '3.3.0'

Leave the Python interpreter with quit(), and we are finished 😊

We verified that the latest version of the Venenfinder works with this version of OpenCV.

-

Putting it all together

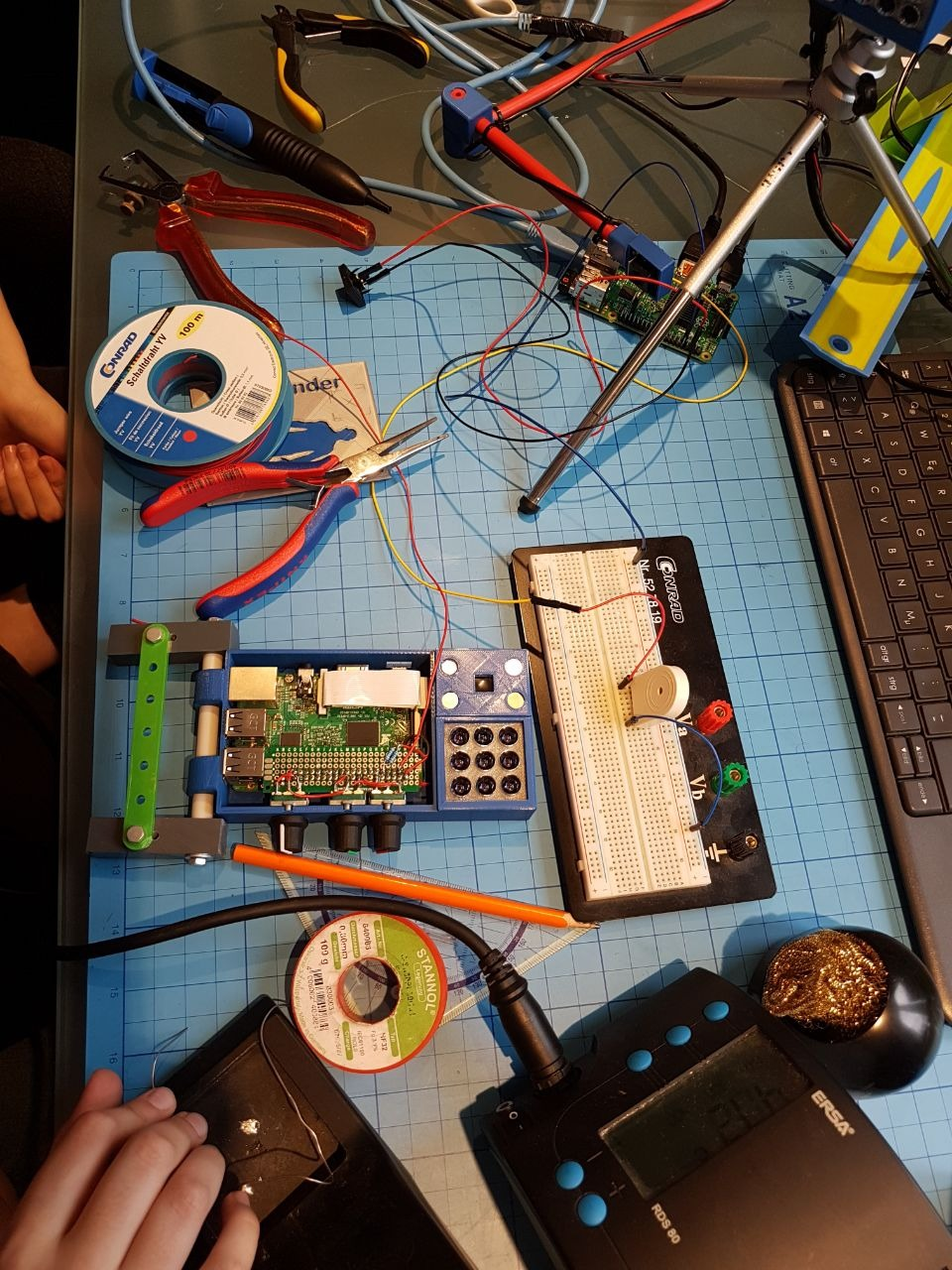

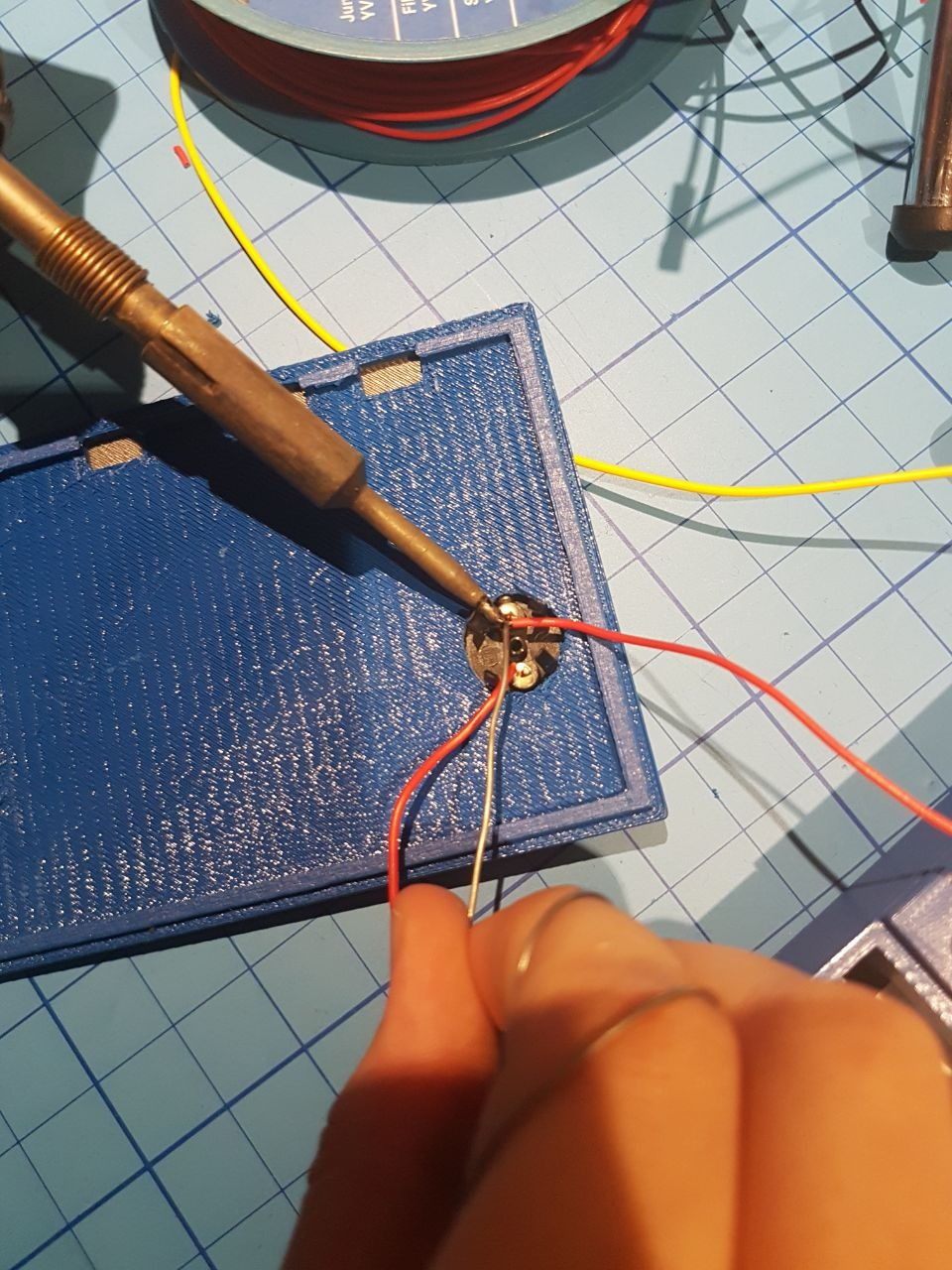

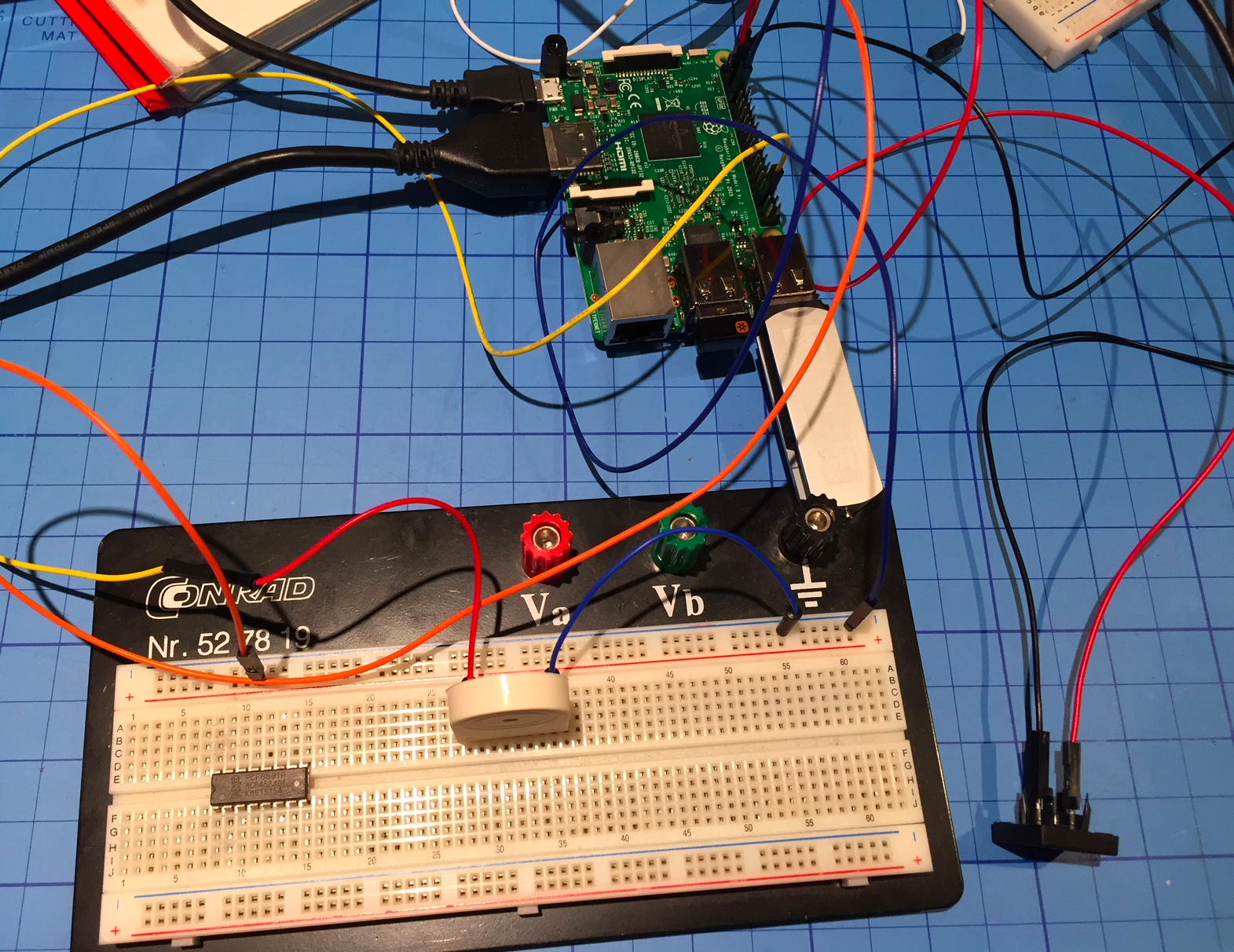

10/20/2017 at 12:44

In the latest posts, I described how we installed the buzzer and the switch, and here is how we put it all together on the Raspberry!

As we mentioned before, we first tried them out on a prototyping board (breadboard) with a spare Raspberry Pi 3, and once we were sure everything worked, we integrated both into the Venenfinder as an “embedded medical device”:

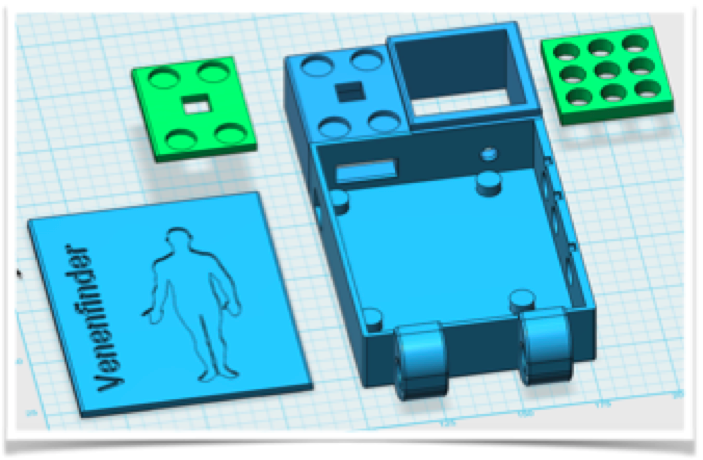

Here we had to deal with a few issues: we wanted to “lock down” the Venenfinder a bit in terms of casing, so that people who just want to use it for medical reasons won’t have to worry about electronics, wires etc. Of course it is still open under the CC license! But since we had not planned for easy maintenance access, we unfortunately had to take our device partly apart.

The (active) buzzer fits in the casing quite well, and we even found a little space for the on/off push button. In order to save time we opted against 3d-printing the lid again with a precise opening for the switch. We just drilled a hole instead (a real “life-hack” 😉).

And we integrated the code from the buzzer and on/off examples into the main Venenfinder program, which is updated in the GitHub repo along with the new schematics.

Furthermore, we got the chance to present this new prototype at another hemophilia patient meeting in Berlin on October 15th, and several people tried it immediately, as you can see in the pictures. My brother Elias gave a short presentation in front of the entire audience and gave everyone the opportunity to test the device by imaging the veins of different people…

-

Switch off

10/14/2017 at 16:32

Since we always used to turn the Raspberry Pi off just by pulling the plug, which isn't good for the computer and the SD card, we added a switch to the mobile version in an earlier log that turns the Raspberry Pi on; now we need a way to turn it off as well.

Since it runs headless, one would otherwise have to ssh into it to tell it to "sudo shutdown -h", which is not a workable solution for people who just want to use the device as effortlessly as possible :-)

We decided to use the same switch to turn the Raspberry Pi on as to shut it down safely to keep things simple.

In addition to the libraries we needed before (time, GPIO), we now need one to issue system calls like "sudo shutdown -h"...

    from subprocess import call  # for shutdown

Since it is good practice to use named variables instead of bare numbers, we declare pin 3 to be the one we use for shutdown:

    onoff_pin = 3

Then we declare this GPIO to be an input, and since we are lazy and want to keep additional electronics to a minimum, we tell it to use the internal pull-up resistor, so the pin always has a defined level and is not "floating":

    GPIO.setup(onoff_pin, GPIO.IN, pull_up_down=GPIO.PUD_UP)  # define onoff_pin as input with internal pull-up

When the on/off button is pressed, the system should notify the user of the shutdown, of course with the buzzer we integrated in the previous build log: it should beep for one second, then tell the Raspberry to shut down. We need to declare a method that is called when the switch is pressed, because we want it to be triggered by an interrupt (like the encoders) instead of watching for it in the main loop that does the image processing:

    def shutmedown(channel):
        GPIO.output(buzzerpin, True)   # buzzer on
        time.sleep(1)                  # for 1 sec
        GPIO.output(buzzerpin, False)  # then off again
        GPIO.cleanup()                 # not really needed, we shut down now anyway
        call("sudo shutdown -h now", shell=True)  # system call to shut down

After declaring the method, we need to configure the interrupt that is triggered as soon as the button is pressed. Since we use the internal pull-up resistor, the state of our GPIO pin is always true until the button is pressed. The level then changes from true (high = 3.3 V) to false (low = 0 V = GND), meaning we have a falling edge:

    GPIO.add_event_detect(onoff_pin, GPIO.FALLING, callback=shutmedown, bouncetime=500)  # interrupt on falling edge of on/off button, debounce 500 ms

Debouncing is not really critical here, so the exact value of 500 ms doesn't matter: we don't count presses, any press of the button shuts the Raspberry down.
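What `GPIO.FALLING` together with `bouncetime=500` achieves can be modelled in a few lines of plain Python: a transition from high to low counts as an edge, and any further edge within 500 ms of the last accepted one is ignored, so a bouncing contact fires the callback only once. This is a simplified model for illustration with a hypothetical function name, not RPi.GPIO's actual implementation:

```python
def falling_edges(samples, bouncetime_ms=500):
    """Return the times (ms) of accepted falling edges.

    samples: iterable of (time_ms, level) pairs, level True/1 = high, False/0 = low.
    With the internal pull-up the idle level is high, so pressing the button
    pulls the pin low and produces a falling edge.
    """
    accepted = []
    previous_level = None
    for t, level in samples:
        if previous_level and not level:  # high -> low transition
            if not accepted or t - accepted[-1] >= bouncetime_ms:
                accepted.append(t)        # this edge would trigger the callback
        previous_level = level
    return accepted

# Idle high, a press at 1000 ms whose contact bounces, release, second press at 3000 ms
samples = [(0, 1), (1000, 0), (1002, 1), (1004, 0), (2000, 1), (3000, 0)]
```

With the default 500 ms window, the bounce at 1004 ms is ignored; with `bouncetime_ms=0` it would count as a second press.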

We tried it on a spare Raspberry on a breadboard, and since it worked straight away, our next step is to integrate the buzzer and the on/off button into our prototype.

-

Buzz, buzz, buzz

10/14/2017 at 16:00

In addition to the start switch, we added an active buzzer that beeps three times once the Raspberry Pi has started up. This is important for knowing when the Raspi has fully booted, because there is no display and you can't see when everything has loaded.

The buzzer is connected to GPIO 19 (BCM numbering) and GND. To make life easier, we chose an active buzzer: it doesn't need to be switched on and off rapidly to generate a tone, it simply needs to be powered for as long as you want to hear the buzz.

Again, we tested this on a different Raspberry on a breadboard before installing it in the "ready prototype". We only have this one mobile prototype, and we have promised to send it to a patient in need asap, so we need to make sure everything works.

Here we need to add some code - first, we import some libraries:

    import RPi.GPIO as GPIO  # for the pins
    import time              # for delays

Next we define the GPIO pin for the buzzer:

    buzzerpin = 19                   # attach buzzer between pin 19 and GND; check polarity!
    GPIO.setmode(GPIO.BCM)           # use BCM numbering scheme as before
    GPIO.setup(buzzerpin, GPIO.OUT)
    time.sleep(0.5)                  # give it a short rest

As soon as everything is set up (camera etc.), we want the buzzer to make a short beeping sequence to notify the user that a connection to the streaming NIR video is now possible. We opted for three short beeps:

    for i in range(1, 4):              # runs 3 times
        GPIO.output(buzzerpin, True)   # switch to high (= active buzzer on)
        time.sleep(0.2)                # length of one beep
        GPIO.output(buzzerpin, False)  # switch to low (= active buzzer off)
        time.sleep(0.2)                # pause between beeps

That was all, and it worked astonishingly well ;-)

Now we have a little snippet we can add to the main code later... just before the main loop starts. Next task will be the power-off routine...
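Since the same buzzer signals both the end of the boot process and the shutdown, the beeping could be factored into one function. A sketch under assumptions (the name `beep` and its parameters are hypothetical); `output` and `sleep` are passed in so the sequence can be checked on a PC without GPIO hardware:

```python
import time

def beep(output, pin, times=3, on_s=0.2, off_s=0.2, sleep=time.sleep):
    """Sound an active buzzer `times` times.

    output(pin, state) should behave like RPi.GPIO's GPIO.output: True switches
    the active buzzer on, False switches it off.
    """
    for i in range(times):
        output(pin, True)    # buzzer on
        sleep(on_s)          # length of one beep
        output(pin, False)   # buzzer off
        if i < times - 1:
            sleep(off_s)     # pause between beeps, skipped after the last one
```

On the Pi, `beep(GPIO.output, buzzerpin)` would give the three short start-up beeps, and `beep(GPIO.output, buzzerpin, times=1, on_s=1.0)` the one-second beep before shutting down.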

-

Switch on :-)

10/14/2017 at 15:26

While using the Venenfinder, we realized there were some everyday difficulties with our prototype, so we decided to make some updates!

We wanted to add a switch to the mobile version that turns the Raspberry Pi on and off, because we always used to turn the Raspi off just by pulling the cable, which isn't good for the computer and the SD card ;-)

We didn't want to test these updates on our "ready prototype" in case something went wrong, so we first tested these modifications on another Raspberry.

If the Raspberry is powered via the micro-USB cable, it starts as soon as power is applied. If you shut it down properly, you normally have to repower it (unplug and replug either the micro-USB cable or the power supply) to boot it up again. But there is an easy alternative: a switch that makes the Raspberry start. It just needs to be connected between GPIO 3 (BCM numbering; physical pin 5) and GND.

At least we no longer wear out our USB socket ;-)

Our next plan is to use the same switch to shut the Raspberry Pi down safely instead of just pulling the plug. Since it runs headless, you would otherwise have to ssh into it to tell it to "sudo shutdown -h", which is not a workable solution for people who just want to use the device as effortlessly as possible. Oh, and we also want to add a buzzer to signal when the Raspberry has fully booted!

-

Mobile Version

08/13/2017 at 15:21

(c) https://www.jugend-forscht.de/projektdatenbank/venenfinder-ein-assistenzsystem-zur-venenpunktion.html

Most people almost always have their smartphone nearby, so ideally the "evaluation unit with screen" causes no further costs, since the smartphone takes over this role. Older smartphones or keypad phones often have a weaker IR blocking filter (this differs between devices and manufacturers; the iPhone, for example, has a very strong IR blocking filter). They cannot run the optimization algorithms, but their video preview could already show veins more clearly under plain IR illumination. In this case, a very cost-effective solution would be possible (only IR LEDs are required). Another way to build a mobile variant is to connect the modified webcam to a smartphone using a USB On-The-Go (OTG) adapter. Not all smartphones support this, but it would be a way to bypass the built-in camera. You would then have an IR-sensitive camera with built-in lighting and could additionally optimize the video on the phone in software. We chose the second option.

For the hardware, you simply need to print the STL files with your 3D printer’s software. The case is designed to fit the Raspberry Pi 3 with the three encoders attached, but you can adjust it to your needs. The encoders connect to GND and to GPIOs 20/21, 18/23 and 24/25. The IR LEDs used have a peak at 940 or 950 nm and require a 12 Ohm resistor if you connect three of the LEDs in series. Connect three such strings, each with its resistor, in parallel and you have an array of 3x3 LEDs, which fits into the casing designed for the reflectors.
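The resistor value follows from Ohm's law: with n LEDs of forward voltage V_f in series and a desired current I, R = (V_supply - n·V_f) / I. The supply voltage and the LEDs' forward voltage and current are not given above, so the 5 V and 1.3 V in this sketch are assumptions (check your LEDs' datasheet), and the function names are hypothetical:

```python
def series_resistor(supply_v, forward_v, n_series, current_a):
    """Resistor (ohms) for n_series LEDs in series at the desired current (A)."""
    headroom = float(supply_v) - n_series * float(forward_v)  # voltage left for the resistor
    if headroom <= 0:
        raise ValueError("supply voltage too low for this many LEDs in series")
    return headroom / current_a

def string_current(supply_v, forward_v, n_series, resistor_ohm):
    """Current (A) through one LED string with the given series resistor."""
    headroom = float(supply_v) - n_series * float(forward_v)
    return max(headroom, 0.0) / resistor_ohm

# Assumed values: 5 V supply, 1.3 V forward voltage per IR LED, 3 LEDs per string
per_string = string_current(5.0, 1.3, 3, 12.0)
total = 3 * per_string  # three strings in parallel
```

With these assumed values, 12 Ohm gives roughly 92 mA per string, and the 3x3 array draws about three times that, which the power supply has to deliver in addition to the Raspberry Pi.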

If you want to stream the calculated image to your smartphone, TV or tablet, you either need to integrate the Raspberry into your local Wi-Fi network or just start a new one. We don’t want the user to have to deal with editing Wi-Fi settings in a terminal session.

The veins are illuminated with IR light (950 nm), and the backscattered light is captured by the Raspberry Pi camera (the version without the IR filter). You can use old analogue film as a filter that blocks visible light and lets only IR light pass. The camera picture is processed in several stages to improve the distribution of light and dark parts of the image (histogram equalization). The reason for using near-IR illumination lies in the optical properties of human skin and in the absorption spectrum of hemoglobin.
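Histogram equalization spreads the few gray levels the skin actually occupies over the whole 0..255 range, which is what makes the faint vein shadows visible. The textbook version is sketched below in NumPy, assuming an 8-bit grayscale input; the function name is hypothetical. In practice, OpenCV's `cv2.equalizeHist` does this global step, and CLAHE (`cv2.createCLAHE`) applies the same idea per image tile with a contrast limit:

```python
import numpy as np

def equalize_hist(gray):
    """Global histogram equalization of an 8-bit grayscale image (uint8 array)."""
    if gray.dtype != np.uint8:
        raise TypeError("expected an 8-bit (uint8) grayscale image")
    hist = np.bincount(gray.ravel(), minlength=256)  # pixel count per gray level
    cdf = hist.cumsum()                               # cumulative distribution
    cdf_min = cdf[cdf > 0][0]                         # count in the darkest occupied level
    total = gray.size
    if total == cdf_min:                              # single gray level: nothing to spread
        return gray.copy()
    # Map each level so that the cumulative distribution becomes (roughly) linear
    lut = np.round((cdf - cdf_min) / float(total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]

# Low-contrast test image: only gray levels 100..104, 20 pixels each
gray = np.repeat(np.arange(100, 105, dtype=np.uint8), 20).reshape(10, 10)
out = equalize_hist(gray)
```

Five neighbouring gray levels that would be almost indistinguishable on screen end up spread across the full range (0, 64, 128, 191, 255).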

The device was developed by us (code, illumination, 3D files, as well as numerous prototype tests and real-world tests in a hospital). In this tutorial, we refer to the following blogs, which helped us develop this mobile version of the “Venenfinder”:

We cite some steps from Adrian’s blog on how to install OpenCV on the Raspberry Pi from scratch: https://www.pyimagesearch.com

We just decided to turn the Pi into a hotspot. Here we followed Phil Martin’s blog on how to use the Raspberry as a Wi-Fi Access point: https://frillip.com/using-your-raspberry-pi-3-as-a-wifi-access-point-with-hostapd/

Since you need a way to change the settings of the image enhancement, we decided to use rotary encoders. These are basically just two switches, and the order in which they close and open tells you the direction the knob was turned. We soldered 3 rotary encoders to a little board and created our own Raspberry Pi HAT. For the code we used: http://www.bobrathbone.com/raspberrypi/Raspberry%20Rotary%20Encoders.pdf
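The direction can be read from the order in which the two switches change. A common table-based way to decode this is sketched below; it is not necessarily how the referenced code does it, and the names are hypothetical. Which sign means clockwise depends on the wiring, and many encoders produce four transitions per detent, so the count may need dividing by four:

```python
# Valid transitions of a quadrature encoder. A state packs both switch levels
# as (A << 1) | B; the Gray-code sequence 00 -> 01 -> 11 -> 10 -> 00 is one
# direction, the reverse sequence is the other.
STEPS = {
    (0b00, 0b01): +1, (0b01, 0b11): +1, (0b11, 0b10): +1, (0b10, 0b00): +1,
    (0b00, 0b10): -1, (0b10, 0b11): -1, (0b11, 0b01): -1, (0b01, 0b00): -1,
}

def decode(readings):
    """Net steps for a sequence of (A, B) logic levels read from the two pins.

    Repeated readings and invalid transitions (both switches changing at once,
    e.g. after a missed reading) count as 0.
    """
    position = 0
    previous = None
    for a, b in readings:
        state = (a << 1) | b
        if previous is not None:
            position += STEPS.get((previous, state), 0)
        previous = state
    return position
```

Because a contact bounce produces a step forward followed by a step back, bounces largely cancel out instead of being counted as turns.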

We used some code from Igor Maculan, who programmed a simple Python Motion JPEG (MJPEG) server using a webcam; we changed it to use the Pi camera and added the encoders and a display of the parameters. Original code:

https://gist.github.com/n3wtron/4624820
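The idea behind such an MJPEG server: the HTTP response is declared as `multipart/x-mixed-replace`, and every camera frame is sent as one JPEG part, which the browser draws over the previous one. The sketch below only builds the bytes for one part; the boundary name and function names are assumptions, not taken from the original gist:

```python
BOUNDARY = "frame"  # arbitrary boundary name (assumption)

def stream_content_type(boundary=BOUNDARY):
    """Value for the HTTP Content-Type header of the stream response."""
    return "multipart/x-mixed-replace; boundary=" + boundary

def mjpeg_part(jpeg_bytes, boundary=BOUNDARY):
    """Wrap one JPEG-encoded frame as a part of a multipart/x-mixed-replace stream."""
    header = ("--{0}\r\n"
              "Content-Type: image/jpeg\r\n"
              "Content-Length: {1}\r\n"
              "\r\n").format(boundary, len(jpeg_bytes)).encode("ascii")
    return header + jpeg_bytes + b"\r\n"
```

In a request handler based on `BaseHTTPServer` (`http.server` in Python 3), you would send the content type once and then write `mjpeg_part(jpeg)` to `self.wfile` for every frame, e.g. with the frame encoded via `cv2.imencode('.jpg', frame)[1].tobytes()`.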

To rebuild this, you can find the 3D files and the Python program on my blog: https://zerozeroonezeroonezeroonezero.wordpress.com/

And there is a tutorial that is linked here, where you can turn the Raspberry into a hotspot step by step.

-

Webcam Version

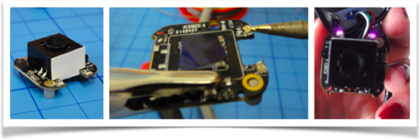

08/13/2017 at 15:19

As an alternative to the ready-to-use Pi camera, we (re)used a webcam that had already been modified in a previous research project (Eye controlled wheelchair, http://hackaday.io/project/5955).

We had removed the infrared filter and replaced the two white LEDs with infrared LEDs. The following figure shows the webcam without housing, the converter chip without optics and the completely reconstructed camera with the two IR-LEDs (violet dots).

Figure 12: modified webcam from the project "Eye controlled wheelchair"

A USB webcam offers several advantages: it can be connected to computers other than a Raspberry Pi, or even to smartphones (see “What’s next”), and it is more flexible regarding the connection cable. Furthermore, this camera has built-in autofocus and built-in illumination sufficient for the range needed here (well, you have to exchange the 2 white SMD LEDs for IR ones).

The disadvantage is that rebuilding this device is more complicated, since it requires soldering SMD components as well as removing the IR blocking filter (cut it off using a sharp blade). Additionally, when using a USB camera with the Raspberry Pi, you may lose a bit of speed, because the CPU has to handle the transfer over USB 2.0, while the Raspberry Pi camera is handled by the graphics processor without additional load on the CPU.

We added a mounting option, consisting of a tripod base with 3d-printed fittings and 9mm plastic tubes:

Figure 13: Construction of the camera for the modified webcam

The code is a bit different, since the camera does auto contrast and auto brightness along with autofocus. This second prototype can be built from scratch, and you can of course modify it to use the Raspberry Pi camera as well. For the moment, the Raspberry has no enclosure yet and is attached to the back of the monitor for easy access, which is still not comfortable enough for the intended users.

After Buildlog 3: “Testing the prototypes with Professionals”

Our vein detection system can be used not only for intravenous medication but also for taking blood samples. Both the image acquisition and the image optimization are carried out on the fly, so patient and doctor can see directly where the vein to be punctured is located.

Therefore we asked two haemophilia specialists to have a look at our vein detector and give us feedback. In particular, we discussed our two prototypes with Dr. Klamroth, chief physician of the Center for Vascular Medicine at the Vivantes Clinic in Berlin. He confirmed that optical devices only find veins near the surface; veins deeper than 1 mm should be localized by ultrasound. Furthermore, finding veins by cooling the skin and using thermography is counterproductive, because veins contract when the skin is cold and are then even more difficult to puncture… Dr. Klamroth advised us to build on the results so far and, if necessary, to look for ways to additionally mark the veins in the displayed video as an orientation aid for the user.

A few weeks later, we were able to present and discuss our prototypes at the Competence Care Centre for Haemophilia in Duisburg (www.gzrr.de). Dr. Halimeh and Dr. Kappert tested both prototypes in comparison with their professional medical vein illumination system. The professional device uses IR light as well, but then projects the image back onto the skin using a red laser. Of course the professional system is much easier to use (no boot-up time or adjustments needed), but we can compete in terms of image quality!

Results:

The experiments we carried out, as well as our research in scientific papers, have shown that a universal solution can be realized with infrared lighting, independent of skin pigmentation: below 800 nm, the skin pigment melanin absorbs a large part of the incident light, while above 1100 nm much of the light is absorbed by the water in the tissue (see Figures 2 and 4). A combination of infrared and red light would be ideal for lightly pigmented skin, but then our Venenfinder would not be suitable for more strongly pigmented skin. At the wavelength used, we can distinguish veins from the surrounding tissue, but they are only recognizable as a faint shimmer. By adjusting the contrast and the brightness distribution on the fly, we get a much clearer picture. A fully automatic adjustment already gives good first results, but with the help of three adjustable parameters the result can be improved significantly.

Our development is based on inexpensive camera systems, either a) a modified webcam (approx. 50 €) or b) the Raspberry Pi camera (about 35 €), and can certainly compete with professional systems, even though those provide a much finer gradation of gray levels and thus finer “shadows” (veins), while the technology available to us only returns a less finely graded gray image. The veins differ from the surrounding skin by a brightness deviation of only about 5% of the total brightness distribution.

What’s next?

We came across another algorithm used for enhancing blood vessel patterns in retinal images: the filter by Frangi et al. (named after their publication). Trying it on the auto-leveled images or the manually improved ones seems like a good plan 😉

Additionally, this approach could be ported to mobile phones. There is already software that captures from USB cameras attached to an Android phone, so “all there is to do” is to implement the image adjustment filters for Android…

Assistance System for Vein Detection

Using NIR (near infrared) Illumination and real-time image processing, we can make the veins more visible!

Myrijam
