What

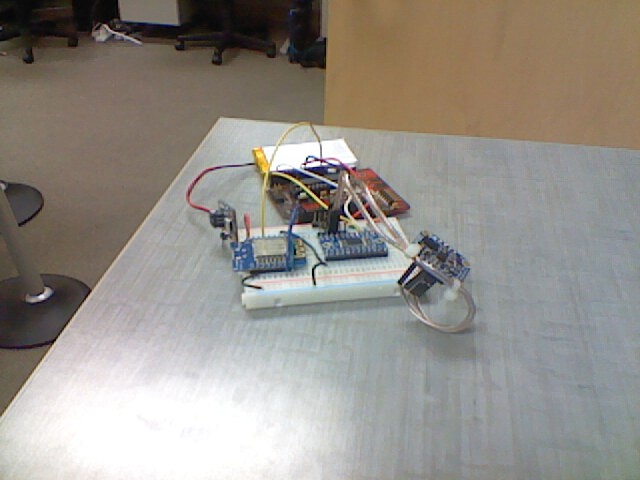

This project grew out of a task I was given as an undergraduate research aide in college: create an open source gesture input toolkit for my lab. I still hack on it in my free time, but now with the goal of bringing gesture input and niche assistive use cases together.

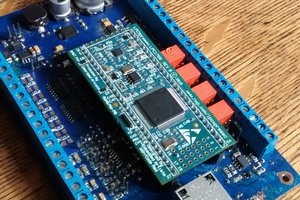

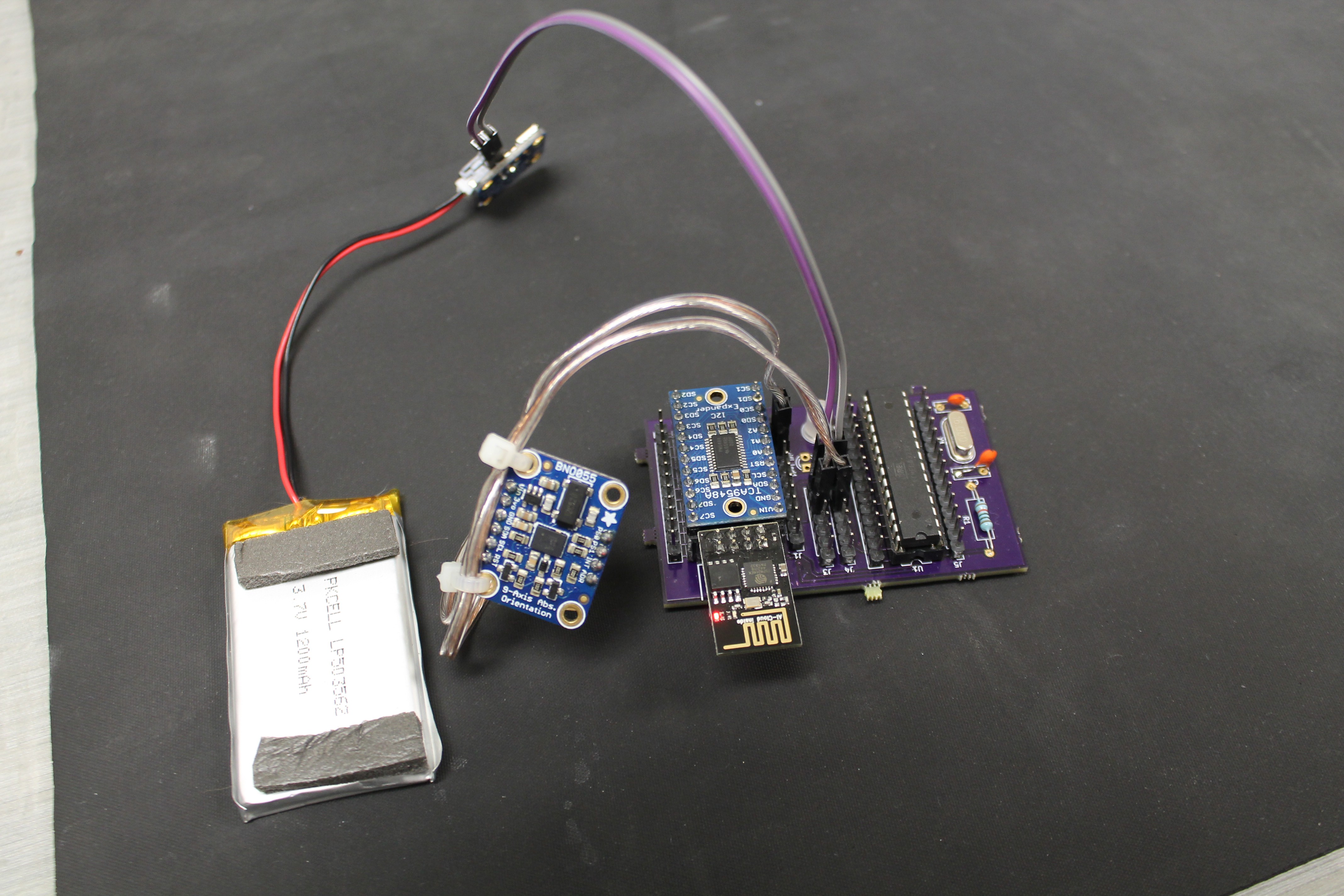

The aim is to use orientation sensors to track individual fingers on a hand. The resulting system, worn as a glove, can then be used to interpret gestures, recognize sign language, type with one hand, or track patient progress in physical therapy.
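To make the idea concrete, here is a minimal sketch of how finger orientation data could become a gesture label. It assumes one IMU on the back of the hand and one on each finger, each reporting a unit quaternion `(w, x, y, z)`. Each finger's bend is taken as the angle of its rotation relative to the hand sensor. A simple nearest-template match then picks a gesture. The function names, the template format and the classifier are illustrative assumptions, not the project's actual pipeline.

```python
import math
from typing import Dict, Sequence, Tuple

# Assumption: every sensor reports a unit quaternion ordered (w, x, y, z).
Quat = Tuple[float, float, float, float]


def quat_conjugate(q: Quat) -> Quat:
    """Inverse of a unit quaternion (the same rotation reversed)."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def quat_multiply(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def relative_angle_deg(hand: Quat, finger: Quat) -> float:
    """Total rotation angle (degrees) from the hand-back frame to a finger frame.

    This lumps flexion and side-to-side motion into one number, which is
    enough for a coarse sketch but not for detailed joint tracking.
    """
    rel = quat_multiply(quat_conjugate(hand), finger)
    # abs(): q and -q describe the same rotation; clamp guards acos against rounding.
    w = min(1.0, abs(rel[0]))
    return math.degrees(2.0 * math.acos(w))


def classify(angles: Sequence[float], templates: Dict[str, Sequence[float]]) -> str:
    """Hypothetical nearest-template classifier: smallest Euclidean distance wins."""
    return min(templates, key=lambda name: math.dist(angles, templates[name]))


if __name__ == "__main__":
    hand = (1.0, 0.0, 0.0, 0.0)  # identity orientation for the hand sensor
    bent = (math.cos(math.pi / 4), math.sin(math.pi / 4), 0.0, 0.0)  # 90 degrees about x
    print(round(relative_angle_deg(hand, bent), 3))

    templates = {"open": [0.0] * 5, "fist": [90.0] * 5}
    print(classify([85, 80, 88, 90, 86], templates))
```

Relative angles are used here because they do not change when the whole hand moves. A real recognizer would probably want per-axis joint angles, calibration per wearer and a trained model rather than fixed templates.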

Why

Recent developments in computer hardware and software suggest that the "next big thing" in computing will be a shake-up of how users interact with their devices. This is evident in Apple, Google, and Microsoft's rabid push of voice recognition in phones and laptops. If that trend continues, gesture input may soon be a staple of computer interaction.

Existing solutions seem to be pretty limited. Motion capture suits often leave out fine hand detail, because their goal is to record broad character movements for video animation. Camera-based tracking is expensive and confined to a fixed capture area. Most VR gesture tracking is too coarse to follow individual fingers.

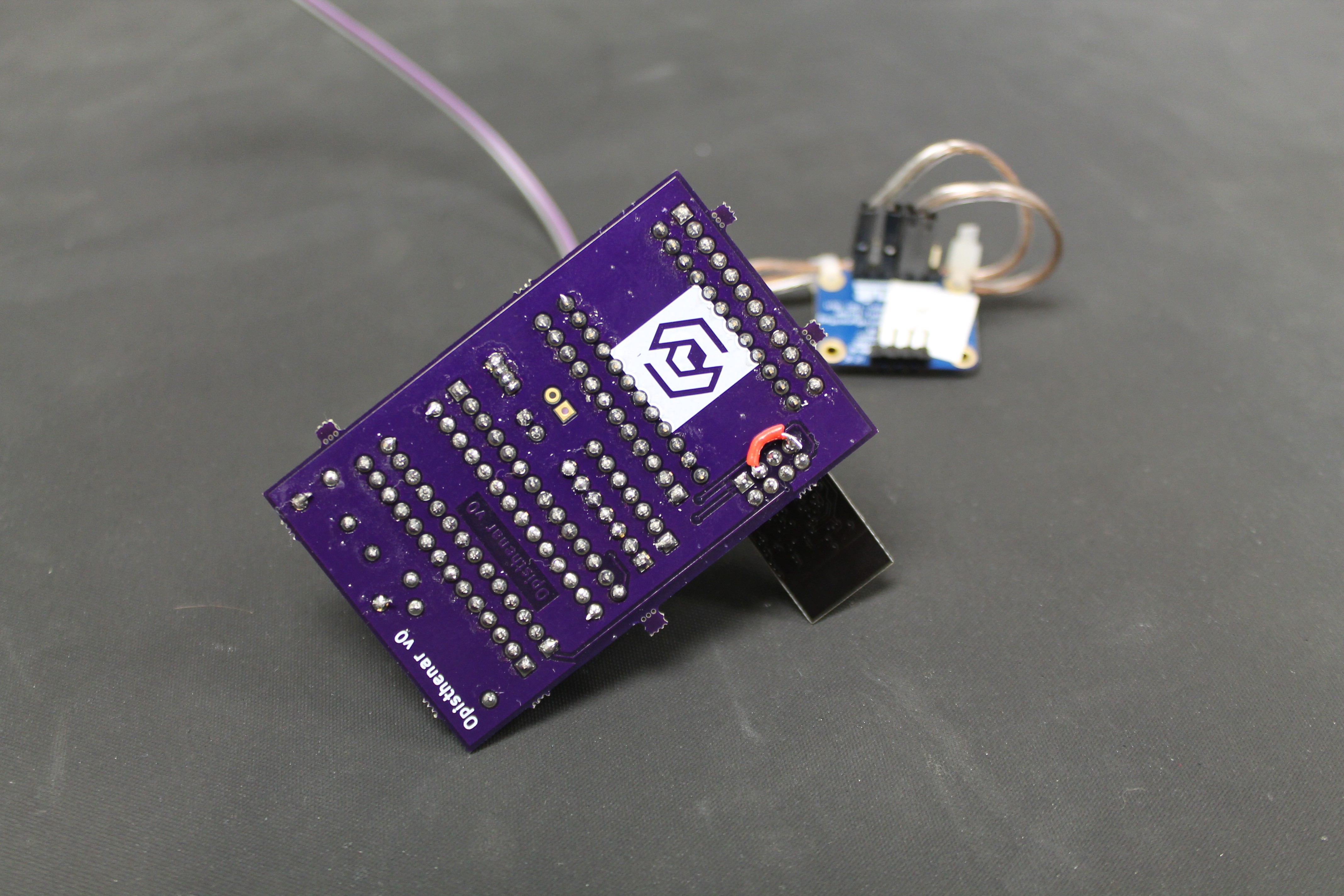

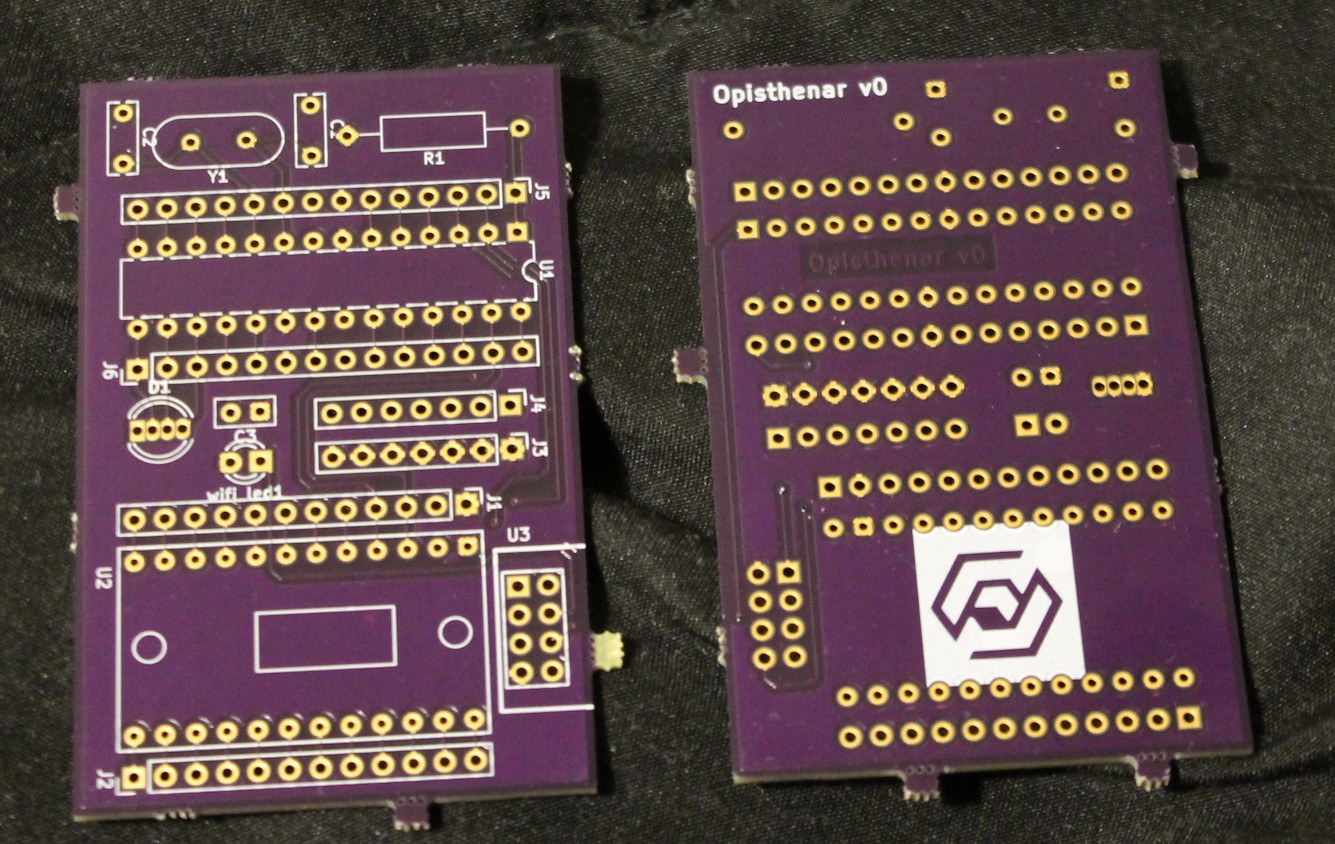

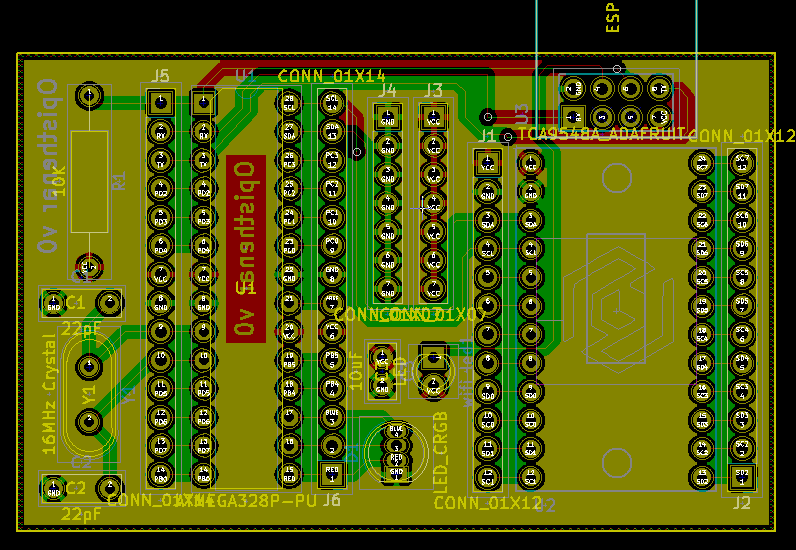

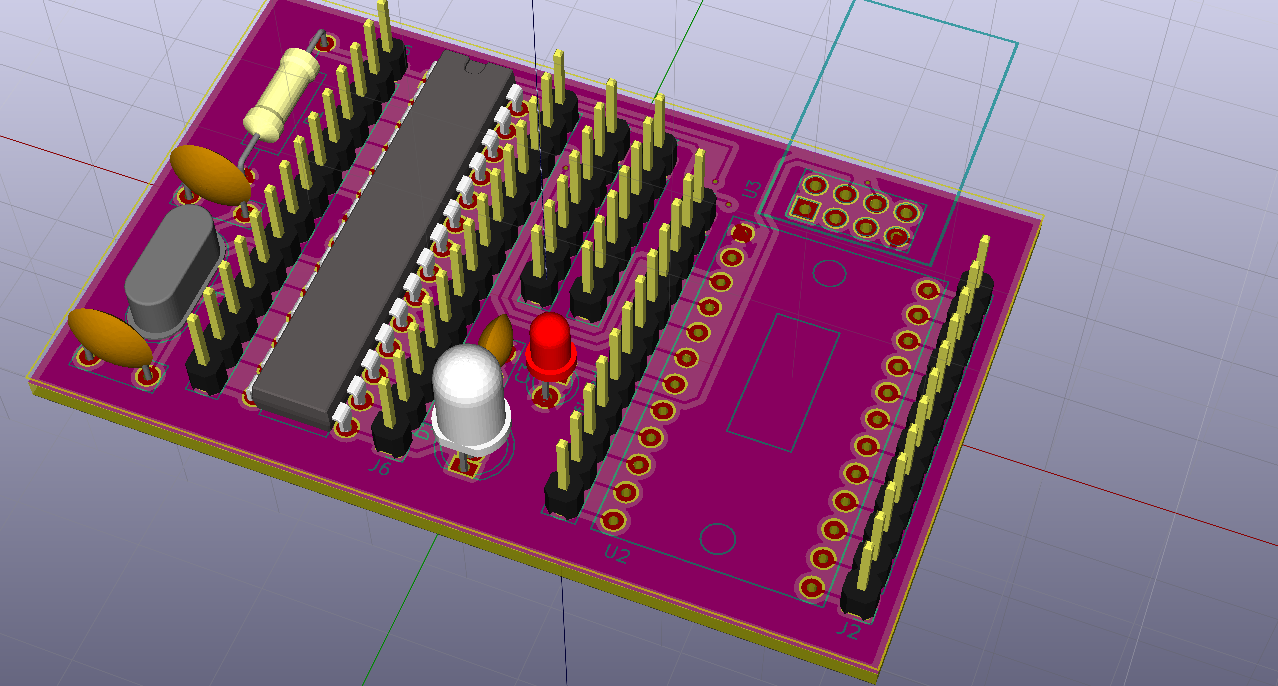

There are many other similar projects, but they tend to target a specific use case and wind up as bespoke hardware, bespoke firmware, and custom data transmission that talks to a single desktop application. I want to make a common platform that can serve many end uses, hopefully providing a robust and cost-effective base for more gesture hacking projects and even real-world use.

Regardless, sometimes it feels like I'm just one more hamster on the wheel.

How

A human factors lab at my local college is investigating gesture input methods for computing, and I want to build assistive tools. By combining these use cases, I hope to develop something with staying power. GPUs are a useful comparison: they are great for medical research, but the GPU industry's money comes from video games. If Gestum can be good at common gesture input, that user base may help make the system more available for other applications.

Who

Well, there's me, and some amazing folks over at H2I (Human Interface Innovation Lab) at a nearby college. The lab is working on using "natural" gestures as input to computers. During my undergrad I worked for H2I on their open source kit for this gesture input. Now that I've left, I still contribute to the project as a community member, because I'm interested in using the same tech to track physical therapy progress and interpret sign language.

Other Projects

There are several other open source projects with similar goals. Originally I just wanted to do sign language interpretation, but after reading about the other ways IMU gloves are being built for niche targets, I want to see if one platform could meet many end uses.

- https://hackaday.io/project/21657-sotto-a-silent-one-handed-modular-keyset
  - IMU gesture based chorded key entry
- http://hackaday.com/2017/07/10/hackaday-prize-entry-stroke-rehabilitation-through-biofeedback/
  - EXOmind, tracks stroke victims' rehabilitation through IMU glove
- http://jafari.tamu.edu/wp-content/uploads/2016/07/JBHI-00032-2016-final.pdf
  - Sign language interpretation
- https://hackaday.io/project/10233-onero-sign-language-translation-device
  - Sign language interpretation
- http://www.ece.mcmaster.ca/faculty/debruin/debruin/EE%204BI6/CapstoneFinalReport-Hands%20On.pdf
  - Sign language interpretation
- https://hackaday.com/2016/10/11/hackaday-prize-entry-handson-gloves-speaks-sign-language/
  - Sign language interpretation
- http://repository.uwyo.edu/cgi/viewcontent.cgi?article=1552&context=ugrd
  - Computer interface glove

Helpful Links

Information that I've found useful while working on this project.

- https://hackaday.io/project/12850-ideasx/log/47612-gesture-recognition-the-beginning
  - A project based on Assistive Tech buttons reviewing gesture recognition
- http://hackaday.com/2013/07/31/an-introduction-to-inertial-navigation-systems/
  - HackADay post introducing...
