Let's look at the functionality of both the physical hand prototype and its low-level control API. First, I will talk about the hand prototype’s design, cost, ability, and stability, and then discuss the low-level API’s design and performance.

The Hand Prototype

The robotic hand prototype was designed as a very simplified version of the human hand. Designing and building a robotic hand that approximates the dexterity of the human hand would have been great, but was not feasible given budget constraints and the fact that this has so far been an individual project. The prototype built is much simpler, but has enough dexterity to collect meaningful data and perform basic grasping operations.

The hand has five fingers and five degrees of freedom. Force is transmitted from five servo motors to the fingers through tendons routed in a Bowden system:

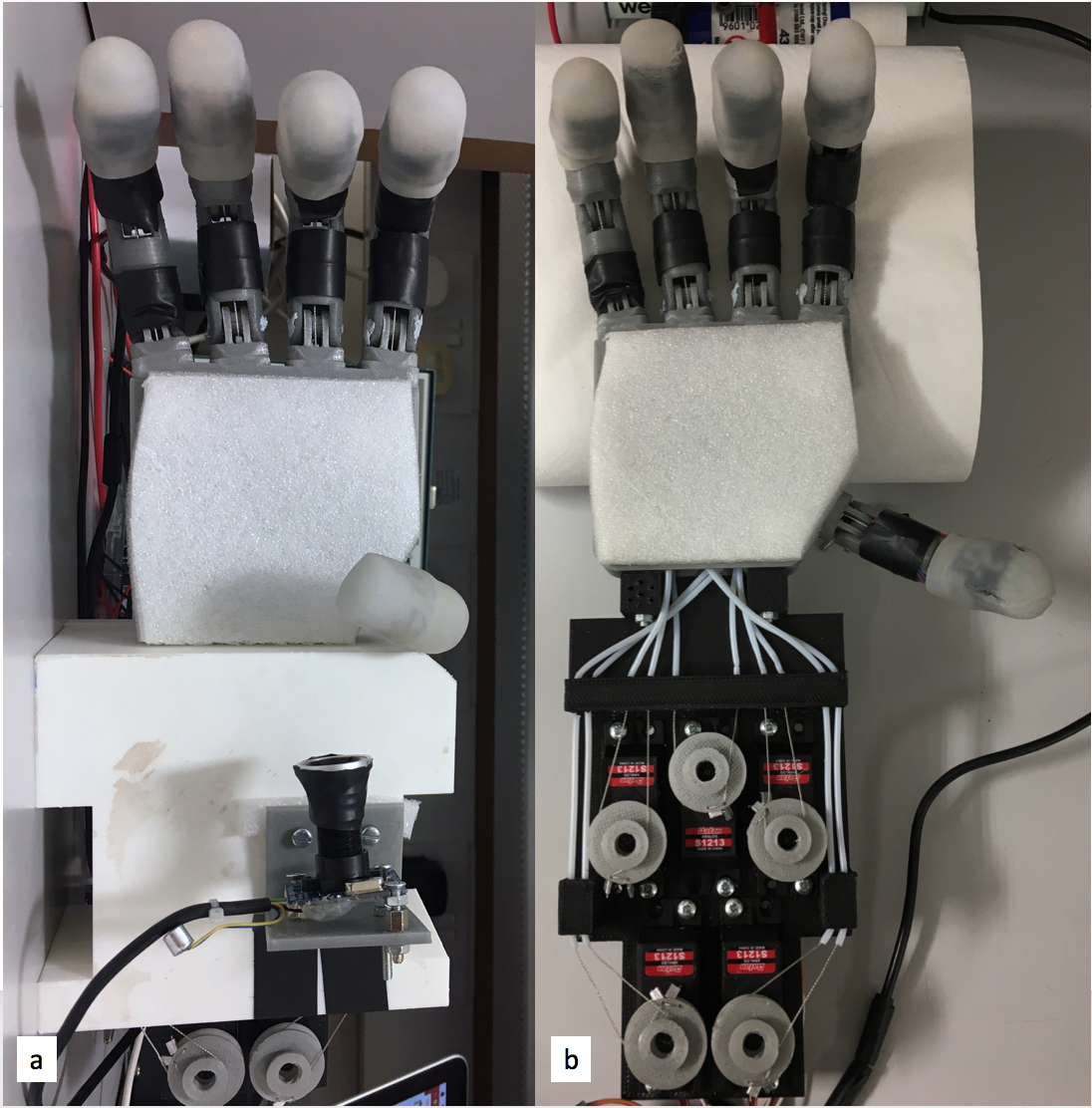

The fully assembled hand prototype with mounted camera and servo bed cover (a), and the hand prototype with the servo bed cover and camera removed to show the actuator and tendon system (b).

In its full assembly the hand weighs 655g.

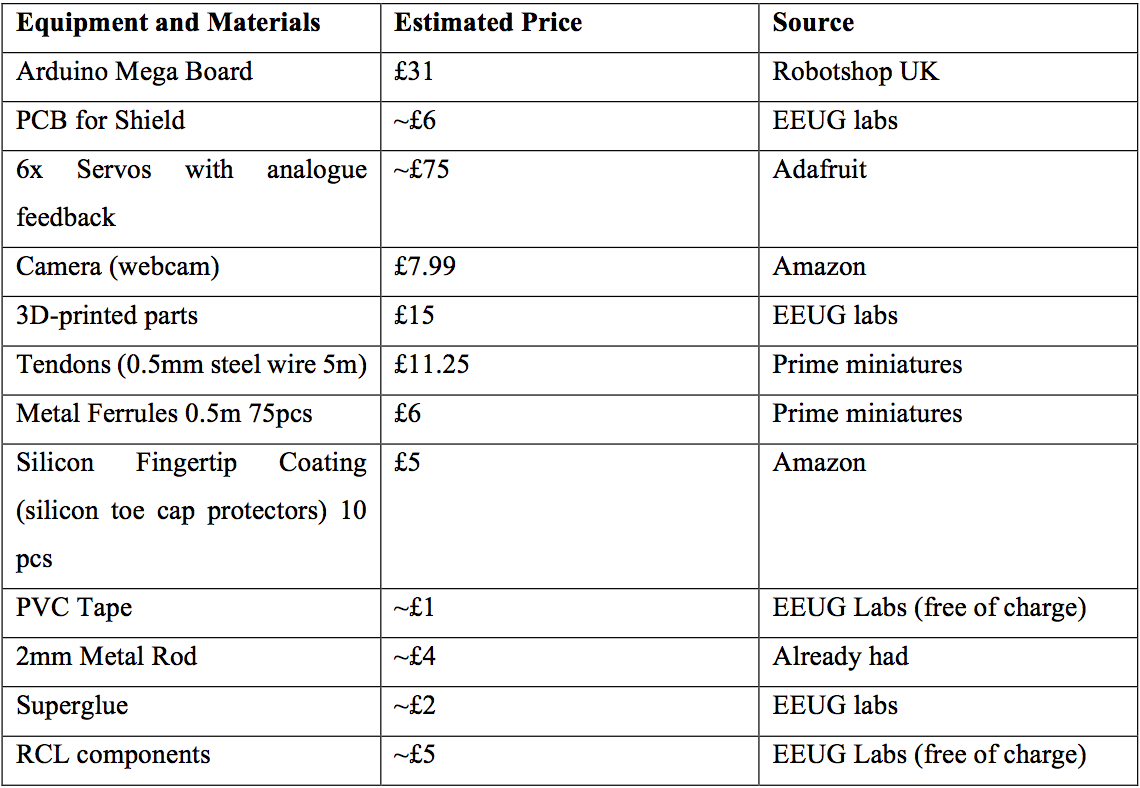

The overall cost of the hand is estimated at £170 (see Table below for a breakdown). This makes it a very low-cost smart prosthetic/robotic hand.

Flexibility and Ability

The tendon-based transmission system allows for the fingers to flex and extend, but not for abduction/adduction, hyper-extension, or individual control of any of the fingers’ phalanges:

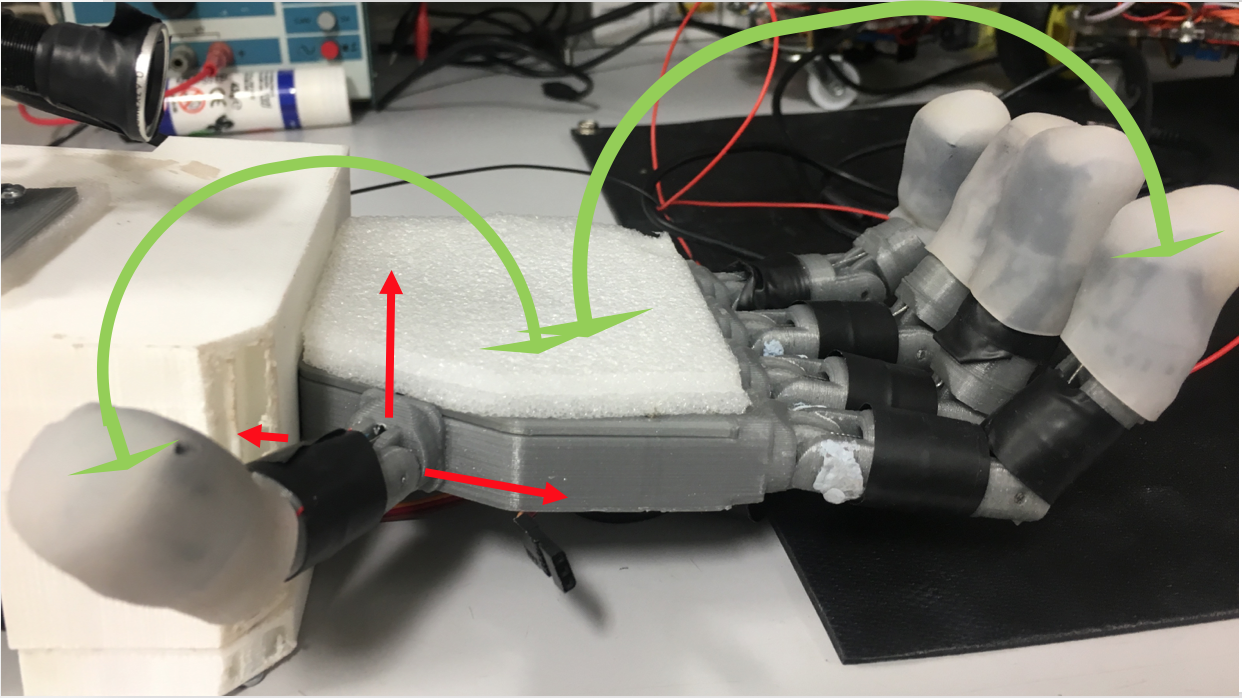

All fingers are capable of flexion and extension (indicated by green arrows for thumb and index), but not of abduction or adduction, whether radial or palmar (indicated for the thumb in red).

This limits the dexterity of the thumb compared to a human thumb. The robotic thumb is placed in a position that allows it to perform pinch grasps in conjunction with the index and/or the middle finger, but not with the ring or little finger:

The opposability of the thumb is limited to being able to perform pinch grasps with the index (a) and the middle finger (b), but not with the ring or little finger (c).

This makes it a compromise between a fully opposed thumb and what could be designed in the limited time available. In our tests the hand was able to perform both power and precision prismatic grasps (wrap and pinch).

Stability

Payloads of up to 220g were tested, which is just over a third of the hand prototype’s overall weight. Higher weights need to be tested in the future. Apart from tendons, no parts of the prototype have had to be replaced so far. Tendons are prone to snapping due to wear and tear, or when too much pressure is applied to an object. However, they are low-cost and easy to replace.

Comparison to Human Hand

The hand prototype is slightly larger than an average female hand and has a similar form factor to an average human hand. All fingers are spaced out and angled in a way that resembles a human hand in a relaxed open position, apart from the thumb, which is angled to approximate an opposed thumb:

Performance of Low-level API

The low-level API manages to reliably communicate with the underlying hardware by translating external opening and closing commands into motor control signals. The hand is able to open and close using the appropriate fingers specified by the input command.
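To make the translation step concrete, here is a minimal sketch of how an opening or closing command for a chosen set of fingers could be turned into one target angle per servo. The finger names, the angle values, and the function `command_to_targets` are assumptions made for illustration; the actual calibration values and internals of the low-level API are not given in this log.

```python
from typing import Dict, Iterable

# Hypothetical finger names, one servo per finger.
FINGERS = ("thumb", "index", "middle", "ring", "little")

# Assumed servo angles in degrees; the real calibration is not stated in the log.
OPEN_ANGLE = 0
CLOSED_ANGLE = 180


def command_to_targets(command: str, fingers: Iterable[str] = FINGERS) -> Dict[str, int]:
    """Translate an 'open' or 'close' command into a target angle per selected finger."""
    if command not in ("open", "close"):
        raise ValueError(f"unknown command: {command!r}")
    angle = OPEN_ANGLE if command == "open" else CLOSED_ANGLE
    targets = {}
    for finger in fingers:
        if finger not in FINGERS:
            raise ValueError(f"unknown finger: {finger!r}")
        targets[finger] = angle
    return targets
```

For example, `command_to_targets("close", ["thumb", "index"])` yields closed targets for only the two fingers involved in a pinch grasp, leaving the other servos untouched.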

The extended low-level API is capable of collecting and using servo feedback data. The feedback is used to reliably stop fingers from closing further once they meet too much resistance on making contact with an object. This protects the servo motors, the object in question, and the hand itself from damage. It also allows us to estimate each servo’s actual position. The collection of this information was an integral part of collecting ground truth for the data used to train the Convolutional Neural Networks that form the centre of the hand prototype’s high-level control.
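One way such a feedback-based stop could work is sketched below. This is not the project's actual code: the function names, the step size, and the error threshold are all assumptions. The idea is to close a finger in small steps, compare the commanded angle with the position reported by the servo, and stop once the servo lags too far behind, which suggests the finger is blocked by an object.

```python
from typing import Callable


def close_until_contact(
    read_position: Callable[[int], float],
    start: int = 0,
    end: int = 180,
    step: int = 5,
    max_error: float = 10.0,
) -> int:
    """Step the commanded angle from start towards end and stop on contact.

    read_position(commanded) is a hypothetical callback that sends the command
    and returns the angle the servo reports. Contact is assumed when the servo
    lags the command by more than max_error degrees (an assumed threshold).
    Returns the last commanded angle.
    """
    commanded = start
    while commanded < end:
        commanded = min(commanded + step, end)
        measured = read_position(commanded)
        if commanded - measured > max_error:
            break  # finger appears blocked by an object, stop closing
    return commanded


def blocked_servo(limit: float) -> Callable[[int], float]:
    """Simulated servo whose finger cannot move past `limit` degrees."""
    return lambda commanded: min(float(commanded), limit)
```

With the simulated servo, `close_until_contact(blocked_servo(90.0))` stops a few steps after the finger stalls at 90 degrees instead of driving the servo to its end position. The measured value at that point is also an estimate of the finger's actual position.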

The low-level API provides a simple interface to operate the hand, by using only two main functions (open_Hand and close_Hand).
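As a rough illustration of what using such a two-function interface might look like, the sketch below defines stand-ins for `open_Hand` and `close_Hand`. Only the two function names come from this log; the `fingers` argument, the finger names, and the `sent` list that stands in for the hardware link are assumptions.

```python
from typing import List, Sequence, Tuple

# Hypothetical finger names; the real identifiers are not given in the log.
ALL_FINGERS = ("thumb", "index", "middle", "ring", "little")

# Stand-in for the hardware link: each entry is (finger, action).
sent: List[Tuple[str, str]] = []


def open_Hand(fingers: Sequence[str] = ALL_FINGERS) -> None:
    """Record an 'open' action for each selected finger (assumed signature)."""
    for finger in fingers:
        sent.append((finger, "open"))


def close_Hand(fingers: Sequence[str] = ALL_FINGERS) -> None:
    """Record a 'close' action for each selected finger (assumed signature)."""
    for finger in fingers:
        sent.append((finger, "close"))


# Pinch grasp with thumb and index, then release the whole hand.
close_Hand(["thumb", "index"])
open_Hand()
```

Keeping the interface this small means the high-level control only has to decide which fingers to use and when to open or close them.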

In the next logs I will describe the process of collecting training data, as well as designing and writing the Convolutional Neural Network driven high-level control that gives the hand its intelligence.

Stephanie Stoll
