Over the past couple months, I've been tinkering with machine learning to try and train an object detection neural network that can detect playing cards. The OpenCV algorithm I used (described in this video) works great at detecting cards, but it doesn't work if the cards are overlapping even the slightest bit. Unfortunately, blackjack is always dealt with the cards overlapping. If my blackjack robot is going to work, it needs to be able to count cards even when they're overlapping.

Someone told me that I might be able to train an object detection classifier (a type of neural network) to recognize the cards even if they're partially obscured or overlapping. Object detection classifiers recognize patterns to identify objects, so they only need to see a portion of the object to detect it. I decided to use Google's TensorFlow machine learning framework to train a playing card detection classifier.

I've spent lots of time learning about machine learning (enough to make a tutorial showing how to train your own) and I've taken hundreds of pictures of playing cards to feed to the training API. Unfortunately, it's starting to seem like machine learning isn't going to be the silver bullet I hoped it would be. The trained playing card detector just doesn't work very well.

For the most part, it works great when it has a clear view of the cards (so does my OpenCV algorithm):

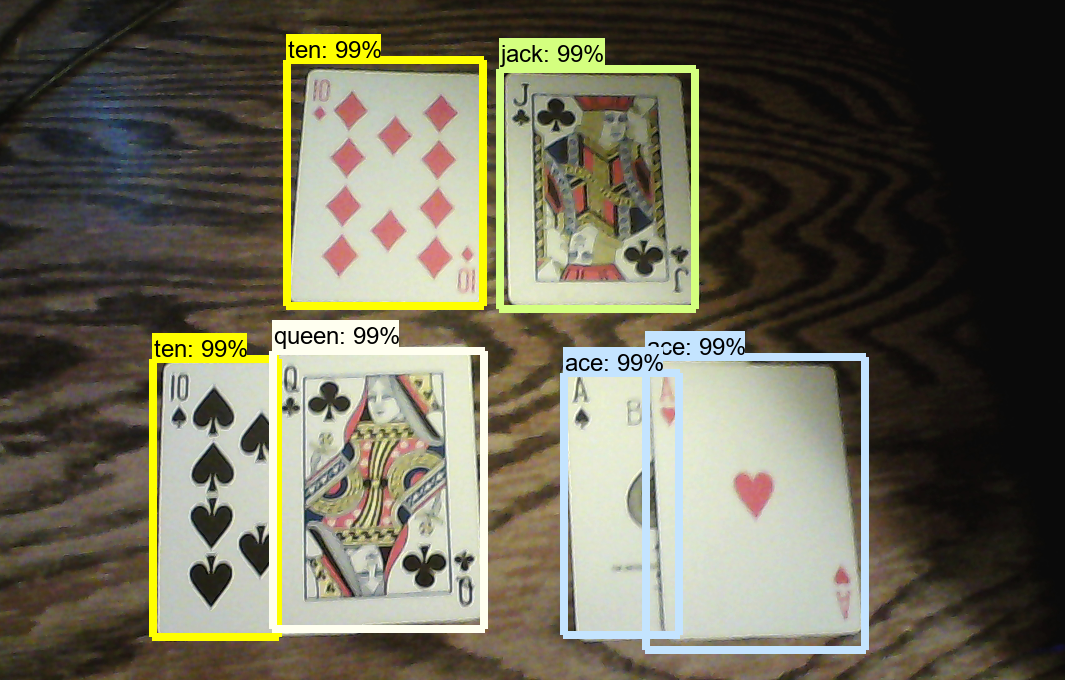

And it even works if the cards are overlapping:

But if I deal some actual blackjack hands in front of the camera, the way it would be done in a casino, it isn't able to detect all the cards. The cards are too overlapped for it to see all the cards.

Right now, it isn't trained well enough to distinguish that there are two cards in each hand. It only sees the top card. It's possible that if I fed the trainer hundreds more clearly labeled pictures of overlapping cards, it might be able to see both the cards. I've already given it 367 training pictures, but maybe it will work better if I give it 1,000 more. Also, it is still a little inaccurate and sometimes incorrectly identifies cards. More training data might help with this, too.

However, there are some other problems. My classifier is trained off Google's Faster RCNN Inception model, which takes lots of processing power. I want to run my blackjack robot on a Raspberry Pi, which has limited processing power. I tried using the lower-power MobileNet-SSD model, but it doesn't work very well at identifying individual cards. I need to find a way to keep the processing requirements low while still having good accuracy.

Also, I only have the detector trained to recognize card ranks nine, ten, jack, queen, king, and ace. It will take lots more training pictures to get it to work with every card rank. I have a sneaking suspicion that it won't work as well on the lower numbers (four is very similar to five, etc.).

However, I'm still going to try! My next step is to train the detector to recognize ALL cards, not just nine through ace. Then, I'll run it on a Raspberry Pi and see if it's still able to detect cards fast enough, and make a YouTube video about it.

I'm still trying to think of how I might be able to get it to work with the cards overlapping. I think the solution will involve a combination of machine learning and some image processing with OpenCV. Please let me know if you have any ideas!

Evan Juras

Evan Juras

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.

Something I always wanted to experiment with is semi automated dataset creation for these kind of very limited specific detection applications. F.e. you seem to already reliably detect bounding boxes of cards in OpenCV, you maybe then just make a video of you playing cards and then let OpenCV create bounding boxes for the cards and then only have to assign labels manually (or let your old algorithm do that as best as it can and only correct the mistakes).

Something in that vein.

For a generic approach I wanted to use a standard photo jig where you have a rotating table where you place the object on to get a photo of every possible angle for your object.

For applications that are also only happening in a specific place like a table I also wanted to try if I could photograph the object on a green screen and then rotoscope the object to place it automatically on a large data set of photos of different materials that tables are usually made out of and or just a large dataset of tables if that should exist :)

Are you sure? yes | no

It's definitely possible to generate synthetic images for training. After I posted this, @geaxgx linked me this video, which shows how he used synthetic images to train a playing card detector: https://www.youtube.com/watch?v=pnntrewH0xg

It's impressive! I couldn't believe how well it worked, so I followed in his steps and did the same thing. He provided code that allows you to do it on your own. https://github.com/geaxgx/playing-card-detection

Now my playing card detector works like a charm: https://twitter.com/EdjeElectronics/status/1054141549188022272 . I will be making a video about it in the coming months!

Are you sure? yes | no

Oh thats super interesting! Thanks for the links :)

Looking forward to the update.

Are you sure? yes | no