Developed in Python to leverage state-of-the-art extensibility and easily integrated plugins.

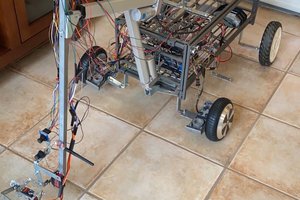

Able to use low-cost robots with the accuracy and dexterity of much more expensive robots, achieved in part by:

a) Ability to drive movement with inexpensive DC gear-head motors, including the inherent ability to specify movement by applied force rather than the strict position-over-time control that stepper motors impose.

b) Arms and linkages don't need super-tight tolerances, because accuracy comes from tightly integrated visual feedback that is easy to program and needs only low-complexity processing.

Ability to program easily with a GUI and visual interface that sees things from the robot's camera perspective.

Flexible use of coordinate spaces that makes programming arms with 6 or more degrees of freedom super easy. Got a bot arm with 13 linkages that can reach around corners? Easy. A simple arm with only 2 degrees of freedom, one polar and one Cartesian? Any arm geometry works.
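The project does not show how arm geometries are described, so the following is only an illustration of how arbitrary joint chains can be mapped into one common Cartesian space. It is a minimal planar forward-kinematics sketch: `Joint` and `forward_kinematics` are hypothetical names, and the model assumes a 2D serial chain in which each joint is either revolute (polar) or prismatic (Cartesian).

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Joint:
    """One joint of a planar serial arm (hypothetical description format)."""
    kind: str           # "revolute" (polar: rotates) or "prismatic" (Cartesian: slides)
    link_length: float  # fixed length of the link that follows the joint


def forward_kinematics(joints: List[Joint], values: List[float]) -> Tuple[float, float]:
    """Map joint values to the (x, y) position of the arm's tip.

    Revolute values are angles in radians added to the current heading;
    prismatic values are extra extension along the current heading.
    """
    if len(joints) != len(values):
        raise ValueError("need exactly one value per joint")
    x = y = heading = 0.0
    for joint, value in zip(joints, values):
        if joint.kind == "revolute":
            heading += value
            reach = joint.link_length
        elif joint.kind == "prismatic":
            reach = joint.link_length + value
        else:
            raise ValueError(f"unknown joint kind: {joint.kind!r}")
        # Advance the tip along the current heading by this link's reach.
        x += reach * math.cos(heading)
        y += reach * math.sin(heading)
    return x, y


# A 2-DOF arm: one rotary (polar) joint followed by one linear (Cartesian) slide.
arm = [Joint("revolute", 0.0), Joint("prismatic", 1.0)]
print(forward_kinematics(arm, [math.pi / 2, 0.5]))  # approximately (0.0, 1.5)
```

A longer chain, such as the 13-linkage example, is just a longer list of joints; going the other way (from a target point back to joint values) is a separate problem that this sketch does not attempt.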

caltadaniel

Petar Crnjak

BTom

maloneth4

Updated the general parameter optimizer. The parameter optimizer is an engine that generates a series of guesses for the best parameter values based on scoring feedback.

This helps robots by tuning the PID values that control inexpensive DC gear-head motors, so that the motors behave as predictably as more expensive (and generally much weaker) servos.
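For reference, the three PID values being tuned are the proportional, integral and derivative gains. A minimal textbook discrete PID update might look like the sketch below; the `PID` class and its `update` method are hypothetical and are not the project's own controller.

```python
from typing import Optional


class PID:
    """Textbook discrete PID controller (hypothetical sketch, not the project's class)."""

    def __init__(self, kp: float, ki: float, kd: float):
        self.kp, self.ki, self.kd = kp, ki, kd  # the gains an optimizer would tune
        self.integral = 0.0
        self.prev_error: Optional[float] = None  # no derivative term on the first step

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        """Return the drive command (e.g. motor power) for one time step of length dt."""
        error = setpoint - measured
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

In the workflow described here, `kp`, `ki` and `kd` would be the parameter values handed back by the optimizer for each trial run.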

To use the optimizer, set up and configure the class with a description of the parameters to work on, then call the member function OptimizeIteration(last_score). Pass it the score of the last iteration (more positive is better; None if you're just starting), and it hands back a dictionary of parameter values to try next. Then run your machine (whatever it is) with those parameters, evaluate how well it worked, assign a score, and repeat until OptimizeIteration returns None, indicating that it thinks it has found the best possible values.
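The calling loop described above can be sketched as follows. `CandidateListOptimizer` and `run_optimizer` are hypothetical: the optimizer merely walks a fixed list of guesses so that the loop has something to drive, while mimicking the `OptimizeIteration(last_score)` contract as described (dictionary out, `None` when done).

```python
from typing import Callable, Dict, List, Optional

Params = Dict[str, float]


class CandidateListOptimizer:
    """Hypothetical stand-in following the OptimizeIteration(last_score) contract."""

    def __init__(self, candidates: List[Params]):
        self.candidates = [dict(c) for c in candidates]
        self.index = -1                      # candidate most recently handed out
        self.best: Optional[Params] = None
        self.best_score = float("-inf")

    def OptimizeIteration(self, last_score: Optional[float]) -> Optional[Params]:
        # Credit the score to the parameters handed out on the previous call.
        if (last_score is not None and 0 <= self.index < len(self.candidates)
                and last_score > self.best_score):
            self.best, self.best_score = self.candidates[self.index], last_score
        self.index += 1
        if self.index >= len(self.candidates):
            return None                      # nothing left to try: search is done
        return dict(self.candidates[self.index])


def run_optimizer(optimizer, evaluate: Callable[[Params], float]) -> None:
    """Drive any optimizer that follows the OptimizeIteration protocol."""
    score = None                             # None means "just starting"
    while True:
        params = optimizer.OptimizeIteration(score)
        if params is None:
            break                            # optimizer thinks it has the best values
        score = evaluate(params)             # run the machine, then score the run


opt = CandidateListOptimizer([{"kp": 1.0}, {"kp": 2.0}, {"kp": 3.0}])
run_optimizer(opt, lambda p: -(p["kp"] - 2.0) ** 2)
print(opt.best)  # {'kp': 2.0}
```

A real optimizer would choose each new guess from the scores it has seen so far; the loop itself stays the same whichever search class sits behind it.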

The update to the optimizer allows a higher-level controller to manage the type of search that takes place, with a separate class defined for each type of search.

The first class is IsoParameterOptimizer, which, as its name implies, changes only one parameter at a time until it finds a local maximum for that parameter alone; it then finds a new local maximum for the next parameter, and so on. It keeps cycling through the parameters this way until none of them moves, at which point it declares the result good enough and returns None to indicate that a wide local maximum has been found.
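A coordinate-ascent search in the spirit of IsoParameterOptimizer could be sketched like this. It is not the project's implementation: `IsoParameterSketch`, the fixed per-parameter step sizes and the `max_evals` budget are all assumptions. A Python generator holds the search state between calls, so each `OptimizeIteration` call resumes the search exactly where it paused.

```python
from typing import Dict, Generator, Optional

Params = Dict[str, float]


class IsoParameterSketch:
    """Hypothetical one-parameter-at-a-time optimizer using OptimizeIteration."""

    def __init__(self, start: Params, steps: Params, max_evals: int = 1000):
        self.best: Params = dict(start)
        self.best_score = float("-inf")
        self._gen = self._search(steps, max_evals)
        self._started = False

    def OptimizeIteration(self, last_score: Optional[float]) -> Optional[Params]:
        try:
            if not self._started:
                self._started = True
                return next(self._gen)         # first guess: the starting point
            return self._gen.send(last_score)  # feed back the last score
        except StopIteration:
            return None                        # search finished

    def _search(self, steps: Params, max_evals: int) -> Generator[Params, float, None]:
        self.best_score = yield dict(self.best)
        evals = 1
        moved = True
        while moved:                           # repeat passes until nothing moves
            moved = False
            for name, step in steps.items():
                for direction in (1, -1):
                    improved = False
                    # Climb along this single axis while the score keeps improving.
                    while evals < max_evals:
                        trial = dict(self.best)
                        trial[name] += direction * step
                        score = yield trial
                        evals += 1
                        if score > self.best_score:
                            self.best, self.best_score = trial, score
                            improved = moved = True
                        else:
                            break
                    if improved:
                        break                  # no need to try the other direction


def objective(p: Params) -> float:
    return -(p["kp"] - 3.0) ** 2 - (p["ki"] - 1.0) ** 2


opt = IsoParameterSketch({"kp": 0.0, "ki": 0.0}, {"kp": 1.0, "ki": 0.5})
score = None
while (params := opt.OptimizeIteration(score)) is not None:
    score = objective(params)
print(opt.best)  # {'kp': 3.0, 'ki': 1.0}
```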

A second class is SearchRadiusOptimizer, which tests a number of values all around a central point, in as many dimensions as there are parameters. Be careful using it: because the number of surrounding points grows exponentially with the number of parameters, the iteration count can climb very high very fast with 3 or more parameters.
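To see why a search radius gets expensive, assume each parameter is tried one step below, at, and one step above the center (a hypothetical sampling scheme; SearchRadiusOptimizer may sample differently). Every combination of offsets is then a candidate, giving 3**n - 1 neighbors for n parameters. `radius_neighbors` is an illustrative helper, not part of the project.

```python
import itertools
from typing import Dict, List

Params = Dict[str, float]


def radius_neighbors(center: Params, steps: Params) -> List[Params]:
    """Hypothetical helper: every combination of -1, 0, +1 steps per parameter."""
    names = list(center)
    points = []
    for offsets in itertools.product((-1, 0, 1), repeat=len(names)):
        if all(o == 0 for o in offsets):
            continue  # the center itself is not a neighbor
        points.append({name: center[name] + o * steps[name]
                       for name, o in zip(names, offsets)})
    return points


for dims in range(1, 7):
    names = [f"p{i}" for i in range(dims)]
    center = {name: 0.0 for name in names}
    steps = {name: 1.0 for name in names}
    print(dims, len(radius_neighbors(center, steps)))
# Neighbor counts for dims 1..6: 2, 8, 26, 80, 242, 728
```

Each of those neighbors costs one full run of the machine, which is why a one-parameter-at-a-time search is usually the cheaper starting point.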

For scoring PID performance, a scoring engine (PIDScoring) is defined that deducts points for deviation from the setpoint, excessive acceleration, excessive jerk (the third derivative of position), and overshoot. The size of each penalty is user-defined.
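A scoring function along the lines of PIDScoring might look like the sketch below. `PenaltyWeights`, `score_response`, the `max_accel`/`max_jerk` thresholds, and the use of mean squared error for "variance from the set value" are all assumptions; derivatives are estimated by finite differences of position samples taken every `dt` seconds. The result is negated so that, as with OptimizeIteration, higher is better.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class PenaltyWeights:
    """User-defined penalty sizes (hypothetical names and units)."""
    deviation: float = 1.0       # per unit of mean squared error from the setpoint
    acceleration: float = 0.0    # per unit of |acceleration| above max_accel
    jerk: float = 0.0            # per unit of |jerk| above max_jerk
    overshoot: float = 0.0       # per unit of travel past the setpoint
    max_accel: float = float("inf")
    max_jerk: float = float("inf")


def score_response(positions: Sequence[float], setpoint: float, dt: float,
                   w: PenaltyWeights) -> float:
    """Score a step response sampled every dt seconds; higher is better, 0 is perfect."""
    if not positions:
        raise ValueError("need at least one position sample")
    deviation = sum((p - setpoint) ** 2 for p in positions) / len(positions)
    # Finite-difference estimates of velocity, acceleration and jerk.
    vel = [(b - a) / dt for a, b in zip(positions, positions[1:])]
    acc = [(b - a) / dt for a, b in zip(vel, vel[1:])]
    jerk = [(b - a) / dt for a, b in zip(acc, acc[1:])]
    excess_acc = sum(max(0.0, abs(a) - w.max_accel) for a in acc)
    excess_jerk = sum(max(0.0, abs(j) - w.max_jerk) for j in jerk)
    # Overshoot: how far the response travelled past the setpoint.
    if setpoint >= positions[0]:
        overshoot = max(0.0, max(positions) - setpoint)
    else:
        overshoot = max(0.0, setpoint - min(positions))
    penalty = (w.deviation * deviation + w.acceleration * excess_acc
               + w.jerk * excess_jerk + w.overshoot * overshoot)
    return -penalty
```

Because the value is already "more positive is better", it could be handed straight back to the optimizer as `last_score`.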