OVERVIEW

The vest uses 470 WS2812 LEDs on strips, with a Teensy 3.0 to drive the lights. It currently plays pre-rendered animations: the plasma animations are generated in Processing, and a C# app extracts video data and compiles it into LED data.

.

VEST CONSTRUCTION

I sewed the vest myself by tracing a regular vest, cutting out the pieces and putting them together. (Thanks for the lend of the sewing machine, Mum!)

The inside uses regular jacket lining so that it slips around freely and does not get caught, which could stretch the electronics and break them.

White fur was attached over the top of the vest for diffusion.

A zipper was used in the bottom to permit access to the electronics.

.

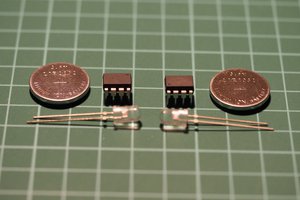

LEDS

470 WS2812 LEDs on regular non-waterproof strips, 30 per meter, were cut to size so that they could be mounted vertically. This keeps bending of the strips to a minimum.

Each strip was covered with 12mm clear heat shrink to protect the LEDs and keep water and playa dust off.

The strips run in one continuous sequence, up-down-up-down, around the entire vest, with the lights at uniform spacing to create a square grid of lights.
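As an illustration of the up-down-up-down wiring, here is a minimal Python sketch that turns a position along the strip into a grid position. It is not the code used on the vest: the function name `serpentine_coords`, the bottom-aligned columns, and the choice that the first column runs upward are all assumptions made for the example.

```python
def serpentine_coords(column_lengths):
    """Return one (x, y) grid position per LED, in strip order.

    Assumptions for this sketch: columns are bottom-aligned, the first
    column runs upward, and the direction flips on every column.
    """
    coords = []
    for x, length in enumerate(column_lengths):
        ys = range(length)          # y = 0 is the bottom of the vest
        if x % 2 == 1:              # odd columns run from top to bottom
            ys = reversed(ys)
        coords.extend((x, y) for y in ys)
    return coords


# Hypothetical layout: 3 columns of 4 LEDs each.
layout = serpentine_coords([4, 4, 4])
```

In this hypothetical layout, the LED after the top of column 0 is the top of column 1, so neighbouring strip positions stay neighbours on the grid.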

.

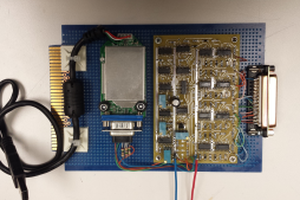

MICROCONTROLLER

After several attempts to generate plasma live on the Teensy 3.0, which worked but only at a low frame rate of 4 fps because of the hefty maths, I opted for precompiled animations. Playback now runs at 31 frames per second, governed back to 25.
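Governing 31 fps back to 25 comes down to simple arithmetic: a 25 fps frame lasts 40 ms, a frame that is ready in 1/31 s (about 32 ms) leaves roughly 8 ms to wait. The sketch below shows that idea in Python. It is only an illustration, not the Teensy code, and `remaining_delay`, `play` and `show` are hypothetical names.

```python
import time

TARGET_FPS = 25
FRAME_PERIOD = 1.0 / TARGET_FPS   # 0.04 s per frame


def remaining_delay(frame_started, now, period=FRAME_PERIOD):
    """Seconds left to wait so the frame lasts one full period (never negative)."""
    return max(0.0, period - (now - frame_started))


def play(frames, show, clock=time.monotonic, sleep=time.sleep):
    """Show each frame, then wait out the rest of its time slot."""
    for frame in frames:
        started = clock()
        show(frame)   # hypothetical callback that pushes the frame to the LEDs
        sleep(remaining_delay(started, clock()))
```

Measuring the time already spent on each frame, rather than sleeping a fixed amount, keeps the rate steady even when some frames take longer to load than others.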

.

ANIMATION METHODS

The trick to getting the entire vest to operate like a canvas, rather than just turning lights on and off, is to use an index of 470 LED objects, each holding the correct relative X-Y coordinates of the corresponding LED.

I used Processing to create the plasma effect frame by frame. As each frame is rendered in Processing, I grab the colors of the pixels from the generated image, based on the XY coordinates of all the LED objects in the index.

The data is collected pixel by pixel, and then stored in a file as raw color data, with three bytes per pixel.
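The sampling step described above can be sketched as follows. This is a plain-Python illustration, not the Processing code used for the vest: the frame is assumed to be a list of rows of `(r, g, b)` tuples, and `sample_frame`, `frame` and `leds` are hypothetical names.

```python
def sample_frame(frame, led_coords):
    """Return raw R, G, B bytes for one frame, in LED order.

    frame      -- rows of (r, g, b) tuples, indexed as frame[y][x]
    led_coords -- one (x, y) pixel position per LED, in strip order
    """
    out = bytearray()
    for x, y in led_coords:
        r, g, b = frame[y][x]
        out += bytes((r, g, b))   # three bytes per LED: red, green, blue
    return bytes(out)


# Hypothetical 2x2 image and three LEDs, just to show the shape of the data.
frame = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (10, 20, 30)],
]
leds = [(0, 0), (1, 0), (1, 1)]
data = sample_frame(frame, leds)
```

Appending the result of `sample_frame` for every rendered frame to one file gives the raw three-bytes-per-pixel layout.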

.

PLAYBACK

The data is stored in sequence, red-green-blue, from the first LED to the last in each frame, and the frames are placed one after the other. The file length in bytes is:

LedCount * 3 * NumberOfFrames

The first three bytes are the red, green and blue colors for the first LED; the fourth, fifth and sixth bytes are the red, green and blue colors for the second LED.

In total there are 1410 bytes per frame, which is 470 * 3.
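The layout arithmetic can be written down as a small Python sketch. The names (`file_length`, `frame_offset`, `read_frame`) are made up for this example, frames are counted from 0, and an in-memory buffer stands in for the real file.

```python
import io

LED_COUNT = 470
BYTES_PER_LED = 3
FRAME_SIZE = LED_COUNT * BYTES_PER_LED   # 1410 bytes per frame


def file_length(frame_count):
    """Total file size in bytes: LedCount * 3 * NumberOfFrames."""
    return FRAME_SIZE * frame_count


def frame_offset(frame_index):
    """Byte offset where a frame starts, counting frames from 0."""
    return frame_index * FRAME_SIZE


def read_frame(f, frame_index):
    """Read one whole frame from a seekable binary file object."""
    f.seek(frame_offset(frame_index))
    data = f.read(FRAME_SIZE)
    if len(data) != FRAME_SIZE:
        raise EOFError("incomplete frame")
    return data


# Hypothetical two-frame file: frame 0 is all 1s, frame 1 is all 2s.
fake_file = io.BytesIO(bytes([1]) * FRAME_SIZE + bytes([2]) * FRAME_SIZE)
second = read_frame(fake_file, 1)
```

With zero-based offsets, frame 1 starts at offset 1410, which is the 1411th byte of the file.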

It happens that RGB colors extracted from a bitmap image (as separate red, green and blue bytes) are the same as those used on the WS2811, so you can simply grab the pixel color at the corresponding XY coordinate from each frame generated in Processing and send it to the corresponding LED.

For video mapping, as with frames generated in Processing, I simply process each frame of the video: I iterate over the LED array, get the pixel color at each LED's XY coordinate from the frame image, and store the colors as raw bytes in a file.

To play back the precompiled frames, I have a Teensy 3.0 with an SD card attached.

Using the NeoPixel library to manage the strip of LEDs, I simply get the first three bytes from the file, pack them end to end into a single integer (which is 32 bits, but the NeoPixel library just ignores the 8 most significant bits) and copy the integer into the first location of the strip object. The second three bytes from the file go to the second LED in the strip object, and so on for all 470 lights in that frame.
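The packing step can be illustrated in Python as below. This is a sketch of the bit arithmetic only, not the Teensy code: `pack_rgb` and `unpack_frame` are hypothetical names, and the layout with red in bits 16-23, green in bits 8-15 and blue in bits 0-7 is my understanding of the packed color format the NeoPixel library expects.

```python
def pack_rgb(r, g, b):
    """Pack three 8-bit channels into one integer laid out as 0x00RRGGBB."""
    return (r << 16) | (g << 8) | b


def unpack_frame(frame_bytes):
    """Turn one frame of raw R, G, B bytes into a list of packed colors."""
    if len(frame_bytes) % 3 != 0:
        raise ValueError("frame length must be a multiple of 3")
    return [
        pack_rgb(*frame_bytes[i:i + 3])       # bytes i, i+1, i+2 = R, G, B
        for i in range(0, len(frame_bytes), 3)
    ]


# Hypothetical two-LED frame.
colors = unpack_frame(bytes([255, 128, 0, 1, 2, 3]))
```

Each entry in `colors` would then be written to the LED with the same index in the strip object.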

To play the next frame, just get the next lot of bytes from the file, which would start at the 1411th byte...

finchronicity
