For the past couple of weeks I've been playing with a Project Tango dev kit, the 3D scanning tablet from Google. The tablet contains an integrated depth sensor, a motion tracking camera, a 4MP camera, and is jam-packed with sensors for 6DOF tracking. Understanding depth lets your virtual world interact with the real world in new ways.

The Project Tango prototype is an Android smartphone-like device that tracks its own 3D motion and creates a 3D model of the environment around it.

https://www.google.com/atap/project-tango/about-project-tango/

Project Tango technology gives a mobile device the ability to navigate the physical world similar to how we do as humans. Project Tango brings a new kind of spatial perception to the Android device platform by adding advanced computer vision, image processing, and special vision sensors. Project Tango devices can use these visual cues to help recognize the world around them. They can self-correct errors in motion tracking and relocalize in areas they've seen before.

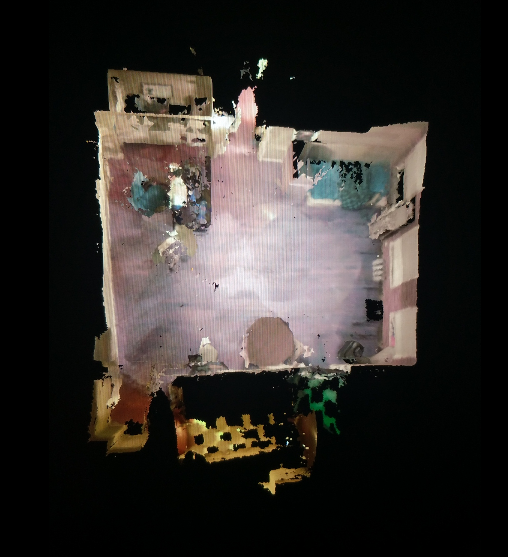

I already had experience importing 3D scans into JanusVR, but this was the first time I had physical access to the location of the scan, allowing me to do some very interesting mixed reality experiments. I began by scanning my apartment

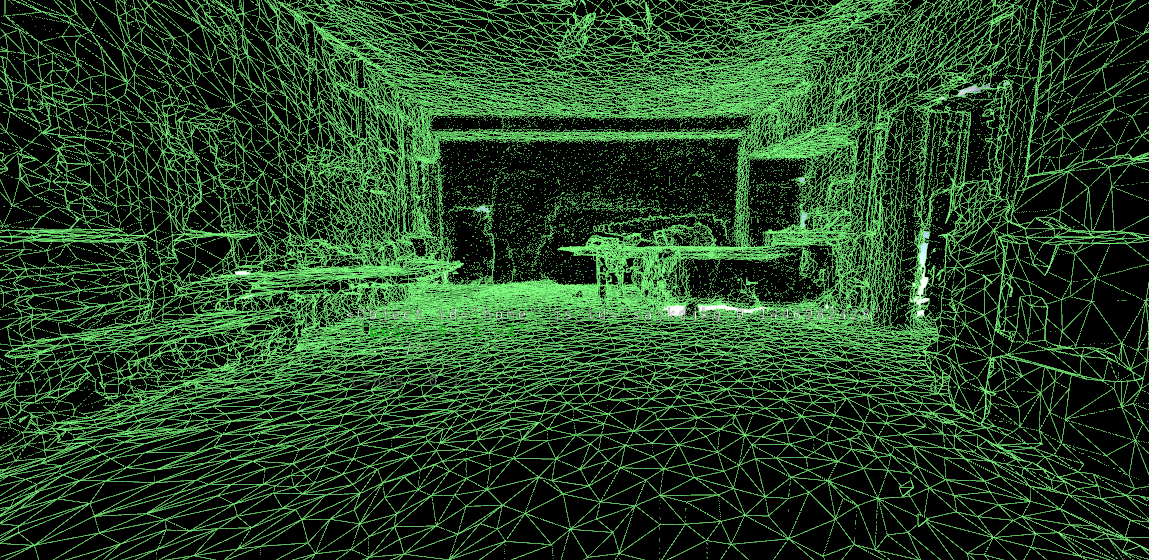

and soon realized how quickly this can generate a mighty fuckton of triangles:

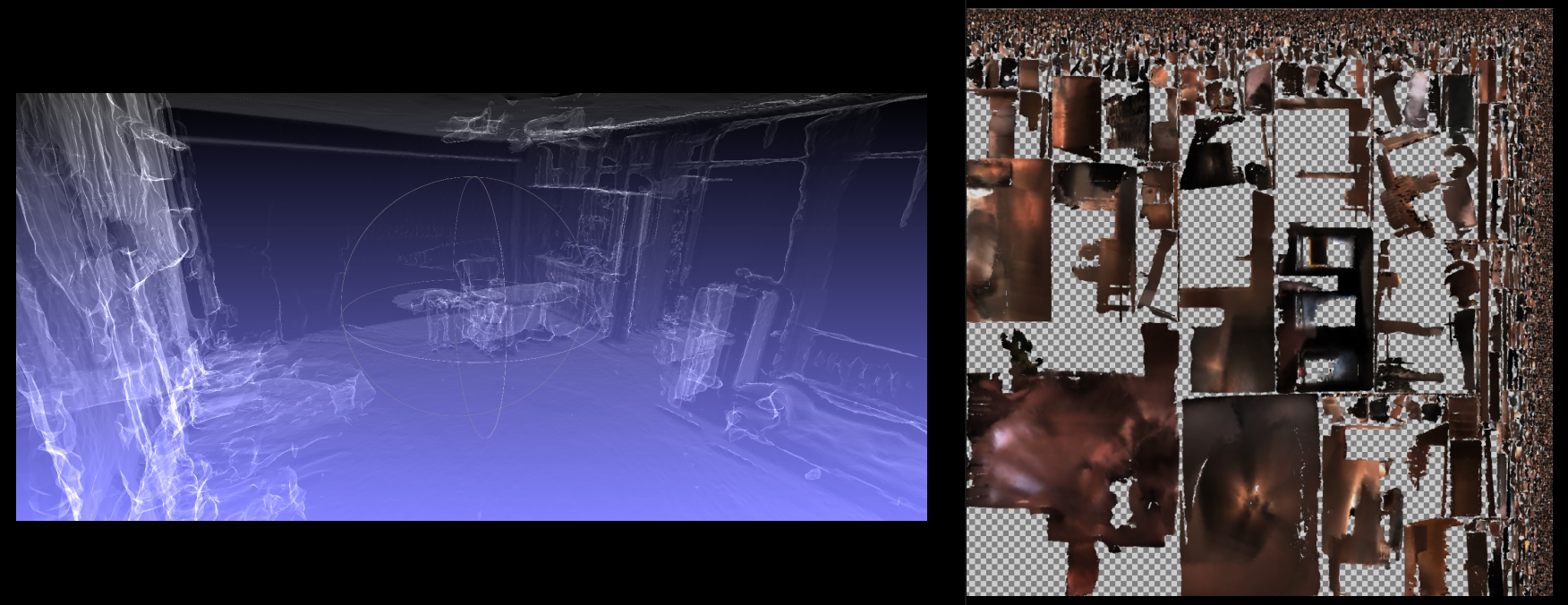

Tango allows you to export the scan to the standard .obj format. I took the file and imported it into Blender so that I could decimate the triangle count to something more manageable. One scan alone was about 1.3 million triangles, but it can nicely be reduced to a modest < 300K depending on the room. A good rule of thumb has been a ratio of about 0.3 with the Decimate modifier. Next, I noticed that the vertex colors were encoded in the Wavefront OBJ.

The colors appear as extra values after x, y and z on each vertex line. The color values range from 0 to 1.
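To make that layout concrete, here is a minimal Python sketch that pulls positions and colors out of such a file. It assumes each colored vertex line has the form `v x y z r g b` (the common, non-standard OBJ extension); the function name and the sample data are made up for illustration and are not taken from the Tango export itself.

```python
def parse_colored_vertices(obj_text):
    """Collect (x, y, z) positions and 8-bit (r, g, b) colors from 'v' lines."""
    positions, colors = [], []
    for line in obj_text.splitlines():
        parts = line.split()
        # Assumption: only 'v' lines with six numbers carry x y z r g b.
        if len(parts) != 7 or parts[0] != "v":
            continue
        x, y, z, r, g, b = map(float, parts[1:])
        positions.append((x, y, z))
        # Scale the 0..1 floats to the 0..255 range used by image textures.
        colors.append(tuple(round(c * 255) for c in (r, g, b)))
    return positions, colors


# Hypothetical two-vertex sample, not real scan data.
sample = """\
v 0.0 0.0 0.0 1.0 0.0 0.0
v 1.0 0.0 0.0 0.0 0.2 1.0
vn 0.0 0.0 1.0
f 1 2 1
"""
positions, colors = parse_colored_vertices(sample)
print(colors)
```

Lines that do not match the assumed six-number layout (normals, faces, plain vertices) are simply skipped, so the same function can be pointed at a full export to check whether it carries colors at all.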

In order to separate the skin from the bone, I had to bake the vertex colors onto a texture map. To do this, I loaded the file into MeshLab and then exported it back out as a .ply file, a format principally designed to store three-dimensional data from 3D scanners.
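For reference, this is roughly what an ASCII .ply with per-vertex color looks like. The sketch below is not what MeshLab does internally; it is a hand-rolled writer under the assumption of float positions, 8-bit `red`/`green`/`blue` properties and zero-based face indices, with a hypothetical one-triangle mesh as input.

```python
def to_ascii_ply(positions, colors, faces):
    """Build an ASCII PLY string with per-vertex 8-bit colors."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(positions)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        f"element face {len(faces)}",
        "property list uchar int vertex_indices",
        "end_header",
    ]
    # One line per vertex: position followed by its color.
    vertex_lines = [
        f"{x} {y} {z} {r} {g} {b}"
        for (x, y, z), (r, g, b) in zip(positions, colors)
    ]
    # One line per face: vertex count, then zero-based vertex indices
    # (OBJ face indices are one-based, so they need shifting by one).
    face_lines = [
        f"{len(face)} " + " ".join(str(i) for i in face) for face in faces
    ]
    return "\n".join(header + vertex_lines + face_lines) + "\n"


# Hypothetical single colored triangle.
ply_text = to_ascii_ply(
    positions=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    colors=[(255, 0, 0), (0, 255, 0), (0, 0, 255)],
    faces=[(0, 1, 2)],
)
print(ply_text)
```

Unlike the OBJ, where color rides along as an unofficial extra on the vertex line, here the color channels are declared as named properties in the header.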

The main steps I followed in order to separate skin from bone can be found in this video: import that file into Blender, decimate, create a new image for the texture with at least 4K resolution, UV unwrap the model, then go to Bake, select vertex colors, set a margin of 2 and hit Bake.

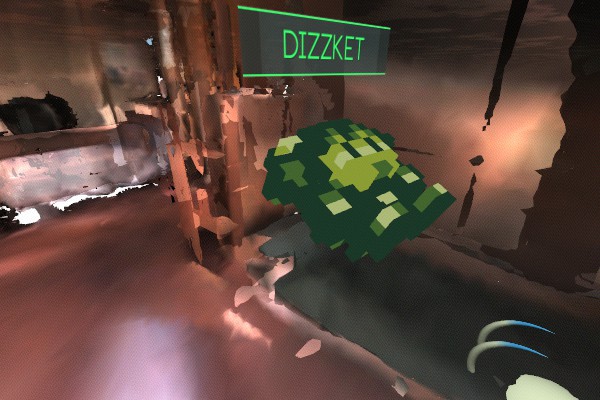

It was time to have some fun in Janus. I made a very simple FireBoxRoom with the apartment scan positioned in place and voila:

It was time to invite guests from the internet over:

It was a surreal experience to have the internet invited into my personal habitat.

The full album can be found here: http://imgur.com/a/rOAvD

http://alpha.vrchive.com/image/Wc

http://alpha.vrchive.com/image/WF

http://alpha.vrchive.com/image/WS

Since August, we have learned that Intel is working with Google on a Project Tango developer kit for Android smartphones that uses its RealSense 3D cameras, in a bid to drive depth sensing in the mobile space. Link to article

alusion
