Packets | Particles: Visualize the Network

In Arthurian legend, Avalon is a mythical island associated with King Arthur and Morgan Le Fay. In more recent times, one of the most notable allusions is the movie Avalon (2001), which follows Ash, a player in an illegal virtual reality video game whose sense of reality is challenged as she attempts to unravel the true nature and purpose of the game. It's a good movie if you're interested in simulated reality or if you're a fan of Ghost in the Shell, since it's directed by the same person.

"As the Internet of things advances, the very notion of a clear dividing line between reality and virtual reality becomes blurred" - Geoff Mulgan

With that said, I have updated the interface to AVALON with visual metaphors that allude to the legend.

<Particle id="1140" js_id="104" pos="-40 -2 -40" vel="-0.1 -0.1 -0.1" scale="0.02 0.02 0.02" col="#666666" lighting="false" loop="true" image_id="wifi" count="500" rate="50" duration="10" rand_pos="80 8 80" rand_vel="0.2 0.2 0.2" rand_accel="0.1 0.1 0.1" />

To create the WiFi particles, I extract images from a packet capture and filter out the corrupted files. The files are tagged by name, so I set up a cron job that runs the extraction and rotates the files every day, giving fresh packets to crystallize into snow.
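The filtering step could look something like the sketch below. It is not the script used for this project: it assumes the extracted files are JPEGs or PNGs sitting in one folder, and it only checks that each file starts and ends with the markers those formats define, which catches captures that were cut off mid-transfer. The function names are made up for illustration.

```python
from pathlib import Path

# Standard start/end byte sequences for the two formats.
JPEG_START, JPEG_END = b"\xff\xd8", b"\xff\xd9"
PNG_START = b"\x89PNG\r\n\x1a\n"
PNG_END = b"IEND\xaeB`\x82"  # IEND chunk type followed by its fixed CRC


def looks_complete(data: bytes) -> bool:
    """Heuristic: True if the bytes start and end like a whole JPEG or PNG."""
    if data.startswith(JPEG_START):
        return data.endswith(JPEG_END)
    if data.startswith(PNG_START):
        return data.endswith(PNG_END)
    return False


def keep_intact_images(folder: Path) -> list:
    """Return the files in `folder` that pass the check, sorted by name."""
    kept = []
    for path in sorted(folder.iterdir()):
        if path.is_file() and looks_complete(path.read_bytes()):
            kept.append(path)
    return kept
```

A daily cron job could call `keep_intact_images` on the extraction folder and hand only the survivors to the room. The check is deliberately cheap; an image can pass it and still fail to decode, so a full decode with an imaging library would be a stricter filter.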

Network traffic gets visualized as particles in a virtual space as they fall to their doom. This can be applied to any type of network, be it local / wireless / etc. [ Packet | Particle ]

<Particle id="dog_27" js_id="25" pos="-200 -10 -200" vel="-1 0 0" col="#666666" lighting="false" loop="true" image_id="wifi_27" blend_src="src_color" blend_dest="src_alpha" count="15" rate="50" duration="20" rand_pos="480 480 480" rand_vel="0.1 0.1 0.1" rand_accel="0.1 0.1 0.1" accel="0 -1.2 0" />
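Since the images are numbered (`wifi_27` above), one emitter per image can be generated rather than written by hand. The sketch below is an assumption about how that might be scripted, not the code behind this room: the attribute names and values are copied from the tag above, the `snow_` id prefix is hypothetical, and it assumes an image asset with a matching id is declared elsewhere in the room.

```python
from pathlib import Path
from xml.sax.saxutils import quoteattr


def particle_tag(image_id: str, count: int = 15) -> str:
    """Build one <Particle> tag using attributes seen in this log."""
    attrs = {
        "id": f"snow_{image_id}",  # hypothetical naming scheme
        "pos": "-200 -10 -200",
        "vel": "-1 0 0",
        "col": "#666666",
        "lighting": "false",
        "loop": "true",
        "image_id": image_id,  # must match an image asset id in the room
        "count": str(count),
        "rate": "50",
        "duration": "20",
        "rand_pos": "480 480 480",
        "accel": "0 -1.2 0",
    }
    body = " ".join(f"{key}={quoteattr(value)}" for key, value in attrs.items())
    return f"<Particle {body} />"


def particles_for(filenames):
    """One emitter per image file, using the file stem as the image_id."""
    return [particle_tag(Path(name).stem) for name in filenames]
```

Calling `particles_for(["wifi_27.png", "wifi_28.png"])` returns two tags that can be pasted into the room, so the daily file rotation only has to rerun the script.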

Before long, it was time to decorate the tree with ornaments! I turned 360 media from my Ricoh Theta S and my Sublime paintings into 100+ shiny balls to hang on the tree.

I was thinking of a cool idea for this tree where you can touch an ornament and it will expand around you, immersing the viewer inside the scene like this:

Janus markup is remarkably powerful as a VR scripting language. I combine the decorated tree and the particles with only a few lines of code.

http://alpha.vrchive.com/image/yo

Link to full album: https://imgur.com/a/WdgK0

AVALON: Your own Virtual Private Island

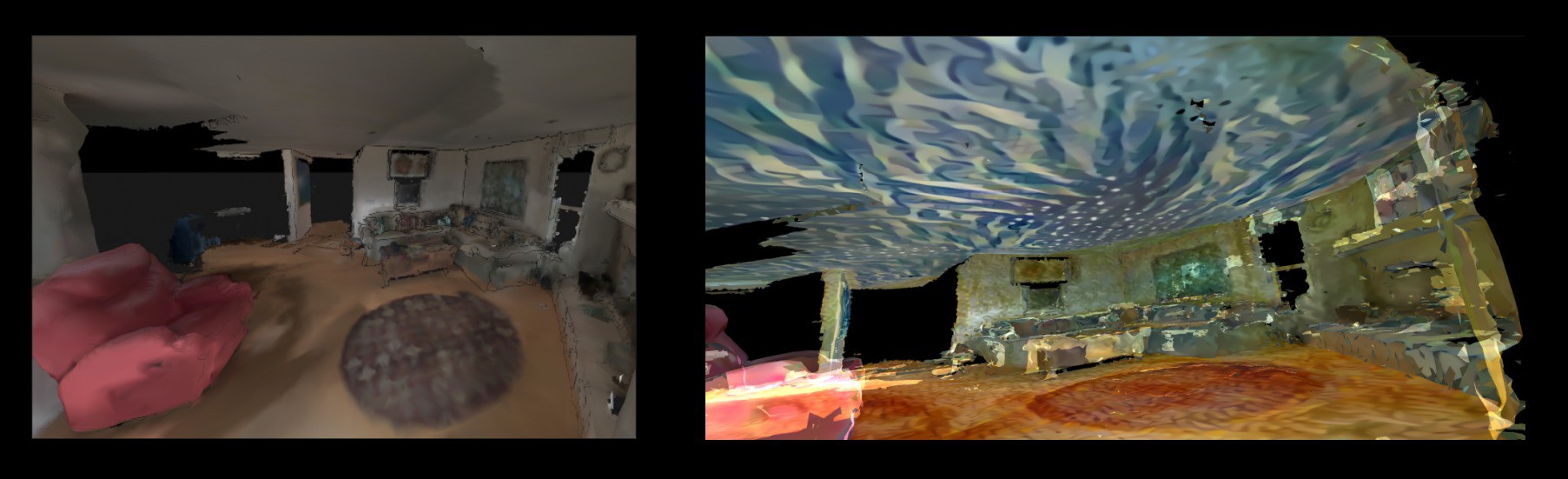

In Tango VR, I showed you how one can import a Project Tango scan into JanusVR.

Each section represents an object on the network, but you can also merge all the sections into one piece by joining them in Blender. Having everything as one object is useful if you want to move it around freely without manipulating every piece individually.

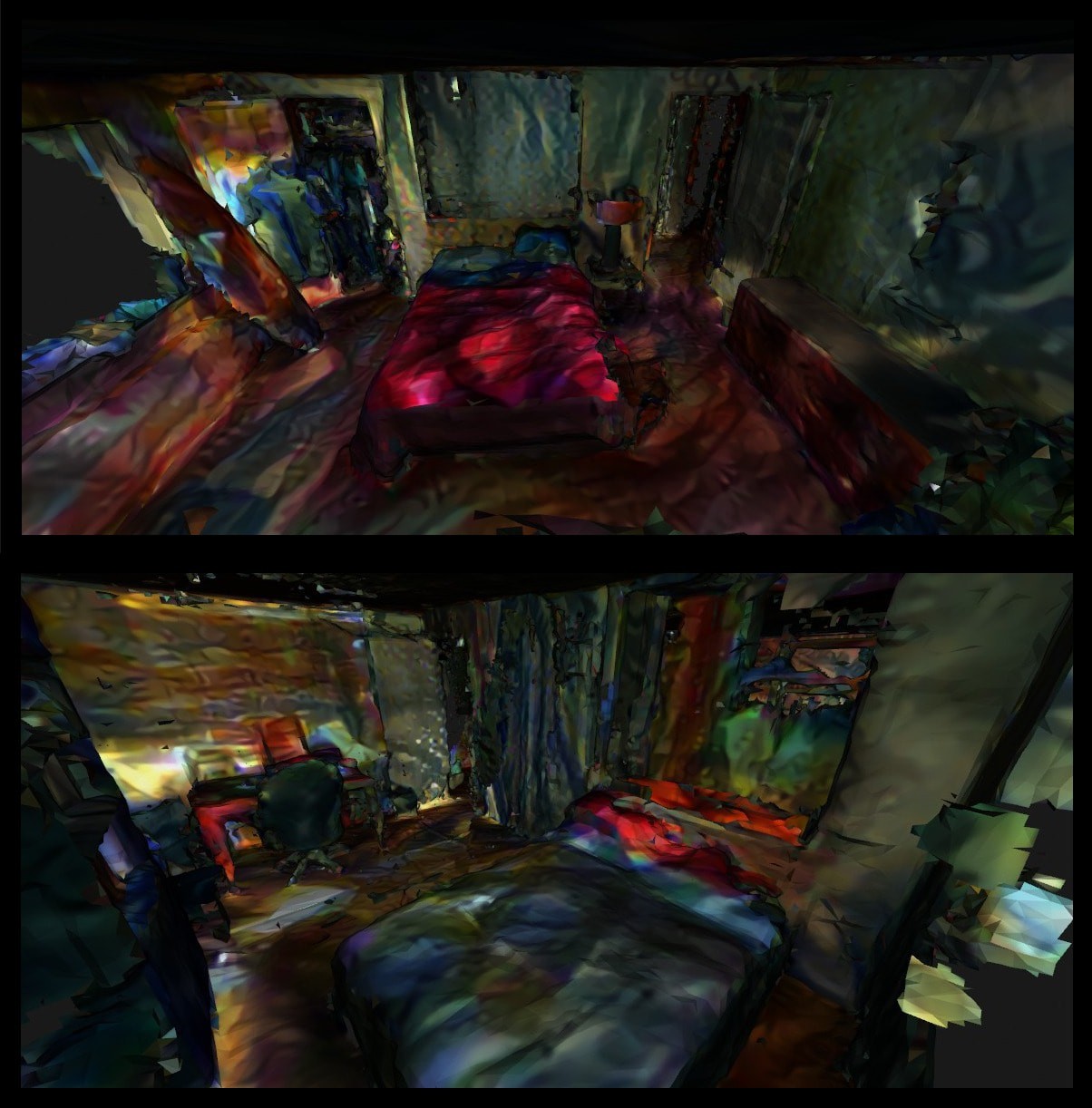

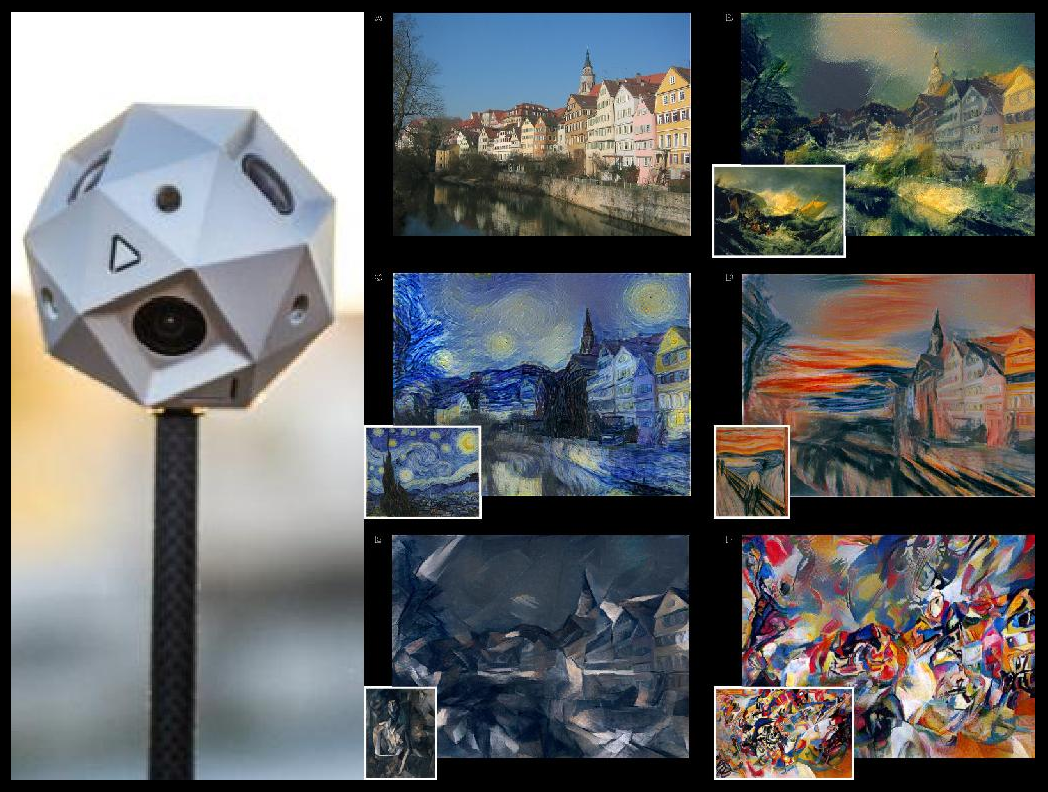

I also demonstrated a technique for separating the textures from the mesh of a 3D scan by baking the vertex colors onto a texture map in Blender. Here I take it one step further and process the texture maps with creative AI software to stylize them into paintings. Because the texture maps are huge and my lone GTX 960 is a donkey, I had to resize the texture maps and sometimes process only half at a time, then combine the halves in post. The final output resolution was 2048x2048, which is enough for our purposes. I have some ideas about pre-processing the textures by first layering designs over them, stylizing the images into skins for the neural networks to paint into. My other idea concerns the scans themselves: taking only one piece of furniture or one section at a time and capturing it in as much detail as possible, with its own dedicated texture map.
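The half-at-a-time workaround boils down to split, process, and stitch. Here is a minimal sketch of that idea on a plain row-major grid of pixel values; it is an illustration only, and `stylize` stands in for whatever the style-transfer tool does to one half. A real pipeline would load and save the texture with an imaging library.

```python
def split_halves(pixels):
    """Split a row-major pixel grid into left and right halves."""
    mid = len(pixels[0]) // 2
    left = [row[:mid] for row in pixels]
    right = [row[mid:] for row in pixels]
    return left, right


def join_halves(left, right):
    """Stitch two halves back together row by row."""
    return [l_row + r_row for l_row, r_row in zip(left, right)]


def process_in_halves(pixels, stylize):
    """Run `stylize` on each half separately, then recombine the results."""
    left, right = split_halves(pixels)
    return join_halves(stylize(left), stylize(right))
```

Each half only needs to fit in GPU memory on its own. The trade-off is that style transfer is not a per-pixel operation, so the two halves may not line up perfectly at the join and a seam may need touching up in post.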

Give me a Sistine chapel and I'll paint it with my 3D scanner.

With a 3D scanner one can render a painted vision of physical spaces. Kinda like that one movie...

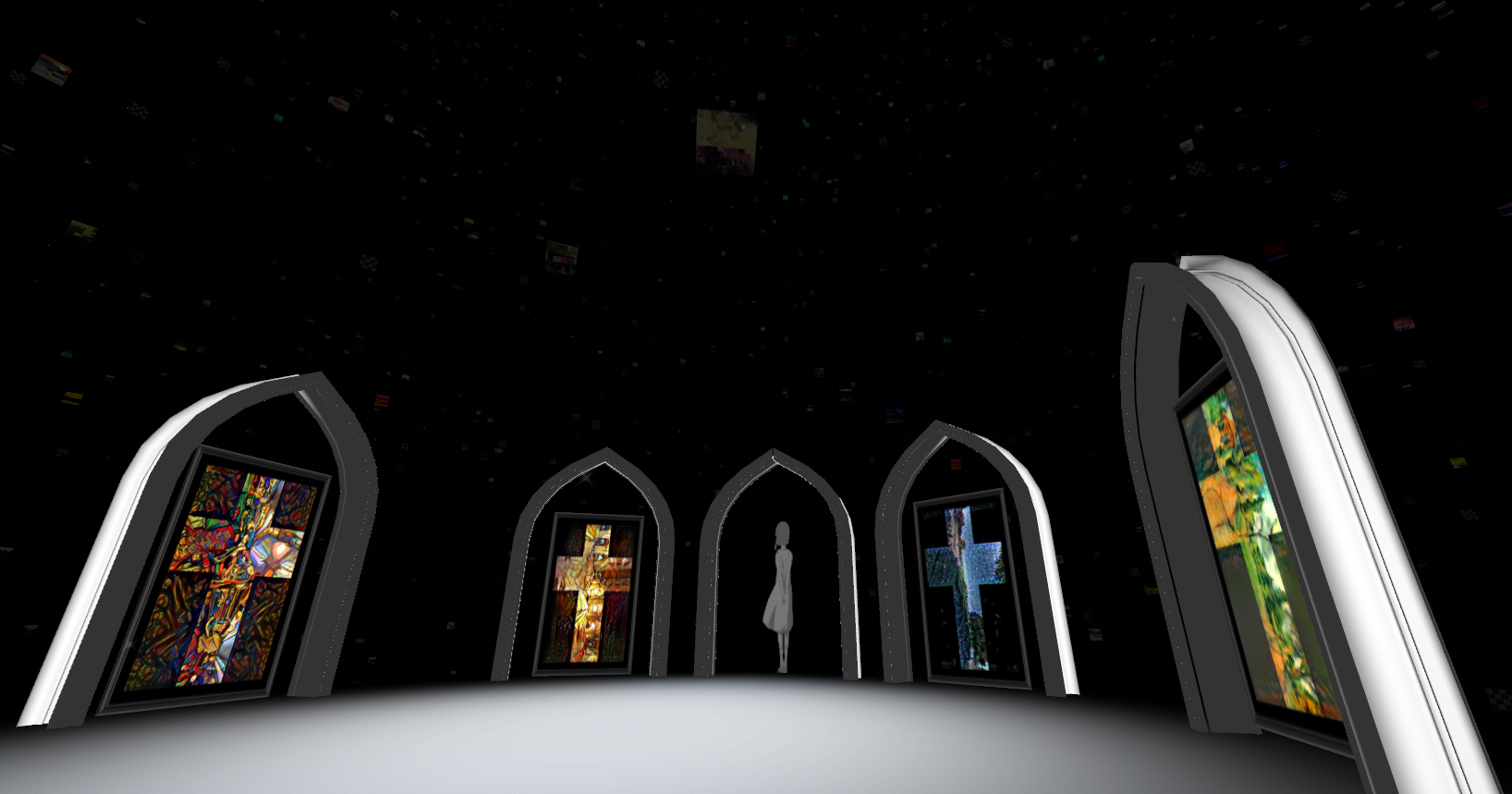

Then I drew the xmas tree and the virtual beach house together in the code.

The sand and water shader complete the illusion for the mythical island AVALON.

The arches were inspired by Dizzket's start room and represent gateways into the network.

These doors weave between layers of virtualities. You can navigate either deeper into the world or across to other nodes within the mesh network. The paintings are cubemaps [see Sublime VR] that have been processed through creative neural networks such as deep style to represent the channel surfing of mixed reality into an infinite assortment of machine dreams. You might appear in France, but it may be a painted vision of the scene that you're in. This produces interesting artistic scenery and protects privacy by obscuring distinguishable features in the face of public 360 cameras.

Link to full album: https://imgur.com/a/UntYL

To be continued...

alusion
