I have set my focus for 2016 on figuring out how to develop functional peer-to-peer multidimensional mixed reality networks. I believe the best path to achieving this is to combine the tracking components of Project Tango with the infrastructure components of AVALON.

What is Mixed Reality?

Mixed reality (MR), sometimes referred to as hybrid reality, is the merging of real and virtual worlds to produce new environments and visualizations where physical and digital objects co-exist and interact in real time.

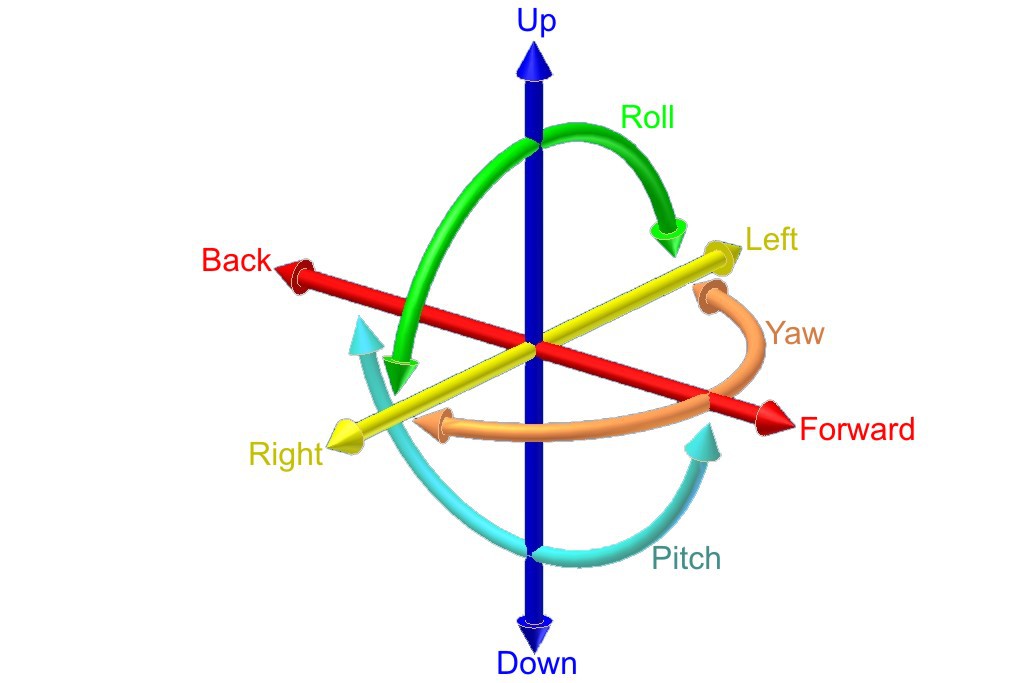

Generally speaking, VR/AR technology is enabled by sensors that allow for 6 DoF tracking and screens with low motion-to-photon latency. It is critical that a person's physical motions are reflected on the screen in near real time. A latency of about 20 milliseconds or less is generally deemed acceptable; anything more becomes noticeable to the human senses.
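To get a feel for why latency matters, here is a rough back-of-the-envelope sketch of how far the image lags behind the head at different latencies. The head speed of 100 degrees per second is purely an assumed value for illustration, not a figure from any headset vendor.

```python
def angular_error_deg(head_speed_deg_per_s: float, latency_ms: float) -> float:
    """Angle the head has turned by the time the screen catches up."""
    return head_speed_deg_per_s * (latency_ms / 1000.0)

# Assumed head speed for illustration only.
HEAD_SPEED = 100.0  # degrees per second

for latency_ms in (20, 50, 100):
    lag = angular_error_deg(HEAD_SPEED, latency_ms)
    print(f"{latency_ms} ms latency -> image trails the head by about {lag:.1f} degrees")
```

The lag grows linearly with latency, so halving the latency halves the mismatch between what you do and what you see.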

There are three rotations that can be measured: yaw, pitch and roll. If you also track the translations, or movements, of the device, this adds another three (along the X, Y and Z axes), for a total of six degrees of freedom (6 DoF).

With 6 DoF we are able to detect the absolute position of an object and represent any type of movement, no matter how complex, and reduce motion sickness at the same time. Moving your head without getting the correct visual feedback can introduce or intensify motion sickness, which is a problem with early headsets that only allow for 3 DoF. It also breaks the immersion if you are unable to tilt your head to look around the corner of a building.
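As a minimal sketch of the difference, a 6 DoF pose can be modeled as three translations plus three rotations. The class and method names here are my own for illustration and do not come from any SDK.

```python
from dataclasses import dataclass

@dataclass
class Pose6DoF:
    # Translation along the three axes, in meters.
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    # Rotation about the three axes, in degrees.
    yaw: float = 0.0
    pitch: float = 0.0
    roll: float = 0.0

    def as_3dof(self) -> "Pose6DoF":
        """What a rotation-only tracker would report: translation is lost."""
        return Pose6DoF(yaw=self.yaw, pitch=self.pitch, roll=self.roll)

# Lean 30 cm sideways while turning the head 15 degrees.
lean = Pose6DoF(x=0.3, yaw=15.0)
print("6 DoF:", lean)
print("3 DoF:", lean.as_3dof())
```

The 3 DoF version keeps the head turn but drops the sideways lean, which is exactly the missing visual feedback described above.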

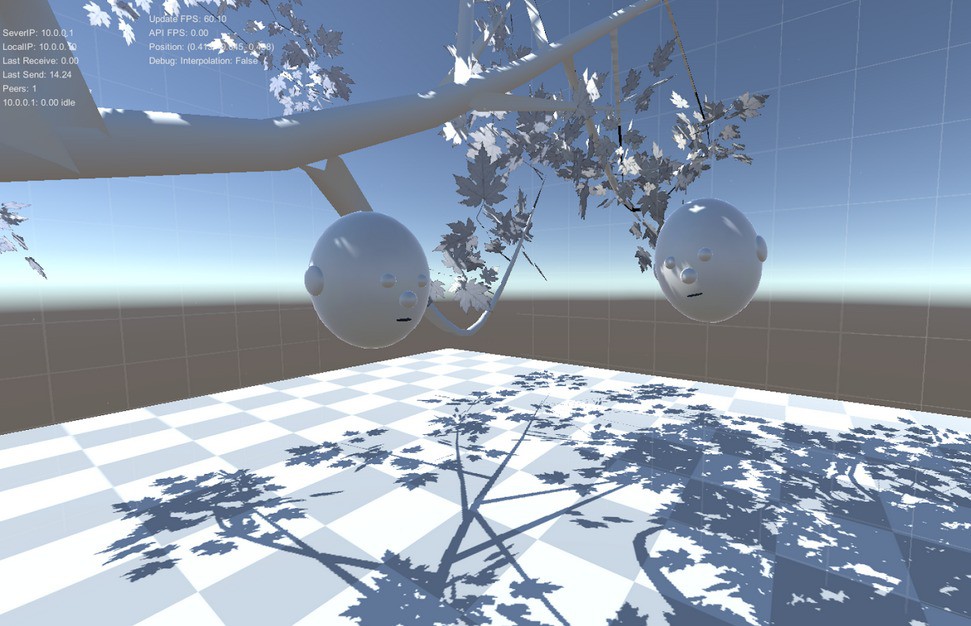

I became intrigued by a particular Project Tango application called MultiplayerVR by Johnny C Lee. This sample app uses Project Tango devices in a multi-user environment. People can see each other in the same space and interact under a peaceful tree.

The question then became: how do I place an object in the space so that other viewers see it from their own perspective, and see each other's positions too?

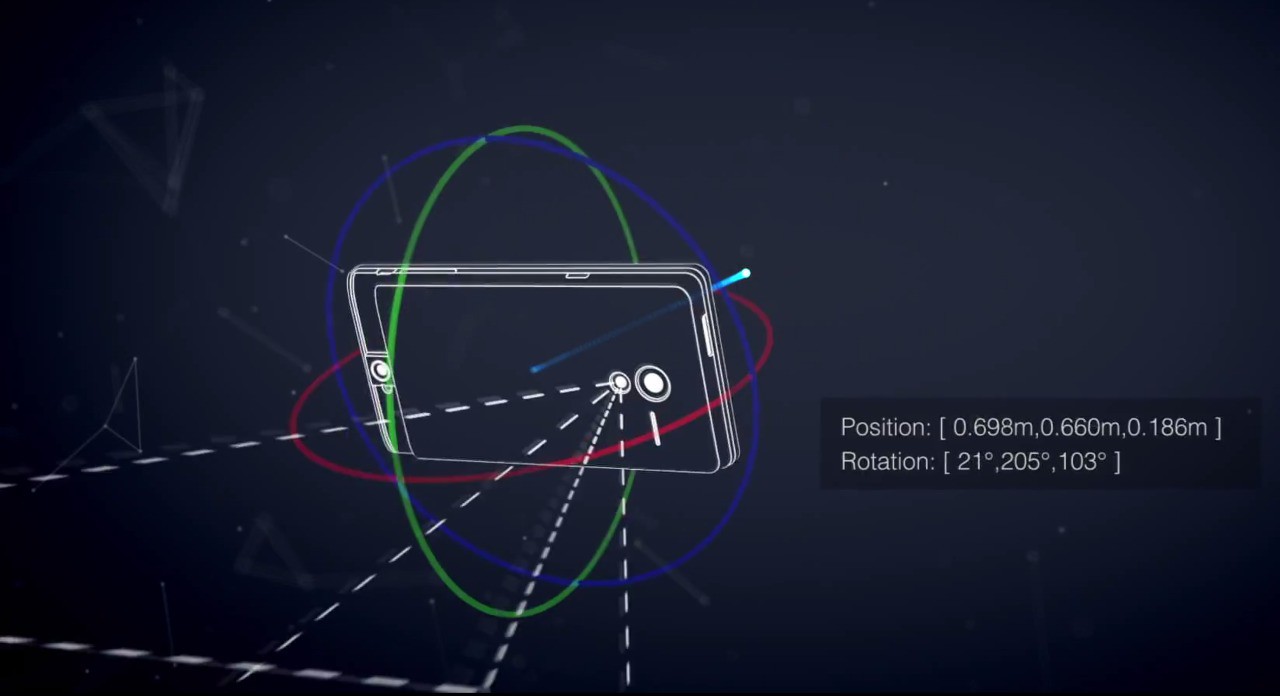

The answer really comes down to proper tracking. When describing the position and orientation, or pose of an object, it is important to specify what the reference frame is. For example, saying "the box is 3 meters away" is not enough. You have to ask, "3 meters from what? Where is the origin, (0,0,0)?" The origin might be the position of the device when the application first started, the corner of your desk, the front door of a building, or perhaps it's the Washington Monument. You must define the location and orientation of something with respect to something else to make the information meaningful. As a result, the Project Tango APIs use a system of coordinate frame pairs to specify pose requests.
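A small sketch of the "3 meters from what?" problem: the same box gets different coordinates depending on which origin you pick. This ignores orientation entirely and uses made-up positions, so treat it as an illustration of the idea rather than anything from the Project Tango API.

```python
def express_in_frame(point, frame_origin):
    """Coordinates of `point` as seen from `frame_origin` (translation only)."""
    return tuple(round(p - o, 6) for p, o in zip(point, frame_origin))

# Hypothetical positions in some shared world frame, in meters.
box = (3.0, 0.0, 0.0)
origins = {
    "device position at app start": (0.0, 0.0, 0.0),
    "corner of the desk": (1.0, 2.0, 0.0),
}

for name, origin in origins.items():
    print(f"box relative to {name}: {express_in_frame(box, origin)}")
```

A pose only becomes meaningful once both ends of the pair are named: the frame being described and the frame it is described from.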

In other words, you want to share an Area Definition File (ADF) between all the participants. Provided they all have this file loaded and are physically in that area, they can all place and see scene objects in the same frame. To see the position of each connected person, you need to exchange ADF-relative pose information.
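Here is a rough sketch of what exchanging ADF-relative poses buys you. If every participant reports a position and heading in the shared ADF frame, each device can work out where the others are relative to itself. I am assuming a flat 2D ground plane and a heading measured counter-clockwise from the frame's +X axis; the function name is hypothetical.

```python
import math

def peer_in_local_frame(my_pos, my_heading_rad, peer_pos):
    """Return (ahead, left) offsets of a peer, in meters, from my point of view.

    Both positions are (x, y) coordinates in the shared ADF frame.
    """
    dx = peer_pos[0] - my_pos[0]
    dy = peer_pos[1] - my_pos[1]
    c, s = math.cos(my_heading_rad), math.sin(my_heading_rad)
    ahead = c * dx + s * dy    # component along my heading
    left = -s * dx + c * dy    # component 90 degrees counter-clockwise of it
    return ahead, left

# I stand at (1, 1) facing +Y; the other person stands at (1, 3).
ahead, left = peer_in_local_frame((1.0, 1.0), math.pi / 2, (1.0, 3.0))
print(f"peer is {ahead:.2f} m ahead and {left:.2f} m to the left")
```

Because both poses live in the same ADF frame, neither device needs to know where the other one started its session.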

The first challenge is to lock an object in space or to a planar surface. With the right tracking, things can be stabilized between the dimensions. In the past we would use optical tracking with AR markers. This works rather well, but it requires additional components such as optical sensors, computer vision algorithms, and line of sight, all of which take a toll on battery life.

Microsoft HoloLens seems to have gotten optical tracking down. The headset is jam-packed with sensors and optics that capture as much data as possible, generating terabytes of data that require a second processor, called the Holographic Processing Unit, to compute. The device understands where you look and maps the world around you, all in real time.

The way Valve engineered Lighthouse is very clever in solving the input tracking problem without any cameras. The system beams non-visible light that functions as a reference point for any positional tracking device (like a VR headset or a game controller) to figure out where it is in real 3D space. The receiver is covered with little photosensors that detect the flashes and the laser beams.

When a flash occurs, the headset simply starts counting (like a stopwatch) until it “sees” which one of its photosensors gets hit by a laser beam—and uses the relationship between where that photosensor exists on the headset, and when the beam hit the photosensor, to mathematically calculate its exact position relative to the base stations in the room.
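The stopwatch idea can be sketched in a few lines: if the laser sweeps at a constant rate, the time between the flash and the laser hit tells you the angle of the photosensor as seen from the base station. The 60 Hz sweep rate below is my assumption for illustration, and turning these angles into a full position takes more math than is shown here.

```python
import math

ROTOR_HZ = 60.0  # assumed sweep rate, revolutions per second

def sweep_angle(seconds_since_flash: float, rotor_hz: float = ROTOR_HZ) -> float:
    """Angle (radians) the laser has swept through when it hits a photosensor."""
    return 2.0 * math.pi * rotor_hz * seconds_since_flash

# A photosensor that is hit a quarter of a rotation after the flash.
dt = 1.0 / (4.0 * ROTOR_HZ)
print(f"hit after {dt * 1000:.3f} ms -> {math.degrees(sweep_angle(dt)):.1f} degrees")
```

With one such angle per sweep direction per photosensor, plus the known layout of the photosensors on the headset, there is enough information to solve for where the headset is.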

I want to learn how Project Tango's positional information can be linked with Janus.

The first requirement is to have an ADF (AKA a Tango scan) and the ability to track when a user enters the space.

By default in JanusVR, the entrance for the player into the environment will be at 3D position "0 0 0", and the player will be facing direction "0 0 -1" (along the negative Z-axis). Also, in many cases you will find yourself at the origin when walking through a portal. Pressing "/" in Janus enables debug mode. The head movements and body translations will represent the 6 DoF.
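Given those defaults, one possible way to line a Tango position up with Janus is a simple axis swap. This sketch assumes the Tango position arrives in a right-handed frame with X right, Y forward and Z up, and that Janus uses Y up with -Z as forward (as the default facing direction suggests). Both conventions should be verified against real data before relying on this.

```python
def tango_to_janus(position):
    """Map an assumed (X right, Y forward, Z up) position to (X right, Y up, -Z forward)."""
    x, y, z = position
    return (x, z, -y)

def to_janus_string(vector):
    """Format a vector the way Janus writes them, e.g. "0 0 -1"."""
    return " ".join(f"{v:g}" for v in vector)

# One meter straight ahead in the assumed Tango frame.
forward = tango_to_janus((0.0, 1.0, 0.0))
print(to_janus_string(forward))
```

Under these assumptions, walking forward with the device moves the avatar along the negative Z-axis, which matches the default facing direction in Janus.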

I hypothesize that the multiserver is going to be used to translate movement from connected devices into Janus, because it handles all the methods and data between simultaneously connected clients. The message structure for the API states that every packet must contain a "method" field and optionally a "data" field. In the case of movement, the message structure might look like this:

When you pass through a portal:

{"method":"enter_room", "data": { "roomId": "345678354764987457" }}

When the user position has moved:

{"method":"move", "data": [0,0,0,0,0,0,0] }
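A minimal sketch of building packets in that shape: a required "method" field and an optional "data" field. The helper name is mine, and how the packets are framed and sent over the wire is not covered here.

```python
import json

def make_packet(method, data=None):
    """Serialize a packet with a required "method" and an optional "data" field."""
    packet = {"method": method}
    if data is not None:
        packet["data"] = data
    return json.dumps(packet)

# The two example messages from above.
print(make_packet("enter_room", {"roomId": "345678354764987457"}))
print(make_packet("move", [0, 0, 0, 0, 0, 0, 0]))
```

Leaving "data" out entirely when there is nothing to send keeps the packet consistent with the "optionally" in the message structure.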

I need the ability to manipulate my avatar in Janus by moving around my Tango device. With ghosts, I can record all of the positional data relative to the Tango from the browser. Here is an example from a ghost script I recorded from walking around the office:

{"method":"move", "data":{"pos":"-5.2118 -0.001 10.0495","dir":"-0.982109 -0.180519 0.0536091","view_dir":"-0.982109 -0.180519 0.0536091","up_dir":"-0.18025 0.983572 0.00983909","head_pos":"0 0 0","anim_id":"idle"}}
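To do anything with a recorded line like this, the space-separated strings need to become numbers. Here is a small sketch that parses the record above; the function name and the list of vector fields are my own, taken from what appears in this one sample.

```python
import json

# The move record shown above, copied from the ghost script.
RECORD = (
    '{"method":"move", "data":{"pos":"-5.2118 -0.001 10.0495",'
    '"dir":"-0.982109 -0.180519 0.0536091",'
    '"view_dir":"-0.982109 -0.180519 0.0536091",'
    '"up_dir":"-0.18025 0.983572 0.00983909",'
    '"head_pos":"0 0 0","anim_id":"idle"}}'
)

VECTOR_FIELDS = ("pos", "dir", "view_dir", "up_dir", "head_pos")

def parse_move(line):
    """Turn a ghost "move" record into a dict with numeric vectors."""
    packet = json.loads(line)
    if packet.get("method") != "move":
        raise ValueError("not a move packet")
    parsed = dict(packet["data"])
    for key in VECTOR_FIELDS:
        if key in parsed:
            parsed[key] = [float(v) for v in parsed[key].split()]
    return parsed

move = parse_move(RECORD)
print("position:", move["pos"])
print("facing:", move["dir"])
```

Note that this handles the dictionary form of "data" used in ghost scripts, not the bare list shown in the earlier move example.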

The ghost script records all types of information, including the user's avatar, voice, and any interaction that person has in the room. Here's the snippet belonging to an avatar:

{"method":"move", "data":{"pos":"1.52321 4.06154 -0.578692","dir":"-0.529112 -0.198742 0.82495","view_dir":"-0.528664 -0.202787 0.824252","up_dir":"-0.109481 0.979223 0.170694","head_pos":"0 0 0","anim_id":"idle","avatar":"<FireBoxRoom><Assets><AssetObject id=^head^ src=^http://localhost:8080/ipfs/QmYwP5ww8B3wFYeiYk5AB9v6jqbyF8ovSeuoSoLgmaDdoM/head.obj^ mtl=^http://localhost:8080/ipfs/QmYwP5ww8B3wFYeiYk5AB9v6jqbyF8ovSeuoSoLgmaDdoM/head.mtl^ /><AssetObject id=^body^ src=^http://localhost:8080/ipfs/QmYwP5ww8B3wFYeiYk5AB9v6jqbyF8ovSeuoSoLgmaDdoM/human.obj^ mtl=^http://localhost:8080/ipfs/QmYwP5ww8B3wFYeiYk5AB9v6jqbyF8ovSeuoSoLgmaDdoM/human.mtl^ /></Assets><Room><Ghost id=^LA^ js_id=^148^ locked=^false^ onclick=^^ oncollision=^^ interp_time=^0.1^ pos=^-0.248582 3.432468 0.950055^ vel=^0 0 0^ xdir=^0.075406 0 0.997153^ ydir=^0 1 0^ zdir=^-0.997153 0 0.075406^ scale=^0.1 0.1 0.1^ col=^#ffffff^ lighting=^true^ visible=^true^ shader_id=^^ head_id=^head^ head_pos=^0 0 0^ body_id=^body^ anim_id=^^ anim_speed=^1^ eye_pos=^0 1.6 0^ eye_ipd=^0.065^ userid_pos=^0 0.5 0^ loop=^false^ gain=^1^ pitch=^1^ auto_play=^false^ cull_face=^back^ play_once=^false^ /></Room></FireBoxRoom>"}}
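The "avatar" field looks like FireBoxRoom markup with "^" standing in for double quotes, presumably so it can sit inside a JSON string. Assuming that is the case, a sketch for reading it back could look like the following. The sample string is a shortened, made-up stand-in for the real one above.

```python
import xml.etree.ElementTree as ET

# Shortened, hypothetical avatar string in the same shape as the one above.
SAMPLE = (
    "<FireBoxRoom><Assets>"
    "<AssetObject id=^head^ src=^http://example.invalid/head.obj^ />"
    "<AssetObject id=^body^ src=^http://example.invalid/human.obj^ />"
    "</Assets><Room>"
    "<Ghost id=^LA^ head_id=^head^ body_id=^body^ eye_pos=^0 1.6 0^ />"
    "</Room></FireBoxRoom>"
)

def decode_avatar(avatar):
    """Restore the quotes (assumed to be written as ^) and pull out the basics."""
    root = ET.fromstring(avatar.replace("^", '"'))
    assets = {a.get("id"): a.get("src") for a in root.iter("AssetObject")}
    ghost = root.find("Room/Ghost")
    return {"assets": assets, "ghost": dict(ghost.attrib) if ghost is not None else {}}

info = decode_avatar(SAMPLE)
print("ghost id:", info["ghost"].get("id"))
print("assets:", info["assets"])
```

This would break if an attribute value ever contained a literal "^", so it is only a starting point.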

In case you forgot, the information belonging to an avatar is stored in a text file at ~/.local/share/janusvr/userid.txt

Link to full API documentation

My vision is that someone entering my ADF (Area Definition File) remotely from Janus can be tracked by my multiserver, which can then augment their movements and avatar information so that they are represented as an interactive hologram in AR while simultaneously being represented as an object in VR. The potential problem is that this depends on the precision of the tracking: if the motion detection is not perfect, it will introduce virtual movements that you see but never actually made. For the next few years, most communication and interaction with holograms is going to be limited to screens, but I am curious to learn of greater potential uses for transmitting 3D information wirelessly. Does this type of information really need to be viewable only by humans? What if the 3D information itself is representative of something else, such as a new procedure? This year I want to fully understand the capabilities and implications of manipulating geometry at scale with my decentralized multidimensional mixed reality networks.

Hybrid Reality Experiments

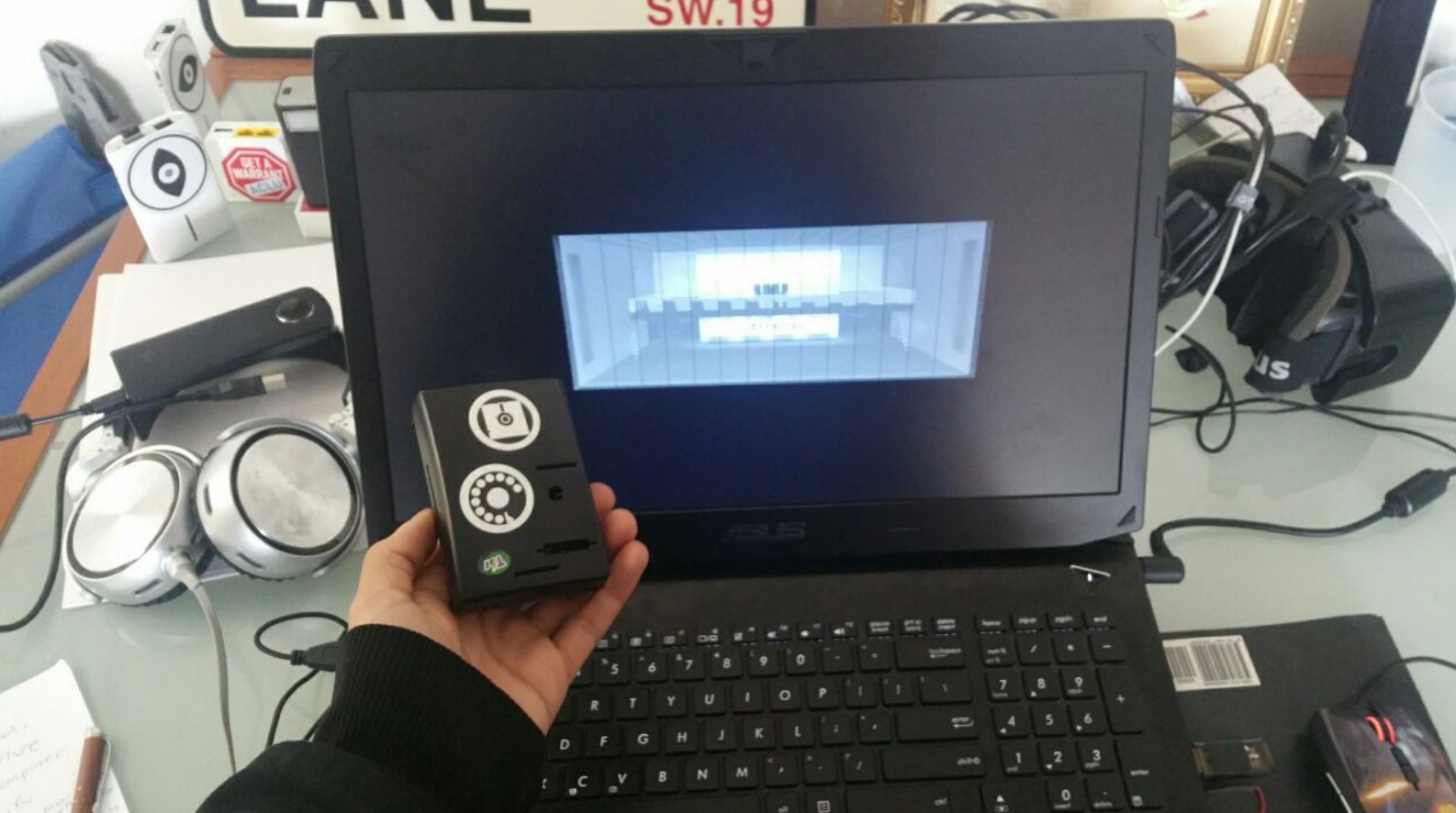

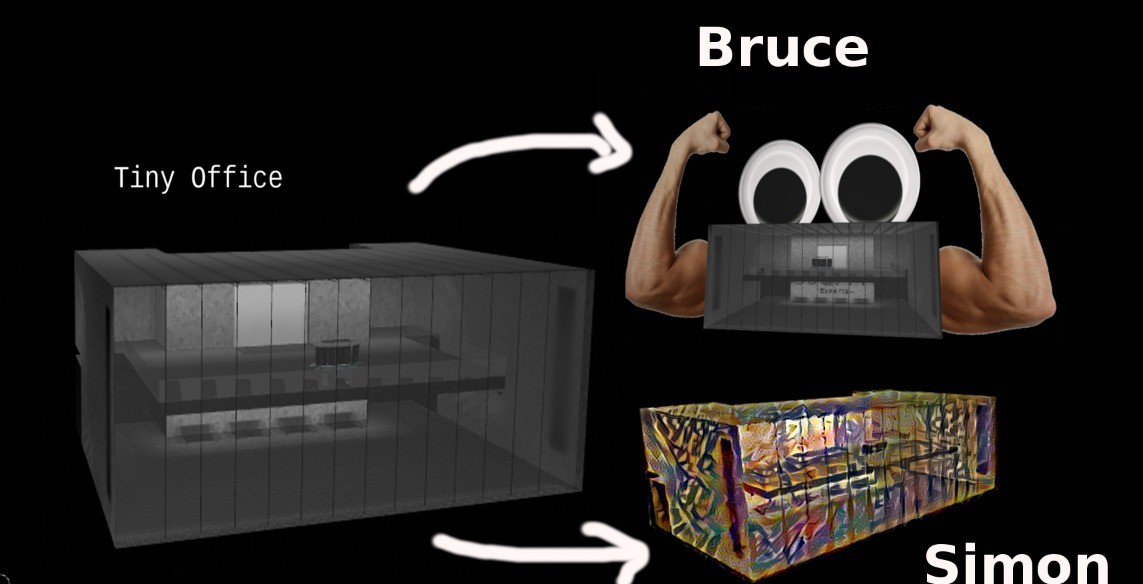

Tiny Office is the beginning of a mixed reality application that I'm working on to make cloud computing a literal term. One inspiration for this work has been HoloLens and this awesome demo of combining MR with IoT. In contrast, my office app looked really dull and boring compared to Microsoft's, so I needed to quickly jazz it up:

The idea is to give an object a persona [Bruce], or perhaps represent the object in its idle state as an art piece by periodically running its texture files through various creative neural networks that dream up new skins. I also want the ability to change perspectives: to put on a headset, shrink into my office, then peer out the window and feel as tiny as a beetle, kind of like this.

Tiny Office represents an avatar for my Raspberry Pi running a GNU/Linux operating system.

For more information, check out Hacking Within VR Pt. 4.

alusion
