Abstract Ideas

I came to Hollywood with a plan, and as doors opened, that vision steadily matured. It was late September 2015 when I was introduced to this space. I snapped this 360 with my phone:

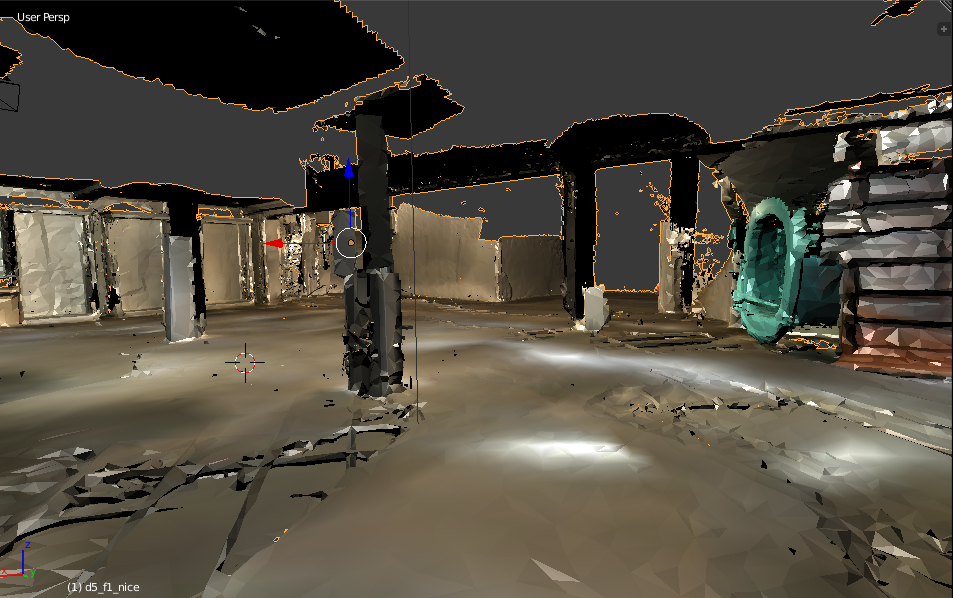

This is the story of a work in progress: a peek into a grand design for the ultimate experiment in connecting the digital and physical worlds and starting a new art movement. I explained that this could be done by combining live 360 and 3D scanning technology to transform the physical space into an online mixed reality world. I had the right tools to produce this vision, so when my Tango arrived I got to work scanning the space:

The Tango is normally meant for scanning small spaces or furniture, so scanning a 10,000+ sq ft space really tested its limits. After some practice and 15+ scans, I had pieces that were starting to look good and was ready to texture-bake them in Blender:

At this point, I felt victorious. With the space captured as a scale 3D model, everything that follows gets easier, and an engine like Janus opens up astounding possibilities for tasks such as event planning. It can make a 3D modeller's job easier as well. Link to Album

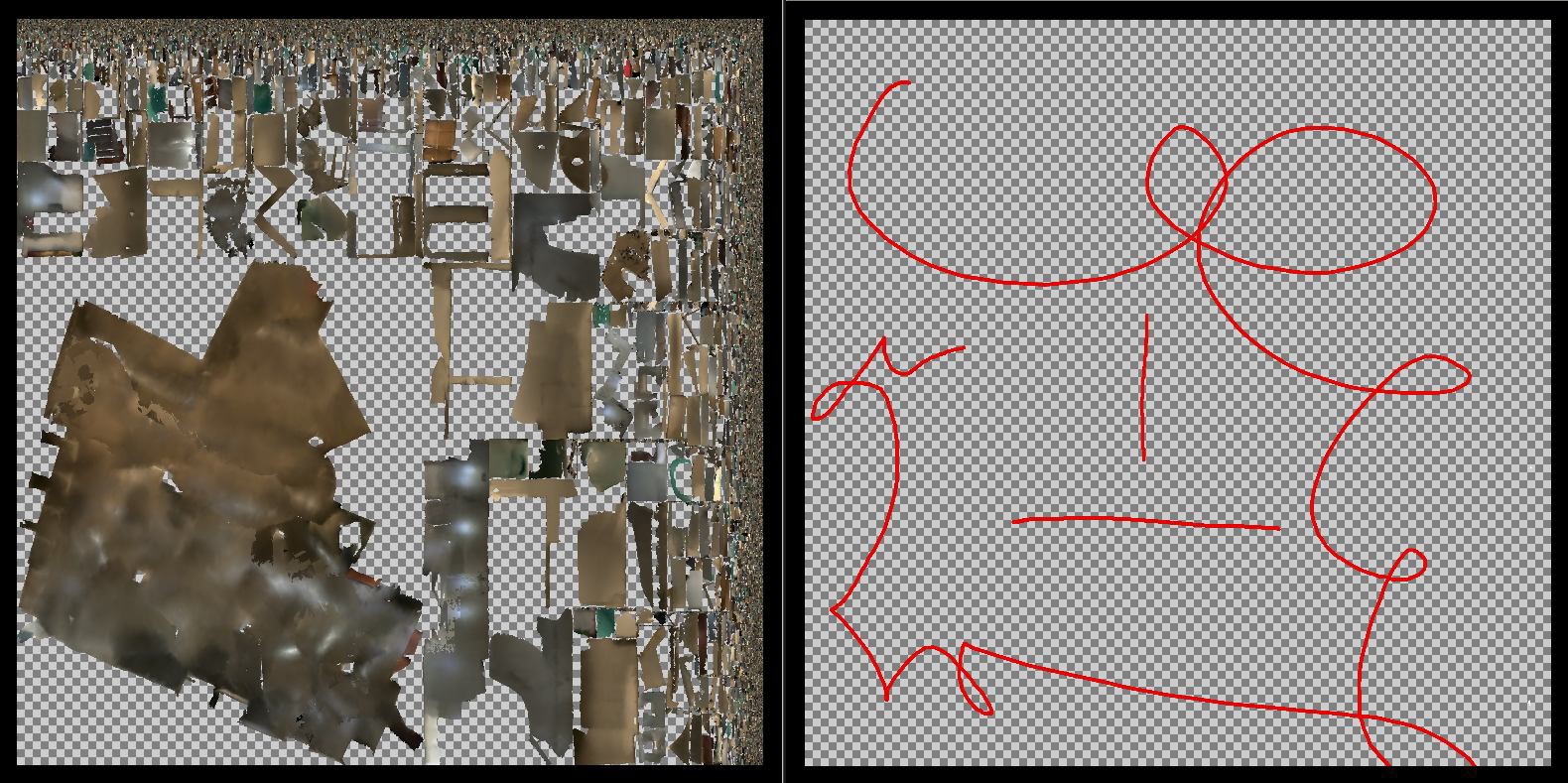

Next, I wanted to experiment with the neural network work I was doing at the time:

I learned that the paint wears thin without substantial GPU processing power. From a distance, however, the results did look good. What if the gallery itself were part of the art show, and with AVALON people could take it home with them or turn it into a small hologram? I had long since stopped believing in mistakes; they are opportunities to gain a deeper understanding of the system. Link to Video

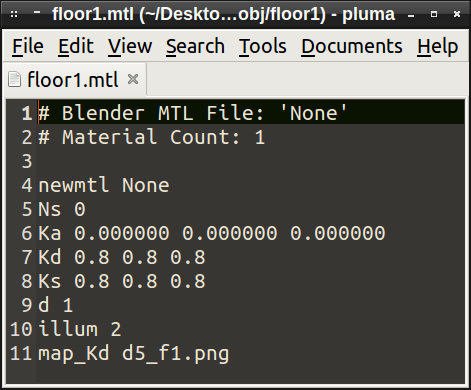

One method I found works really well for augmenting physical spaces is stylizing the texture maps. Standard Wavefront OBJ files usually come with an MTL file that points to a texture:

```
newmtl Textured
Ka 1.000 1.000 1.000
Kd 1.000 1.000 1.000
Ks 0.000 0.000 0.000
d 1.0
illum 2
map_Ka lenna.tga # the ambient texture map
map_Kd lenna.tga # the diffuse texture map (most of the time, it will be the same as the ambient texture map)
map_d lenna_alpha.tga # the alpha texture map
map_Ks lenna.tga # specular color texture map
map_Ns lenna_spec.tga # specular highlight component
map_bump lenna_bump.tga # some implementations use 'map_bump' instead of 'bump' below
bump lenna_bump.tga # bump map (which by default uses luminance channel of the image)
disp lenna_disp.tga # displacement map
decal lenna_stencil.tga # stencil decal texture (defaults to 'matte' channel of the image)
```

You can change the texture lines (such as map_Kd) to point to whatever texture you'd like, including GIFs. It's very simple stuff for anyone with any level of 3D modeling experience.
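If you swap textures often, a small script can do the edit. The sketch below is a hypothetical helper (`swap_texture` is my name, not part of any tool); it assumes the statements you want to change are `map_Kd` and `map_Ka`, and it rewrites only the filename, dropping any options or trailing comment on those lines.

```python
def swap_texture(mtl_text: str, new_texture: str, keys=("map_Kd", "map_Ka")) -> str:
    """Point every texture statement named in `keys` at `new_texture` (hypothetical helper)."""
    out = []
    for line in mtl_text.splitlines():
        parts = line.strip().split(None, 1)  # keyword, then the rest of the line
        if parts and parts[0] in keys:
            # Keep the keyword; replace the filename (options or a trailing comment are dropped)
            out.append(f"{parts[0]} {new_texture}")
        else:
            out.append(line)  # every other statement passes through unchanged
    return "\n".join(out) + "\n"
```

For example, `swap_texture(open("scan.mtl").read(), "graffiti.gif")` (with a hypothetical `scan.mtl`) returns the same material with its ambient and diffuse maps pointing at the GIF; write the result back to the file Janus loads.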

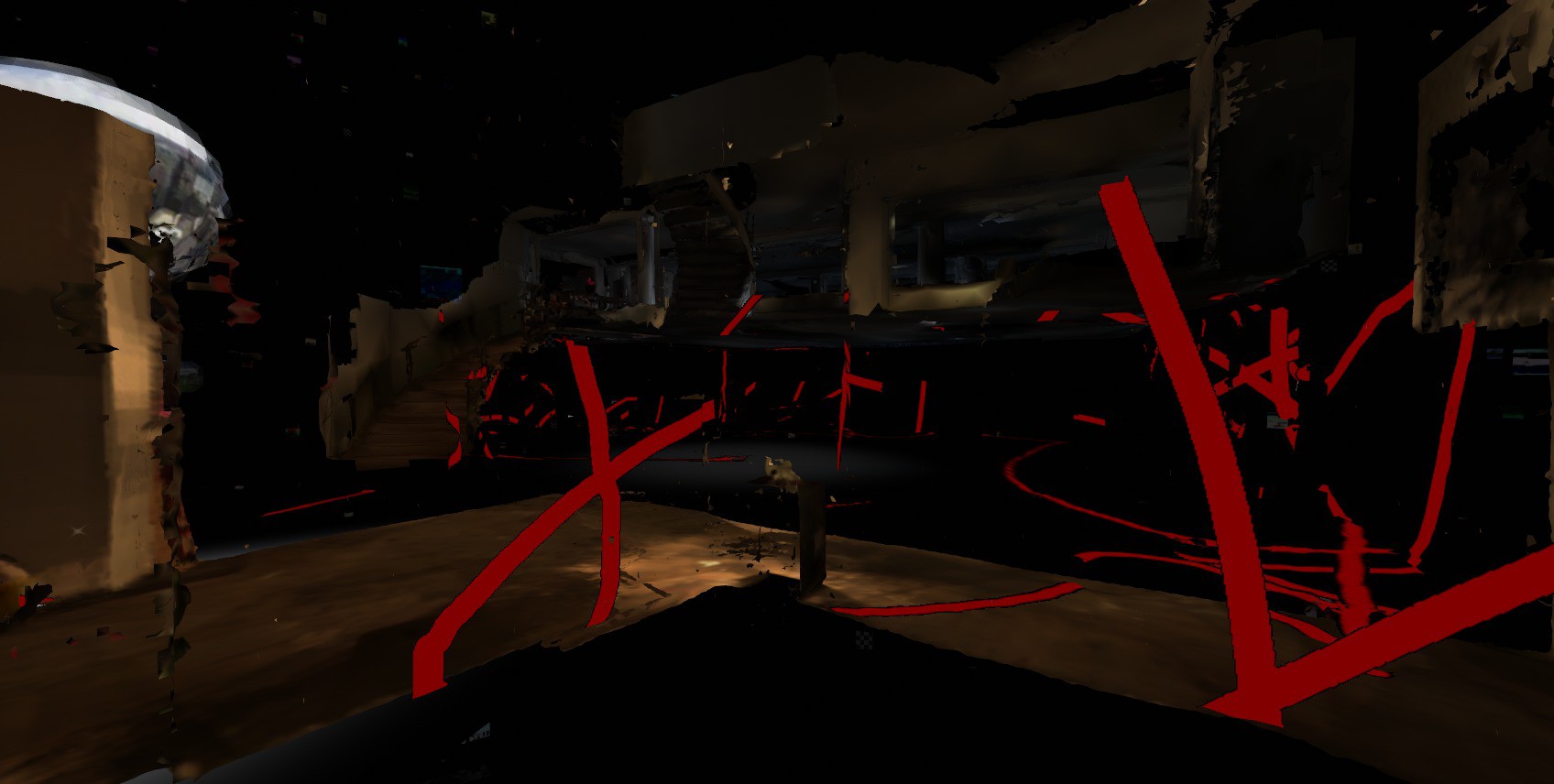

Digital graffiti. This planted a seed in my mind that we'll return to later.

I played with generative algorithms for creating textures and experimented with alpha channels. What I came up with one night resembles shadow puppetry, except that instead of casting a shadow, the object lights the stage from behind or underneath:
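To illustrate what a generative texture with an alpha channel involves, here is a small sketch using only the Python standard library. The ring pattern is a hypothetical stand-in, not the algorithm I used that night: each pixel's brightness also drives its opacity, so bright rings stay solid while dark gaps become see-through, and the result is saved as an 8-bit RGBA PNG.

```python
import math
import struct
import zlib


def png_chunk(tag: bytes, data: bytes) -> bytes:
    # Each PNG chunk: 4-byte length, 4-byte type, payload, CRC-32 over type + payload
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def make_rgba_png(width: int, height: int, pixel_fn) -> bytes:
    """Encode an 8-bit RGBA PNG; pixel_fn(x, y) returns an (r, g, b, a) tuple of 0-255 ints."""
    raw = bytearray()
    for y in range(height):
        raw.append(0)  # filter type 0 (None) at the start of every scanline
        for x in range(width):
            raw.extend(pixel_fn(x, y))
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 6, 0, 0, 0)  # bit depth 8, color type 6 = RGBA
    return (
        b"\x89PNG\r\n\x1a\n"
        + png_chunk(b"IHDR", ihdr)
        + png_chunk(b"IDAT", zlib.compress(bytes(raw)))
        + png_chunk(b"IEND", b"")
    )


def glowing_rings(x: int, y: int, size: int = 64):
    # Hypothetical pattern: concentric rings whose brightness doubles as opacity
    d = math.hypot(x - size / 2, y - size / 2)
    glow = 0.5 + 0.5 * math.sin(d / 3.0)
    alpha = int(255 * glow) if d < size / 2 else 0  # fully transparent outside the disc
    return (int(255 * glow), 80, 255 - int(255 * glow), alpha)
```

Writing `make_rgba_png(64, 64, glowing_rings)` to a file such as `rings.png` gives a texture that a material's texture lines could point at; swapping `glowing_rings` for another function is where the experimenting happens.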

I began to consider combining image processing scripts with my WiFi data visualization art to create puddles where the texture of the place transforms into a painting. Imagine a droplet of information that stains the fabric of the space you're in. By now it was around Christmas 2015, and as an homage to the artist who opened the door for me, I captured and optimized a photogrammetry scan (Link to album: http://imgur.com/a/mOi7R) so he can overlook this work in progress:

A New Year

I took a trip to the annual Chaos Communication Congress in Hamburg to create a virtual art installation.

You can check out pictures of it at 32c3.neocities.org or visit the link in Janus to see it in VR.

The year is 2016 and the vision is starting to come to life. While I was scanning the gallery, I got a call about an artist using the space to shoot her music video for VR. Intrigued by the telepresence robot and GoPro rig, and already equipped with 3D scans of the space, I agreed to help with post-production. It sounded fun ¯\_(ツ)_/¯

If this video was going to go from 360 video to actual virtual reality, it would take more than simple compositing tricks and video editing. The first thing I needed was virtual actors. AssetGhosts are perfect for this: they're animated and can be controlled and scripted to perform a wide range of tasks. Unfortunately, they are a bit tedious to set up. I needed a fast way to visualize and play around with my assets, so I came up with a simple one-liner that creates a webpage of all the GIFs in a directory, which I can then upload to IPFS:

```shell
(echo "<html>"; echo "<body>"; echo "<ul>"; for i in *.gif; do echo "<li style='display:inline-block'><a href='$i'><img src='$i'></a></li>"; done; echo "</ul>"; echo "</body>"; echo "</html>") > index.html
```

This makes it mad simple to go into a directory and build a palette of assets that I can drag and drop into Janus, with IPFS bringing it online in a peer-to-peer fashion (great for collaboration and version control).
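A Python version of the same idea can be handy on machines without a Unix shell. This is a sketch, not the tool I used; the function names are hypothetical, and it percent-encodes filenames so GIFs with spaces or quotes in their names still load.

```python
from pathlib import Path
from urllib.parse import quote


def build_gallery(gif_names) -> str:
    """Return an HTML page that shows each GIF inline, linked to the file itself."""
    items = []
    for name in sorted(gif_names):
        url = quote(name)  # percent-encode spaces, quotes, <, > and & so the attribute stays valid
        items.append(f"<li style='display:inline-block'><a href='{url}'><img src='{url}'></a></li>")
    return "<html>\n<body>\n<ul>\n" + "\n".join(items) + "\n</ul>\n</body>\n</html>\n"


def write_gallery(directory: str = ".") -> Path:
    """Write index.html next to the GIFs in `directory` and return its path."""
    folder = Path(directory)
    names = [p.name for p in folder.glob("*.gif")]
    out = folder / "index.html"
    out.write_text(build_gallery(names), encoding="utf-8")
    return out
```

After `write_gallery("path/to/gifs")`, the folder can be published with `ipfs add -r path/to/gifs`, and the resulting hash serves the page and every GIF it links to.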

I should mention that exploring other people's rooms in JanusVR is sometimes like treasure hunting. Because of the open way FireBoxRooms are built, if someone were to code a car into Janus, suddenly everyone has a car. I took a sample of 40+ dancing GIFs from my internet collection and dropped them into the club. Then I recorded a ghost of each dancing avatar in numbered sequence (Ctrl+G to start, press again to stop), and Janus automatically saved each one as a text file in the workspace folder. Then you just add a line for each ghost you record:

```xml
<AssetGhost id="ghost_id" src="ghost.txt" />
```
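With 40+ recordings, writing these lines by hand gets old, and a plain alphabetical sort puts `ghost10` before `ghost2`. The sketch below assumes each recorded file carries its recording number in its name (Janus's actual naming may differ), sorts the files naturally, and emits one line per ghost. The `dance` id prefix is a hypothetical choice that keeps these ids distinct from the AssetObject ids.

```python
import re


def natural_key(name: str):
    # re.split with a capture group alternates text and digit runs, e.g.
    # "ghost10.txt" -> ["ghost", "10", ".txt"]; odd positions are always digits
    return [int(tok) if i % 2 else tok.lower() for i, tok in enumerate(re.split(r"(\d+)", name))]


def asset_ghost_lines(ghost_files, prefix: str = "dance"):
    """One <AssetGhost> line per recorded file, in numeric recording order."""
    ordered = sorted(ghost_files, key=natural_key)
    return [f'<AssetGhost id="{prefix}_{n}" src="{src}" />' for n, src in enumerate(ordered, start=1)]
```

Feeding it the `.txt` names from the workspace folder (for example via `pathlib.Path(...).glob("*.txt")`) and joining the result with newlines gives a block to paste into `<Assets>`.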

Ghost files record as much information as you give them, including the avatar you currently have, so if you have a lot of ghosts, it is best to record with no avatar; otherwise you might end up with all cats: https://i.imgur.com/YflvqZN.gif

Your assets tree should also contain an object for each ghost file, like the one below. Give these different ids from your AssetGhost entries:

```xml
<AssetObject id="ghost_1" src="" mtl="" />
```

The final step is adding the Ghost inside the Room tag:

```xml
<Ghost id="ghost_id" xdir="-0.088896 0 -0.996041" ydir="0.125557 0.992023 -0.011206" zdir="0.988096 -0.126056 -0.088187" scale="0.8 0.8 1" lighting="false" shader_id="room_shader" head_id="ghost_blank_obj" head_pos="0 0 0" body_id="ghost_1" anim_id="idle" userid_pos="0 0.3 0" auto_play="true" cull_face="none" />
```
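The xdir, ydir and zdir attributes read like the three axes of the ghost's local frame. The sketch below treats them that way, an assumption inferred from the numbers in this post rather than from Janus documentation: it builds the axes for a plain turn about the vertical axis (matching the xdir = (cos, 0, sin), zdir = (-sin, 0, cos) pattern in the Ghost tags here), checks that a hand-edited set is still orthonormal (the tilted set in the tag above passes), and formats a vector the way the markup writes it.

```python
import math


def yaw_dirs(degrees: float):
    """xdir, ydir, zdir for an upright object turned `degrees` about the vertical axis.

    Assumes the convention seen in this post's Ghost tags: xdir = (cos, 0, sin), zdir = (-sin, 0, cos).
    """
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return (c, 0.0, s), (0.0, 1.0, 0.0), (-s, 0.0, c)


def is_orthonormal(x, y, z, tol: float = 1e-3) -> bool:
    """True if the three axes are unit length and mutually perpendicular (within tol)."""
    def dot(a, b):
        return sum(p * q for p, q in zip(a, b))
    unit = all(abs(dot(v, v) - 1.0) < tol for v in (x, y, z))
    square = all(abs(dot(a, b)) < tol for a, b in ((x, y), (y, z), (x, z)))
    return unit and square


def as_attr(v) -> str:
    """Format a vector like the markup does, e.g. "0.943801 0 0.330513"."""
    parts = []
    for c in v:
        c = round(c, 6)  # six decimals, as in the tags above
        parts.append(f"{c:.6f}".rstrip("0").rstrip(".") if c else "0")
    return " ".join(parts)
```

Keeping the three axes orthonormal matters when editing them by hand: scaling belongs in the separate `scale` attribute, not in the direction vectors.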

Put together, the markup should look like this when layered into the page's HTML:

```xml
<FireBoxRoom>
  <Assets>
    <AssetGhost id="ghost_id" src="file.txt" />
    <AssetObject id="ghost_body_m" src="http://ipfs.io/ipfs/QmRTVpJgfTJBNLcdbfJ1jWhRvkiDaTpizMAxYf2i32Peaw/meme/meme_17.obj" mtl="http://ipfs.io/ipfs/QmRTVpJgfTJBNLcdbfJ1jWhRvkiDaTpizMAxYf2i32Peaw/meme/meme_17.mtl" />
    <AssetObject id="ghost_head" src="http://avatars.vrsites.com/mm/null.obj" mtl="http://avatars.vrsites.com/null.mtl" />
  </Assets>
  <Room>
    <Ghost id="ghost_id" js_id="148" locked="false" onclick="" oncollision="" interp_time="0.1" pos="0 0.199 0.5" vel="0 0 0" accel="0 0 0" xdir="0.943801 0 0.330513" ydir="0 1 0" zdir="-0.330513 0 0.943801" scale="0.8 0.8 0.8" col="#ffffff" lighting="false" visible="true" head_id="ghost_head" head_pos="0 1 0" body_id="ghost_body_m" anim_id="" anim_speed="1" eye_pos="0 1.6 0" eye_ipd="0.065" userid_pos="0 0.5 0" loop="false" gain="1" pitch="1" auto_play="false" cull_face="none" play_once="false" />
  </Room>
</FireBoxRoom>
```
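For a club full of dancers, the same three pieces (AssetGhost, AssetObject, Ghost) repeat once per ghost, so the markup can be generated. This sketch uses Python's standard `xml.etree.ElementTree`; the `dance_N` / `body_N` ids are hypothetical names, and, as in the example above, each Ghost reuses its AssetGhost's id and points `body_id` at its own AssetObject. Only a few Ghost attributes are set; the rest fall back to whatever defaults Janus applies.

```python
import xml.etree.ElementTree as ET


def build_room(dancers) -> str:
    """Build a FireBoxRoom with one AssetGhost, AssetObject and Ghost per dancer.

    `dancers` is a list of (ghost_txt, body_obj, body_mtl) tuples.
    """
    root = ET.Element("FireBoxRoom")
    assets = ET.SubElement(root, "Assets")
    room = ET.SubElement(root, "Room")
    for n, (ghost_txt, body_obj, body_mtl) in enumerate(dancers, start=1):
        ET.SubElement(assets, "AssetGhost", id=f"dance_{n}", src=ghost_txt)
        ET.SubElement(assets, "AssetObject", id=f"body_{n}", src=body_obj, mtl=body_mtl)
        # The Ghost reuses its AssetGhost's id; body_id picks the matching AssetObject
        ET.SubElement(room, "Ghost", id=f"dance_{n}", body_id=f"body_{n}",
                      scale="0.8 0.8 0.8", auto_play="true", cull_face="none")
    ET.indent(root)  # pretty-print; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")
```

The output is a bare FireBoxRoom fragment; paste it into the page where the hand-written markup would go.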

The useful thing about ghosts is that when a user Ctrl-clicks one, their avatar changes into it. This is how the seeds will spread throughout the metaverse.

Link to Album (credit to Spyduck for building the original Cyberia). I really liked the shader that lights this room. You only need to look at the bottom of the code to modify the vectors for the color, timing, and placement of this effect:

```glsl
void applySceneLights() {
    directional(vec3(-0.5,-1,0),vec3(0.15,0.41,0.70)*0.1);

    // dance lights
    light(vec3(-0.3,4,-4), getBeamColor(0,0)*2, -1, 30, 0.8);
    light(vec3(-0.3,4,5), getBeamColor(2,0.2)*2, -1, 30, 0.8);
    light(vec3(0.6,3,-0.4), getBeamColor(2,0.5)*2, -1, 30, 0.8);
    spotlight(vec3(-0.3,6,-4), vec3(0,-1,0), getBeamColor(0,0)*2, 50, 40, 30, 0.45);
    spotlight(vec3(-0.3,6,5), vec3(0,-1,0), getBeamColor(2,0.2)*2, 50, 40, 30, 0.45);
    spotlight(vec3(0.6,6,-0.4), vec3(0,-1,0), getBeamColor(2,0.5)*2, 50, 40, 30, 0.45);

    // neon signs
    spotlight(vec3(-17.71,1.78,7.68), vec3(0,0,-0.9), vec3(0.3,0.5,1)*2, 90, 80, 30, 2);
    spotlight(vec3(-10.28,5.31,-8.79), vec3(0,0,0.9), vec3(0.3,0.5,1)*2, 90, 80, 30, 1.2);

    // other
    spotlight(vec3(-7.754,5.281,11.565), vec3(0,-0.5,0.6), vec3(1,1,1)*2, 75, 60, 30, 1.2);
}
```
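Each dance beam in that shader is a pair: a point light and a downward spotlight at the same x/z, tinted by the same `getBeamColor` call. A small generator keeps the pairs in sync when you move or add beams. This is a sketch; the beam tuple layout is my own choice, and the trailing numeric arguments are copied verbatim from the shader because their meaning isn't documented in this post. With the original three beams it reproduces the six dance-light lines exactly.

```python
def dance_light_lines(beams, spot_y=6):
    """Emit the GLSL 'dance lights' calls for a list of beams.

    Each beam is (x, light_y, z, color_a, color_b): a point light at (x, light_y, z)
    and a downward spotlight at (x, spot_y, z), both tinted by getBeamColor(color_a, color_b).
    """
    lights, spots = [], []
    for x, light_y, z, a, b in beams:
        color = f"getBeamColor({a},{b})*2"
        lights.append(f"light(vec3({x},{light_y},{z}), {color}, -1, 30, 0.8);")
        spots.append(f"spotlight(vec3({x},{spot_y},{z}), vec3(0,-1,0), {color}, 50, 40, 30, 0.45);")
    # Same order as the shader: all point lights first, then all spotlights
    return ["// dance lights"] + lights + spots


# The three beams from applySceneLights()
ORIGINAL_BEAMS = [(-0.3, 4, -4, 0, 0), (-0.3, 4, 5, 2, 0.2), (0.6, 3, -0.4, 2, 0.5)]
```

To add a beam, append another tuple to `ORIGINAL_BEAMS` and paste the printed lines over the dance-lights section.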

A small adjustment to the Room tag makes this shader global:

```xml
<Room pos="0 0 1" xdir="1 0 0" ydir="0 1 0" zdir="0 0 1" shader_id="room_shader">
```
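If you are patching many rooms, the attribute can be added programmatically. This sketch assumes you have the bare FireBoxRoom fragment as a string (not the full HTML page) and that a shader with the id `room_shader` is already declared in `<Assets>`. Note that ElementTree's default parser drops XML comments, so run it on a copy of the fragment.

```python
import xml.etree.ElementTree as ET


def apply_room_shader(firebox_xml: str, shader_id: str = "room_shader") -> str:
    """Return the FireBoxRoom markup with shader_id set on its <Room> tag.

    Other Room attributes (pos, xdir, ...) are left untouched.
    """
    root = ET.fromstring(firebox_xml)
    room = root.find("Room")  # direct child of <FireBoxRoom>
    if room is None:
        raise ValueError("no <Room> element directly under <FireBoxRoom>")
    room.set("shader_id", shader_id)
    return ET.tostring(root, encoding="unicode")
```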

(*・ω・) Party Time (ㆁᴗㆁ✿)

Work needs to be done in several areas:

- Optimize the scans of the gallery

- Implement generative textures

- Introduce 3D dancing avatars

- Build in support for live audio and DJing

- Test live video

- Tweak the particle effects / particle beam

- Decentralize everything as much as possible

This mixed reality project will ideally be platform agnostic. With devices such as the Meta 2 and HoloLens finally reaching developers, we're at the DK1 stage of augmented reality. The metaverse party is online and just getting started; join us.

My vision began as a way to connect the physical and the digital for an art show in Los Angeles that anybody can attend remotely over the internet. Guests will be able to watch a live 360 stream of the event and explore the space. Information will flow both ways: meatspace attendees can hold conversations with holograms that represent people visiting from the internet.

http://alpha.vrchive.com/image/E5j

alusion
