Last week, I was having a conversation with Alex Rich about the best time to post a Hackaday.io project update to maximize visibility, and thereby increase the project's number of new followers and skulls. Would it be best to post while few other people were posting, so that the project would stay on the global feed longer? Or would it be better to post during a high-traffic period, when the larger number of viewers might make up for the project spending less time near the top of the feed? I decided to do some sleuthing to find out when users most often publish project updates, and when they are most likely to skull or follow other projects.

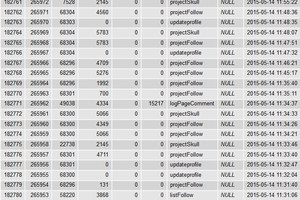

I decided that the simplest way to proceed would be to write a script that loads the global feed at http://hackaday.io/feed and parses the type of each feed item and when it occurred. I thought it might also be helpful to record the user who triggered each feed item, and the project or user (or both) being updated, followed, or otherwise acted on. Once parsed, the information could be saved to a database and analyzed after a large enough sample had been collected.
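The plan above boils down to a small record type plus a table to hold it. Here is a minimal Python sketch of that storage step. It is not the PHP code from the post: it uses the standard-library `sqlite3` module as a stand-in for MySQL, and the `FeedItem` class, the `feed_items` table, and its column names are all hypothetical.

```python
import sqlite3
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable


@dataclass
class FeedItem:
    """One parsed global-feed entry (hypothetical field names)."""
    item_type: str         # the <li> class, e.g. "projectSkull"
    occurred_at: datetime  # estimated from the "N minutes ago" text
    user_id: int           # taken from the "/hacker/<id>" profile link
    title: str             # item title naming the project/user acted on


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open a SQLite database (stand-in for MySQL) and ensure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS feed_items (
               id          INTEGER PRIMARY KEY AUTOINCREMENT,
               item_type   TEXT    NOT NULL,
               occurred_at TEXT    NOT NULL,
               user_id     INTEGER NOT NULL,
               title       TEXT    NOT NULL
           )"""
    )
    return conn


def save_items(conn: sqlite3.Connection, items: Iterable[FeedItem]) -> None:
    """Insert parsed feed items in a single transaction."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT INTO feed_items (item_type, occurred_at, user_id, title) "
            "VALUES (?, ?, ?, ?)",
            # Times are stored as ISO 8601 strings.
            [(i.item_type, i.occurred_at.isoformat(), i.user_id, i.title)
             for i in items],
        )


if __name__ == "__main__":
    conn = open_db()
    save_items(conn, [FeedItem("projectSkull",
                               datetime(2015, 1, 1, 12, 0, tzinfo=timezone.utc),
                               1234, "skulled an example project")])
    print(conn.execute("SELECT item_type, occurred_at, user_id FROM feed_items").fetchall())
```

Storing times as ISO 8601 text keeps them sortable and readable in SQLite, which has no dedicated datetime column type.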

Step 1 was to figure out where exactly to find that information. Using the "Inspect Element" tool in Firefox, I could see that the feed is just a `<ul>`, with each `<li>` assigned a class that corresponds to the type of feed item: `updatedetails`, `projectFollow`, `projectSkull`, etc. Each `<li>` contains two `<div>`s: one with the user's profile picture and a link to his/her profile, and another with the actual content. Importantly, the link attached to the profile picture always has the form "/hacker/userid" and never contains the user's vanity URL (e.g., hackaday.io/bobblake), so I could grab the user id from the last part of this link. To get the time of each item, I would have to parse the plain English representation given at the bottom of each feed item ("a few seconds ago", "23 minutes ago", etc.) and relate it to the time at which I loaded the page. Also, because the feed only displays to-the-minute accuracy for the most recent hour of posts, I would have to run my script once per hour to get the most precise time information. The rest of the data I wanted could be obtained by reading in the feed item's title, links and all.
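Assuming the markup looks the way it is described above (a `<ul>` of `<li>` elements whose class is the item type, with a `/hacker/<id>` profile link in the first `<div>`), the extraction step can be sketched with Python's built-in `html.parser` in place of phpQuery. `FeedParser` and the sample markup are hypothetical, and the live page may well differ.

```python
import re
from html.parser import HTMLParser

# Profile-picture links look like "/hacker/<id>"; numeric ids are an assumption.
HACKER_LINK = re.compile(r"^/hacker/(\d+)$")


class FeedParser(HTMLParser):
    """Collect the item type and user id for each <li> in the feed markup.

    Assumes each <li>'s first class is the feed item type and that the first
    "/hacker/<id>" link inside it is the profile link in the first <div>.
    """

    def __init__(self):
        super().__init__()
        self.items = []       # one dict per <li>, in page order
        self._current = None  # dict for the <li> being parsed, if any

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "li":
            classes = (attrs.get("class") or "").split()
            self._current = {"type": classes[0] if classes else "", "user_id": None}
            self.items.append(self._current)
        elif tag == "a" and self._current is not None and self._current["user_id"] is None:
            match = HACKER_LINK.match(attrs.get("href") or "")
            if match:
                self._current["user_id"] = int(match.group(1))

    def handle_endtag(self, tag):
        if tag == "li":
            self._current = None


if __name__ == "__main__":
    sample = (
        '<ul><li class="projectSkull">'
        '<div><a href="/hacker/1234"><img src="avatar.png"></a></div>'
        '<div><a href="/hacker/1234">bob</a> skulled <a href="/project/99">Thing</a></div>'
        '</li></ul>'
    )
    parser = FeedParser()
    parser.feed(sample)
    parser.close()
    print(parser.items)  # [{'type': 'projectSkull', 'user_id': 1234}]
```

Only the first `/hacker/` link in each item is used, so a second mention of the same user in the content `<div>` does not overwrite the profile-link id.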

The next step was to write the script. I wrote it in PHP, using the phpQuery library to simplify traversing the DOM and accessing HTML element properties. The script reads posts in order until it reaches one whose timestamp contains the substring "hour" or "day", which ensures that it only records posts whose time is given to the nearest minute. Once the information is parsed, it is inserted into a MySQL database on my server. I set up a cron job to call the script every hour, on the hour. However, I eventually realized that the plain English timestamp rounds up to an hour once a post is about 45 minutes old: it goes directly from "45 minutes ago" to "an hour ago". Because each hourly run therefore only sees roughly the most recent 45 minutes of posts, missing about 15 of every 60 minutes, the counts in my database will probably understate the actual number of posts each hour by about 25%.
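The timestamp handling and the stop rule can be sketched in Python as follows. This is a hypothetical illustration rather than the author's PHP: the phrasings it recognizes are assumed from the examples quoted in the post, and `minutes_ago`, `read_recent`, `CAPTURE_FRACTION`, and `estimated_hourly_total` are invented names. The last function applies the 25% estimate, treating each hourly run as seeing 45 of 60 minutes and assuming posts are spread evenly across the hour.

```python
import re
from datetime import datetime, timedelta
from typing import Iterable, Iterator, Optional


def minutes_ago(text: str) -> Optional[int]:
    """Turn "a few seconds ago" / "a minute ago" / "23 minutes ago" into minutes.

    Returns None as soon as the text mentions "hour" or "day", since from that
    point on the feed no longer gives to-the-minute precision.
    """
    text = text.strip().lower()
    if "hour" in text or "day" in text:
        return None
    if "second" in text:
        return 0
    if text.startswith("a minute"):
        return 1
    match = re.search(r"(\d+)\s+minutes?", text)
    if match:
        return int(match.group(1))
    raise ValueError(f"unrecognized timestamp: {text!r}")


def read_recent(timestamps: Iterable[str], loaded_at: datetime) -> Iterator[datetime]:
    """Yield estimated post times, stopping at the first hour/day timestamp."""
    for text in timestamps:
        minutes = minutes_ago(text)
        if minutes is None:
            break  # everything after this is older and only hour-accurate
        yield loaded_at - timedelta(minutes=minutes)


# An hourly run only sees roughly the most recent 45 minutes of posts, because
# older ones already read "an hour ago". Assuming posts are spread evenly
# across the hour, that is 45/60 = 75% of them.
CAPTURE_FRACTION = 45 / 60


def estimated_hourly_total(observed: int) -> float:
    """Scale an observed hourly count up to account for the unseen minutes."""
    return observed / CAPTURE_FRACTION
```

For example, `estimated_hourly_total(30)` returns `40.0`: 30 observed posts correspond to roughly 40 actual posts if a quarter of the hour goes unseen.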

I wrote a separate script to export the contents of the database to a CSV file for analysis in a spreadsheet. This script can be run by visiting http://www.bobblake.me/had_scraper. All my code is available on GitHub at https://github.com/bob-blake/hackaday_io_feed_scraper.
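A CSV export like the one described needs nothing beyond the standard library. This minimal sketch assumes a SQLite database and a hypothetical `feed_items` table; the author's actual script runs against MySQL and is served over the web, which this does not attempt to reproduce.

```python
import csv
import io
import sqlite3


def export_csv(conn: sqlite3.Connection, out) -> int:
    """Write every row of the hypothetical feed_items table to `out` as CSV.

    `out` is any text file-like object (open file, sys.stdout, io.StringIO).
    Returns the number of data rows written.
    """
    cursor = conn.execute("SELECT * FROM feed_items")
    writer = csv.writer(out)
    writer.writerow([column[0] for column in cursor.description])  # header row
    count = 0
    for row in cursor:
        writer.writerow(row)
        count += 1
    return count


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE feed_items (item_type TEXT, occurred_at TEXT, user_id INTEGER)")
    conn.execute("INSERT INTO feed_items VALUES ('projectSkull', '2015-01-01T12:00:00', 1234)")
    buffer = io.StringIO()
    export_csv(conn, buffer)
    print(buffer.getvalue())
```

When writing to a real file instead of a buffer, open it with `newline=""`, as the `csv` module documentation recommends, so line endings are not doubled on Windows.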

So now, it seems like all that's left is to wait a couple of weeks for the database to fill up with enough data to analyze meaningfully. That would be the case, except that the folks at Hackaday just announced their API, which very conveniently serves up all of the information that I just started scraping. Seriously, the API was released literally the day after I got this script running (I'm not bitter or anything; this was still a fun exercise). The API can be used to get feed items with to-the-minute precision...

Bob Blake

Chad

Did you know we have an API that might make this a snap?

http://dev.hackaday.io