I've got a numerically-controlled oscillator (NCO) and lock-in detector working on a DE0-Nano FPGA board, and I've now hooked it up to my preamplifier board. At the moment I'm generating the ~32.768 kHz excitation sine wave with a sigma-delta DAC implemented in the FPGA (50 MHz PWM output on a GPIO pin).

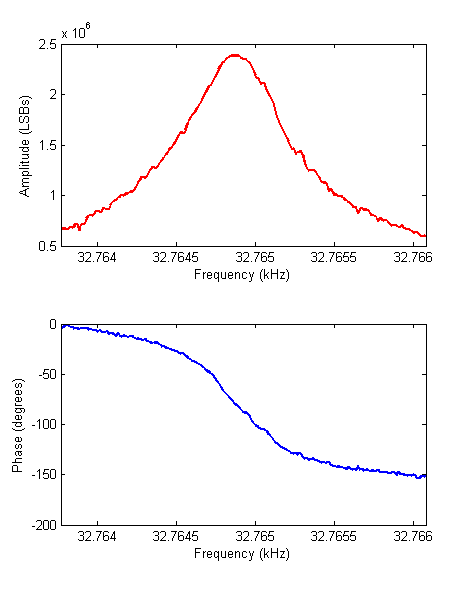

After finding the tuning fork's resonance with a function generator and scope, I used a Nios II soft processor in the FPGA to sweep the NCO frequency over a narrow ~2.3 Hz range around resonance while reading the amplitude and phase outputs of the lock-in detector. Here are the results:

The data is a bit noisy, possibly due to the SMPS powering the preamplifier or the PWM DAC, but the resonance peak and phase shift are clearly defined. The preamplifier also introduces some phase offset. The point of this frequency sweep is to zero in on the resonant frequency and find the phase at resonance, which here is not exactly -90 degrees (it's about -81 degrees) because of that offset. The next step is to add a PI control loop between the lock-in and the NCO, using this resonant phase as the controller's setpoint so that it always drives the sensor at resonance.
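The planned PI loop could look something like the sketch below: each time the lock-in produces a new phase reading, the error against the resonant-phase setpoint nudges the NCO frequency. The struct, gains and update interval are all hypothetical, and the sign assumes the measured phase falls as frequency rises (as for a simple resonator), so a phase above the setpoint means the drive is below resonance. Flip the sign if the lock-in's phase convention is reversed.

```c
/* Hypothetical PI controller steering the NCO frequency to hold a phase setpoint. */
typedef struct {
    double kp;         /* proportional gain, Hz per degree (assumed) */
    double ki;         /* integral gain, Hz per degree per second (assumed) */
    double integrator; /* accumulated frequency correction, Hz */
    double f_start_hz; /* initial frequency, e.g. the peak found by the sweep */
} phase_pi;

/* Wrap a phase difference into [-180, 180) degrees. */
static double wrap_deg(double d)
{
    while (d >= 180.0) d -= 360.0;
    while (d < -180.0) d += 360.0;
    return d;
}

/* One loop update every dt_s seconds; returns the new NCO frequency in Hz. */
static double phase_pi_update(phase_pi *c, double setpoint_deg,
                              double measured_deg, double dt_s)
{
    /* Phase above the setpoint => driving below resonance => raise frequency. */
    double err = wrap_deg(measured_deg - setpoint_deg);
    c->integrator += c->ki * err * dt_s;
    return c->f_start_hz + c->kp * err + c->integrator;
}
```

The integral term is what lets the loop settle with zero phase error even when resonance sits away from the starting frequency. A practical loop would also clamp the integrator (anti-windup) and limit the output to a safe frequency range.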

We can also calculate the Q-factor from the resonant frequency and the width of the amplitude curve. Just looking at the amplitude graph, and using

$$Q = \frac{f_0}{\Delta f}$$

where $f_0$ is the resonant frequency and $\Delta f$ is the -3 dB (half-power) bandwidth of the amplitude peak, gives a pretty large Q of about 40000. I expect it to decrease by an order of magnitude once I remove the fork from its metal can. Because Q is so high, the sweep took about 30 seconds despite being very narrow: the amplitude responds very slowly to changes in the drive frequency, but the frequency responds immediately.

Dan Berard
