(This is the first of a series of logs that I'll be posting over the next few days giving a brief overview of the work I've done on Firefly to date, and what I'm hoping to get done over the next few months.)

One component of Firefly's base algorithmic layer deals with escaping from moving threats in complex, dangerous environments by mimicking how fruit flies avoid swatters. For example, if one of these robots is carrying out a search and rescue mission after an earthquake and the ceiling collapses or an object falls, this algorithm helps the robot recognize the threat and move away in time. This is where the story of Firefly begins, so in this log I'll talk about my inspiration for the project and describe how I designed and implemented the hardware and software for escaping from moving threats.

Inspiration

Firefly started out as a high school science fair project. In the summer before ninth grade, my family returned from a vacation to find our house filled with fruit flies, because we had forgotten to throw out some bananas on the kitchen counter before we left. I spent the next month constantly trying to swat them and getting increasingly frustrated when they kept escaping, but I also couldn't help but wonder about what must be going on "under the hood"—what these flies must be doing to escape so quickly and effectively.

It turns out that the key to the fruit fly's escape is that it accomplishes a lot with very little. Fruit flies use very simple sensing (their eyes, in particular, have the resolution of a 26 by 26 pixel camera), but because they have so little sensory information to begin with, they can process all of it very quickly to detect motion. As a result, fruit flies are able to see ten times faster than we can, even with brains a millionth as complex as ours, and this is what enables them to respond to moving threats so quickly.

Around the same time, drones were in the news a lot. It quickly became clear that although they had tremendous potential to help after emergencies or natural disasters, they simply weren't robust or capable enough. One specific problem was that these robots weren't able to react to fast-moving threats in their environments. Existing collision-avoidance algorithms don't work here because they're computationally intensive and rely on complex sensors, so I made the connection to fruit flies: I wanted to see whether we could apply the same simplicity that makes the fruit fly so effective at escaping to make flying robots better at reacting to their environments in real time.

Building a sensor module to detect approaching threats

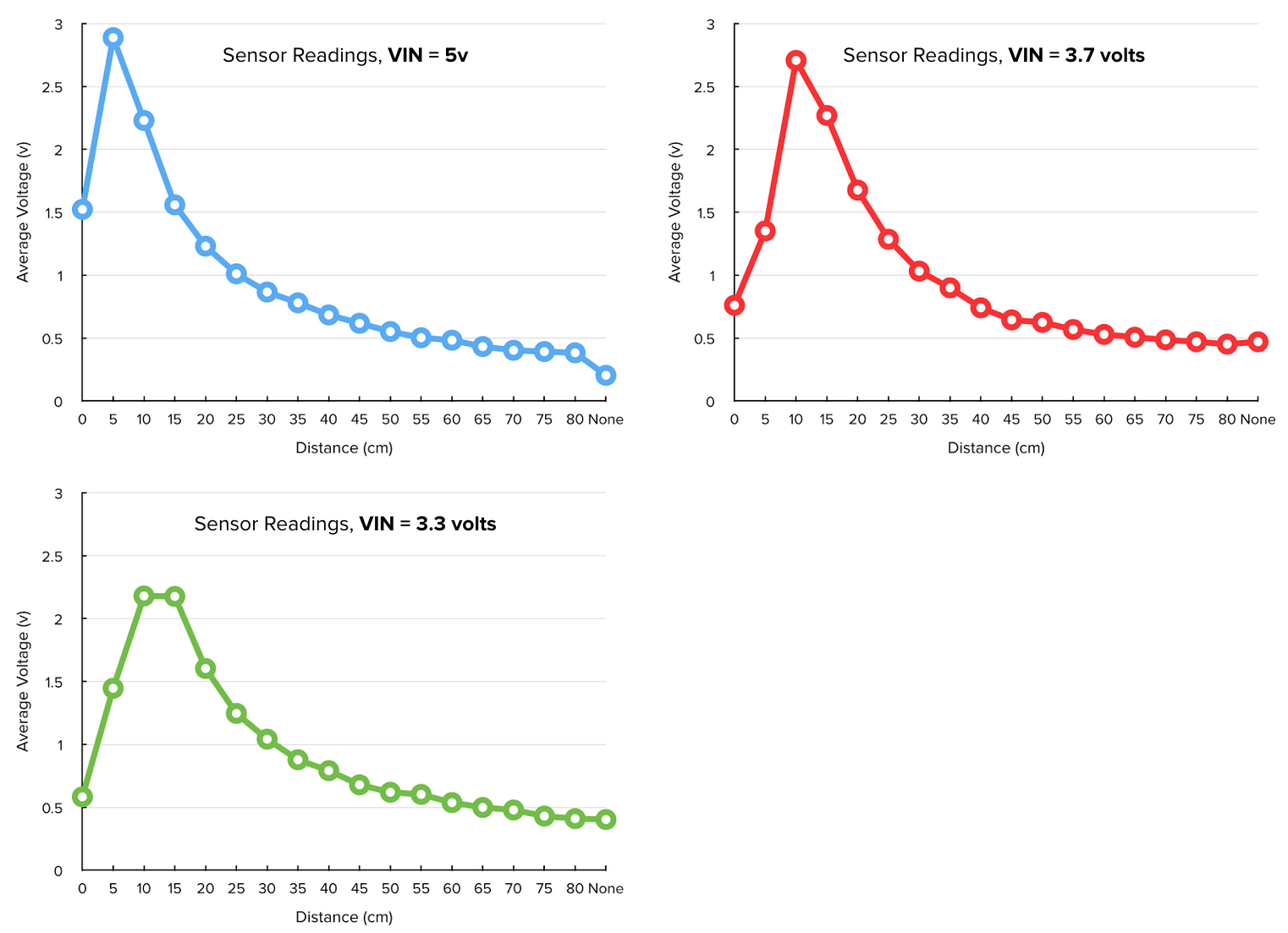

I had planned to use a small camera, combined with some simple computer vision algorithms, to detect threats. However, I found that even the lowest-resolution cameras available today create too much data to process in real time, especially on the 72 MHz ARM Cortex processor on the Crazyflie, the quadrotor on which I wanted to implement my work. I came up with a few alternatives and I eventually settled on these infrared distance sensors. (Sharp has since released a newer version that's twice as fast and has twice the range, so that's what I'm planning to use for my latest prototype.) The beauty of this approach is that each of these sensors produces a single number, compared to laser scanners or depth cameras, which produce tons of detailed data that’s nearly impossible to process and react to quickly.

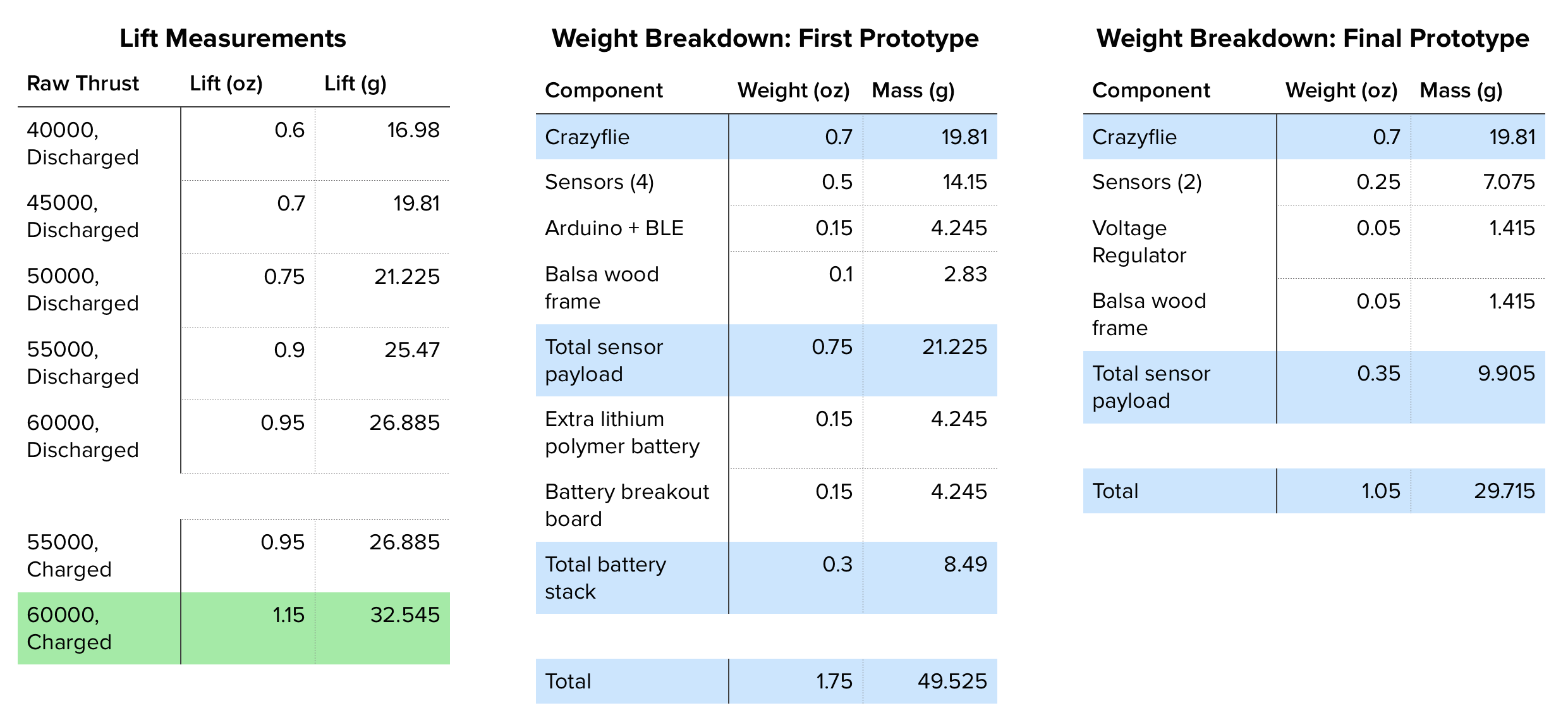

Initially, I wanted to use four of these sensors to precisely sense the direction from which a threat is approaching. I also wanted to use a separate microcontroller and battery to avoid directly modifying the Crazyflie's electronics. I built a balsa wood frame for the sensors, attached a Tinyduino, and added a small battery, and... my robot wouldn't take off, because it didn't have enough payload capacity to carry all of this added weight. I performed lift measurements and soon found that I could use a maximum of two sensors, and that I had to interface them directly with the Crazyflie.

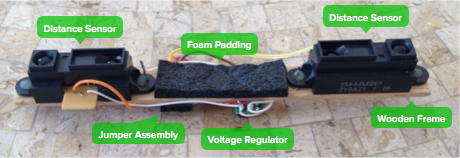

Using 3.3 V would have been optimal for power, because the least current would be drawn from the quadrotor's battery, while using 3.7 V (i.e., the unregulated output of the quadrotor's battery, which actually varies from 4.2 V to 3 V throughout the discharge cycle) would have been ideal for weight, because I wouldn't need a voltage regulator at all. However, supplying the sensors at either of these voltages significantly reduced their range, so I decided to add a 5 V regulator to power the sensors. Here's the completed sensor module:

Creating algorithms to mimic the fruit fly's escape

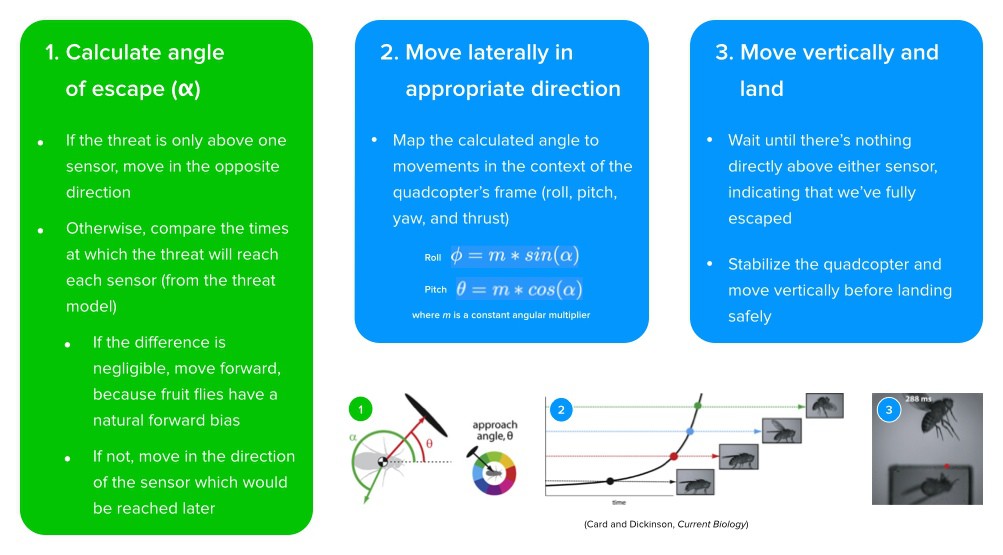

Next, I had to create algorithms that used the distances returned by these sensors to respond to threats. On a high level, this is done in three steps: identification, prediction, and escape.

- Identification. When the distance at either sensor drops below the maximum sensor range, assume that there's a threat, and start monitoring the output of these sensors more closely.

  - This is the easiest step, but it's necessary because we need to know when a threat's approach starts.

- Prediction. Incorporating new measurements at 25 Hz, recursively model the threat's trajectory with a second-order polynomial. Use this polynomial to predict when the threat will reach each sensor, and use these predictions to decide when, and in what direction, to escape.

  - This is the hard step, which is why I initially skipped it. However, I found that, without this step, escape was unreliable, because the quadrotor wasted time and battery power responding to objects overhead that eventually moved away.

  - I tested many different algorithms for this using offline data, including using the output of a Kalman filter's prediction step. However, I found that polynomial fitting was both the most effective and the least computationally intensive.

  - One implementation note: I used the sum-based equations for polynomial fitting, meaning that all we're really doing here is adding and multiplying numbers.

- Escape. Escape using a segmented escape algorithm based on fruit fly behaviors:

Because the Crazyflie wasn't very well-documented at the time of this work, I spent a while trying to reverse-engineer it and figure out how I could integrate my sensor module and escape algorithms. This blog post about interfacing the Crazyflie with an ultrasonic sensor was especially helpful, and, after a few weeks reading this post, looking at the Crazyflie's source code, and examining schematics, I decided to connect my sensors to pins 5 and 7 (analog inputs) on the Crazyflie's expansion header. I added about 500 lines of C code to the commander task in the Crazyflie's firmware.

Testing

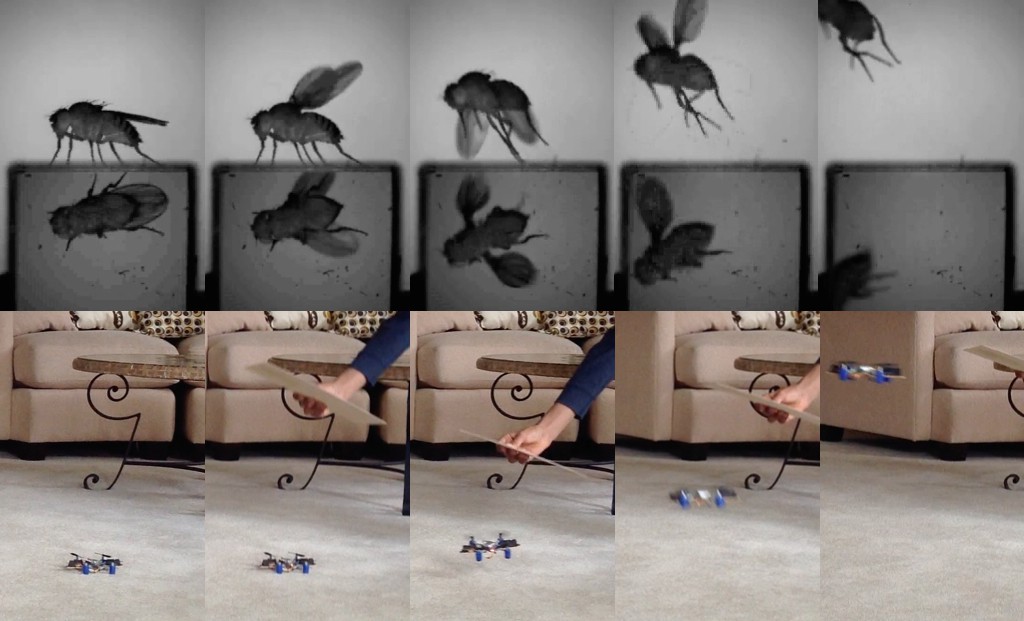

Finally, to test my robot, I used a sheet of plywood to simulate an approaching threat, and I was really excited when my robot escaped with a success rate of 100% across twenty experiments. Here's a slow-motion (0.25x) video of one of them:

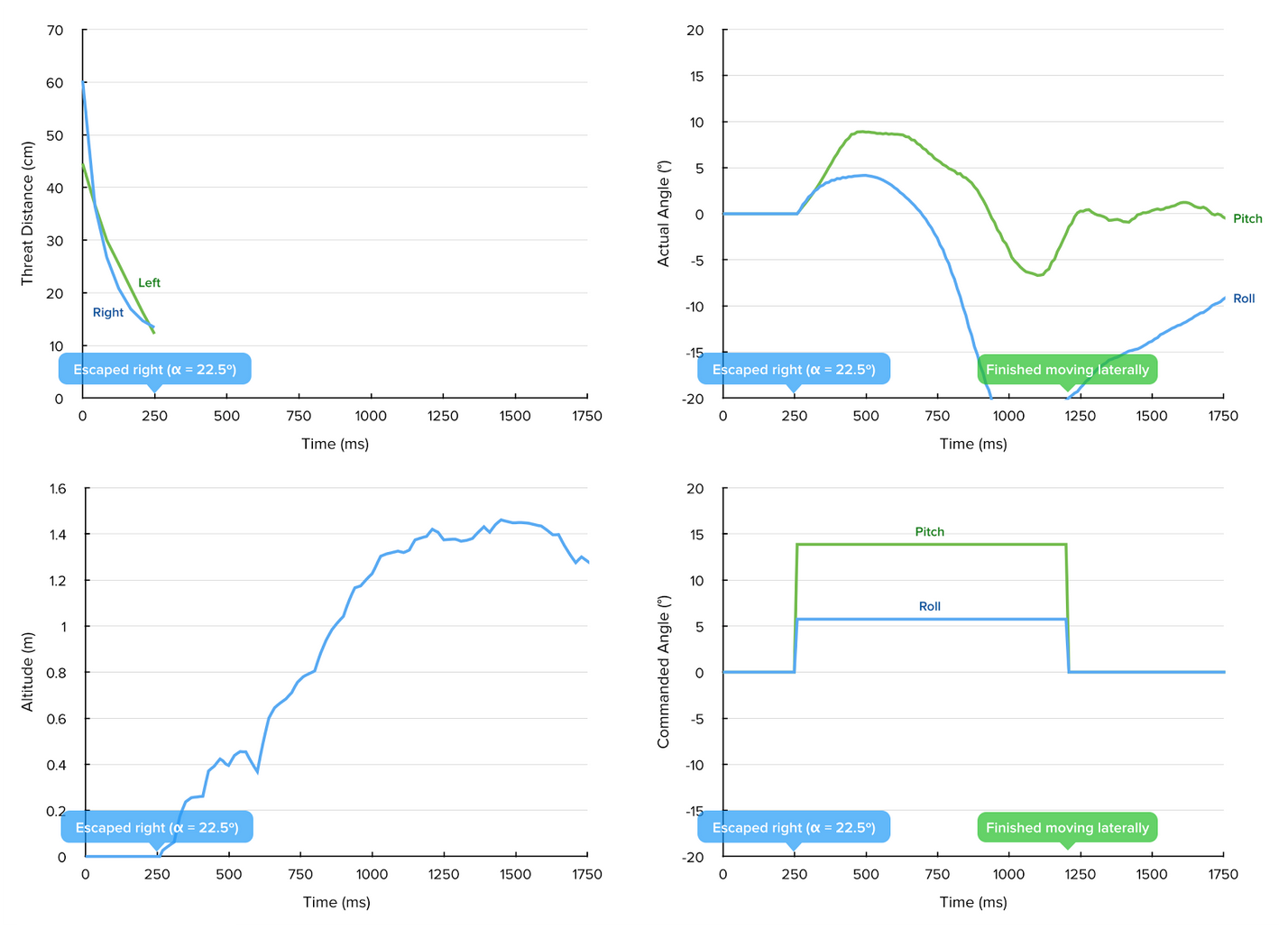

Also, here are some graphs showing sensor readings, control inputs, and control outputs for one experiment:

Mihir Garimella
