The last log (Bit shuffling: what goes where ?) explains how the address bits are shuffled. Once the logical value and all the modes/options are obtained, this information is compiled into a 32-bit word that is written to the file that will program the Flash.

There are two conversion functions: hexadecimal and decimal. They are very similar and can in fact be merged into one, since a base parameter works very well with this system (I just realised that on ASCII systems it's better to have separate conversion routines, but here it's pointless). So let's just forget about function pointers...

Given the base parameter, it's easy to decompose the number into digits. There is only one corner case to deal with: what to display when the input is zero. Of course it should display ___0 (and not a blank display, or else people wonder if the circuit works at all) but there are 2 ways to achieve this:

- initialise the display to ___0 and use a while (logic > 0) {} loop (which is not entered only when the input is zero)

- or initialise to nothing (____) and use a do..while (repeat...until) so the remainder of zero is written at least once.

The 2nd choice is better: the initial value is all-cleared, and the corner case is handled by the loop itself, so the do-while version is lighter.
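The two options can be compared side by side with a plain character display. This is only a sketch: the helper names `withWhile` and `withDoWhile`, the `_` placeholder and the 4-character width are assumptions made for the illustration, and the values are assumed to fit in four digits.

```javascript
// Hypothetical helpers, for illustration only.
const DIGITS = "0123456789ABCDEF";
const WIDTH = 4;

// Option 1: preset the display to ___0, overwrite while something is left.
function withWhile(value, base) {
  const display = ["_", "_", "_", "0"];
  let pos = WIDTH - 1;
  while (value > 0) {
    display[pos--] = DIGITS[value % base];
    value = Math.floor(value / base);
  }
  return display.join("");
}

// Option 2: preset to ____, the do..while body runs at least once,
// so the remainder of zero is written without a special case.
function withDoWhile(value, base) {
  const display = ["_", "_", "_", "_"];
  let pos = WIDTH - 1;
  do {
    display[pos--] = DIGITS[value % base];
    value = Math.floor(value / base);
  } while (value > 0);
  return display.join("");
}

console.log(withWhile(0, 10), withDoWhile(0, 10));     // ___0 ___0
console.log(withWhile(255, 16), withDoWhile(255, 16)); // __FF __FF
```

Both give the same result; the difference is only in what the initial value has to contain.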

So we have the following code:

    DigitLUT=[ "0", "1", "2", "3", "4", "5", "6",
               "7", "8", "9", "A", "B", "C", "D", "E", "F"];

    // The previously described recursive function
    function recurse( index, logic, sign, zero_ext, base) {
      msg+=" "+logic;
      if (index>0) {
        var bit=BinLUT[index--];
        recurse(index, logic, sign, zero_ext, base);
        if (bit < 0) {
          // special code for the modes
          switch(bit) {
            case -1: sign=1; break;
            case -2: zero_ext=42; break;
            case -3: base=16; break;
          }
        }
        else
          logic|= 1 << bit;
        recurse(index, logic, sign, zero_ext, base);
      }
      else {
        // This is a "leaf" call,
        // where conversion takes place:
        var i=0; // index of the digit;
        var d;   // the digit
        var m="\n";
        do {
          d=(logic % base)|0;
          logic= (logic/base)|0; // |0 truncates the quotient to an integer
          m=DigitLUT[d]+m;
        } while (logic > 0);
        msg+=" - "+m;
      }
    }

This code seems to work pretty well (once you solve the rounding/FP issues of JavaScript by ORing with 0):

    ------ -1
    0 0 0 - 0
    16384 - 16384
    32768 32768 - 32768
    49152 - 49152
    ------ -3
    0 0 0 0 0 - 0
    16384 - 4000
    32768 32768 - 8000
    49152 - C000

This code is directly inspired by traditional binary-to-ASCII conversion functions. However, our case differs substantially from an ASCII terminal because each new iteration writes to a different digit, and each digit position uses different codes.

In JavaScript or other similar languages, this is where multi-dimensional arrays become useful: there are 5 sub-arrays (one for each digit) with 16 entries each (one for each value). This is where the counter i starts to be useful...

Well, being a rebel, I prefer to use a single array with 5×16 entries and increment i by 16.
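To show that nothing is lost by flattening, here is a small sketch that fills a nested table and a flat one with the same placeholder strings and looks up the same entry in both. The names `nested`, `flat`, `lookupNested` and `lookupFlat` are made up for the example, and the strings stand in for the real bit patterns.

```javascript
const DIGIT_COUNT = 5;  // digit positions
const VALUES = 16;      // values per digit (enough for hexadecimal)

const nested = [];      // nested[digit][value]
const flat = [];        // flat[16 * digit + value]

for (let digit = 0; digit < DIGIT_COUNT; digit++) {
  nested.push([]);
  for (let value = 0; value < VALUES; value++) {
    // Placeholder pattern such as "d2vA" (digit 2, value A)
    const pattern = "d" + digit + "v" + value.toString(16).toUpperCase();
    nested[digit].push(pattern);
    flat.push(pattern);
  }
}

function lookupNested(digit, value) { return nested[digit][value]; }

// i is the running offset: 0, 16, 32... one step of 16 per digit
function lookupFlat(i, value) { return flat[i + value]; }

console.log(lookupNested(2, 10), lookupFlat(2 * 16, 10)); // d2vA d2vA
```

With the flat layout the loop only has to add 16 to a single counter, and no second index is needed.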

    var i=0; // index of the digit;
    var d;   // the digit
    var m="\n";
    do {
      d=(logic % base)|0;
      logic= (logic/base)|0;
      m=DigitLUT[d+i]+m;
      i+=16; // skip to the 16 entries of the next digit
    } while (logic > 0);

Then, it's "only a matter" of generating the right bit pattern for each number.

Yes, the time has come to work on this...

The data pins are connected to the respective segments:

    D    1    2
    0    F2
    1    F1
    2    F3   A3
    3    G3   C3
    4    C1   G1
    5    B1   F0
    6    D1   E1
    7    C0   D0
    8    A2   B2
    9    A1   F1
    10   E3   D3
    11   F2   B3
    12   E2   G2
    13   G0   E0
    14   D2   C2
    15   B0   A0

Conversely, and more interestingly, the segments are connected to the following data pins (+16 means connected during phase 2):

    DigitPins=[
    //   A      B      C      D      E      F      G
      [ 15+16, 15   ,  7   ,  7+16, 13+16,  5+16, 13    ],
      [  9   ,  5   ,  4   ,  6   ,  6+16,  9+16,  4+16 ],
      [  8   ,  8+16, 14+16, 14   , 12   , 11   , 12+16 ],
      [  2+16, 11+16,  3+16, 10+16, 10   ,  2   ,  3    ],
    ];
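The second table can be derived mechanically from the first one, which is a convenient way to catch transcription mistakes. The sketch below assumes that columns 1 and 2 of the pin table are phase 1 and phase 2, reads each name as a segment letter followed by a digit number, and leaves out pins 0 and 1, which belong to the extra digit. The names `PinTable` and `derived` are made up for the example.

```javascript
// pin number -> [name during phase 1, name during phase 2]
const PinTable = {
  2:  ["F3", "A3"],  3:  ["G3", "C3"],  4:  ["C1", "G1"],
  5:  ["B1", "F0"],  6:  ["D1", "E1"],  7:  ["C0", "D0"],
  8:  ["A2", "B2"],  9:  ["A1", "F1"],  10: ["E3", "D3"],
  11: ["F2", "B3"],  12: ["E2", "G2"],  13: ["G0", "E0"],
  14: ["D2", "C2"],  15: ["B0", "A0"]
};

// derived[digit][segment] = pin + 16 * phase (phase is 0 or 1)
const derived = [[], [], [], []];
for (const pin of Object.keys(PinTable)) {
  PinTable[pin].forEach(function (name, phase) {
    const segment = "ABCDEFG".indexOf(name[0]); // 0=A ... 6=G
    const digit = Number(name[1]);
    derived[digit][segment] = Number(pin) + 16 * phase;
  });
}

console.log(JSON.stringify(derived[0])); // [31,15,7,23,29,21,13]
```

The first row matches the hand-written one: 15+16, 15, 7, 7+16, 13+16, 5+16, 13.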

Let's combine these pin numbers with the list of active segments (0=A, 1=B, etc.)

    Numbers=[
      [ 0, 1, 2, 3, 4, 5 ],    // 0
      [ 1, 2 ],                // 1
      [ 0, 1, 3, 4, 6 ],       // 2
      [ 0, 1, 2, 3, 6 ],       // 3
      [ 1, 2, 5, 6 ],          // 4
      [ 0, 2, 3, 5, 6 ],       // 5
      [ 0, 2, 3, 4, 5, 6 ],    // 6
      [ 0, 1, 2 ],             // 7
      [ 0, 1, 2, 3, 4, 5, 6 ], // 8
      [ 0, 1, 2, 3, 5, 6 ],    // 9
      [ 0, 1, 2, 4, 5, 6 ],    // A
      [ 2, 3, 4, 5, 6 ],       // B
      [ 0, 3, 4, 5 ],          // C
      [ 1, 2, 3, 4, 6 ],       // D
      [ 0, 3, 4, 5, 6 ],       // E
      [ 0, 4, 5, 6 ]           // F
    ];

(ok this looks a lot like what I have done already for the #Discrete YASEP at "Redneck" disintegrated 7 segments decoder)

The first four digits can be compiled with these two arrays. The fifth digit is explained in the very first log: Decoding the extra digit

    Value:
    0
    1    Y
    2    W + Z
    3    Y + Z
    4    X + Y
    5    X + Z
    6    W + X + Z

    Intermediate coding:
    W = F1, ph=1 (phase 2)
    X = F2, ph=1 (phase 2)
    Y = F1, ph=0 (phase 1)
    Z = F2, ph=0 (phase 1)

Knowing (from above) the values of F1, F2 and the phases, it's easy to make the values by hand.

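    Y = 1 << /*F1 =*/ 1;
    Z = 1 << /*F2 =*/ 0;
    W = 1 << /*F1+16 =*/ 17;
    X = 1 << /*F2+16 =*/ 16;

    // The fifth digit comes after the 4×16 entries of the first
    // four digits, so its value v is stored at index 64+v
    // (lower indices get overwritten by the loop that follows).
    SegmentLUT={
      65:     Y    , // 1
      66: W     + Z, // 2
      67:     Y + Z, // 3
      68: X + Y    , // 4
      69: X     + Z, // 5
      70: W + X + Z  // 6
    };

Now, a simple triple-nested loop combines the segments and the digits.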

    var k=0;
    for (var j=0; j<4; j++) {    // iterate the digits
      for (var i=0; i<16; i++) { // iterate the values
        var n=0;
        // iterate the segments
        for (var l=0; l< Numbers[i].length; l++)
          n |= 1 << DigitPins[j][Numbers[i][l]];
        // save the accumulated value
        SegmentLUT[k++]=n;
      }
    }
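As a sanity check, one or two entries can be worked out by hand. The sketch below only uses the first row of DigitPins (digit 0) and a made-up helper called `pattern`. It also shows a JavaScript detail worth knowing before reading the dumps: segment A of digit 0 sits on bit 31, so any pattern that lights it is a negative number until it is viewed through `>>> 0`.

```javascript
// Digit 0, segments A..G, with the +16 offsets already added
const DigitPins0 = [31, 15, 7, 23, 29, 21, 13];

// Hypothetical helper: OR together one bit per active segment
function pattern(pins, segments) {
  let n = 0;
  for (const s of segments)
    n |= 1 << pins[s];
  return n;
}

const one = pattern(DigitPins0, [1, 2]);      // "1" = B + C -> bits 15 and 7
const seven = pattern(DigitPins0, [0, 1, 2]); // "7" = A + B + C -> adds bit 31

console.log(one.toString(16));           // 8080
console.log(seven < 0);                  // true: bit 31 is the sign bit
console.log((seven >>> 0).toString(16)); // 80008080
```

The sign does not matter for the OR and AND operations used here, it only changes how the number is printed.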

You can check the result with the following code: function toBin(n) {

var m="", c;

for (var i=0; i<32; i++) {

c=" ";

if (n & 1)

c="#";

m=c+m;

n=n>>>1;

}

return m;

}

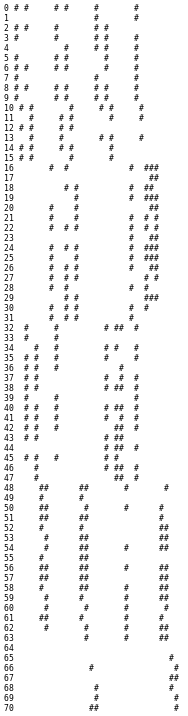

for (var i=0; i<=70; i++)

msg+=i+" "+toBin(SegmentLUT[i])+"\n";This shoudl give you something like that:

From there, things become pretty easy :-)

For example, we have the values of all the numbers at every position so we can create the initial value of 0000

    var AllZero=
       SegmentLUT[0]
      |SegmentLUT[16]
      |SegmentLUT[32]
      |SegmentLUT[48];

Note that most segments (30 out of 35) are turned on so the power consumption is close to maximal. Maybe zero-extension is not such a good idea after all but we'll see in practice...

Now we can return to the leaf call of the recursive function. Here is what must be done:

- unconditionally remove the previous digit from zero_ext (clear it by ANDing with the inverted pattern of 8, which covers all seven segments)

- lookup the new pattern from SegmentLUT and add it to zero_ext

- output the result

    var n=zero_ext;
    do {
      d=(logic % base)|0;
      logic= (logic/base)|0;
      n = (n & ~SegmentLUT[i+8]) // clear all the segments of this digit
          | SegmentLUT[i+d];     // then set the new pattern
      i+=16;
    } while (logic > 0);
    output(n);

I have suffered a few lame inattention bugs (thanks to JS's weak checking) but the whole program works like a charm and must now be ported to C.
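For reference, the pieces of this log can be put together in one self-contained sketch. It is not the exact program: the function name `encode`, the parameter `zeroExt` and returning the word instead of calling output() are choices made for the example, and the fifth-digit patterns are assumed to sit at index 64 + value, which is where the loop looks for them once i has reached 64.

```javascript
const DigitPins = [
  [31, 15,  7, 23, 29, 21, 13],
  [ 9,  5,  4,  6, 22, 25, 20],
  [ 8, 24, 30, 14, 12, 11, 28],
  [18, 27, 19, 26, 10,  2,  3]
];
const Numbers = [
  [0,1,2,3,4,5], [1,2], [0,1,3,4,6], [0,1,2,3,6],
  [1,2,5,6], [0,2,3,5,6], [0,2,3,4,5,6], [0,1,2],
  [0,1,2,3,4,5,6], [0,1,2,3,5,6], [0,1,2,4,5,6], [2,3,4,5,6],
  [0,3,4,5], [1,2,3,4,6], [0,3,4,5,6], [0,4,5,6]
];

// Entries 0..63: four digits times sixteen values
const SegmentLUT = [];
for (let j = 0; j < 4; j++)
  for (let i = 0; i < 16; i++) {
    let n = 0;
    for (const s of Numbers[i]) n |= 1 << DigitPins[j][s];
    SegmentLUT.push(n);
  }

// Entries 65..70: the extra digit (assumed layout, see above)
const Y = 1 << 1, Z = 1 << 0, W = 1 << 17, X = 1 << 16;
SegmentLUT[65] = Y;     SegmentLUT[66] = W | Z;
SegmentLUT[67] = Y | Z; SegmentLUT[68] = X | Y;
SegmentLUT[69] = X | Z; SegmentLUT[70] = W | X | Z;

const AllZero = SegmentLUT[0] | SegmentLUT[16] | SegmentLUT[32] | SegmentLUT[48];

// zeroExt is 0 (blank leading digits) or AllZero (leading zeros)
function encode(logic, base, zeroExt) {
  let n = zeroExt, i = 0;
  do {
    const d = logic % base;
    logic = (logic / base) | 0;
    // For the fifth digit SegmentLUT[i + 8] is undefined and
    // ~undefined is -1, so the mask leaves n unchanged.
    n = (n & ~SegmentLUT[i + 8]) | SegmentLUT[i + d];
    i += 16;
  } while (logic > 0);
  return n;
}

console.log((encode(1234, 10, 0) >>> 0).toString(2).padStart(32, "0"));
```

Because each digit owns its own set of bits, the word for a number is simply the OR of the patterns of its digits, which makes the function easy to check.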

Yann Guidon / YGDES
