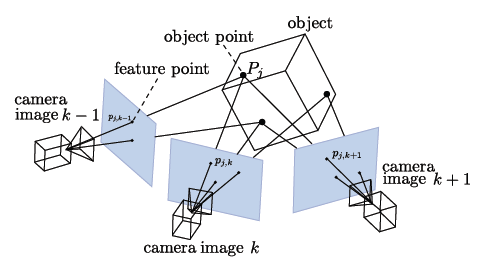

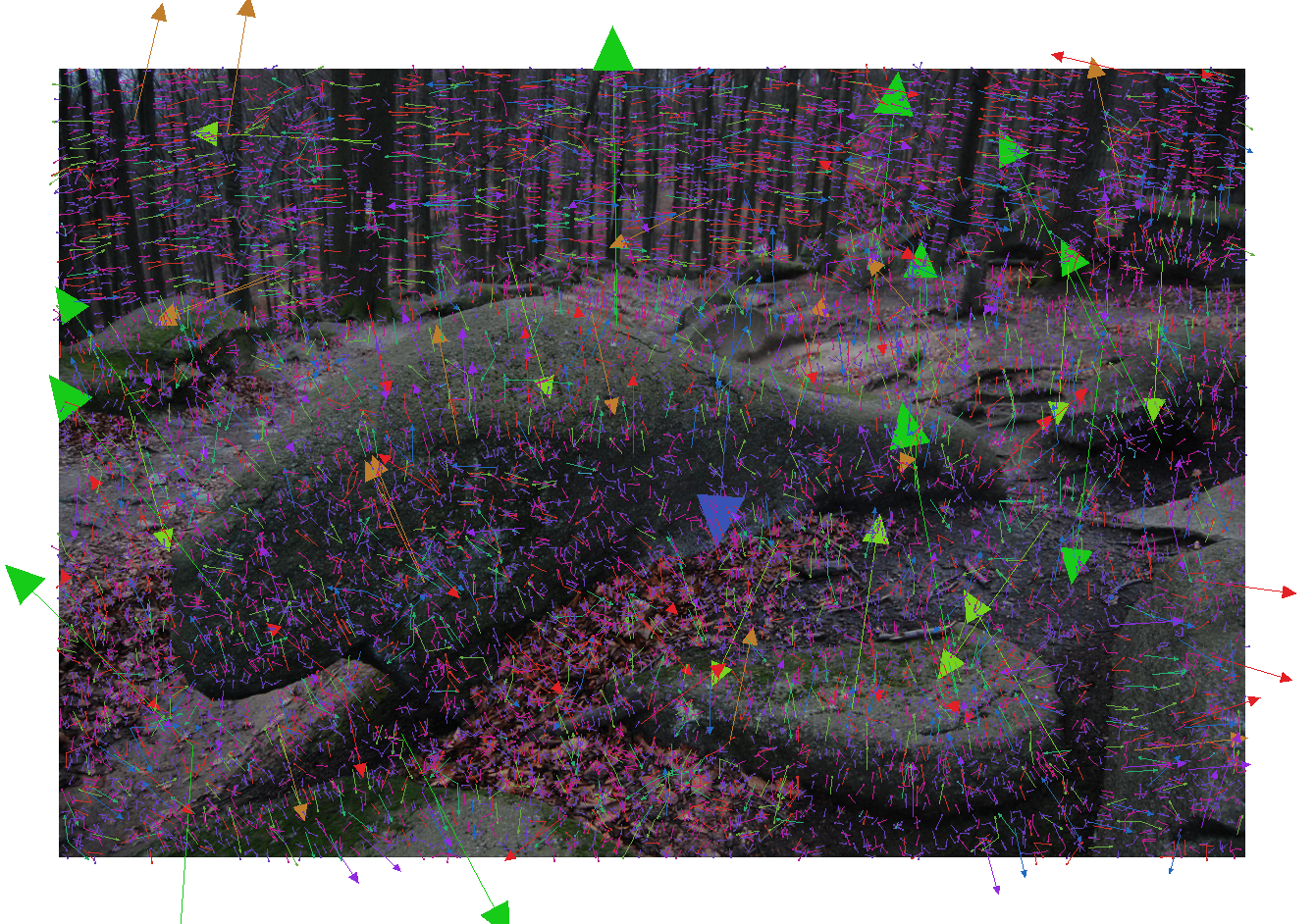

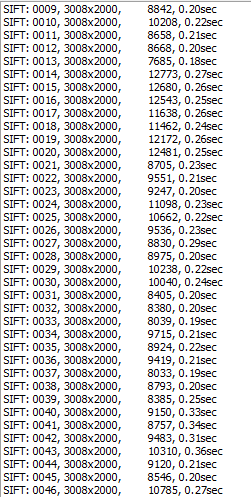

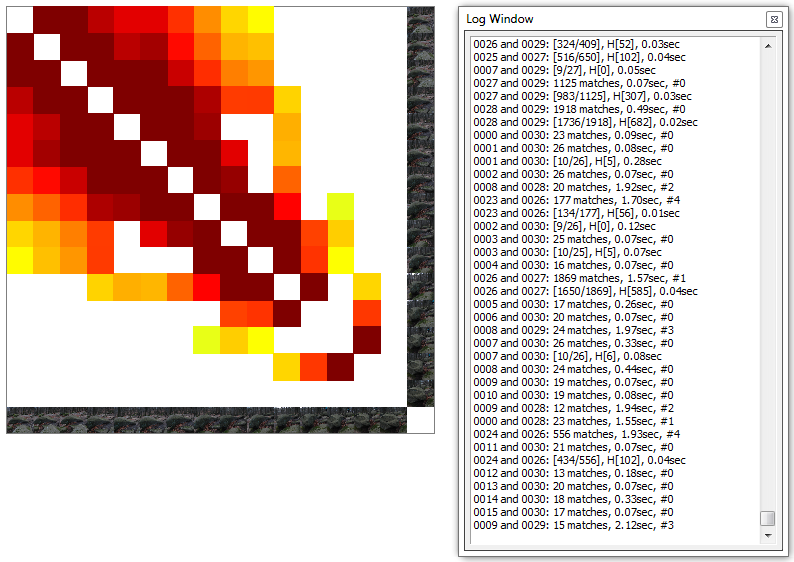

This project covers the practical use of photogrammetry and does not explain the underlying algorithms. Background information is given where needed.

Photogrammetry 3D Scanning

This project is a brief tutorial that goes from acquiring multiple images of a real-world object to a finished 3D print of it.


master-puller

B K

Joan Horvath

TheMixedSignal

Hi, do you know how I might make a cheap LiDAR-type scanner to scan the ground, maybe by plugging a laser device into my phone? I am not looking for high resolution, just something I can tie in with the GPS on my phone to scan terrain and objects at a basic level. If I can do this, I might move on to a next stage of object recognition.