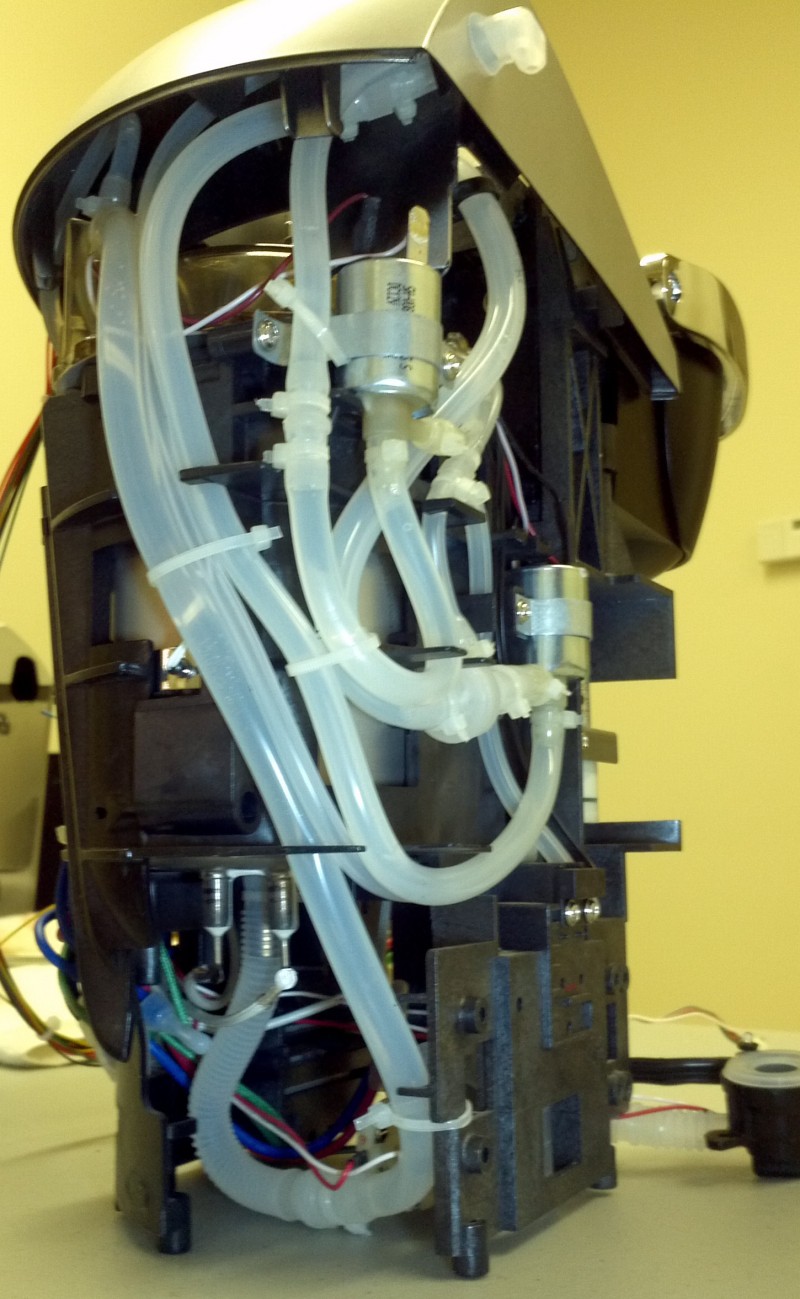

The system can be asked questions, told to remember things, made to serve information. Complex sequences of physical events can be arranged to occur without human interaction, or in response to human interaction. It makes coffee, and will deliver it. It will collect trash. It shows you the status of the stock market, or of the weather, or of a custom dataset. It can cleanly stop a machine in an emergency. It unlocks a chemical cabinet for an adult, but not for a child. It keeps the human safe, preserves data, and preserves itself to keep maintenance minimal and unobtrusive. It does not become obsolete in less than a year. It will do whatever it is enabled to do.

Almost every component in the system is optional, which makes it highly tolerant to failure. The presence or absence of devices, each with a small number of capabilities, determines the capabilities of the system as a whole.

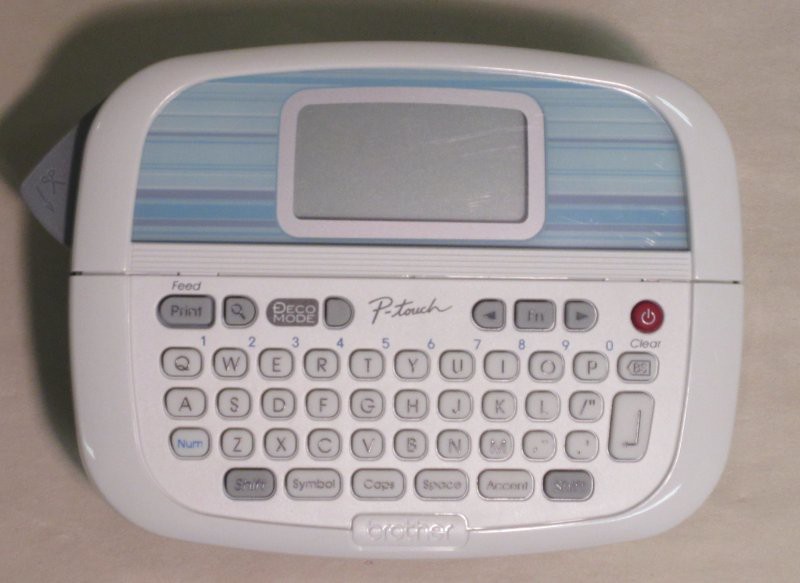

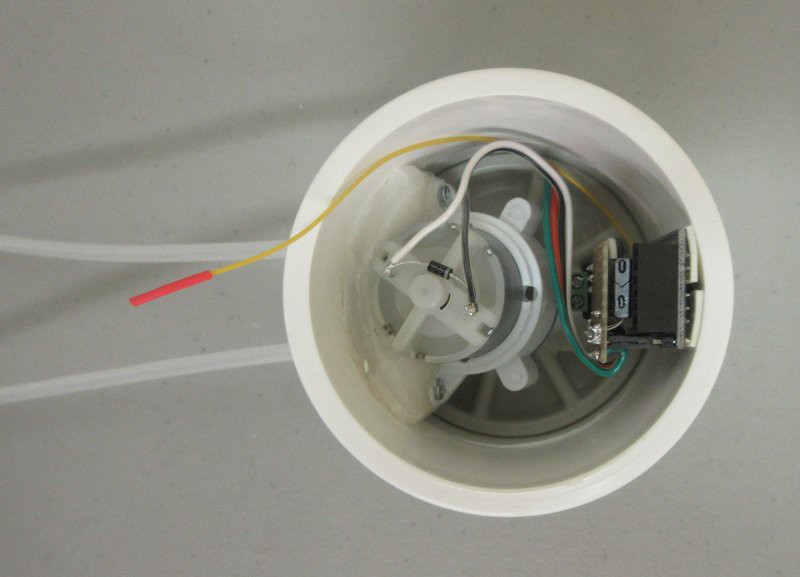

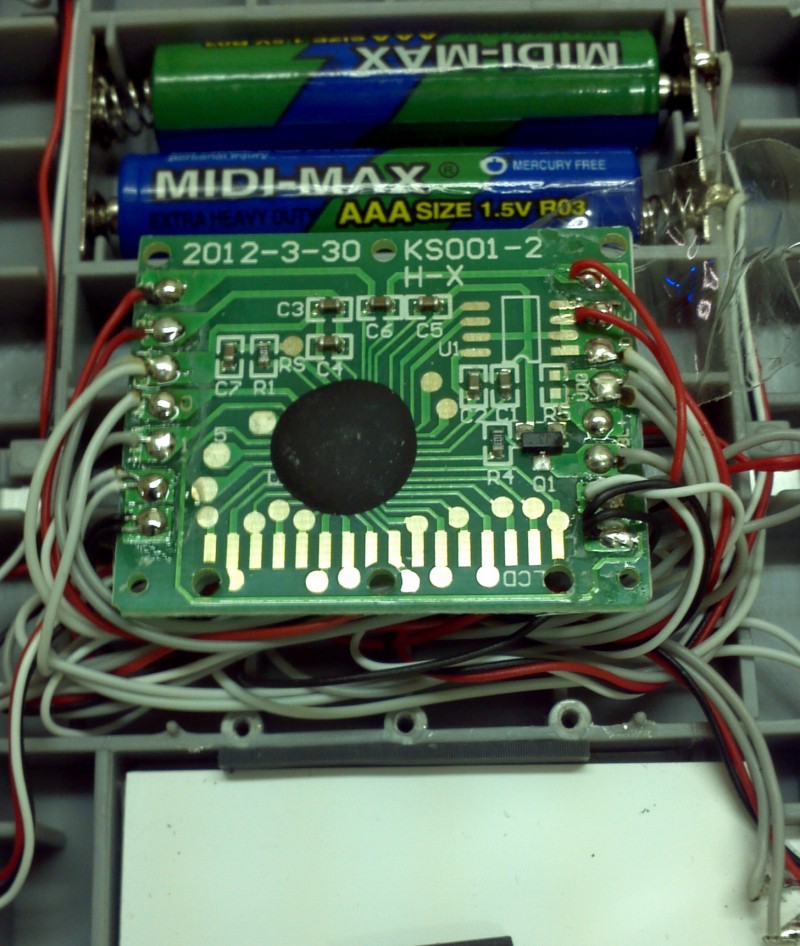

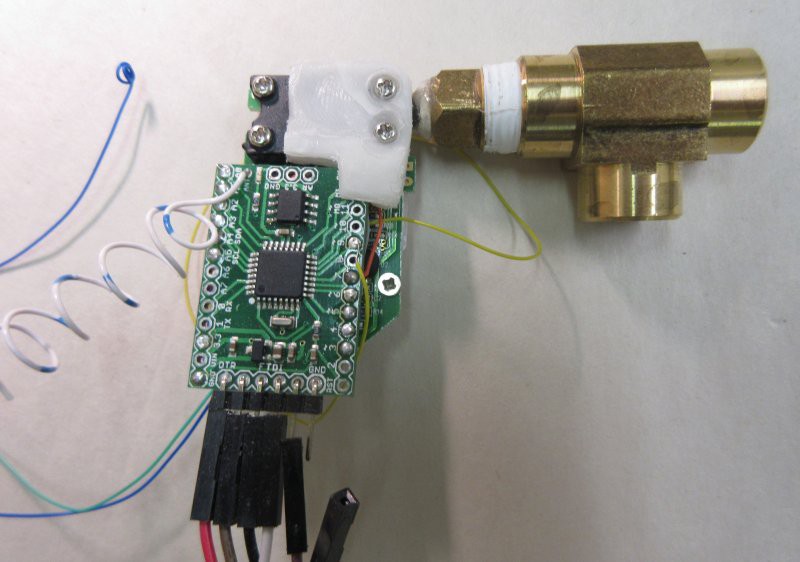

I call my reactive machine units "Reactrons". Each device has a fairly simple interface: it lists the small set of abilities it has and exposes control points for executing them. The devices fall into a handful of groups:

- Integrons: human interaction nodes

- Recognizers: human detection and identification nodes

- Collectors: data acquisition and transmission nodes

- Transporters: movement of material or data

- Processors: conversion of material or data

- Energizers: control of power
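As a rough illustration of that interface, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Reactron` class, the `Group` enum, the `system_capabilities` helper, and the example device names are hypothetical and are not taken from the project's actual firmware or protocol. It only shows the idea that each node lists a few abilities with control points, and that the system's capabilities are whatever the nodes currently present can do.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Set


class Group(Enum):
    """The six device groups named in the text."""
    INTEGRON = "human interaction"
    RECOGNIZER = "human detection and identification"
    COLLECTOR = "data acquisition and transmission"
    TRANSPORTER = "movement of material or data"
    PROCESSOR = "conversion of material or data"
    ENERGIZER = "control of power"


@dataclass
class Reactron:
    """Hypothetical node: a name, a group, and a small set of abilities."""
    name: str
    group: Group
    # Maps an ability name to its control point (a callable that runs it).
    abilities: Dict[str, Callable[[], str]] = field(default_factory=dict)

    def list_abilities(self) -> List[str]:
        """Report what this node can do."""
        return sorted(self.abilities)

    def execute(self, ability: str) -> str:
        """Trigger one ability through its control point."""
        if ability not in self.abilities:
            raise KeyError(f"{self.name} cannot {ability!r}")
        return self.abilities[ability]()


def system_capabilities(nodes: List[Reactron]) -> Set[str]:
    """The system can do whatever the nodes that are present can do."""
    caps: Set[str] = set()
    for node in nodes:
        caps.update(node.abilities)
    return caps


# Illustrative devices only; the names are made up for this sketch.
coffee = Reactron("coffee-maker", Group.PROCESSOR, {"brew": lambda: "brewing"})
cart = Reactron("cart", Group.TRANSPORTER, {"deliver": lambda: "delivering"})
```

Removing `cart` from the list passed to `system_capabilities` simply drops `deliver` from the result. Nothing else breaks, which is the sense in which every component is optional.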

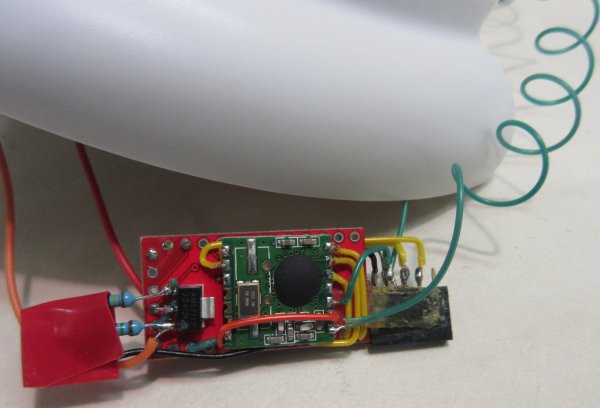

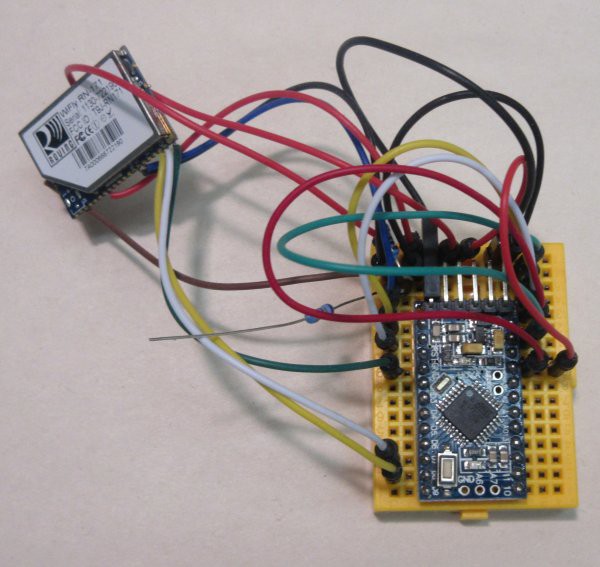

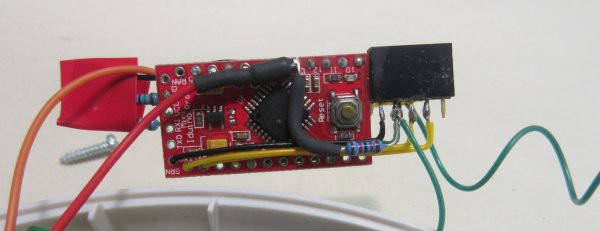

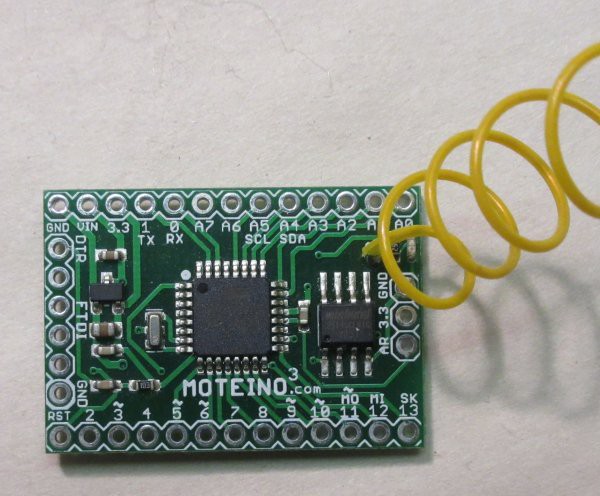

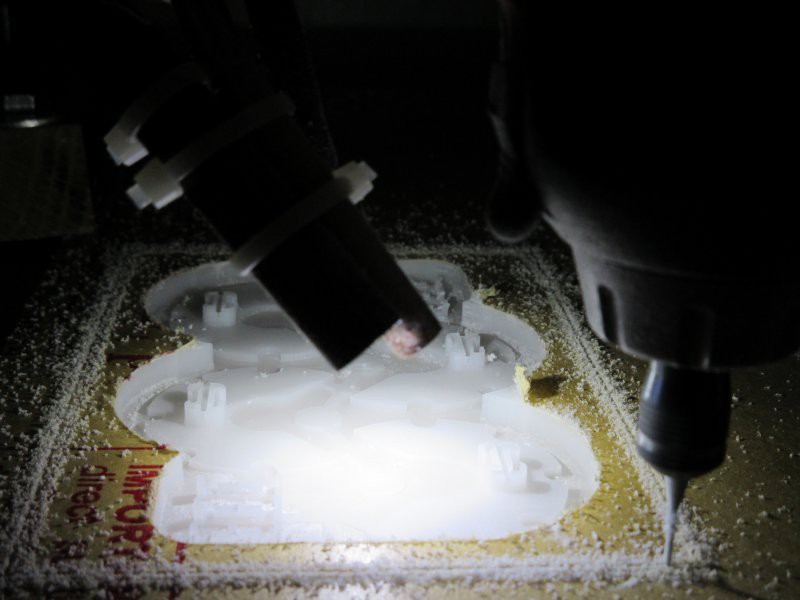

This project introduces Integrons and Recognizers as separate, discrete machines. The others are essentially familiar hardware with a small control board added so that they can interface with the system.

What this system does:

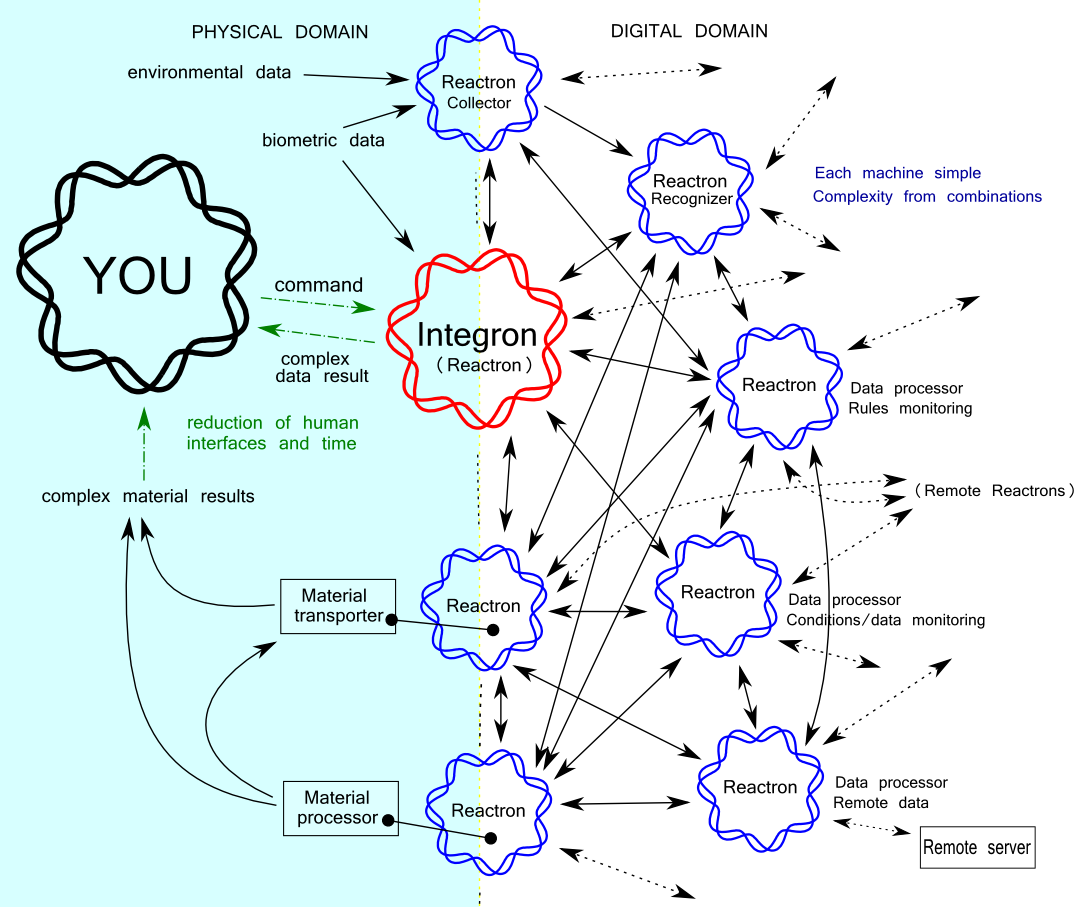

The main idea is to reduce the complexity and increase the number of machine nodes that are constantly on and around us. Here is a system diagram of the network. Note that it shows only exemplars; many more units can exist beyond what is drawn. The only unique and non-optional thing is YOU, and your time and experience, and that is the whole point.

The nodes detect our position and act asynchronously so that our needs are anticipated most of the time. Verbal commands and line-of-sight status indicators give a way to interact with the "culture" of nodes, but passive interaction is preferred. In order of priority:

- 1) Stuff happens based on rules you set up, so that things are ready for you when you need them: sensors detect your presence and wait for certain conditions to occur.

- 2) Status of whatever dataset you like can be seen passively, from a distance, via lights. (...and sound if the system needs to alert you of something you defined.)

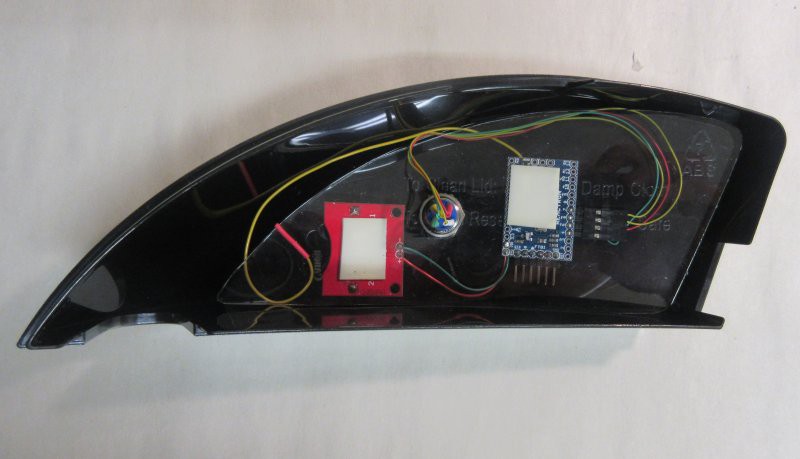

- 3) You can interact verbally from a distance with the Integrons (which then query the full network for the actual answer or status).

- 4) You can interact up close with the Integrons via screen and by gesture.

It is my hope that most of the interaction is #1 and #2, allowing you to move through your life without actively interacting with the machines most of the time. This is analogous to the way the doors just open for Maxwell Smart (https://www.youtube.com/watch?v=sWEvp217Tzw) without him breaking stride, only with more complex results than opening doors.
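To make priority #1 a little more concrete, here is a minimal sketch of rule-based triggering. It is an assumption-laden illustration, not the project's implementation: the `Rule` class, the `evaluate` function, the state keys (`presence`, `hour`, `identity`), and the two example rules are all hypothetical. The idea is only that sensors report a state, and any rule whose condition holds fires its action without the human asking.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical snapshot of what the sensors currently report.
State = Dict[str, Any]


@dataclass
class Rule:
    """A user-defined rule: when the condition holds, run the action."""
    name: str
    condition: Callable[[State], bool]
    action: Callable[[], str]


def evaluate(rules: List[Rule], state: State) -> List[str]:
    """Run the action of every rule whose condition holds for this state."""
    return [rule.action() for rule in rules if rule.condition(state)]


# Example rules; the keys and values are made up for this sketch.
rules = [
    Rule(
        "morning coffee",
        # A missing hour defaults to 24, so the rule does not fire.
        lambda s: s.get("presence") == "kitchen" and s.get("hour", 24) < 9,
        lambda: "brew coffee",
    ),
    Rule(
        "chemical cabinet",
        # Unlock only when a Recognizer has identified an adult.
        lambda s: s.get("presence") == "lab" and s.get("identity") == "adult",
        lambda: "unlock cabinet",
    ),
]
```

Calling `evaluate(rules, {"presence": "lab", "identity": "child"})` fires nothing, so the cabinet stays locked. The same call with `"identity": "adult"` fires the unlock action.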

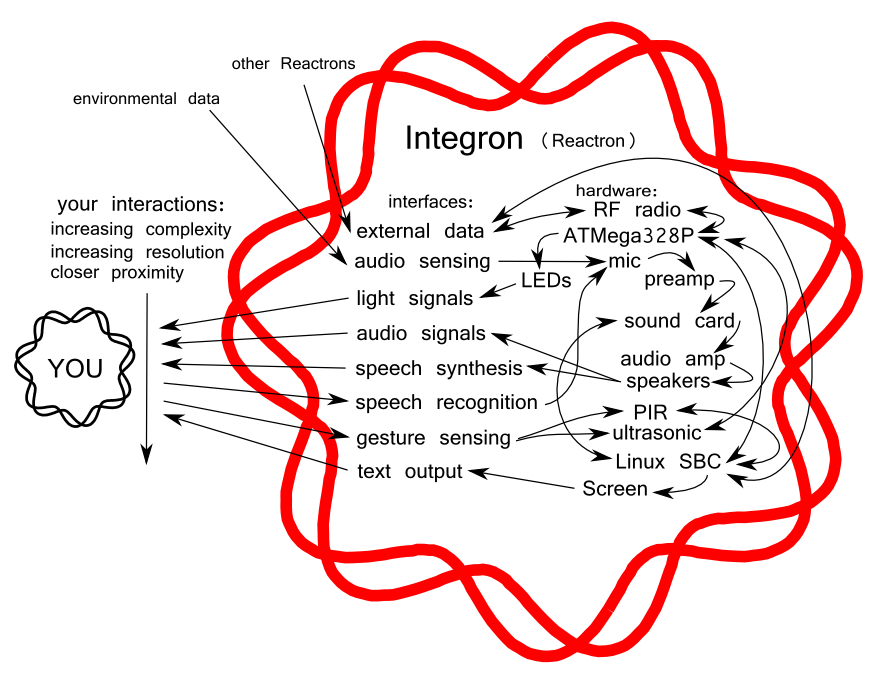

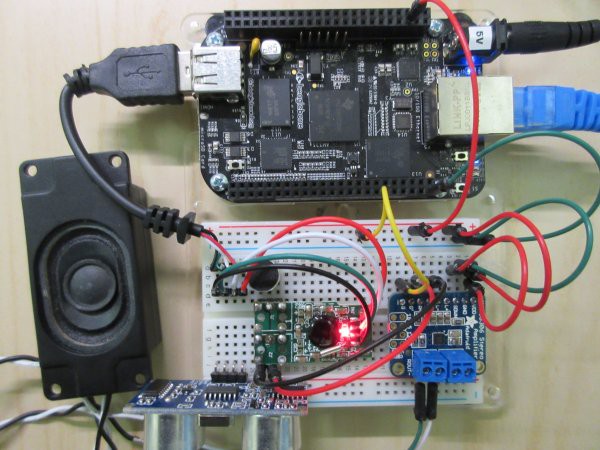

Here is a system diagram of the Integron unit itself, showing the internal components and how they are expected to interact. The only task of this unit is human integration with the network of nodes, as described above. This post describes what is currently working and what remains as of August 20th, 2014.

The simplicity of each node is a huge factor, increasing MTBF for every node and keeping costs low. Simple machines just work better and last longer. And you can have multiples, so that when one breaks another steps in. This allows you to remove maintenance tasks from the critical path of your human activity. Save them up for the chore weekend, or delegate them. The simpler a machine is, the higher the chances are that one can build a machine to do the maintenance. At critical mass, we have...

Kenji Larsen

Azdle

ehughes

Valentin

On a side note, I've been playing around with junk cell phones recently, buying old Android phones on eBay to experiment with. Currently I unlock them and try all kinds of interfaces to various game controllers to play MAME-style retro games. Why am I mentioning this? I realized it is sort of pointless to buy boards like the RasPi or BeagleBone Black when I can buy 2- or 4-processor Android juggernauts for $20-$40 a pop. And they come with high-resolution capacitive touch screens and built-in batteries (a built-in UPS!), GPS, Wi-Fi, and so on. Repurposing great electronics that would otherwise end up in a junk pile seems like a good idea, and they work really well. The only hurdle is I/O. That's easily taken care of with devices based on Arduino or anything else that has a USB, Wi-Fi, or Bluetooth interface.