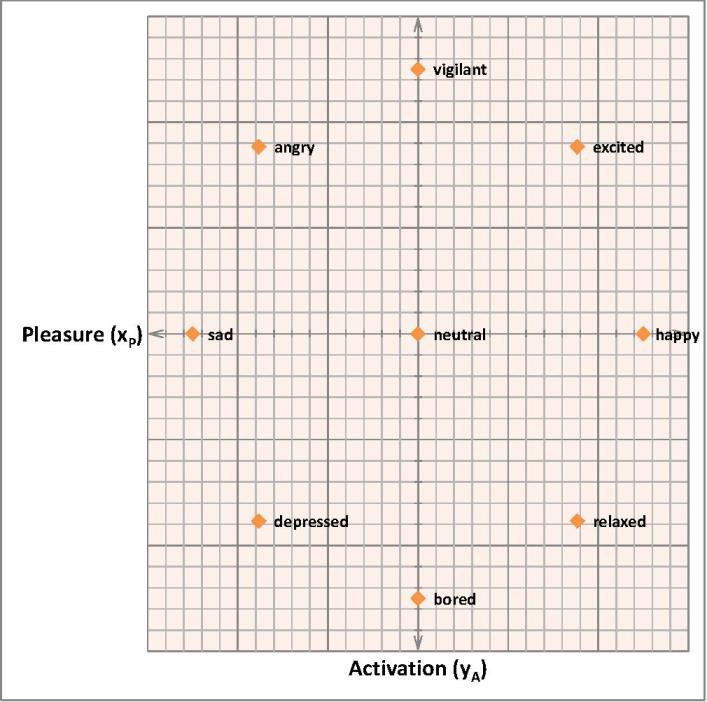

This log starts with the so-called circumplex model of affect, shown above in a redrawn and simplified form.

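You might notice that this model immediately suggests the KNN (k-nearest neighbours) algorithm. More formally: let the sample space be the 2-dimensional Euclidean space $\mathbb{R}^2$ with center $(0, 0)$ and radius $r \in \mathbb{R}$, $r > 0$. Then our classes are located at

$$(0, 0)$$

and

$$\left(r\cos\frac{k\pi}{4},\ r\sin\frac{k\pi}{4}\right), \quad k = 0, 1, \dots, 7.$$

Going counterclockwise from $k = 0$, these are happy, excited, vigilant, angry, sad, depressed, bored and relaxed, with neutral at the center.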

A remark about pleasure and activation before we continue: activation could be seen as a kind of entropy of the robot in a physical sense, depending, for instance, on the ambient light level and temperature. Pleasure could be seen as the success rate of a task the robot has to fulfill or learn.

The distance between two points $p = (p_1, p_2)$ and $q = (q_1, q_2)$ in 2-dimensional Euclidean space is given by

$$d(p, q) = \sqrt{(q_1 - p_1)^2 + (q_2 - p_2)^2},$$

which follows directly from the Pythagorean theorem.

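With the defined boundaries (sample points are clamped to the square $[-r, r]^2$), the maximum distance between a class point and a new sample point occurs between a corner of the square, e.g. $(r, r)$, and the opposite diagonal class point $(-r/\sqrt{2}, -r/\sqrt{2})$:

$$d_{\max} = \sqrt{2}\left(r + \frac{r}{\sqrt{2}}\right) = (1 + \sqrt{2})\,r.$$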

Here is a little sketch I wrote to demonstrate how the emotional agent works:

```cpp
const float r = 5.0; // radius of the emotion circle

void Emotional_agent(float x_P, float y_A); // prototype (the Arduino IDE also generates this automatically)

void setup() {
  Serial.begin(9600);
  Emotional_agent(5, 0); // pleasure = 5, activation = 0, prints "happy"
}

void loop() {
}

// Classify a (pleasure, activation) sample by its nearest emotion class point
void Emotional_agent(float x_P, float y_A) {
  // limit the range of x_P and y_A to [-r, r]
  if (x_P < -r) x_P = -r;
  else if (x_P > r) x_P = r;
  if (y_A < -r) y_A = -r;
  else if (y_A > r) y_A = r;

  const float d = r / sqrt(2.0); // coordinate of the diagonal class points

  float emotion_coordinates[2][9] = {
    {0.0, 0.0, r, -r, 0.0, d, -d, d, -d}, // x-coordinates of training samples
    {0.0, r, 0.0, 0.0, -r, d, d, -d, -d}  // y-coordinates of training samples
  };

  const char *emotions[] = {"neutral", "vigilant", "happy", "sad", "bored", "excited", "angry", "relaxed", "depressed"};

  byte closestEmotion = 0;
  float MinDiff = sqrt(2.0) * r + r; // start with the maximum distance that can occur

  for (byte i_emotion = 0; i_emotion < 9; i_emotion++) {
    // Euclidean distance between the sample and class point i_emotion
    float dx = emotion_coordinates[0][i_emotion] - x_P;
    float dy = emotion_coordinates[1][i_emotion] - y_A;
    float euclidean_distance = sqrt(dx * dx + dy * dy);

    // keep the closest class point found so far
    if (euclidean_distance < MinDiff) {
      MinDiff = euclidean_distance;
      closestEmotion = i_emotion;
    }
  }

  Serial.println(emotions[closestEmotion]);
  Serial.println("");
}
```

M. Bindhammer


Discussions


As far as I know, the famous emotional robot 'Kismet' used a similar matrix. The robot can only express the emotions defined in the matrix, using the KNN algorithm to find the closest match. I once wrote a small simulation in which brightness and sound were used to manipulate the emotions of a virtual robot, see https://www.youtube.com/watch?v=e37p30Sybg8


I am excited about this emotion matrix. I hope you will later write more about how a robot can express, for example, an emotion at (0.5r, 0.5r).

What do you think about making the default/basic emotion somewhere around (0.7r, 0.3r)? I would prefer a robot that is more likely to be happy than neutral. :)
