I live in neighborhood with a lot of squirrels living in the old oak trees. I like the squirrels but I hate when they eat my heirloom tomatoes. I also don’t like the fact they eat all the nectarines off my tree before they are ripe. I don’t mind so much they eat the apples and pomegranates as we usually have enough to share.

I don’t want to kill the squirrels, but I do want to figure out a way to keep them out of my tomatoes. I’m thinking of building an automatic sentry gun that shoots airsoft bb pellets at the squirrels. An automatic sentry gun is a gun that is mounted so it can rotate in the horizontal (pan) and vertical (tilt) axis. Mine would be computer controlled using a microcontroller (arduino) and two or three servos.

There was a site https://github.com/sentryGun53/Project-Sentry-Gun that had sentry gun that fired on movement. I want to do one better. I want to only shoot at squirrels. Not people, birds, or cats. In fact I want to make the gun visibly safe when a person is in the area by lowering a solid piece of plastic in front of the muzzle when it detects a person.

Phase 1 of this project is to investigate how to detect squirrels with machine learning. Phase 2 is to build a sentry gun that only targets squirrels (Squirrel Season!). There might be a Phase 3 where I mount a camera and an airsoft gun on a drone (Skynet for Squirrels?)

Phase 1 is essentially complete. Phase 2 hasn’t started yet. Here’s what I’ve learned from Phase 1.

Update 6/4/2018: I'm revamping what I did with Phase 1 to use a Jevois camera system. http://jevois.org/ The cam runs linux and has Darknet/Yolo preloaded. My thought is I can use it plus the RaspberryPi and won't need an additional laptop. I have written a python script that can get the results of the detection (what type and the coords) from the Jevois camera on the Pi. That all works great with the standard Yolo network it ships with.

Next, I put on the custom network that I made as part of Phase 1 and run into some roadblocks. The current version has YoloV2 so I have been retraining my network to use a Yolov2 compatible network. I'm in the middle of figuring out why that doesn't work right. When I have that sorted, I'll post the code and do a proper writeup.

Update: 6/30/2018: I'm still working to figure out how to get the cam to reliably detect the objects I want. I've trained a network based on Yolov2-tiny-voc. It reliably detects people and squirrels on my laptop but not on the Jevois. I suspect it's because the systems are running different versions of Yolo but I'm not sure if it's something else. I'll pretty happy with my python code that runs on either my windows PC or my Raspberry PI 2B and runs the cam. I'll post that at the bottom of this writeup.

Update 7/31/2018: I have the camera working with Yolov3 with the python code running on a Raspberry Pi 3. I've done multiple attempts at training the network but I have not succeed in detecting a squirrel in a live feed. I saw one sitting in the camera range yesterday and it didn't recognize it. I've tried turning down the threshold until it starts recognizing too many bogus things. I have one more thing to try (upping the network resolution per https://github.com/AlexeyAB/darknet/blob/master/README.md#how-to-improve-object-detection) and if that doesn't work, I'm going to capture a boatload of pictures off the camera directly and train from that. I've written a python script to do it and we'll see how that goes. By the way, I've started posting the code on my Github site: https://github.com/PeterQuinn925

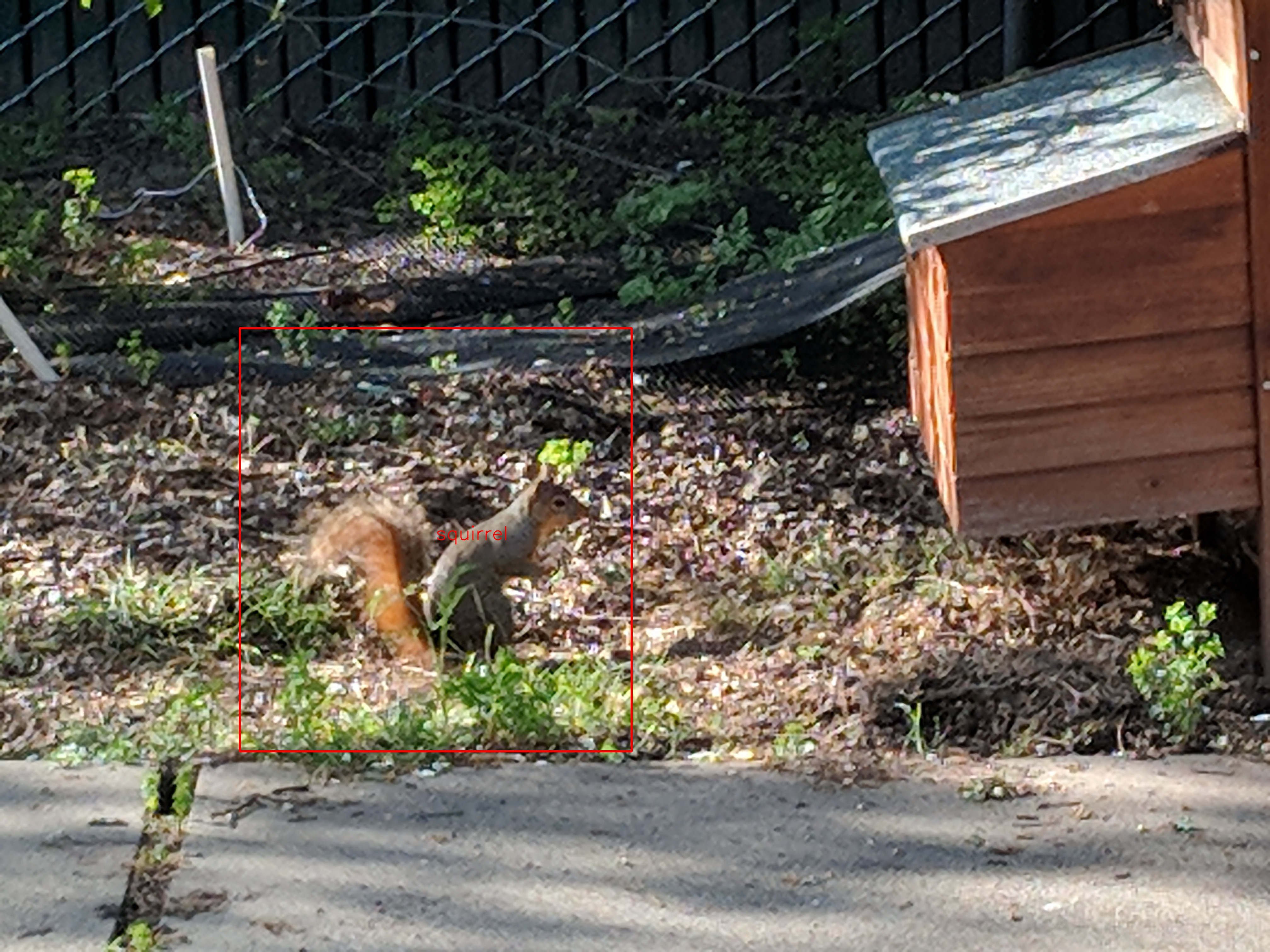

Update 9/5/2018: Success detecting squirrels! I'm not quite getting it live, but I am getting it through the Jevois camera. I have it taking shots when there's movement. With the background being trees, it just takes a tiny bit of wind to trigger a shot. I've been paging through thousands of photos and pulling out the few with squirrels. I've been using these to retrain Yolo and I'm starting to see results. The squirrels are quite a bit smaller and lower resolution than the ones I previously trained on.

I'm currently capturing the photos with the Jevois camera hooked to a raspi 3. All the raspi is doing is waiting for the Jevois to tell it that the view changed and it stores the photo. (cv_diff_headless.py). I am sharing the directory that the files are stuffed in with a linux laptop that's running Darknet. It's way slower than real time and that's something that I need to look at improving next. As mentioned above, all my code is now on Github here: https://github.com/PeterQuinn925/Squirrel

Performance & Architecture

I first tried doing everything on a Raspberry Pi 3. The performance is not good enough for machine learning. It is good enough to run a camera and send Jpegs when the scene changes to another machine to do the squirrel identification. I will be looking into using an Intel Movidius Neural Compute Stick in the future to see if I can do it all on a Raspberry Pi.

The final architecture for Phase 1 is to use a Raspberry Pi 3 with a standard USB webcam to take the pictures. The Pi is running a Python script that compares consecutive images from the video stream and save images that show changes as a JPEG using OpenCV. I used an old spare laptop to detect the relevant objects using YOLO.

The old laptop doesn’t have decent graphics card. With just the CPU it can detect objects in images in about 20 seconds. By comparison, my main laptop with an NVIDIA graphics card can detect objects in live video.

I obviously need to get to several frames per second performance if I want to implement the sentry gun in Phase 2. Worst case, I can dedicate a laptop with a graphics card to the task.

Image Capture

I am using a Raspberry Pi to capture the video. I initially bought the Raspberry Pi camera module. When it arrived, it didn’t work. I tried a bunch of things and then gave up on it. I bought a cheap ($5) USB webcam off of Amazon to use instead. It’s a 640x480 camera that isn’t very good, but good enough for now.

I installed OpenCV and numpy and wrote a script to capture the images. Since we’re really constrained on processing the images, I only want to send changed images to be checked for squirrels. I used the simplest way to check for movement - subtracted a BW image of the video stream with the previous BW image. The number of white pixels in the remaining image give you how much the image has changed. The camera itself is kind of noisy - in a room in my house, I was seeing 100,000 (out of 300,000) pixels change with no actual change in the scene.

Outside, I was seeing 150,000 pixels change without real movement - due to wind, shadows, etc. I will get a better camera soon and see if that makes an improvement.

Here’s the code:

#!/usr/bin/python3

import cv2

from datetime import datetime

import time

def diffImg(t0, t1, t2):

d1 = cv2.absdiff(t2, t1)

d2 = cv2.absdiff(t1, t0)

return cv2.bitwise_and(d1, d2)

cam = cv2.VideoCapture(0)

x = 150000 #threshhold for image difference to prevent false positives

jpg_limiter = 3 #only save one in n images

#winName = "Movement Indicator"

#cv2.namedWindow(winName, cv2.WINDOW_NORMAL) #commented out for headless

#cv2.resizeWindow(winName, 640, 480) #commented out for headless

s, img = cam.read()

#cv2.imshow( winName, img ) #commented out for headless

time.sleep(30) #give the camera a chance to stabilize

# Read three images first:

t_minus = cv2.cvtColor(cam.read()[1], cv2.COLOR_RGB2GRAY)

t = cv2.cvtColor(cam.read()[1], cv2.COLOR_RGB2GRAY)

t_plus = cv2.cvtColor(cam.read()[1], cv2.COLOR_RGB2GRAY)

i=0 # index to limit number of jpgs

while True:

s, img = cam.read() #not used for diff, needed for view at time of motion

#cv2.imshow( winName, img ) #commented out for headless

dimg=diffImg(t_minus, t, t_plus)

#print (cv2.countNonZero(dimg)) #if you need to tweak x, uncomment this

if cv2.countNonZero(dimg) > x:

if i>jpg_limiter:

imagefile = "//home//pi//images//P" + datetime.now().strftime('%Y%m%d_%Hh%Mm%Ss%f') + '.jpg'

cv2.imwrite(imagefile, img)

i=0

print (imagefile)

print (cv2.countNonZero(dimg))

i=i+1

# Read next image

t_minus = t

t = t_plus

t_plus = cv2.cvtColor(cam.read()[1], cv2.COLOR_RGB2GRAY)

key = cv2.waitKey(10)

if key == 27:

# cv2.destroyWindow(winName) #commented out for headless

break

print ("Goodbye")

To share the resulting Jpgs with the laptop, I installed NFS and mounted the Pi’s image directory on the laptop. I also made the script executable and called it from /etc/rc.local (see https://raspberrypi.stackexchange.com/questions/4123/running-a-python-script-at-startup)

It now operates fine as a headless photo server and I can use PuTTY to log into it if I need to.

Darknet/YOLO Install

I started by trying installing Darknet on a spare laptop running Linux (Ubuntu). It was straightforward to get it to run with the sample data. It takes about 20 seconds to detect a photo. I find it pretty amazing that this technology even exists. It would have been an impossible problem a decade ago.

I made an attempt to train on a subset of the COCO data just to get an idea of how to train the network. It was impossibly slow without a GPU. I never got usable data by training on this machine. I know now that it would have taken weeks of running to generate useful weights.

I have a low end NVIDIA graphics card in my main laptop (GeForce GTX 960M with 4GB). Since I had been doing all my work on Linux, I made an ill-fated attempt to install Darknet on a VirtualBox VM. Trying to get the NVIDIA drivers to work with the VM was a wasted effort. The performance with just a CPU in the VM was about the same as what I was getting with the original laptop, so nothing gained there.

The third attempt was using Windows on my main laptop for training. I was able to install the free community version of MS Visual Studio and used AlexeyB’s fork. It took several tries to be able to compile Darknet, mostly due to my clumsiness with VS. His instructions are quite good.

The biggest problem was pathing to the prerequisites: OpenCV, NVIDIA CUDA and CUDNN. You have to use OpenCV 3.4.0 not 3.4.1 or it won’t compile. I had some trouble finding and downloading the CUDNN files - I had to register as a developer on NVIDIA’s site and it didn’t work with Chrome. I was able to create an account and download the files using MS Edge.

Once I got all of this straight it was straightforward to compile. AlexeyB says to use VS2015, but I couldn’t get it. I was able to use VS2017, but did not let VS try to upgrade the project when I was prompted on first opening.

Running with a GPU makes a huge difference in performance for both detection and training. For detection, I was able to get nearly real time video detection and training was easily 10x faster.

YOLO Training Prep

For my purposes, I wanted to detect 4 classes of object - squirrels, people, cats, and birds. I want to eventually/maybe shoot at the squirrels but for sure not shoot at people, cats and birds.

I started with the VOC2007 images. You can find them on the main Yolo page or on Github. I don’t want all the classes and I didn’t think I needed all 10,000 photos, so I trimmed it down to 1000 photos. I added some squirrel photos that I had taken and a few of the likely birds that would be found in my backyard. I also used google image search to add a few more squirrels. I probably have about 100 squirrel photos.

Next, I built the Yolo_Mark software. This is a UI that will allow you to mark the relevant classes in the photos and create the required text file.

I edited the object data file to have 4 classes and the right paths. I copied and edited the .names file to have my four class names. There’s good amount of detail on what to do here. Then I put on some music and spent a few hours marking all the squirrel, birds, people, and cats in the photos.

Note - you can’t have the word “image” in any path or filename of the training files or darknet fails. There’s a bug somewhere.

YOLO Training

With all the images and data files set up, I started to train. I had a couple of false starts when I didn’t have the filters set correctly in the config file. I found with my graphics card that I couldn’t use Batch=64 and Subdivision=8 as recommended. I got out of memory errors with CUDA. I found somewhere that using a larger number of subdivisions would be slower but would use less memory. I found I could do ok with Subdivisions=24. It would run out of memory every few hundred iterations, but since it saves the files to backup after every hundred, I would just restart it when it failed.

It took about day to train. Given the fact that it stopped every hour or two and didn’t run overnight, it probably was something like 18 hours of actual running time. From time to time, I’d copy the weights file from the laptop with the GPU to the laptop that will do the detection and tested it on some sample files.

I tried to attach my weights file to this project but it's too large for this site.

Here’s a highlight from my notes at the time:

Yes! First squirrel detected at 54% likely with Pdq_1700.weights. Still not detecting people yet, so continuing training.

Darknet Python Script

Once I had a reasonably well trained weights, I wrote a python script to run Darknet. The only real hard part here was getting all the paths right. I needed the full path to python/darknet.py in the python path and in pdq_obj.data. I had to edit darknet.py to have the full path to the libdarknet.so.

It appears it was written to use Python 2.x and I’m using Python 3. I had to put parentheses around a print statement. I also needed to put a "b" in the load_net and load_meta statements in the script which appears to be a Python 3 requirement. Otherwise it was pretty straightforward.

import os,sys

import time

sys.path.append('/home/myusername/darknet/python')

sys.path.append('/home/myusername/darknet')

import darknet as dn

import pdb

import shutil

import numpy as np

import cv2

net = dn.load_net(b'/home/myusername/darknet/cfg/pdq.cfg',b'/home/myusername/darknet/pdq_3100.weights',0)

meta = dn.load_meta(b'/home/myusername/darknet/data/pdq_obj.data')

folder = '/home/myusername/Pictures'

while True:

files = os.listdir(folder)

#dn.detect fails occasionally. I suspect a race condition.

time.sleep(5)

for f in files:

if f.endswith(".jpg"):

print (f)

path = os.path.join(folder, f)

pathb = path.encode('utf-8')

res = dn.detect(net, meta, pathb)

print (res) #list of name, probability, bounding box center x, center y, width, height

i=0

new_path = '/home/myusername/Pictures/none/'+f #initialized to none

img = cv2.imread(path,cv2.IMREAD_COLOR) #load image in cv2

while i<len(res):

res_type = res[i][0].decode('utf-8')

if "person" in res_type:

#copy file to person directory

new_path = '/home/myusername/Pictures/people/'+f

#set the color for the person bounding box

box_color = (0,255,0)

elif "cat" in res_type:

new_path = '/home/myusername/Pictures/cat/'+f

box_color = (0,255,255)

elif "bird" in res_type:

new_path = '/home/myusername/Pictures/bird/'+f

box_color = (255,0,0)

elif "squirrel" in res_type:

new_path = '/home/myusername/Pictures/squirrel/'+f

box_color = (0,0,255)

#get bounding box

center_x=int(res[i][2][0])

center_y=int(res[i][2][1])

width = int(res[i][2][2])

height = int(res[i][2][3])

UL_x = int(center_x - width/2) #Upper Left corner X coord

UL_y = int(center_y + height/2) #Upper left Y

LR_x = int(center_x + width/2)

LR_y = int(center_y - height/2)

#write bounding box to image

cv2.rectangle(img,(UL_x,UL_y),(LR_x,LR_y),box_color,5)

#put label on bounding box

font = cv2.FONT_HERSHEY_SIMPLEX

cv2.putText(img,res_type,(center_x,center_y),font,2,box_color,2,cv2.LINE_AA)

i=i+1

cv2.imwrite(new_path,img) #wait until all the objects are marked and then write out.

#todo. This will end up being put in the last path that was found if there were multiple

#it would be good to put it all the paths.

os.remove(path) #remove the original

Future Improvement

Phase 1 is essentially complete. I want to do some more testing and maybe get a better webcam, as this one is pretty crummy. I also may want to balance the classes by adding more squirrel photos to improve accuracy. Then on to phase 2.

Python code for the Jevois Cam

#!/usr/bin/python3

import os,sys

import time

import shutil

import cv2

import serial

import logging

from datetime import datetime

def SendParm(cmd):

ser.write (cmd)

time.sleep(1)

line = ser.readline()

if Headless:

logging.info(cmd.decode('utf8'))

logging.info(line.decode('utf8'))

else:

print (cmd.decode('utf8'))

print (line.decode('utf8'))

#main -------

Headless=True

if len(sys.argv)>1:

if sys.argv[1]=='-show':

Headless=False

else:

Headless=True

if os.name == 'nt':

folder = 'C:\\users\\peter/jevois_capture'

logfile = 'c:\\users\\peter/jevois.log'

port = 'COM6'

camno = 1

else: #linux

folder = '/home/pi/jevois_capture'

logfile = '/home/pi/jevois.log'

port = '/dev/ttyACM0'

camno = 0

logging.basicConfig(filename=logfile,level=logging.DEBUG)

logging.info('------- Jevois Cam Startup --------')

ser = serial.Serial(port,115200,timeout=1)

# No windows in headless

if not Headless:

cv2.namedWindow("jevois", cv2.WINDOW_NORMAL)

cv2.resizeWindow("jevois",1280,480)

# cam 0 on pi, cam 1 on PC

camera = cv2.VideoCapture(camno)

#initialize the jevois cam. See below - don't change these as it will change the Jevois engine from YOLO to something else

camera.set(3,1280) #width

camera.set(4,480) #height

camera.set(5,15) #fps

s,img = camera.read()

#wait for Yolo to load on camera.

time.sleep(20)

#ser.write (b'setmapping2 YUYV 640 480 15.0 JeVois DarknetYOLO\n') # use YOLO

#ser.write (b'setmapping 15\n') # use YOLO

#### this setmappings stuff is not useful here. It doesn't use them. It uses the values

#### in the camera x y and FPS to look up the mode in the list. Use listmappings

#### Left in the script as it's useful place to copy from when debugging

#

#

# YOLO variables are documented here: http://jevois.org/moddoc/DarknetYOLO/modinfo.html

#

#SendParm (b'setpar serlog USB\n') #too many debug msgs. Only use if you have trouble

#

##################################################

# I put these commands in JEVOIS:/modules/JeVois/DarknetYOLO/params.cfg so I don't need this anymore

#SendParm (b'setpar datacfg cfg/pdq_obj.data\n')

#SendParm (b'setpar cfgfile cfg/pdq.cfg\n')

#SendParm (b'setpar weightfile weights/pdq_3100.weights\n')

######################################################

SendParm (b'setpar serstyle Normal\n') #use Normal format to include types and coordinates

time.sleep(2)

SendParm (b'setpar serout All\n') # output everything on serial

time.sleep(2)

while True:

s,img = camera.read()

if not Headless:

cv2.imshow("jevois", img )

cv2.waitKey(1)

line = ser.readline()

if not Headless:

print (line.decode('utf8'))

#example output when capturing a selection

# b'N2 person -388 -550 1316 1981\r\n'

# D2 id x y w h

# http://jevois.org/qa/index.php?qa=2079&qa_1=yolo-coordinate-output-to-serial-what-data-is-output

# coord system is -1000 to 1000 with 0,0 in the cam center. http://jevois.org/doc/group__coordhelpers.html

if len(line)>0:

if "DKY" in str(line):

pass #do nothing

elif "OK" in str(line):

pass #do nothing

else:

line_split = line.split(b" ")

line_split[1] = line_split[1].decode('utf8') #line_split[1] is the class of object found

x = int(line_split[2])

y = int(line_split[3])

w = int(line_split[4])

h = int(line_split[5])

#convert to pixels using 640x480

#scale x to 640 width from 2000 (-1000 to 1000) and offset by 640/2

x = int(x*640/2000+320)

w = int(w*640/2000)

#scale y to 480 height from 1500 (-750 to 750) and offset by 480/2.

y = int(y*480/1500+240)

h = int(h*480/1500)

if not Headless:

print ("x= "+str(x))

print ("y= "+str(y))

print ("w= "+str(w))

print ("h= "+str(h))

folder1 = folder + "/" + line_split[1] + "/"

if Headless:

logging.info(line_split[1]+" found")

else:

print (line_split[1]+" found")

if line_split[1] != "person" and line_split[1] != "cat":

#can't handle all the other classes yet

folder1 = folder + "/other/"

imagefile = datetime.now().strftime('%Y%m%d_%Hh%Mm%Ss%f') + '.jpg'

if Headless:

logging.info("writing image: "+imagefile)

else:

print("writing image: "+imagefile)

crop_img = img[0:480, 0:640] # crop to single image.

cv2.circle(crop_img,(x,y),25,(0,255,0),5)

cv2.rectangle(crop_img,(x-w,y-h),(x+w,y+h),(255,255,0),5)

cv2.imwrite(folder1+imagefile,crop_img)