After the initial enthusiasm, I finally got the machine home and slowly started working out what was what.

The GPU test

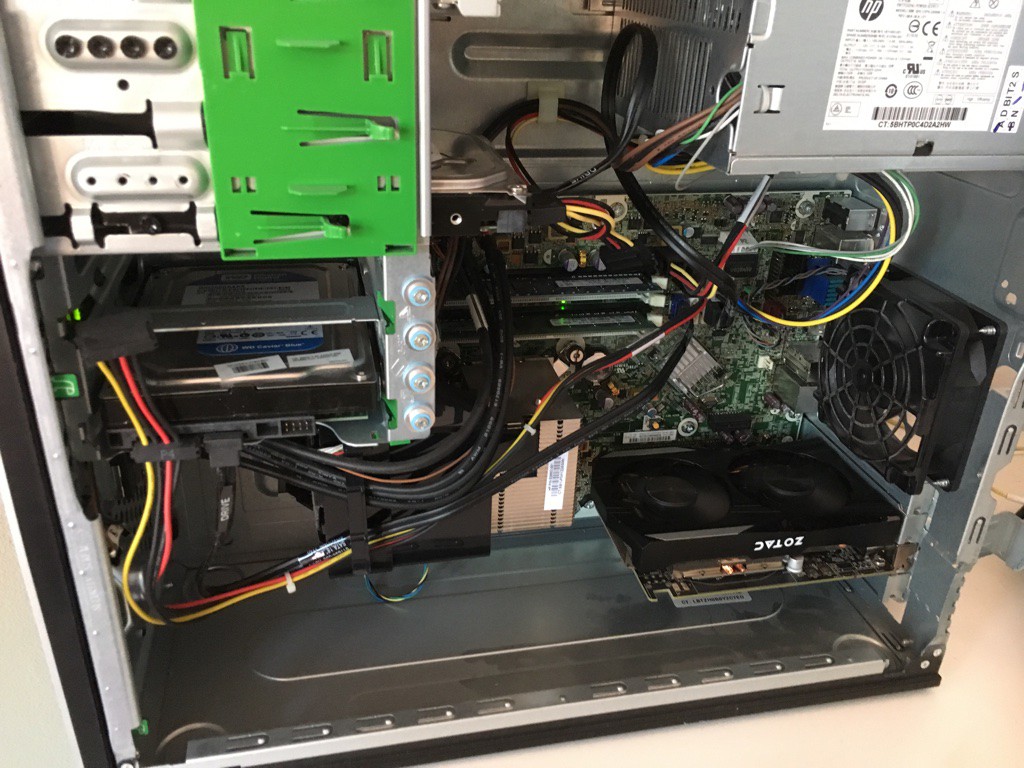

After much reading and deliberation, it turns out that the Microtower does indeed support a GPU. After some fiddling with the tower's mechanism, the GTX 1050 Ti was finally installed.

Thankfully I happened to have a compact Zotac model, because I don't think anything larger would have fit inside. I also had an old 160GB HDD that I had used for testing different Linux distros in the past, so I put that in as well.

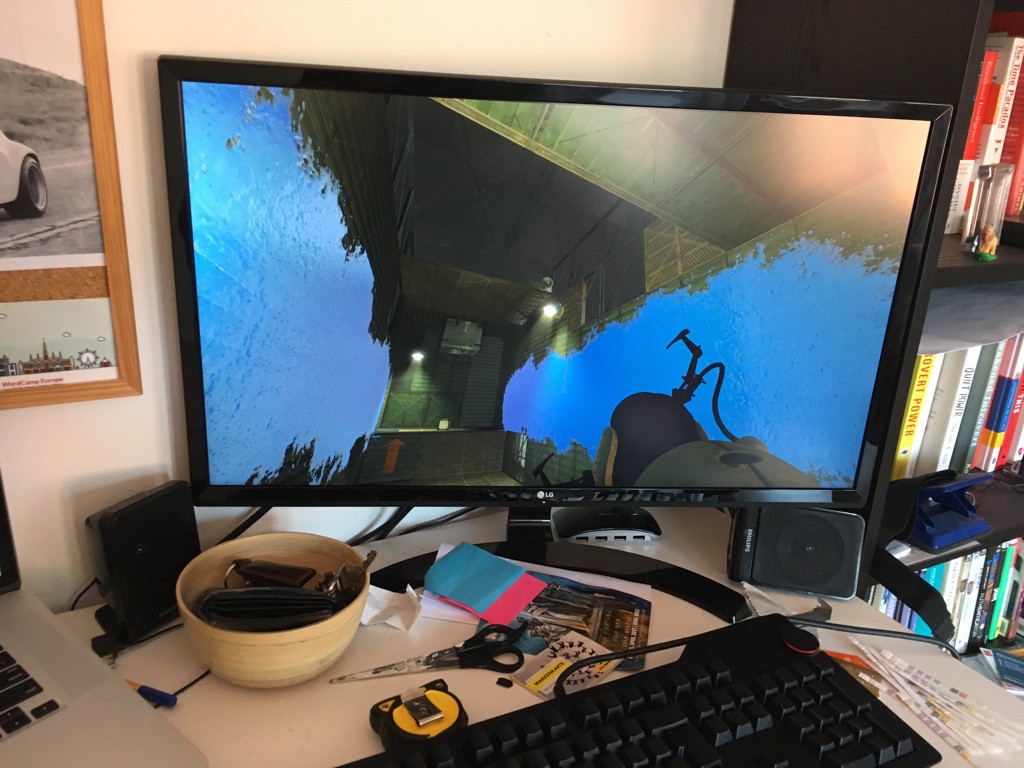

It turned out the 160GB drive still had Linux Mint 18.3 installed, along with the NVIDIA drivers and some games. With only 4GB of RAM I wasn't expecting much from this machine, but pictures say more than words:

It was running Portal 2 on a 4K screen at perfectly acceptable frame rates. The 4GB of RAM also proved adequate for a Linux machine used for basic web browsing, video and audio playback, and the odd spreadsheet.

Some basic testing begins

Over the next couple of days, now with 2 HDDs and the GPU inside, I decided to test some basic assumptions I had about software RAID with ZFS and btrfs. ZFS turned out to be very difficult to get working under Linux. The only distro that actually managed to boot on ZFS was Antergos.

My initial assumption was that software RAID would be smarter than traditional RAID and would use the full speed of 2 different devices, instead of being limited to 2× the capacity of the smaller disk and 2× the speed of the slower one.

HDD setup

At this point the system had the WD Blue 250GB 7200rpm disk it came with, good for 90-95 MB/s writes, and my old Seagate 160GB 7200rpm, good for slightly over 45 MB/s writes. So there is a substantial difference between the two drives in both size and speed.
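To make that assumption concrete, here is a toy model in Python using the drive figures above (92 MB/s is an assumed midpoint of the WD's 90-95 MB/s). It contrasts a naive stripe, where every write is split evenly so the smaller disk caps the capacity and the slower disk caps the speed, with an idealised case where each disk contributes its full size and speed. Both are simplifications for illustration, not a description of how btrfs or any particular RAID implementation allocates data.

```python
from dataclasses import dataclass


@dataclass
class Disk:
    name: str
    size_gb: int    # nominal capacity in GB
    write_mbs: int  # sequential write speed in MB/s


def naive_stripe(disks):
    """Equal chunks on every disk: the smallest and slowest member set the limits."""
    n = len(disks)
    capacity = n * min(d.size_gb for d in disks)
    speed = n * min(d.write_mbs for d in disks)
    return capacity, speed


def ideal_combined(disks):
    """Best case: all space is used and every disk writes at its own full speed."""
    return sum(d.size_gb for d in disks), sum(d.write_mbs for d in disks)


# Figures from this log; 92 MB/s is an assumed midpoint of the WD's 90-95 MB/s.
disks = [Disk("WD Blue 250GB", 250, 92), Disk("Seagate 160GB", 160, 45)]
print("naive stripe (GB, MB/s):", naive_stripe(disks))    # (320, 90)
print("ideal        (GB, MB/s):", ideal_combined(disks))  # (410, 137)
```

The naive figure happens to match the ~90 MB/s the Ubuntu setup delivered, and the ideal one sits in the 130 to 140 MB/s range Fedora reached; the match is suggestive rather than proof of how either volume was laid out.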

Btrfs on 2 different disks

I tried btrfs with Fedora 28, Ubuntu 18.04 and Linux Mint 19. The Fedora installer offers substantially more options for setting up btrfs and LVM than the Debian derivatives do. While you can install Ubuntu on btrfs, the installer basically treats it like any old ext4/XFS partition. Not very good.

Fedora blew away my expectations: after a few different write tests with dd at various block sizes, the btrfs volume it had set up turned out to combine the speeds of the 2 drives. I was seeing write speeds of 130 to 140 MB/s.
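For anyone wanting to repeat this kind of test, here is a rough Python stand-in for a dd write run such as `dd if=/dev/zero of=testfile bs=1M count=1024 conv=fdatasync`. The exact dd commands used here aren't recorded, so the block sizes, the file size and the `DDTEST_DIR` environment variable are illustrative assumptions. It writes unbuffered blocks of zeros and includes an fsync in the timing so the page cache doesn't inflate the result.

```python
import os
import tempfile
import time


def write_speed_mbs(path, block_size, total_bytes):
    """Write zeros in block_size chunks, fsync, delete the file and return MB/s."""
    block = b"\0" * block_size
    count = total_bytes // block_size
    start = time.perf_counter()
    # buffering=0: every write() goes straight to the OS, one call per block like dd
    with open(path, "wb", buffering=0) as f:
        for _ in range(count):
            view = memoryview(block)
            while view:  # raw writes may be partial, so loop until the block is done
                view = view[f.write(view):]
        os.fsync(f.fileno())  # roughly conv=fdatasync: count the flush to disk in the timing
    elapsed = time.perf_counter() - start
    os.remove(path)
    return count * block_size / elapsed / 1e6


if __name__ == "__main__":
    # Hypothetical target: point DDTEST_DIR at a directory on the btrfs volume under test.
    target = os.path.join(os.environ.get("DDTEST_DIR", tempfile.gettempdir()), "ddtest.bin")
    total = 64 * 1024 * 1024  # small example size; use several GB for meaningful numbers
    for bs in (4 * 1024, 64 * 1024, 1024 * 1024):
        print(f"bs={bs:>8} B: {write_speed_mbs(target, bs, total):7.1f} MB/s")
```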

On Ubuntu I basically installed the system on one drive, then added the second drive afterwards and ran a rebalance to make sure both disks were loaded evenly. I was honestly expecting performance similar to Fedora at this point, but no: I was seeing 90 MB/s writes, basically 2× the slowest drive. Not very encouraging. Mint behaved the same as the Ubuntu it is based on.
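The Ubuntu route (install on one disk, add the second, rebalance) corresponds roughly to the btrfs-progs commands below, wrapped in a small Python helper that only prints them unless told otherwise. The device `/dev/sdb`, the mount point `/` and the choice of `raid0` for data and `raid1` for metadata are my assumptions; the log doesn't say which profiles were used. Note that a balance without `-dconvert` keeps the existing data profile, so a volume that started out on a single disk stays in the `single` profile and is not striped.

```python
import shlex
import subprocess


def add_and_rebalance_cmds(new_device, mount_point, data_profile="raid0", metadata_profile="raid1"):
    """Build the commands to add a disk to a mounted btrfs volume and restripe it."""
    return [
        ["btrfs", "device", "add", new_device, mount_point],
        # The convert filters rewrite existing chunks into the requested profiles.
        ["btrfs", "balance", "start",
         f"-dconvert={data_profile}", f"-mconvert={metadata_profile}", mount_point],
        # Shows per-device allocation and the resulting profiles.
        ["btrfs", "filesystem", "usage", mount_point],
    ]


def run(cmds, dry_run=True):
    """Print each command; only execute (as root) when dry_run is False."""
    for cmd in cmds:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(add_and_rebalance_cmds("/dev/sdb", "/"))  # hypothetical device name
```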

Interesting setups

The way Fedora set up the disks also made more of the space available. It created the EFI and /boot partitions on the bigger 250GB disk, then created a btrfs partition the same size as the entire 160GB disk. These 2 identically sized partitions were then striped. At the end of the 250GB disk I was still left with 80GB of unpartitioned space that I could use either to grow the btrfs pool or to make another ext4 or XFS partition if I chose.

I'm not quite sure how the btrfs volume gets balanced across such different HDDs, but the speed is there. I was definitely not expecting this.
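The ~80GB left over is roughly what that layout predicts. A quick Python check, assuming a 200 MiB EFI partition and a 1 GiB /boot (typical installer defaults, not values read from this machine) and treating the drive labels as decimal GB, while partitioning tools usually report binary GiB:

```python
GB = 10**9   # drive makers' decimal gigabyte
GiB = 2**30  # binary gibibyte, as most partitioning tools report sizes
MiB = 2**20

big_disk = 250 * GB
small_disk = 160 * GB
efi = 200 * MiB  # assumed EFI system partition size
boot = 1 * GiB   # assumed /boot size

# The btrfs partition on the big disk matches the size of the whole small disk,
# and the two equal partitions are striped together.
stripe_member = small_disk
striped_raw = 2 * stripe_member
leftover = big_disk - efi - boot - stripe_member

print(f"striped btrfs (raw): {striped_raw / GiB:.1f} GiB")  # 298.0 GiB
print(f"unpartitioned:       {leftover / GiB:.1f} GiB")     # 82.6 GiB
```

That lands near the 80GB reported; the exact figure depends on the real partition sizes and alignment.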

Further distribution testing

After multiple failed attempts at getting ZFS on Linux to work with Ubuntu and Fedora, I was ready to give up when I found out that Antergos has a built-in ZFS option in its installer menu.

I tried Antergos and got it installed. It made it to the first boot, but after the first restart it wouldn't boot anymore.

I wasn't able to get any speed testing done with ZFS, but that will come once the new drives show up. Then I can install the system on a different drive and set up the pool on the two disks separately.

Lucky accident

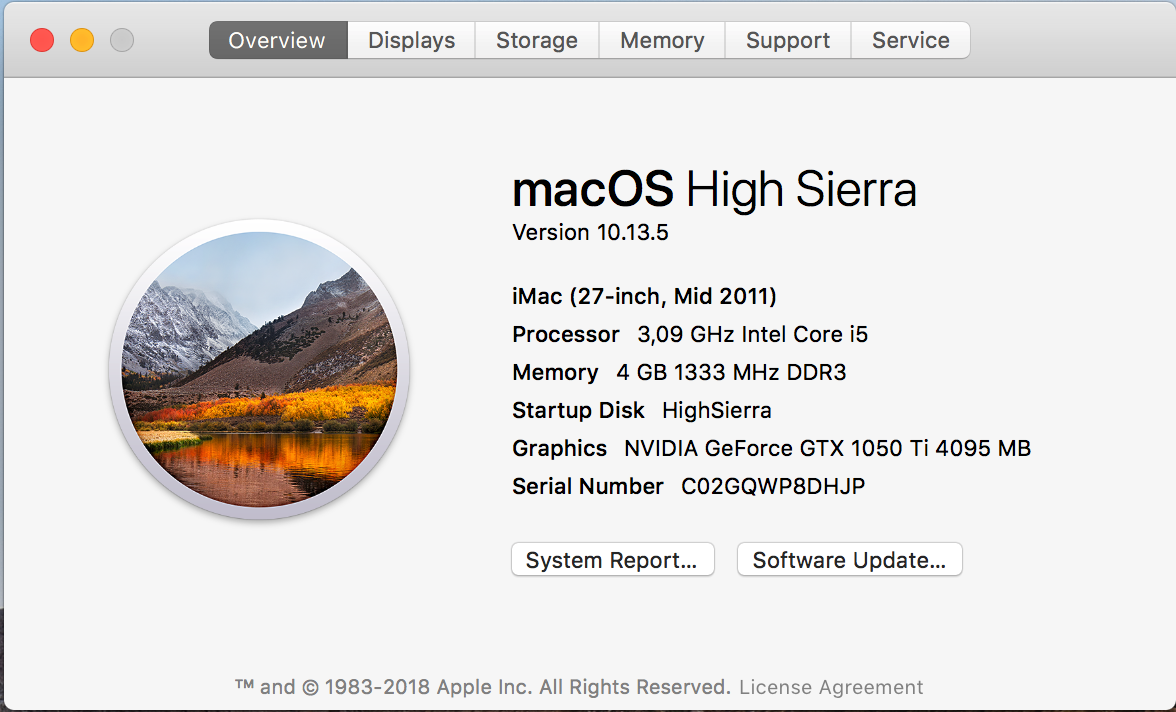

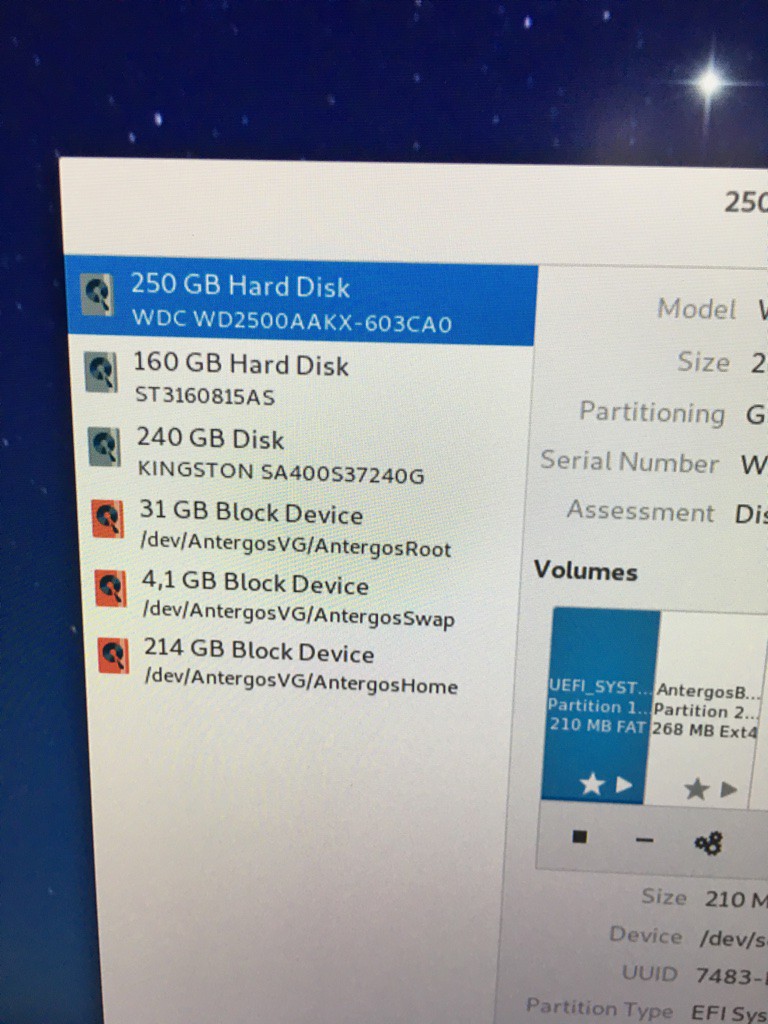

After testing various distros with various setups, I somehow managed to end up here:

It turns out the HP 6200s and 8200s make quite compatible Hackintosh machines. I learned quite a lot about how OS X boots in the process. The install itself was quite painless once you have all the pieces together.

macOS also seemed to be just as light as most Linux distros. I never expected it to be usable with just 4GB of RAM, something Windows 10 can't really manage.

The day the Postman came

After a long delay on the online shop's side, the parts I had ordered eventually arrived.

The package contained the SATA controller, 2 SSDs, 16GB of DDR3 RAM and a few power splitters.

Right now I'm still missing the Gbit NIC and the 3-drive bay, neither of which was in stock. They will be delivered later, once they become available.

Compatibility checks begin

I started the compatibility testing with the main part of the build, the PCI SATA controller.

It fit right underneath the GPU without problems and was detected straight away by the BIOS; like most other RAID controllers, it shows up with its own screen before the boot process (did I mention this card is also a RAID controller?). In case you are wondering, the exact model is the Dawicontrol DC-3410 RAID. The only limitation is that, because of the PCI bus, it can only do SATA II, which is enough for HDDs but too slow for SSDs.
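A quick sanity check of the bus limit, assuming the card sits in a conventional 32-bit, 33 MHz PCI slot (the log doesn't specify the slot variant) and that the bus bandwidth is shared by all drives on the card. SATA II's 300 MB/s figure comes from its 3 Gb/s line rate with 8b/10b encoding. These are theoretical peaks; real PCI throughput is noticeably lower.

```python
PCI_BUS_BITS = 32        # assumed conventional 32-bit PCI
PCI_CLOCK_HZ = 33.33e6   # assumed 33 MHz slot
SATA2_PAYLOAD_MBS = 300  # 3 Gb/s line rate / 10 bits per byte (8b/10b)


def pci_peak_mbs(bits=PCI_BUS_BITS, clock_hz=PCI_CLOCK_HZ):
    """Theoretical peak: one bus-width transfer per clock cycle."""
    return bits / 8 * clock_hz / 1e6


def per_drive_mbs(active_drives):
    """The PCI bus is shared, so busy drives split the peak between them."""
    return pci_peak_mbs() / active_drives


print(f"PCI peak {pci_peak_mbs():.0f} MB/s vs one SATA II link {SATA2_PAYLOAD_MBS} MB/s")
for n in (1, 2, 3):
    print(f"{n} drive(s) busy: ~{per_drive_mbs(n):.0f} MB/s each")
```

On those assumptions the shared PCI bus, rather than the SATA II link, is the tighter ceiling: one HDD at around 90 MB/s fits, but several busy drives or any SSD would be held back.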

The card installed and running looks like this:

You can barely see it underneath the GPU.

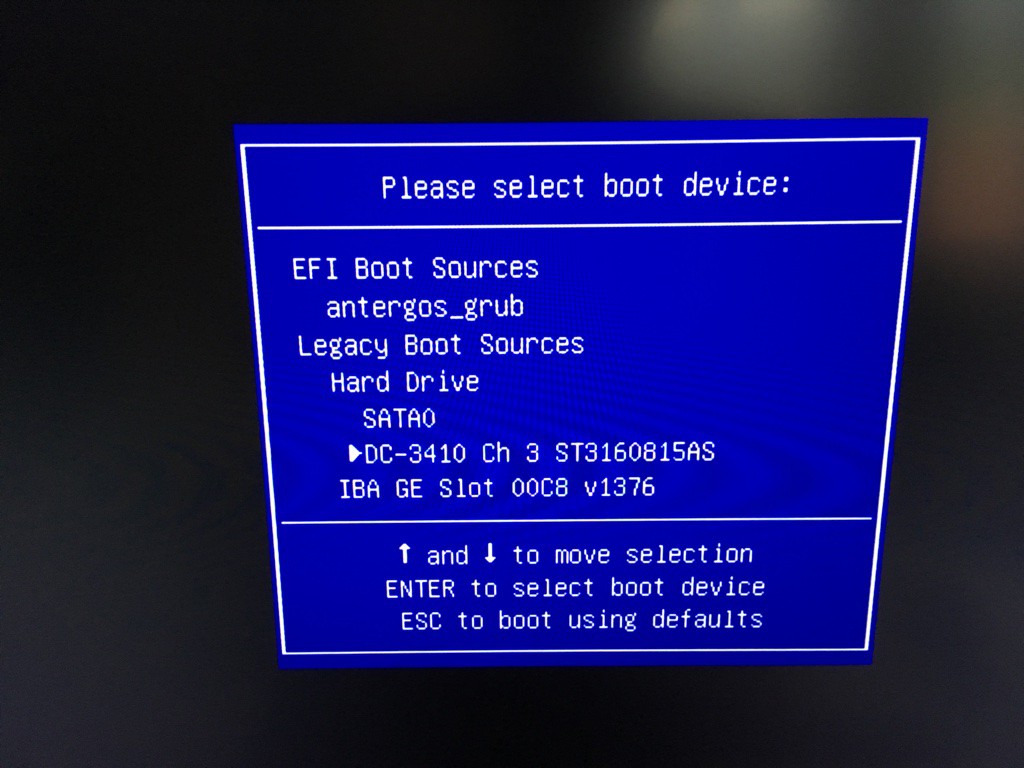

The drives show up at boot with the name of the controller:

The disks are also easy to set up for RAID from inside the BIOS or with the provided software. I will have to test whether Linux mdadm can handle this card's RAID, but the disks on the card are detected right away in Linux. macOS does not detect them.

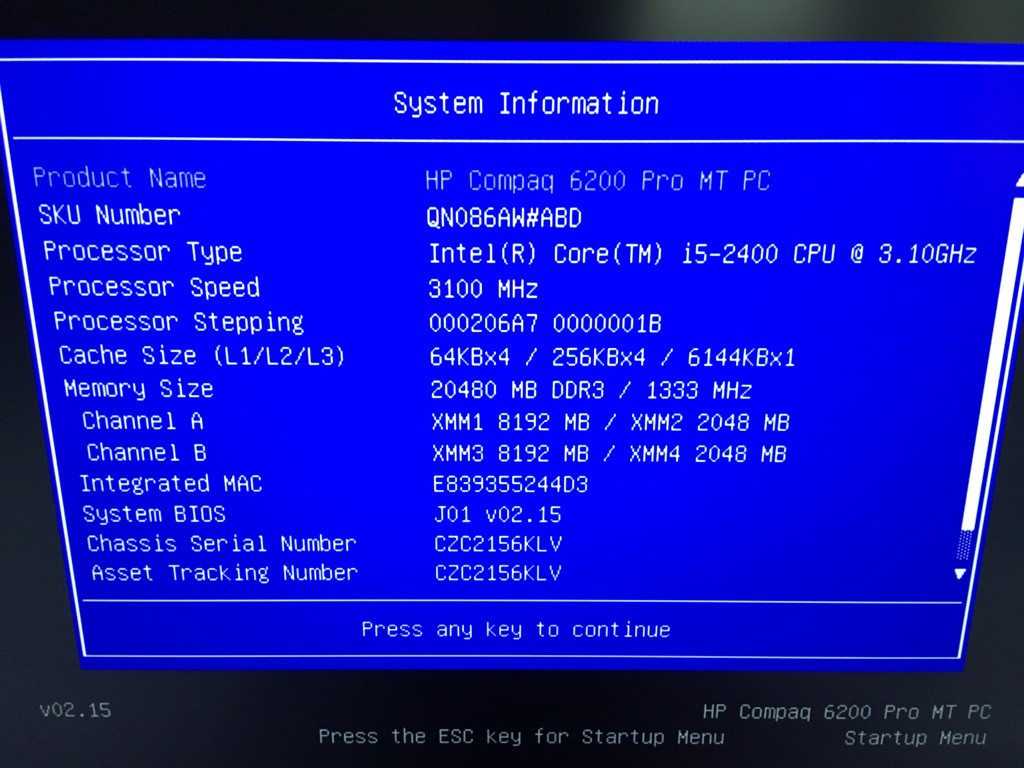
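To confirm which drives the kernel has picked up (for example the ones behind the controller), a minimal Python sketch can read sysfs directly. It relies on `/sys/block/<dev>/size` being reported in 512-byte sectors and on `/sys/block/<dev>/device/model` holding the model string for SATA/SCSI disks; the list of skipped device prefixes is my own choice. `lsblk` gives the same information from the shell.

```python
import os

SKIP_PREFIXES = ("loop", "ram", "zram", "sr")  # virtual devices and optical drives


def list_disks(sys_block="/sys/block"):
    """Return (name, size in GB, model) for each block device the kernel reports."""
    if not os.path.isdir(sys_block):
        return []  # not Linux, or sysfs not mounted
    disks = []
    for name in sorted(os.listdir(sys_block)):
        if name.startswith(SKIP_PREFIXES):
            continue
        base = os.path.join(sys_block, name)
        try:
            with open(os.path.join(base, "size")) as f:
                sectors = int(f.read().strip())  # always 512-byte units in sysfs
        except (OSError, ValueError):
            continue
        model = ""
        model_path = os.path.join(base, "device", "model")
        if os.path.exists(model_path):
            with open(model_path) as f:
                model = f.read().strip()
        disks.append((name, sectors * 512 / 1e9, model))
    return disks


if __name__ == "__main__":
    for name, size_gb, model in list_disks():
        print(f"/dev/{name}: {size_gb:.0f} GB {model}")
```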

The dreaded RAM upgrade

I could have ended up writing quite a bit about the trouble of getting the slots to accept the modules, getting the timings right and so on... but no, they just worked. Not only did the 16GB Mushkin kit work, it also works alongside the original modules. The Storea Pig is now equipped with 20GB of RAM.

To check that everything was compatible and working without errors, I booted a couple of Linux live DVDs (these usually fail while unpacking the squashfs if the RAM is bad), and also started macOS and played around, just in case. Everything except the HDDs on the controller is detected:

I think the reason I got such compatible RAM is that I did quite a lot of research into which timings the HP motherboard expects. So, for those interested: you need 1.5 V modules with 9-9-9-24 timings. If you want exactly the kit I got, it is the Mushkin 997018 2x8GB PC3-10600.

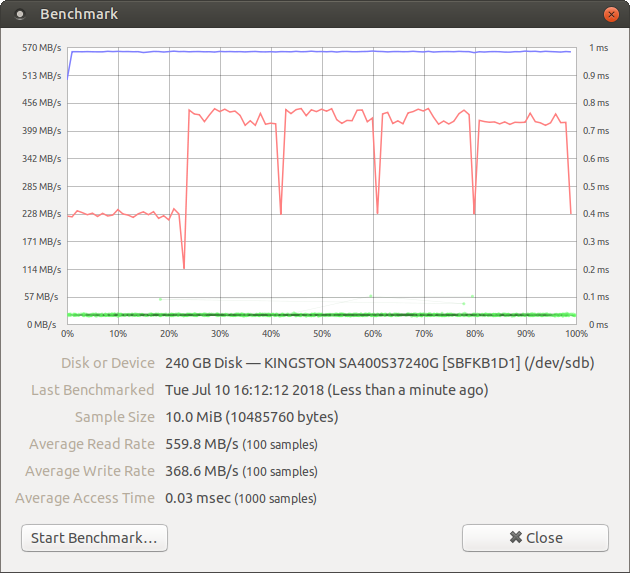

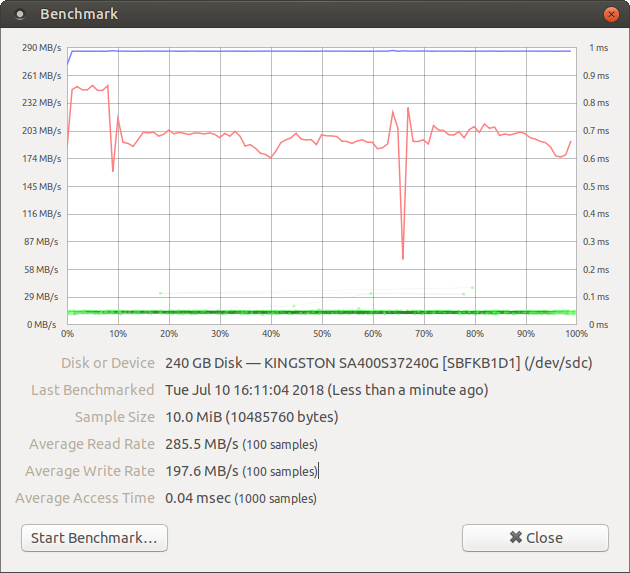
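The kit's label translates into concrete numbers with the usual DDR3 rules of thumb: PC3-10600 is the DDR3-1333 grade (about 1333 MT/s on a 64-bit module), and CL9 is counted in memory-clock cycles, where the clock runs at half the transfer rate. A short Python conversion, for illustration only:

```python
def module_bandwidth_mbs(mt_per_s, bus_bits=64):
    """Peak transfer rate of one DIMM: transfers per second times bytes per transfer."""
    return mt_per_s * bus_bits / 8


def cas_latency_ns(cl, mt_per_s):
    """CL cycles at a clock of half the transfer rate: ns = CL * 2000 / (MT/s)."""
    return cl * 2000 / mt_per_s


DDR3_1333 = 1333.33  # MT/s, the nominal rate behind the PC3-10600 label
print(f"peak bandwidth per module: {module_bandwidth_mbs(DDR3_1333):.0f} MB/s")  # 10667
print(f"CL9 first-word latency:    {cas_latency_ns(9, DDR3_1333):.1f} ns")        # 13.5
```

The ~10667 MB/s result is where the rounded-down "10600" in the PC3 name comes from.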

Some SSD speed testing

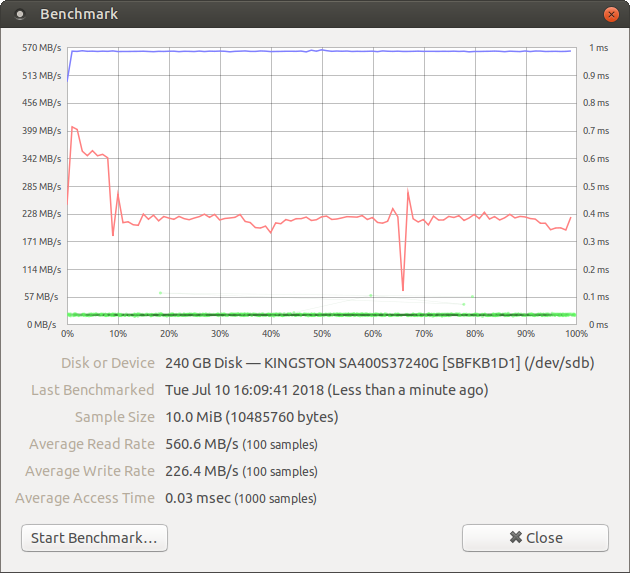

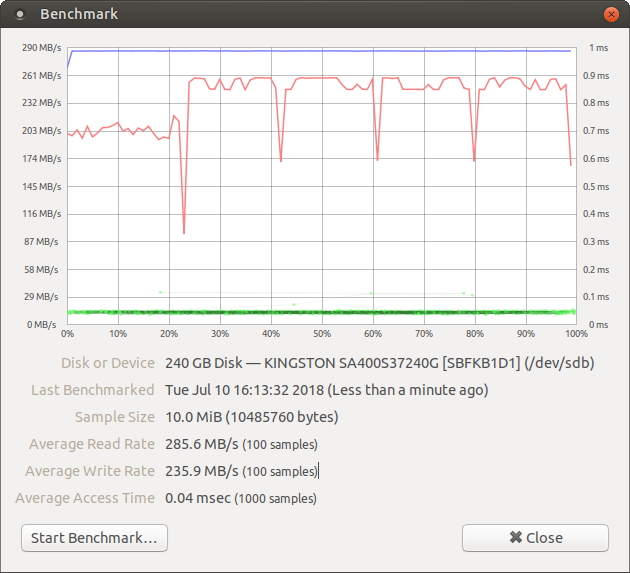

When I decided to go for SSDs in this system, I based my plan on HP's technical documents, according to which the motherboard has 2 SATA III (6 Gb/s) ports. Unfortunately, in my testing I wasn't able to find the second SATA III port. Only the first port, SATA0, really is 6 Gb/s, and the SSDs run at full speed on that port. The other ports are all SATA II, so only half the speed (3 Gb/s). Here are some benchmark results:

The SSDs perform as expected considering the ports they are plugged into.
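The halving follows directly from the line rates. SATA generations I to III use 8b/10b encoding, so every data byte costs 10 bits on the wire; a small Python conversion shows the resulting ceilings:

```python
def sata_payload_mbs(line_rate_gbps):
    """8b/10b encoding: 10 line bits carry 8 data bits, i.e. 1 byte per 10 bits."""
    return line_rate_gbps * 1e9 / 10 / 1e6


for gen, rate in (("SATA I", 1.5), ("SATA II", 3.0), ("SATA III", 6.0)):
    print(f"{gen}: {rate} Gb/s line rate -> ~{sata_payload_mbs(rate):.0f} MB/s payload ceiling")
```

So an SSD rated above roughly 300 MB/s sequential will be capped on the SATA II ports, whatever the drive itself can do.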

Booting

One thing that came up during this initial testing was how booting from the controller behaves. Legacy boot seems to work without any problems. EFI, on the other hand, doesn't get detected on the drives attached to the PCI controller.

I also tested booting macOS from the controller, but that obviously didn't work, since macOS doesn't detect the controller to begin with.

Antergos was installed in EFI mode, so I couldn't test that either, but the BIOS did delete its EFI boot entry when the drive was no longer on the same port. I can now only boot it manually by navigating to the EFI file. A problem for future me.

Conclusion

So far I'm quite happy with how everything is working out. The RAM worked surprisingly well without any debugging. The controller is far more compatible than expected; I honestly wasn't expecting its drives to show up as boot options. I didn't buy it for the RAID functionality, I just needed the extra SATA ports, but now I can also test hardware RAID with it.

I'm a bit disappointed about the whole HP SATA III situation. Only one SSD will be running at full speed. Maybe with some more research I will find a way to get port 1 running at SATA III as well; all the technical documentation says it should.

I will have to see how the power budget holds up. The GPU was never part of the initial plan, and it is the biggest consumer. We'll see where we stand on power once all the HDDs are inside.
