Hi everyone,

So far, all the hardware updates have been the work of our lead hardware engineer, Brian. Today we'd like to introduce a new addition to our team, Martin, whose expertise is in firmware (and software). He's starting with depth work on the Myriad X and will then be tackling all sorts of other Myriad X firmware and software.

Martin has already made short work of improving the depth performance: he found and fixed a couple of bugs, and he's been experimenting with some depth-processing techniques to improve the depth results. If these work well, they'll eventually be implemented on the SHAVEs of the Myriad X.

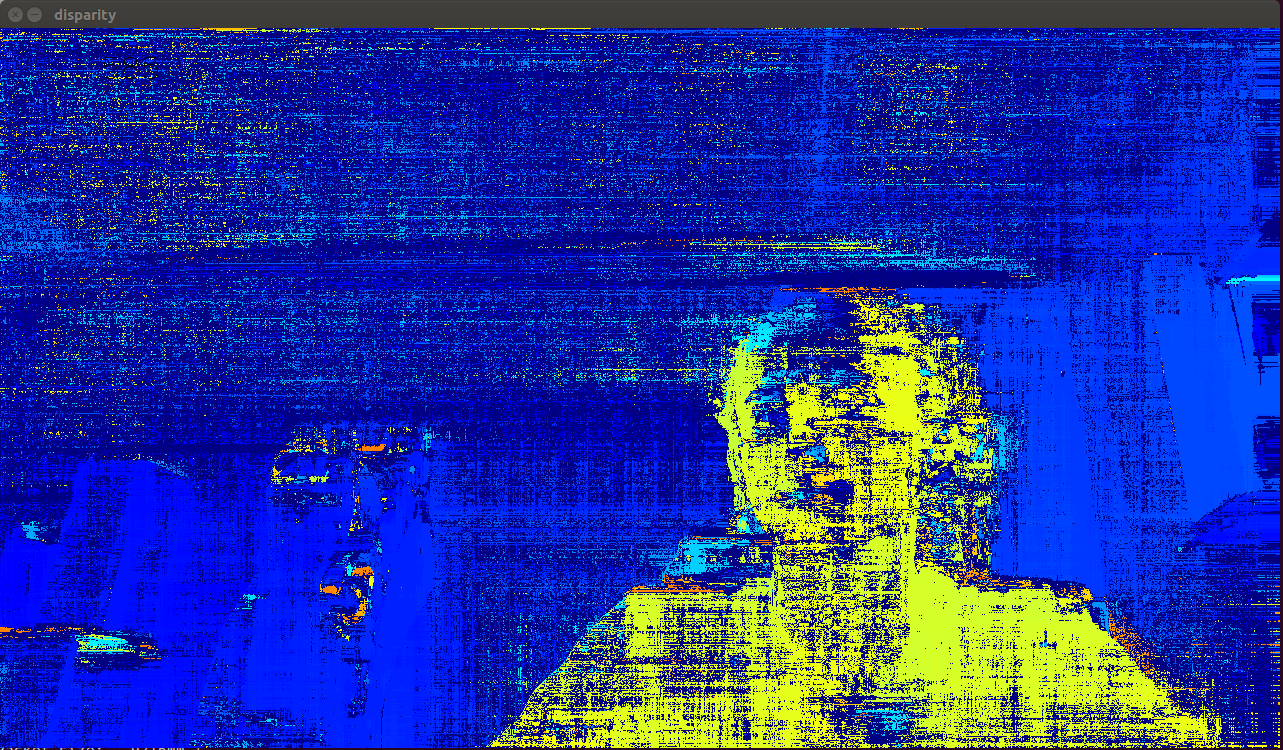

Here's the depth prior to calibration:

It turns out we had been using uncalibrated depth as a result of a bug in loading the calibration, which we've since found and fixed.

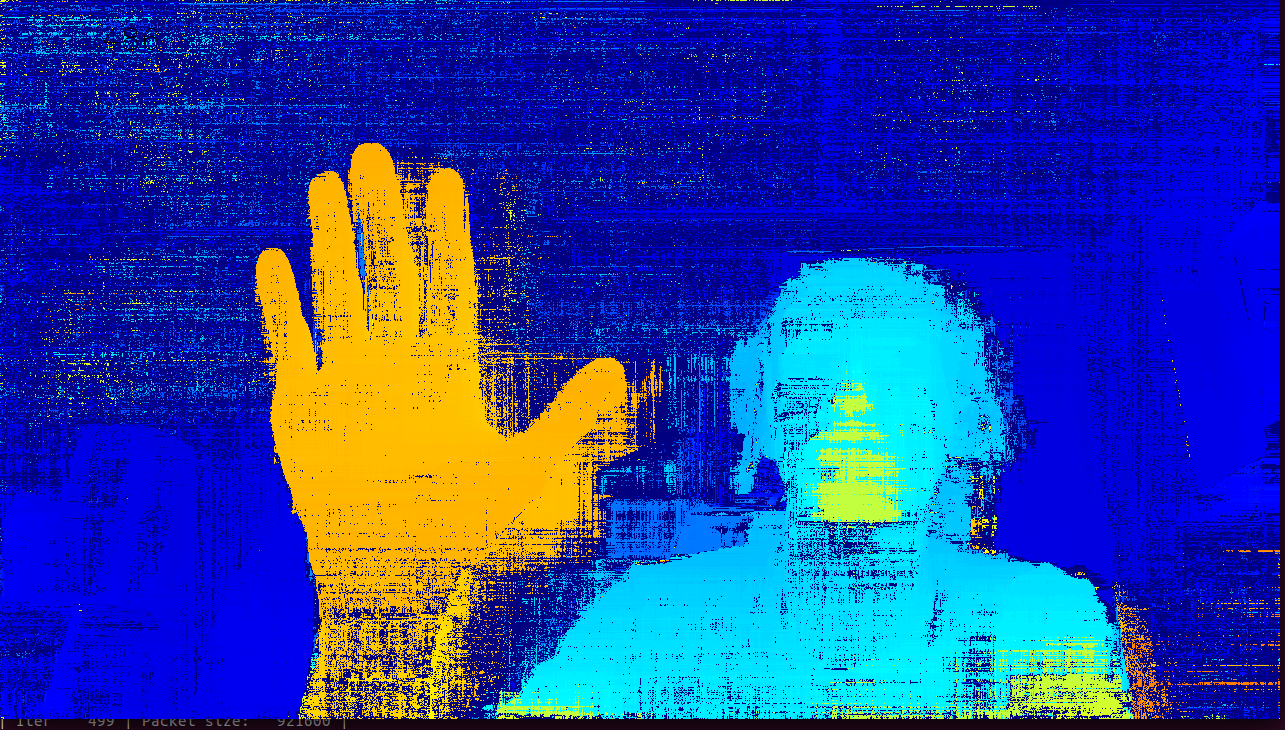
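One way to keep this class of bug from silently recurring is to make calibration loading fail loudly instead of falling back to identity (i.e. unrectified) matrices. Here's a minimal Python sketch of that idea. The JSON layout, the key names `left_rectification`/`right_rectification`, and both function names are hypothetical and are not the actual DepthAI calibration format.

```python
import json
import math

# Hypothetical calibration layout: each key holds a 3x3 rectification
# homography stored as nested lists. Not the real DepthAI file format.
REQUIRED_KEYS = ("left_rectification", "right_rectification")
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def validate_calibration(calib):
    """Check a parsed calibration dict and raise ValueError if it is unusable."""
    for key in REQUIRED_KEYS:
        if key not in calib:
            # Raise rather than defaulting to identity, which would
            # quietly produce uncalibrated depth.
            raise ValueError(f"calibration is missing {key!r}")
        h = calib[key]
        if len(h) != 3 or any(len(row) != 3 for row in h):
            raise ValueError(f"{key!r} is not a 3x3 matrix")
        if not all(math.isfinite(v) for row in h for v in row):
            raise ValueError(f"{key!r} contains non-finite values")
    if all(calib[key] == IDENTITY for key in REQUIRED_KEYS):
        raise ValueError("both rectification matrices are identity; "
                         "calibration was probably never applied")
    return {key: calib[key] for key in REQUIRED_KEYS}


def load_stereo_calibration(path):
    """Read a (hypothetical) JSON calibration file and validate it."""
    with open(path) as f:
        return validate_calibration(json.load(f))
```

The point of the design is that a broken or missing calibration becomes an error at startup rather than a subtle degradation in the depth output.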

And here is with the bug fix and calibration applied:

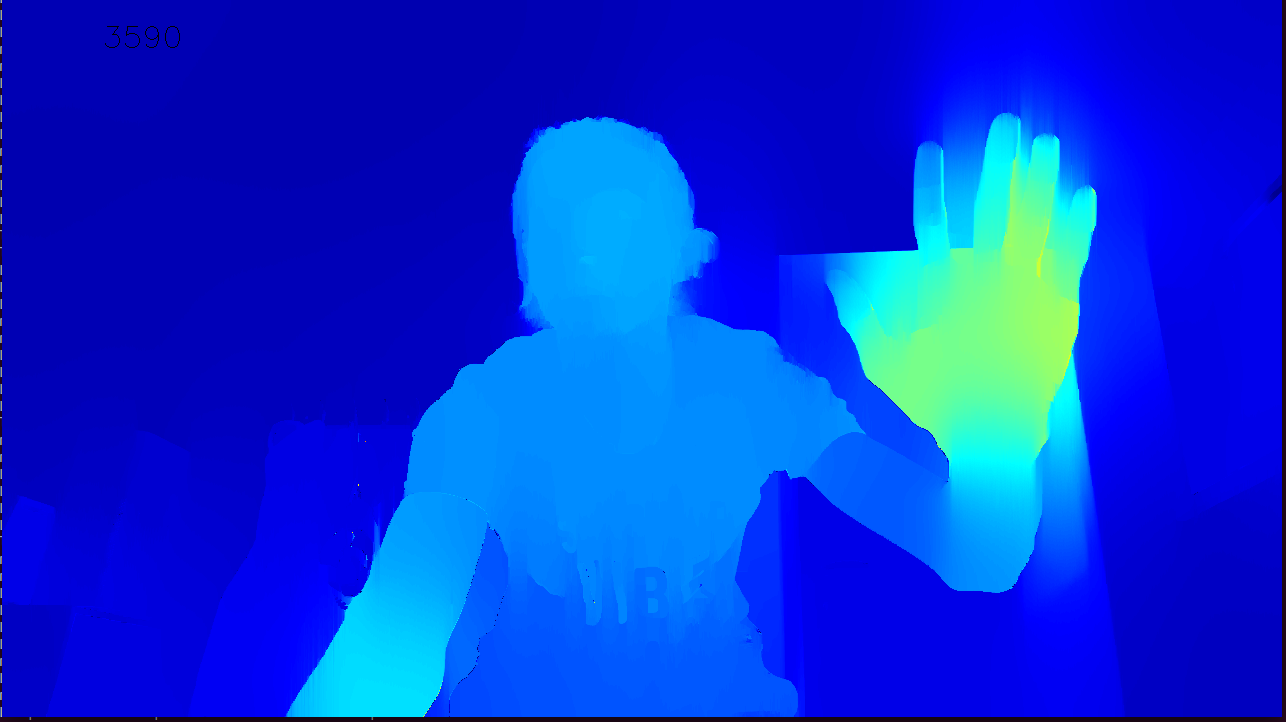

And here it is with some edge detection on the mono image plus smoothing, similar to what the Intel D435 does:

These are just initial guesstimates at the smoothing coefficients, so they're probably not ideal for our setup.

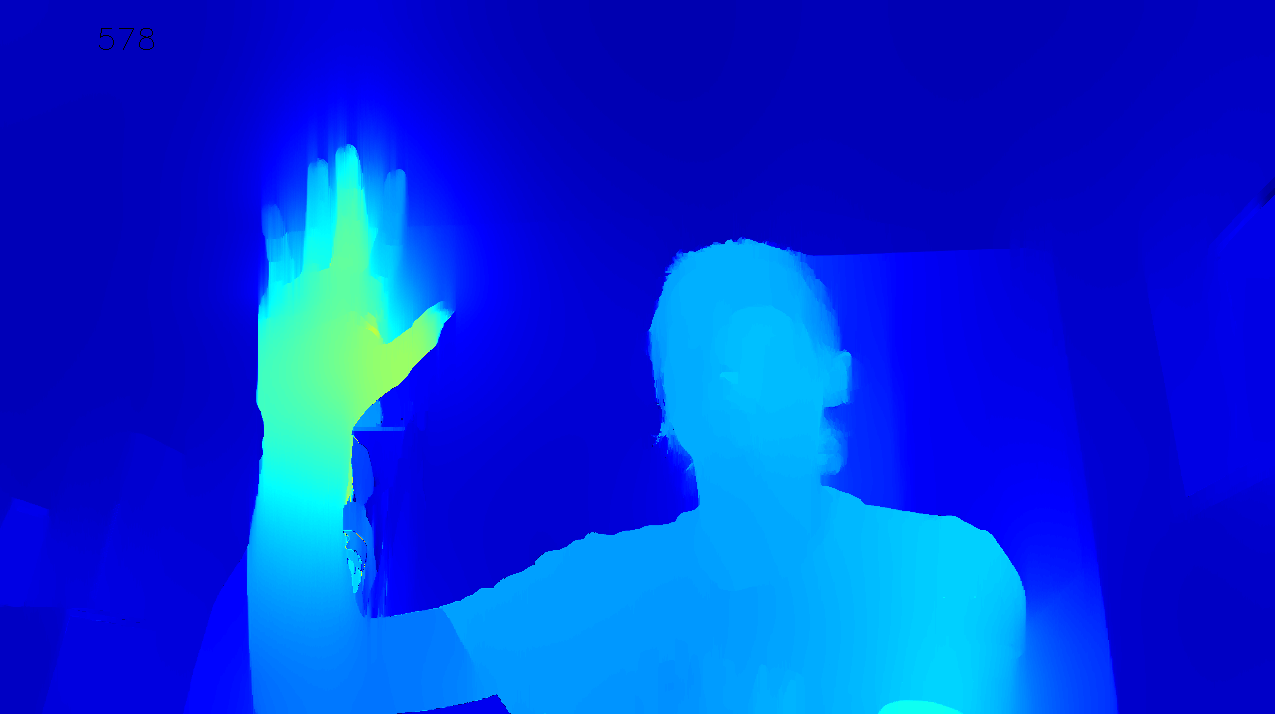
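As a rough illustration of this kind of edge-aware smoothing, here's a minimal Python sketch: an exponential moving average run forward and backward along each row and then each column, which stops blending wherever the mono image has an edge. This is not the DepthAI or D435 implementation. It assumes a depth map and a mono image of the same size and treats 0 as invalid depth; the parameters `alpha` (weight of the current sample, so lower means more smoothing) and `edge_thresh` (mono-intensity jump treated as an edge) are hypothetical, loosely analogous to the smoothing coefficients mentioned above.

```python
import numpy as np


def _smooth_1d(depth, guide, alpha, edge_thresh):
    """Forward then backward exponential smoothing along one line.

    Blending is skipped where neighbouring guide (mono) pixels differ by
    edge_thresh or more, or where either depth sample is invalid (0).
    """
    out = depth.astype(np.float64)  # astype returns a copy
    n = out.shape[0]
    # Left-to-right pass.
    for i in range(1, n):
        if (abs(guide[i] - guide[i - 1]) < edge_thresh
                and out[i] > 0 and out[i - 1] > 0):
            out[i] = alpha * out[i] + (1.0 - alpha) * out[i - 1]
    # Right-to-left pass, so the result is not biased toward one side.
    for i in range(n - 2, -1, -1):
        if (abs(guide[i] - guide[i + 1]) < edge_thresh
                and out[i] > 0 and out[i + 1] > 0):
            out[i] = alpha * out[i] + (1.0 - alpha) * out[i + 1]
    return out


def edge_aware_smooth(depth, mono, alpha=0.5, edge_thresh=20.0):
    """Smooth a depth map along rows, then columns, guided by mono edges."""
    depth = np.asarray(depth, dtype=np.float64)
    # Convert to float so uint8 differences cannot wrap around.
    mono = np.asarray(mono, dtype=np.float64)
    out = np.empty_like(depth)
    for r in range(depth.shape[0]):
        out[r] = _smooth_1d(depth[r], mono[r], alpha, edge_thresh)
    for c in range(depth.shape[1]):
        out[:, c] = _smooth_1d(out[:, c], mono[:, c], alpha, edge_thresh)
    return out
```

Raising `alpha` toward 1.0 gives less smoothing (at exactly 1.0 the depth passes through unchanged), which is the kind of knob being turned when trying "a bit less smoothing".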

We quickly tried a bit less smoothing and that seems even better:

So we're still tweaking and experimenting, but we figured we'd share an update since these results are starting to look about as good as those from the D435 on our CommuteGuardian prototype (here).

And for comparison with that video, below are some results from median filtering (first video) and from mono-camera-based edge detection and smoothing (second video). The edge detection isn't synced properly yet, so you can see ghosting when there's motion, as the mono (grayscale) video feed falls out of sync with the depth feed.

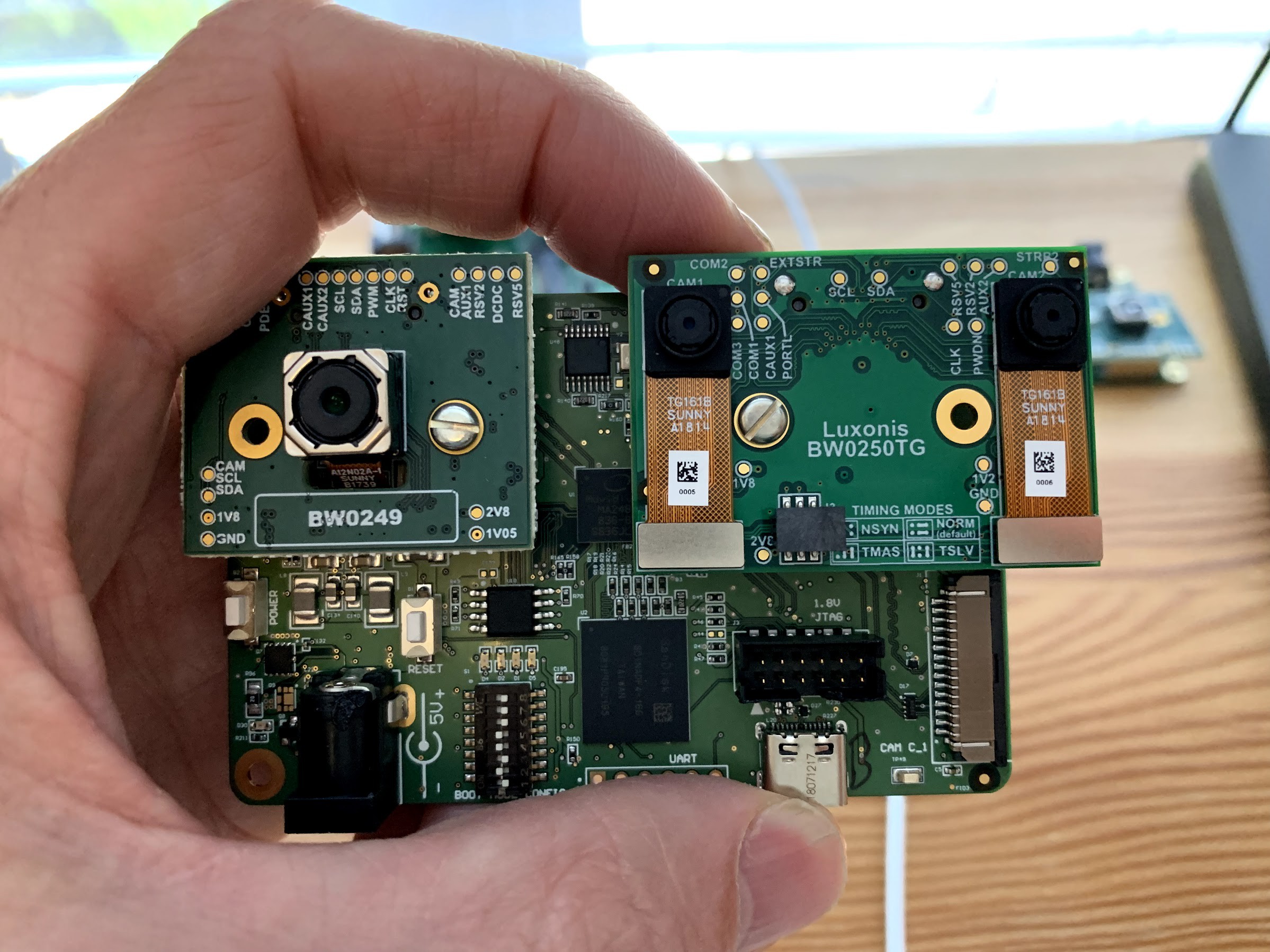
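The ghosting comes from pairing a depth frame with a mono frame captured at a different moment. A common remedy is to pair frames by capture timestamp and drop pairs that are too far apart. Here's a minimal Python sketch, assuming each frame arrives with a capture timestamp (integer microseconds here) and both streams are sorted by time; `pair_by_timestamp` and the `max_skew` tolerance are hypothetical and not part of any DepthAI API.

```python
import bisect


def pair_by_timestamp(depth_frames, mono_frames, max_skew):
    """Pair each depth frame with the mono frame closest to it in time.

    depth_frames and mono_frames are lists of (timestamp, frame) tuples,
    sorted by timestamp. Depth frames with no mono frame within max_skew
    are dropped rather than paired with a stale image.
    """
    mono_ts = [t for t, _ in mono_frames]
    pairs = []
    for t, depth in depth_frames:
        i = bisect.bisect_left(mono_ts, t)
        best = None  # (skew, index) of the closest acceptable mono frame
        # Only the neighbours on either side of t can be the closest.
        for j in (i - 1, i):
            if 0 <= j < len(mono_ts):
                skew = abs(mono_ts[j] - t)
                if skew <= max_skew and (best is None or skew < best[0]):
                    best = (skew, j)
        if best is not None:
            pairs.append((depth, mono_frames[best[1]][1]))
    return pairs
```

Dropping unmatched frames trades a little frame rate for never blending edges from one moment into depth from another.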

It's worth noting that the cameras on this board are spaced pretty close together, which is why the depth quickly reaches the edge of the perceivable disparity-depth range. Here's the board this is running on, which has the global-shutter (disparity depth) cameras spaced ~34mm apart.

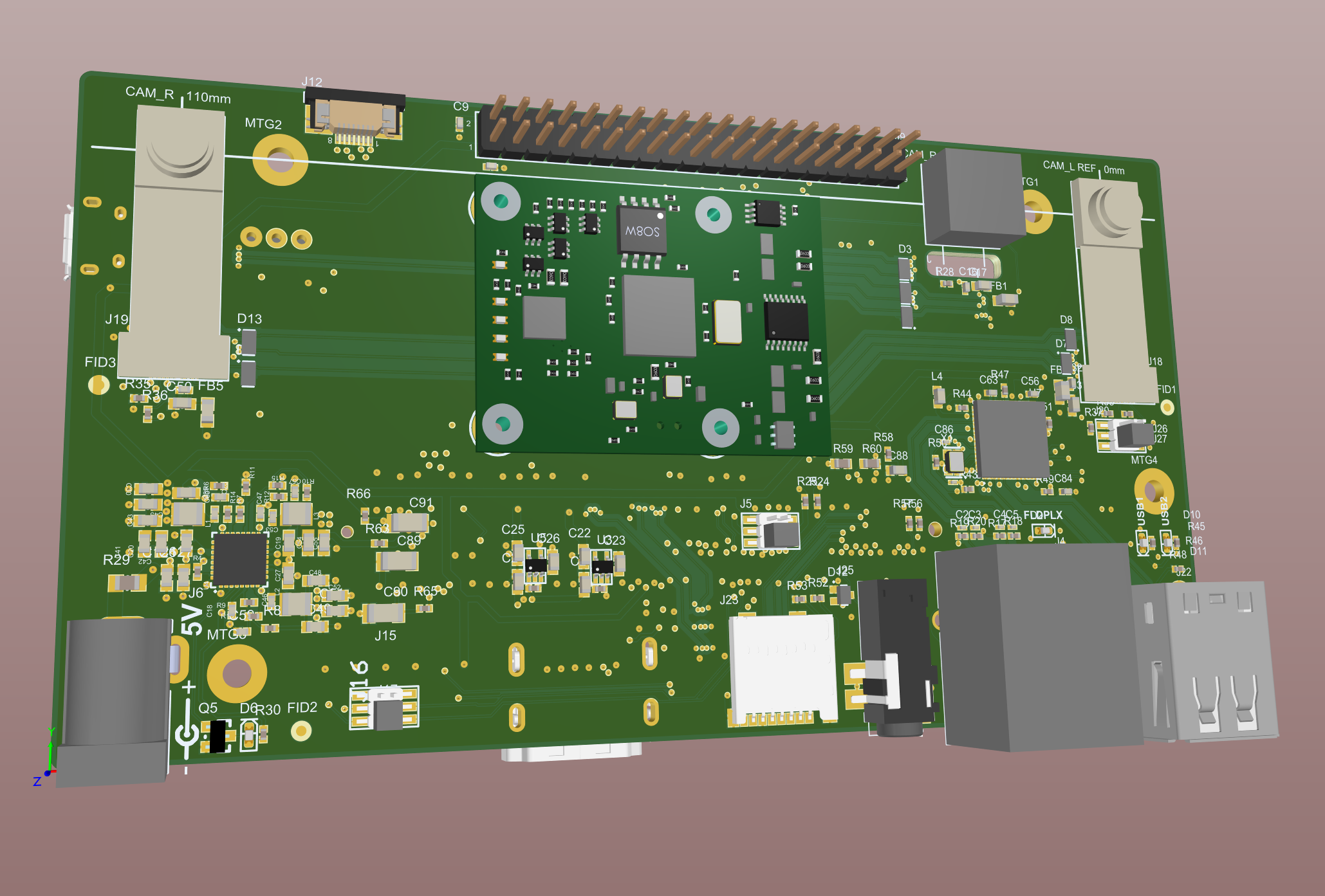
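The standard rectified-stereo relationship shows why a small baseline runs out of range quickly. With focal length $f$ (in pixels), baseline $B$, disparity $d$ (in pixels), and disparity error $\Delta d$, depth and its approximate error are:

```latex
Z = \frac{f\,B}{d}, \qquad
\left|\frac{\partial Z}{\partial d}\right| = \frac{f\,B}{d^{2}} = \frac{Z^{2}}{f\,B}
\quad\Longrightarrow\quad
\Delta Z \approx \frac{Z^{2}}{f\,B}\,\Delta d
```

Because the error grows with $Z^{2}$ and shrinks with $B$, a short ~34mm baseline loses usable depth precision at fairly short distances.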

On our DepthAI for Raspberry Pi, the cameras will be spaced 110mm apart instead, which will allow much further-out depth sensing (likely around 12-15 meters).
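To put rough numbers on the baseline change, here's a minimal Python sketch built on the standard rectified-stereo relations Z = f·B/d and ΔZ ≈ Z²·Δd/(f·B). The focal length and disparity error are inputs the reader must supply; no values here are measured for these boards.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m, focal_px, baseline_m, disparity_err_px=1.0):
    """Approximate depth uncertainty: dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)


def max_range_for_relative_error(rel_err, focal_px, baseline_m,
                                 disparity_err_px=1.0):
    """Farthest depth whose relative error dZ/Z stays within rel_err.

    From dZ/Z = Z * dd / (f * B), this gives Z_max = rel_err * f * B / dd.
    """
    return rel_err * focal_px * baseline_m / disparity_err_px
```

Holding focal length and disparity error fixed, the range at a given relative error scales linearly with the baseline, so going from 34mm to 110mm stretches it by about 110/34 ≈ 3.2x.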

More updates to come!

Cheers,

The Luxonis team!

Brandon