Before getting started: I know how futile this project is, and that it could be solved much more efficiently with 'classic' control methods such as PID controllers or LQR. I settled on a neural network anyway because I was really curious to see how one would cope with all the drawbacks that come with real hardware (play in the parts, lag in the movement, imperfect calibration...).

There is something that fascinates me about AIs that can interact with the real world. And even though it is just math, it always feels like magic to watch a model learn on its own before your eyes.

THE INITIAL PLAN.

I wanted this project to be quite straightforward, without big unknowns to deal with, so I decided to reuse skills I had already built up on other projects. I thought I would get it done in 2 days... lol

For the game and machine learning part, I decided to use the Unity game engine coupled with the ML-Agents toolkit, also developed by Unity. I was already familiar with this toolkit, so I had a half-decent idea of what I was getting into, even if it had evolved quite a bit since the last time I used it.

The game was chosen early on: it needed to require very few inputs so that the mechanical parts would be quick to design.

The ML-Agents toolkit comes with a few pre-built examples. One of them is a ball-balancing exercise that could easily be turned into a game requiring only a single joystick.

THE ROBOT.

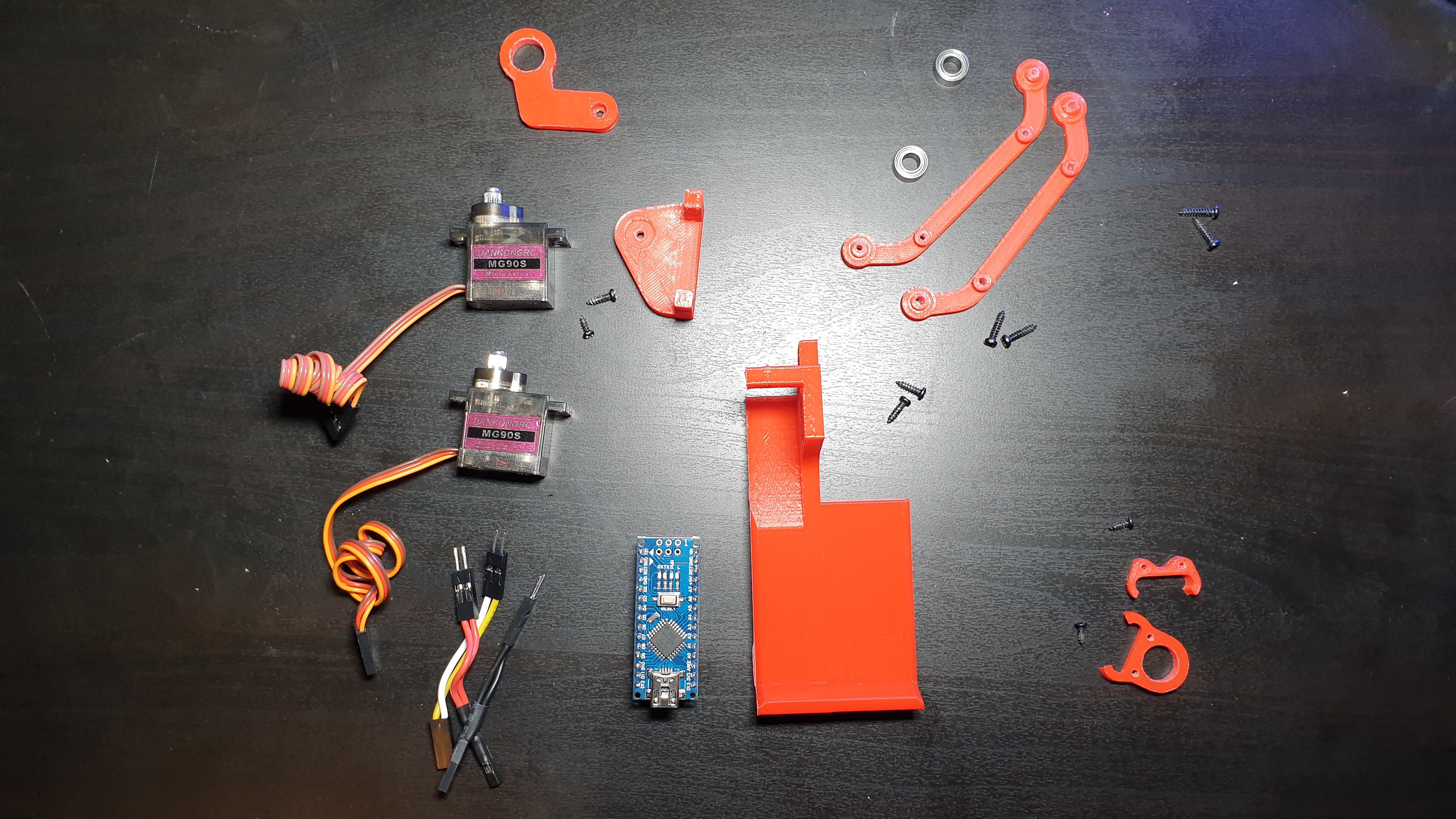

I then designed a simple arm to actuate the joystick. I had a few MG90S servos and an Arduino Nano collecting dust on a shelf, so I quickly designed a few parts around those components.

The only thing I was really careful about was keeping the pivot point of the joystick aligned with the rotation axis of the servos, as this would keep the programming simpler later on... call me lazy if you want! ;)

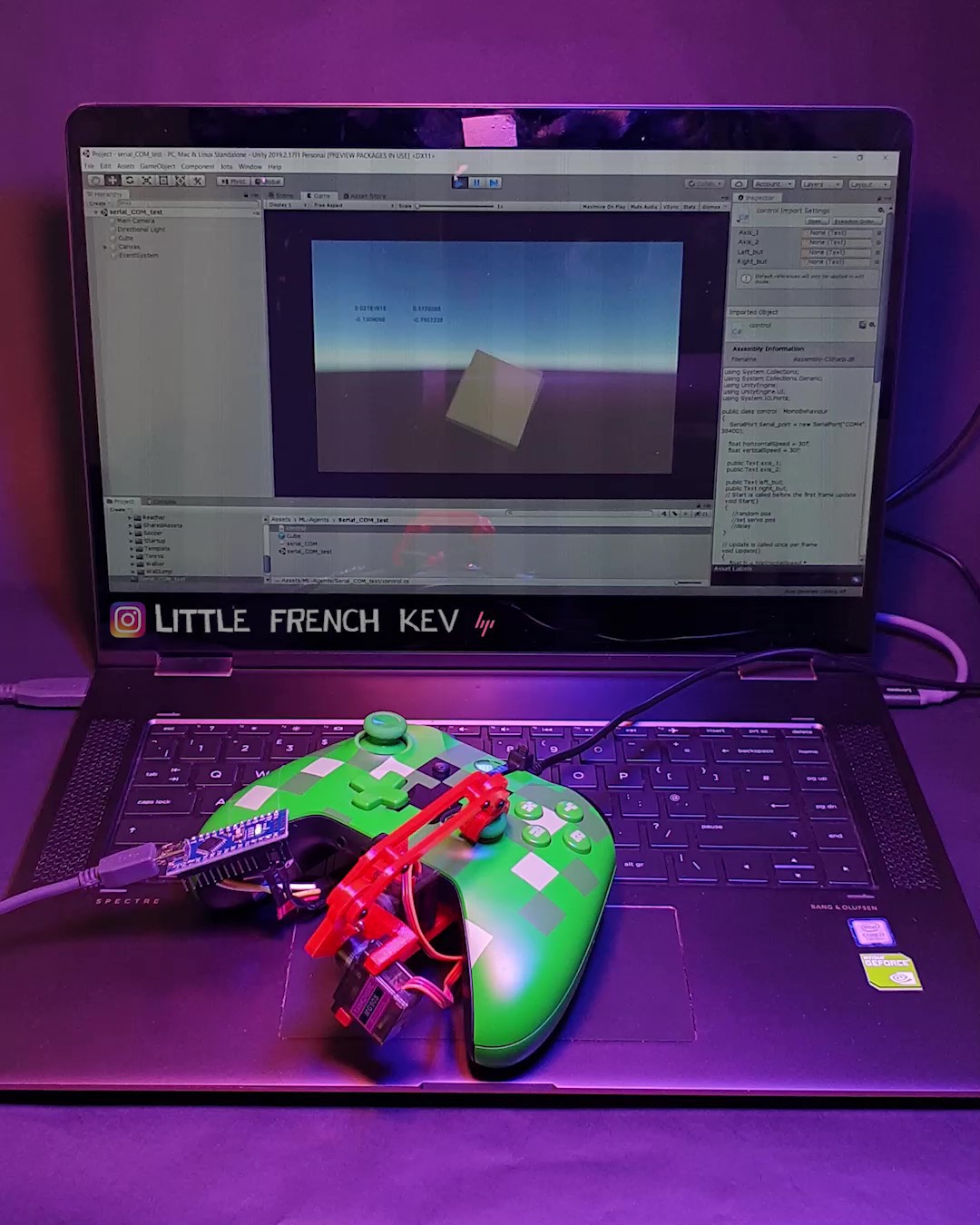

The next step was to write a simple Arduino program to control the joystick.

A simple Unity scene was then created to roughly calibrate the joystick movements.
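The write-up doesn't include the Arduino program or the calibration values, so here is only a minimal sketch of what the calibration boils down to, assuming each servo is calibrated by hand to three angles: full deflection one way, neutral, and full deflection the other way. Because the joystick pivot sits on the servo axis, stick tilt and servo rotation stay proportional, so a piecewise-linear map is enough. The class name, field names and example angles are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServoCalibration:
    """Hypothetical per-axis calibration: servo angles (degrees) found by hand."""
    min_angle: float     # servo angle that pushes the stick fully to one side
    center_angle: float  # servo angle where the stick rests at neutral
    max_angle: float     # servo angle that pushes the stick fully to the other side

    def to_servo_angle(self, command: float) -> float:
        """Map a stick command in [-1, 1] to a servo angle in degrees.

        With the stick pivot on the servo axis, tilt is proportional to servo
        rotation, so each side of neutral is a straight line.
        """
        command = max(-1.0, min(1.0, command))  # clamp out-of-range commands
        if command >= 0:
            # Positive side: interpolate between neutral and max deflection.
            return self.center_angle + command * (self.max_angle - self.center_angle)
        # Negative side: interpolate between neutral and min deflection.
        return self.center_angle + command * (self.center_angle - self.min_angle)
```

For example, `ServoCalibration(60, 92, 121).to_servo_angle(0.5)` gives 106.5 degrees. Keeping a separate slope on each side of neutral absorbs the small asymmetries a hand-assembled arm tends to have.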

It was then time to make sure the AI would be able to take control of the joystick. The Unity scene was updated so that the right joystick could be controlled with the left one. The left joystick simply stood in for the output of the neural network, which let me check that the communication between the computer and the Arduino was working properly.
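The post doesn't describe the message format between the computer and the Arduino. One simple possibility, shown here purely as an assumption, is a 3-byte frame: a start marker `0xFF` followed by the two servo angles as single bytes. Since servo angles never exceed 180, the marker can't be confused with data, which lets the receiver resynchronise if it starts listening mid-stream. The function names and frame layout are hypothetical; on the Arduino, the same decoding loop would run over bytes read with `Serial.read()`.

```python
HEADER = 0xFF  # hypothetical start-of-frame marker; clamped angles never reach 255


def encode_frame(angle_x: float, angle_y: float) -> bytes:
    """Pack two servo angles (degrees) into a 3-byte frame: header, x, y."""
    def to_byte(angle: float) -> int:
        # Clamp to the servo's 0-180 degree range, then round to a whole degree.
        return int(round(max(0.0, min(180.0, angle))))
    return bytes([HEADER, to_byte(angle_x), to_byte(angle_y)])


def decode_frames(stream: bytes) -> list[tuple[int, int]]:
    """Recover (x, y) angle pairs from a byte stream, resyncing on the header.

    This mirrors what the microcontroller would do with incoming bytes.
    """
    frames = []
    i = 0
    while i + 2 < len(stream):  # need the header plus two data bytes
        if stream[i] == HEADER:
            frames.append((stream[i + 1], stream[i + 2]))
            i += 3
        else:
            i += 1  # skip stray bytes until the next header
    return frames
```

On the computer side, a frame could be sent with pyserial, e.g. `serial.Serial(port, 115200).write(encode_frame(x, y))`, where the port name and baud rate are placeholders.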

THE GAME.

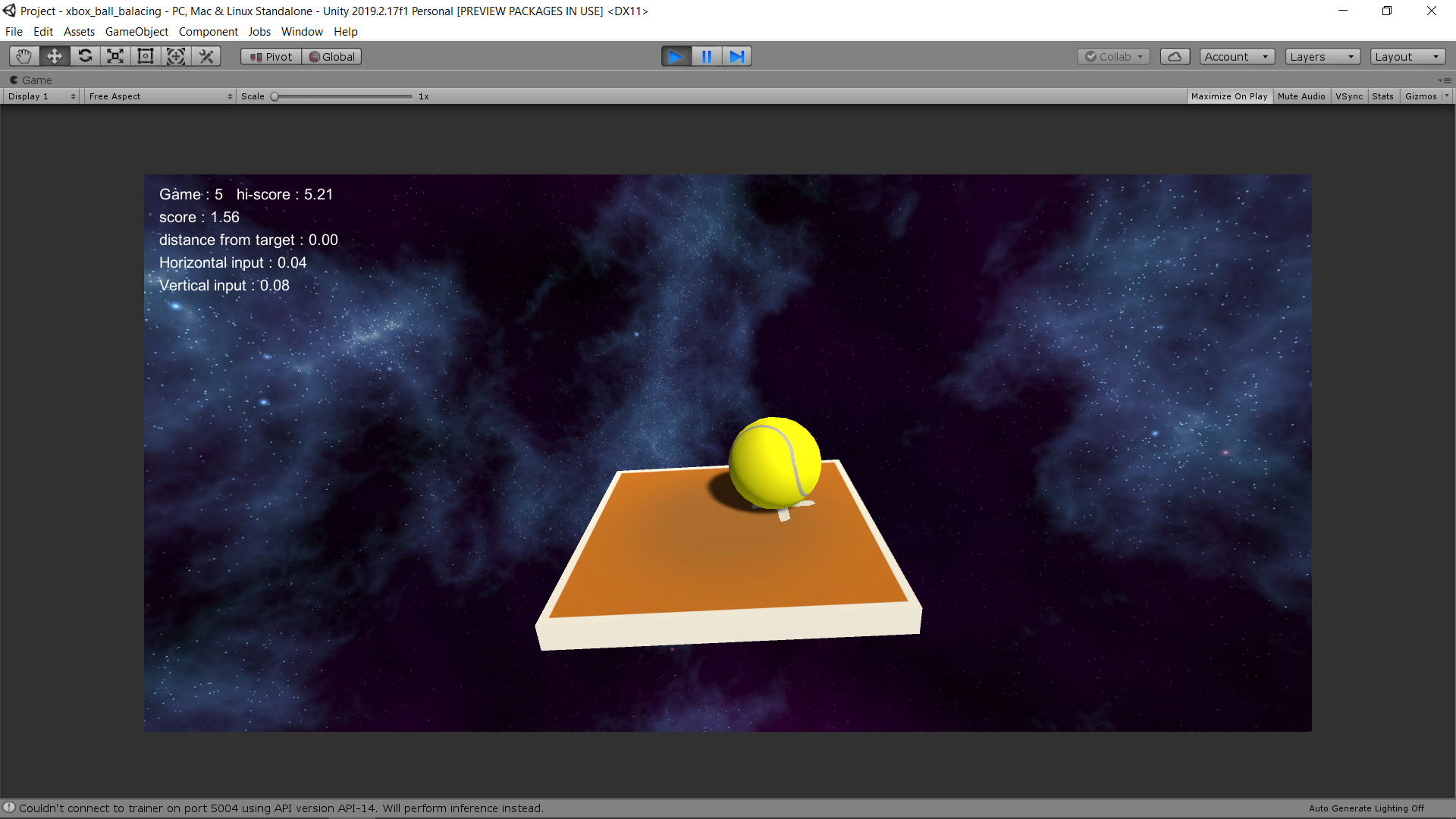

At this point it was time to create the game. As mentioned earlier, I recreated one of the examples included in the toolkit and added a couple of extra difficulties. The first was a target that the ball needs to stay as close to as possible while being balanced on the platform; the second was to throw the ball at a random speed in a random direction at the start of each game.
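A minimal sketch of the per-game randomisation, assuming a square platform centred at the origin and made-up speed and size ranges (none of these values come from the project). In ML-Agents this logic would typically live in the agent's `OnEpisodeBegin()` callback, written in C#; Python is used here only to illustrate the idea.

```python
import math
import random


def random_throw(rng: random.Random, min_speed: float = 0.5, max_speed: float = 2.0):
    """Return an initial (vx, vz) ball velocity with a random speed and direction.

    The speed range is a hypothetical placeholder.
    """
    speed = rng.uniform(min_speed, max_speed)
    heading = rng.uniform(0.0, 2.0 * math.pi)  # direction in the platform plane
    return speed * math.cos(heading), speed * math.sin(heading)


def random_target(rng: random.Random, platform_half_size: float = 2.0, margin: float = 0.5):
    """Pick a target point on the platform, kept `margin` away from the edges."""
    limit = platform_half_size - margin
    return rng.uniform(-limit, limit), rng.uniform(-limit, limit)
```

Passing a seeded `random.Random` makes a given sequence of games reproducible, which helps when comparing reward systems.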

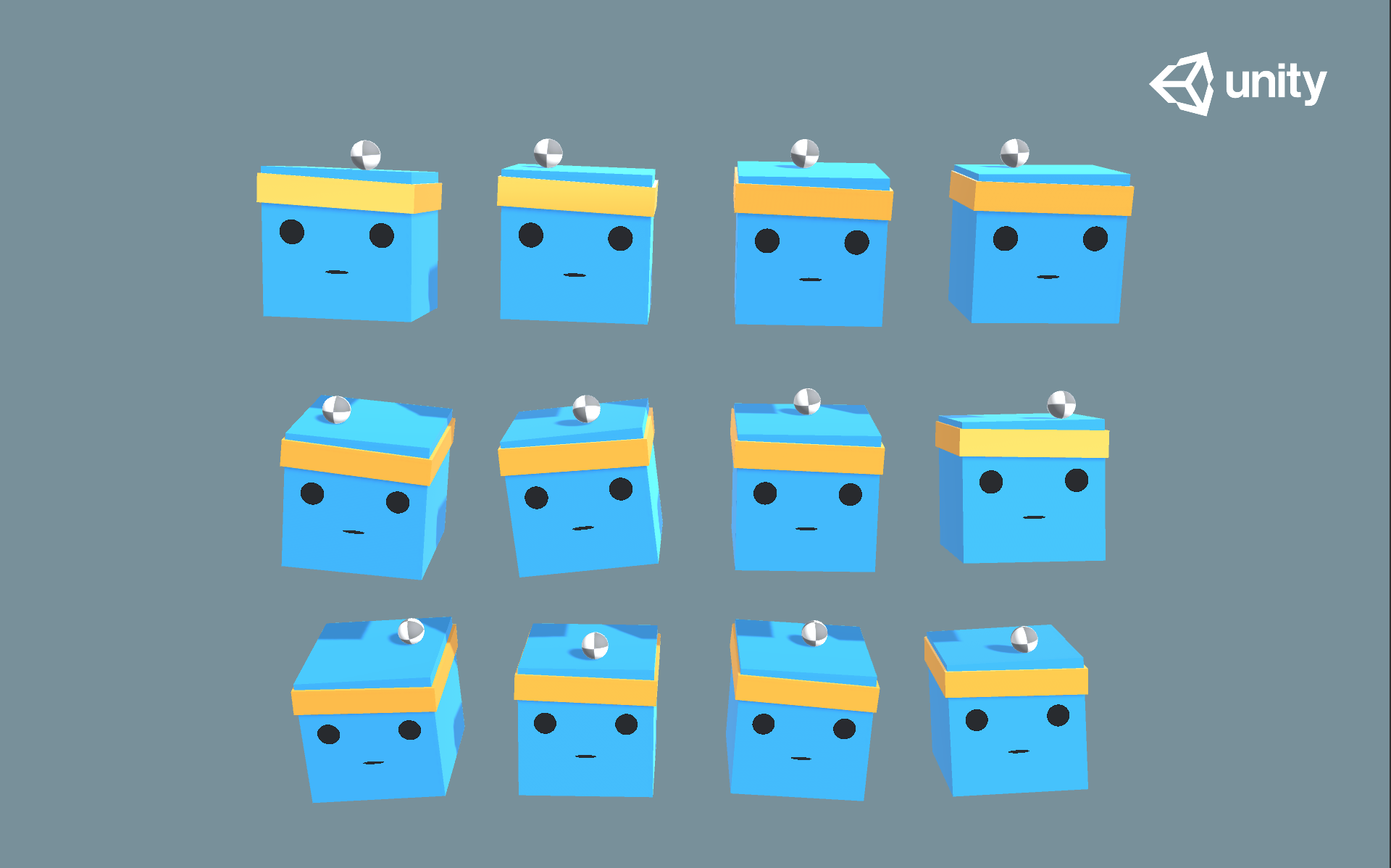

I also created a few textures for extra beauty (sort of...).

TRAINING.

It was time to start the training process.

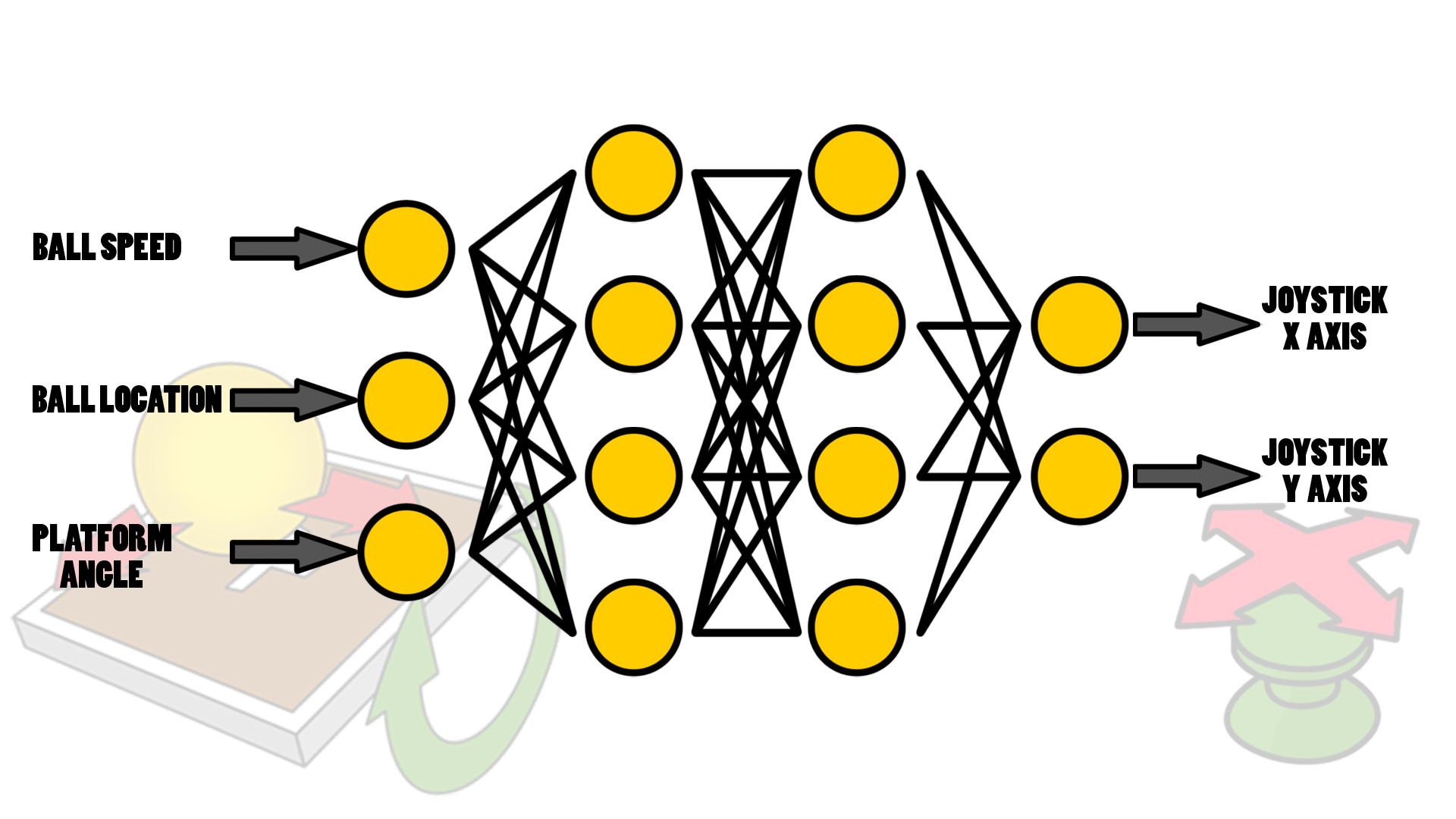

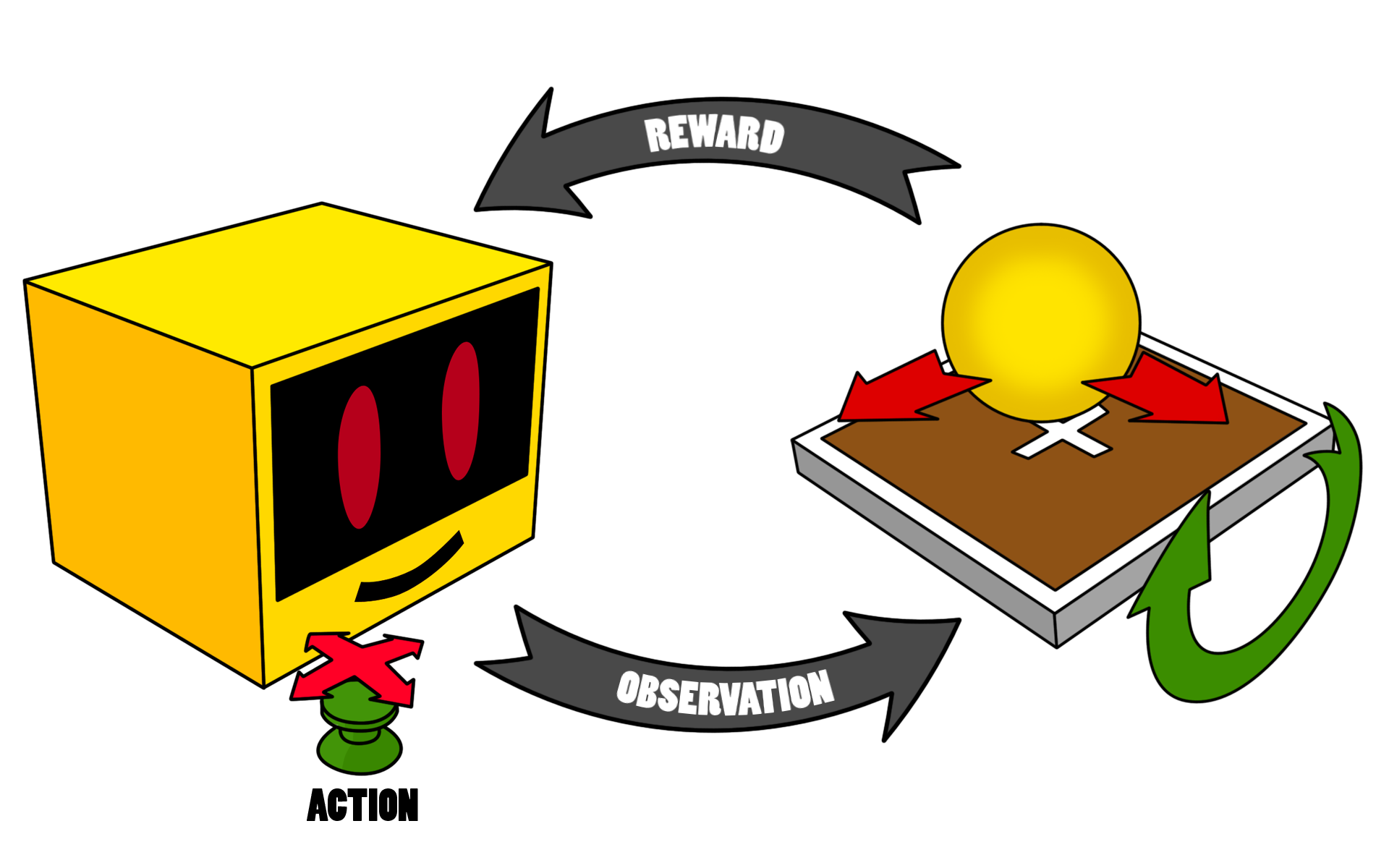

Here is an extremely simplified explanation of the steps that happen during training:

1 - The AI, let's call it an agent, looks at the state of the game (the observation). Here, it collects the information directly from the game: the speed and location of the ball, and the angle of the platform.

2 - Based on its observations, it takes an action (moves the joystick).

3 - Depending on the outcome of this action, it receives either a punishment (negative score) if the ball falls, or a reward (positive score) if the ball remains on the platform; the closer the ball is to the target, the bigger the reward.

The cycle then repeats until the AI learns to take the decisions that earn the maximum score.
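The observation and reward steps can be sketched as follows, assuming 2D positions on the platform plane. The `GameState` fields mirror the observations listed above (plus the target and a fall flag needed to compute the reward); the reward magnitudes (-1 for a fall, a small positive reward per step that grows towards the target) and the `max_distance` scale are illustrative assumptions, not the values used in the project.

```python
import math
from dataclasses import dataclass


@dataclass
class GameState:
    ball_pos: tuple[float, float]       # ball position on the platform plane
    ball_vel: tuple[float, float]       # ball velocity on the platform plane
    platform_tilt: tuple[float, float]  # platform angles around its two axes
    target: tuple[float, float]         # target position on the platform
    ball_fell: bool                     # True once the ball has left the platform


def observe(s: GameState) -> list[float]:
    """Step 1: flatten what the agent 'sees' into a vector of numbers."""
    return [*s.ball_pos, *s.ball_vel, *s.platform_tilt]


def reward(s: GameState, max_distance: float = 4.0) -> float:
    """Step 3: punish a fall; otherwise reward staying up, more when near the target."""
    if s.ball_fell:
        return -1.0
    distance = math.dist(s.ball_pos, s.target)
    closeness = max(0.0, 1.0 - distance / max_distance)  # 1 at the target, 0 far away
    return 0.05 + 0.05 * closeness  # always positive while the ball is on the platform
```

Keeping a small positive reward even far from the target means the agent is never better off dropping the ball than keeping it on the platform.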

At this point I thought I would just have to start the training, walk away for a few hours, and come back to a fully functional robot.

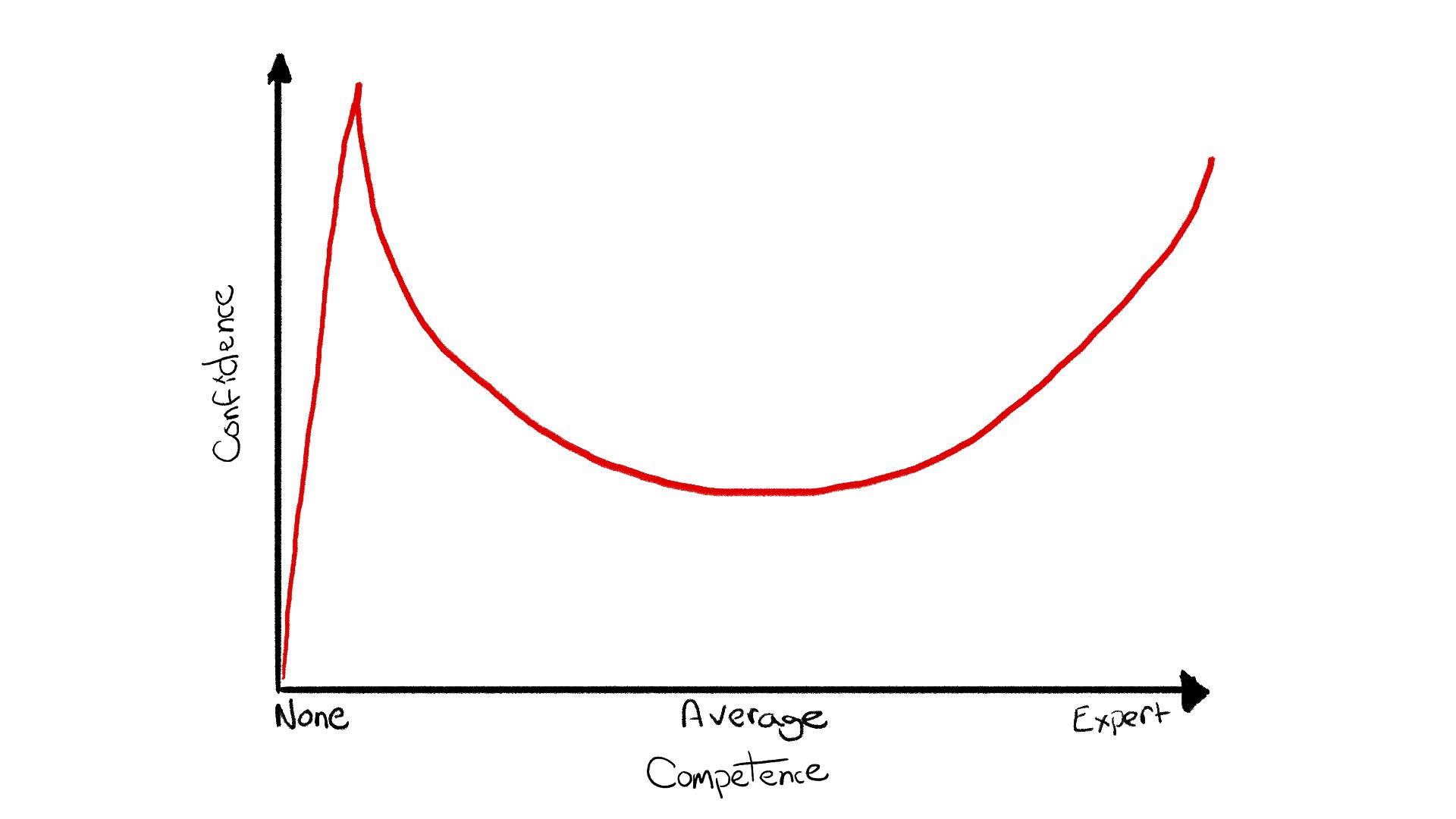

Well, that wasn't the case... In the first training session, the AI learned how to play the game, but it was extremely twitchy.

I then decided to retrain the model and introduce an extra reward for being smooth. It worked a bit better but was far from great, and I was pretty sure I could get more out of it.
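One common way to reward smoothness (an assumption here, since the post doesn't say how it was done) is to penalise the change between consecutive joystick commands. The sketch below adds that penalty to the game reward at every step; the weight of 0.05 and all names are hypothetical. In ML-Agents, the combined value would be handed to the agent through `AddReward()`.

```python
def smoothness_penalty(prev_action, action, weight: float = 0.05) -> float:
    """Negative shaping term that grows with how much the stick command jumped."""
    jump = sum((a - b) ** 2 for a, b in zip(action, prev_action))  # squared change per axis
    return -weight * jump


class SmoothReward:
    """Remembers the previous action so each step can be penalised for jerkiness."""

    def __init__(self, weight: float = 0.05):
        self.weight = weight
        self.prev_action = None

    def __call__(self, game_reward: float, action) -> float:
        penalty = 0.0
        if self.prev_action is not None:  # no penalty on the very first step
            penalty = smoothness_penalty(self.prev_action, action, self.weight)
        self.prev_action = tuple(action)
        return game_reward + penalty
```

The weight sets the trade-off: too small and the agent stays twitchy, too large and it may prefer sluggish moves over catching the ball.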

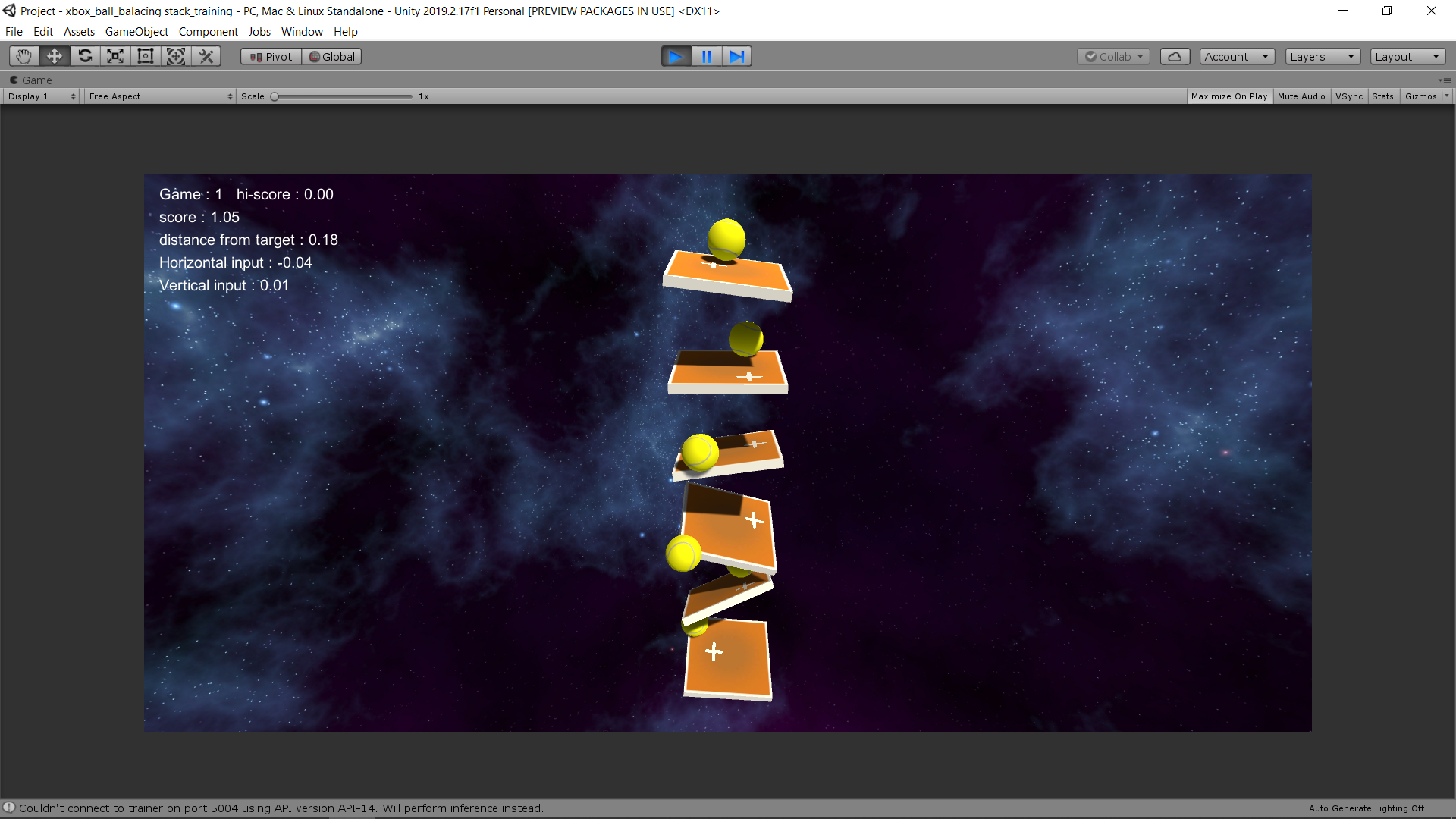

One of the big problems with including hardware in training is that you are limited to training only one agent at a time, at real-time speed.

This is why I opted for a new strategy: splitting the training in two. The first half would happen without hardware, which meant I could train multiple AIs at once and speed up time twentyfold. The hardware would then be introduced in the second half, so the AI could adapt to it. This would save hours.
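A quick back-of-the-envelope calculation shows why this split pays off. The sketch assumes a hypothetical 12 simulated agents (the post doesn't give the number) and that the computer can actually sustain the 20x time scale. For reference, the `mlagents-learn` trainer has `--time-scale` and `--num-envs` options for this kind of speed-up, and `--initialize-from` to continue training from an earlier run.

```python
def experience_hours(wall_clock_hours: float, num_agents: int = 1, time_scale: float = 1.0) -> float:
    """Hours of gameplay experience collected in a given amount of wall-clock time.

    Assumes the simulation really keeps up with the requested time scale.
    """
    return wall_clock_hours * num_agents * time_scale


def wall_clock_needed(target_experience_hours: float, num_agents: int = 1, time_scale: float = 1.0) -> float:
    """Inverse: wall-clock hours needed to collect a given amount of experience."""
    return target_experience_hours / (num_agents * time_scale)


hardware = experience_hours(10)                            # one agent, real time
simulated = experience_hours(10, num_agents=12, time_scale=20)
print(hardware, simulated)                                 # 10.0 2400.0
```

Under these assumptions, 10 hours of real-time hardware training correspond to about 2.5 minutes of wall-clock time in simulation (`wall_clock_needed(10, 12, 20) * 60`).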

The training with multiple fully digital agents went very well and allowed me to test different reward/punishment systems as well as different neural network parameters (number of layers, number of neurons...).

Unfortunately, when I reintroduced the hardware for the second half of the training, I expected the AI to handle the controls fairly decently... it did not. I am not too sure whether it was due to an error in my code or because the 'virtual' joystick didn't simulate the real one well enough, but it was clear that it would need a lot more training to reach a level I would be happy with.

Now that I knew I had good network parameters and a decent reward/punishment system in place, I decided to bite the bullet one last time and retrain from scratch. After another 10 hours of training I was quite happy with the result. It is still a bit twitchier than the fully digitally trained model, but I think that may be due to the network compensating for the hardware imperfections.

CONCLUSION.

I don't think I made any groundbreaking discoveries working on this project, but it was certainly a good reminder that AI isn't the solution to every problem, and it is certainly not some magical tool that can do everything on its own.

I also found it very interesting how easy it is to introduce human bias into its behaviour. Maybe being smooth isn't the best technique? Yet I still decided to reward it and forced the AI to develop this behaviour.

And the last takeaway from this project is that the amount of training required shouldn't be underestimated, as it ended up being the most time-consuming part of this adventure.

I still had a lot of fun with this short project, and I am sure I will try something like this again in the future.

little french kev