Introduction

I wanted a project that involved IoT concepts such as wireless communication and server-side scripting. I decided to build a quadcopter with a custom onboard flight controller that takes maneuvering commands from a touchscreen tablet over WiFi. The ultimate goal of this project is for the quadcopter to track a moving object in its camera image and follow it.

The project is still in progress. Currently, I'm fine-tuning the PID gains in the flight controller to achieve a more stable flight, and I'm also implementing motion tracking.
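For readers unfamiliar with PID tuning, here is a rough single-axis sketch in Python. It is a generic textbook form, not the code running on the ESP32; the class name, gains, and output limit are placeholders chosen for illustration.

```python
class PID:
    """Textbook PID controller for one axis (for example roll)."""

    def __init__(self, kp, ki, kd, output_limit):
        # kp, ki, kd are the gains being tuned; values here are hypothetical.
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured, dt):
        """Return a correction, clamped to +/- output_limit."""
        error = setpoint - measured
        self.integral += error * dt
        # No derivative term on the first call, since there is no history yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        output = (self.kp * error
                  + self.ki * self.integral
                  + self.kd * derivative)
        return max(-self.output_limit, min(self.output_limit, output))


roll_pid = PID(kp=2.0, ki=0.5, kd=0.1, output_limit=100.0)
correction = roll_pid.update(setpoint=0.0, measured=-10.0, dt=0.01)
```

Raising `kp` makes the response faster but more prone to oscillation, `ki` removes steady drift, and `kd` damps overshoot, which is why the three gains usually have to be tuned together.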

Hardware

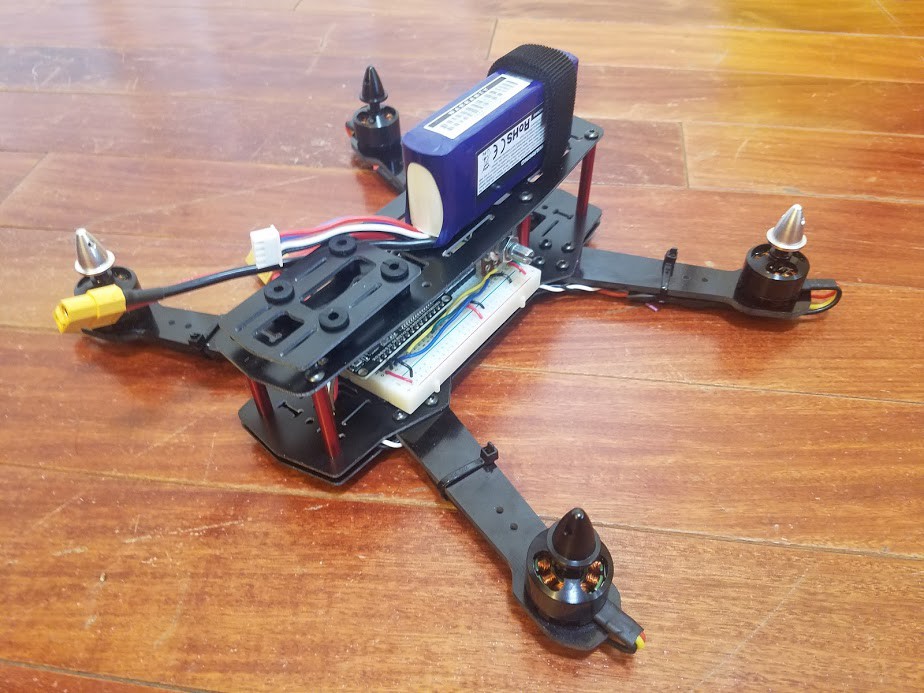

For the mechanical components of the quadcopter, I purchased a bare-bones AeroSky ZMR250 kit that costs about $70. The kit is modular and includes most of the essential parts needed to fly: a carbon fiber frame, motors, ESCs, and a power distribution board. I chose it because of its large mounting surface, which will let me easily add my own sensors and peripherals in the future.

I ended up replacing the propellers that came with the kit with more durable tri-blade propellers from Dalprop.

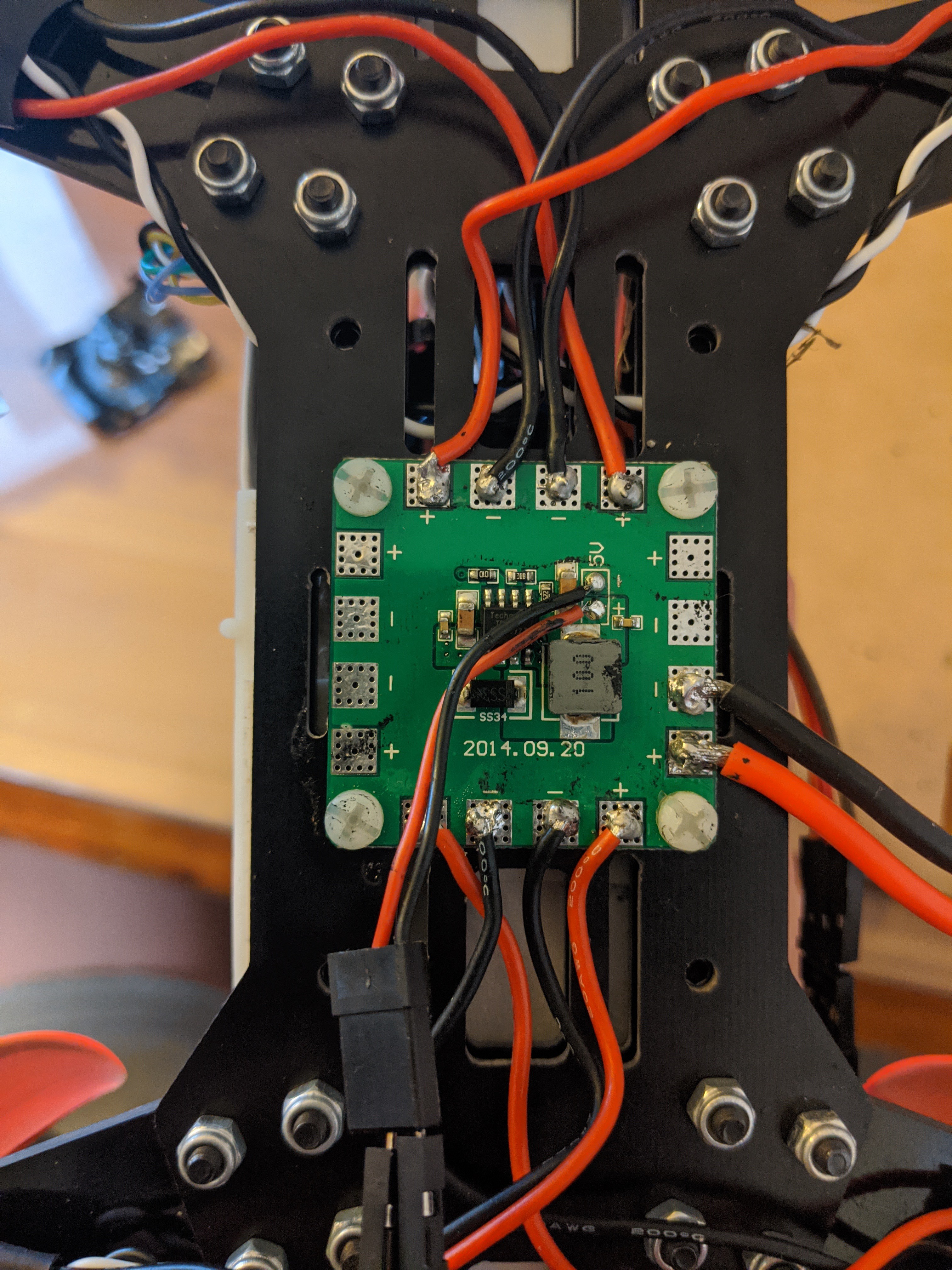

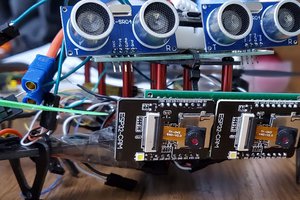

The flight controller of choice is an ESP-32S Development Board paired with an MPU6050 IMU breakout board. The two communicate over the standard I2C protocol. The signal wires of the ESCs are connected to the breadboard using extra-long male header pins. The breadboard is mounted onto the frame with double-sided mounting tape.
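To make the I2C exchange more concrete, the sketch below decodes one burst read from the MPU6050. It assumes the sensor is left at its default full-scale ranges (±2 g and ±250 °/s) and that 14 bytes are read starting at register 0x3B; the function name is hypothetical, and the bus read itself is left out because it depends on the firmware.

```python
import struct

ACCEL_LSB_PER_G = 16384.0   # sensitivity at the default +/-2 g range
GYRO_LSB_PER_DPS = 131.0    # sensitivity at the default +/-250 deg/s range


def decode_mpu6050(raw: bytes) -> dict:
    """Decode the 14 bytes read from registers 0x3B through 0x48."""
    if len(raw) != 14:
        raise ValueError("expected 14 bytes")
    # Seven big-endian signed 16-bit values:
    # accel x/y/z, temperature, gyro x/y/z.
    ax, ay, az, temp, gx, gy, gz = struct.unpack(">7h", raw)
    return {
        "accel_g": (ax / ACCEL_LSB_PER_G,
                    ay / ACCEL_LSB_PER_G,
                    az / ACCEL_LSB_PER_G),
        "temp_c": temp / 340.0 + 36.53,
        "gyro_dps": (gx / GYRO_LSB_PER_DPS,
                     gy / GYRO_LSB_PER_DPS,
                     gz / GYRO_LSB_PER_DPS),
    }


# Fabricated sample: level and at rest except for a slow rotation.
sample = struct.pack(">7h", 0, 0, 16384, 0, 131, -262, 0)
reading = decode_mpu6050(sample)
```

If the full-scale ranges are changed in the sensor's configuration registers, the two sensitivity constants have to change with them.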

A 3-cell 1500 mAh 35C LiPo battery is connected via XT60 connectors to the power distribution board, which supplies a nominal 11.1 V to the four motors and the ESCs. The ESP32 and IMU boards are powered by the regulated 5 V line from the power distribution board.
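The battery figures follow from simple arithmetic, sketched below. The per-cell voltages are the usual LiPo values (3.7 V nominal, 4.2 V fully charged), and the current limit is only as trustworthy as the manufacturer's C rating.

```python
CELLS = 3
NOMINAL_CELL_V = 3.7   # typical nominal LiPo cell voltage
FULL_CELL_V = 4.2      # typical fully charged LiPo cell voltage
CAPACITY_AH = 1.5      # 1500 mAh
C_RATING = 35          # rated continuous discharge multiple

nominal_v = CELLS * NOMINAL_CELL_V        # about 11.1 V
full_v = CELLS * FULL_CELL_V              # about 12.6 V
max_current_a = CAPACITY_AH * C_RATING    # 52.5 A rated continuous draw
energy_wh = nominal_v * CAPACITY_AH       # about 16.65 Wh

print(f"{nominal_v:.1f} V nominal, {full_v:.1f} V full, "
      f"{max_current_a:.1f} A max, {energy_wh:.2f} Wh")
```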

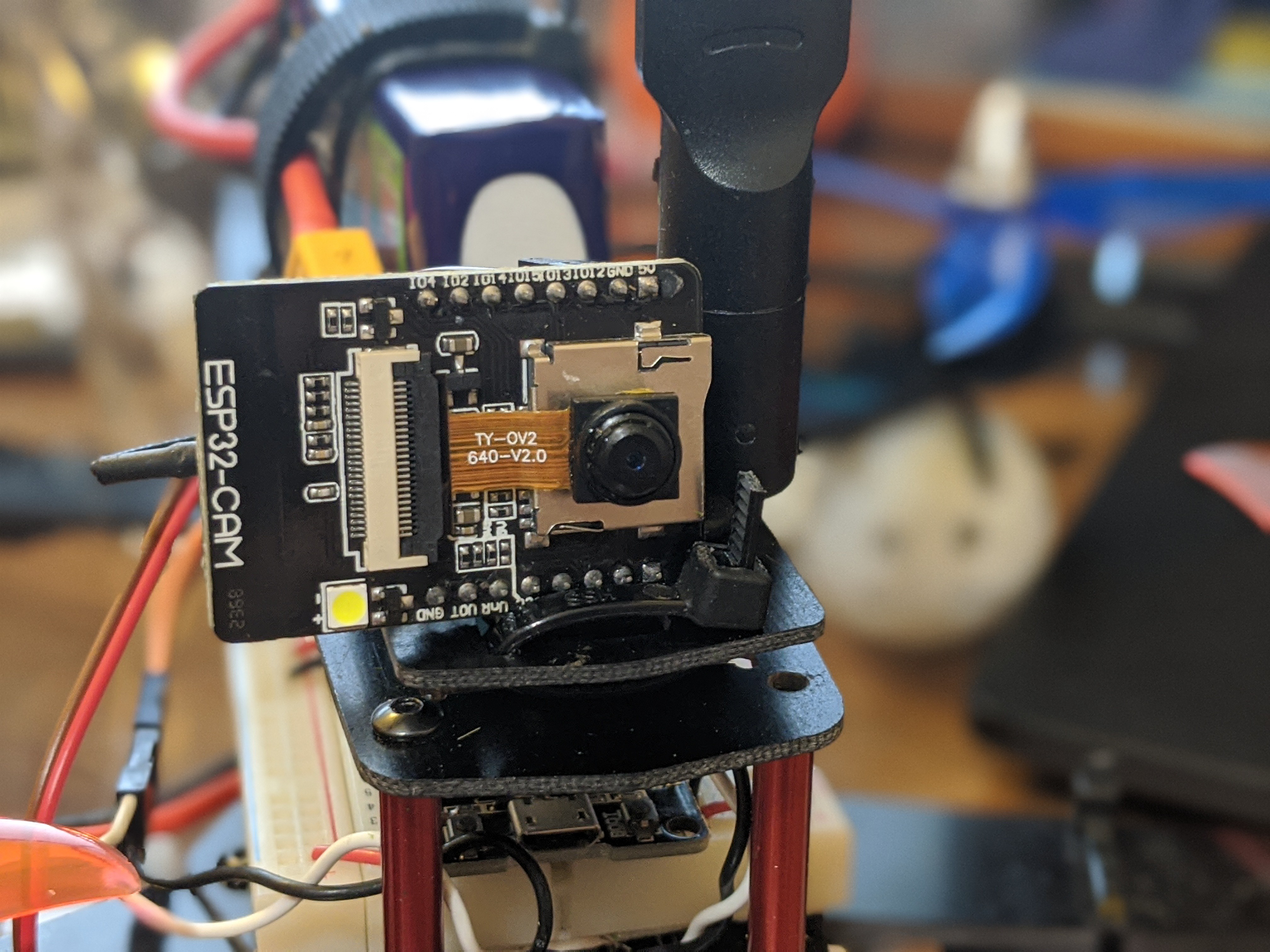

For the motion tracking camera, I used the popular AI-Thinker ESP32-CAM module, which has a built-in ESP32-S chip with WiFi capabilities, paired with an inexpensive OV2640 camera. Using just the onboard PCB antenna, the frame rate frequently dropped below 1 fps when streaming QVGA images to a websocket. I solved this by connecting an 8 dBi external antenna, which required repositioning a surface-mount resistor on the board.

Software

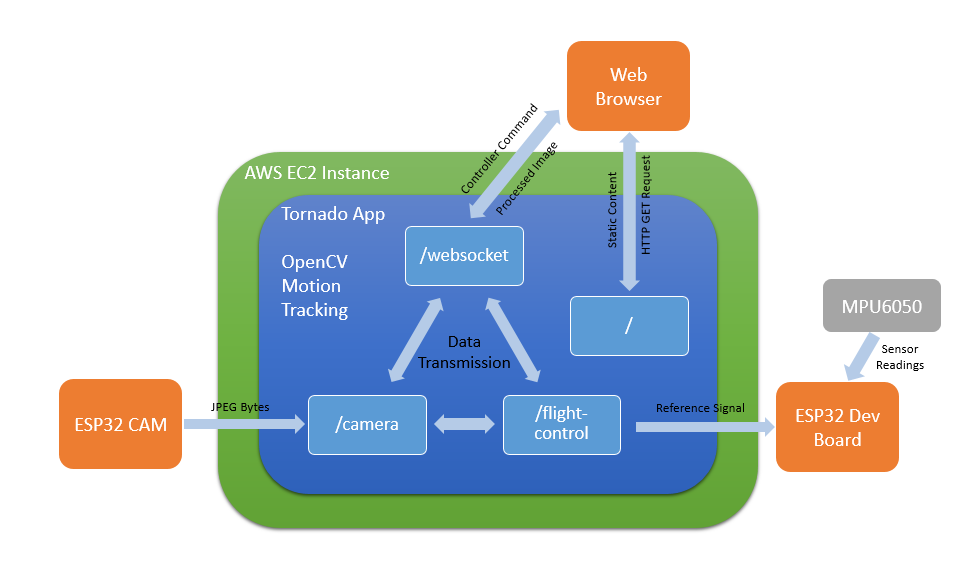

The diagram in this section shows the overall architecture of the system. Everything is supported by a Tornado server hosted on an AWS EC2 t2.micro instance. The server exposes three websocket endpoints that communicate with three different clients: an ESP32-CAM, an ESP32 Development Board used as the quadcopter's flight controller, and a web browser that displays the user interface. To ensure that the correct type of message is sent and received, only one client can connect to a given websocket channel at a time.
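The one-client-per-channel rule can be sketched independently of the web framework. The class and channel names below are hypothetical and only illustrate the idea; they are not taken from the actual server code.

```python
class ChannelRegistry:
    """Tracks which client currently owns each websocket channel."""

    def __init__(self, channels):
        self._owners = {name: None for name in channels}

    def claim(self, channel, client):
        """Return True if `client` now owns `channel`, False if it is taken."""
        if channel not in self._owners:
            raise KeyError(f"unknown channel: {channel}")
        if self._owners[channel] is not None:
            return False
        self._owners[channel] = client
        return True

    def release(self, channel, client):
        """Free the channel, but only if `client` is the current owner."""
        if self._owners.get(channel) is client:
            self._owners[channel] = None


# Hypothetical channel names for the three endpoints.
registry = ChannelRegistry(["camera", "flight", "browser"])
first, second = object(), object()
print(registry.claim("flight", first))    # True: the channel was free
print(registry.claim("flight", second))   # False: already owned
registry.release("flight", first)
print(registry.claim("flight", second))   # True: free again
```

In a Tornado `WebSocketHandler`, one plausible arrangement is to call `claim` from `open()` and `release` from `on_close()`, closing the connection with `self.close()` when the claim fails.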

I created a static web "app" with a simple joystick interface using HTML, JavaScript, and CSS. It is optimized for touchscreen mobile devices and is used both to control the quadcopter and to view the images streamed from the camera. It also has buttons to arm or disarm the quadcopter and to put it into “follow” mode. When the joysticks are moved, a JavaScript routine sends their X and Y positions to the server, which relays them to the onboard ESP32 flight controller for thrust calculation. So far I've successfully tested the web app on a Google Pixel 3a and an Amazon Fire HD 8 tablet.
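As an illustration of the relay step, the sketch below shows one way the joystick positions could be packed into a message and validated before being forwarded. The JSON layout, the assumption of two joysticks, and the normalization of each axis to [-1, 1] are all hypothetical; the actual message format is not described here.

```python
import json


def clamp(value, low=-1.0, high=1.0):
    """Limit a joystick axis to the expected range."""
    return max(low, min(high, value))


def encode_joysticks(left_x, left_y, right_x, right_y):
    """Serialize two joystick positions into a JSON message."""
    return json.dumps({
        "type": "joystick",
        "left": {"x": clamp(left_x), "y": clamp(left_y)},
        "right": {"x": clamp(right_x), "y": clamp(right_y)},
    })


def decode_joysticks(message):
    """Parse a message and return (left_x, left_y, right_x, right_y)."""
    data = json.loads(message)
    if data.get("type") != "joystick":
        raise ValueError("not a joystick message")
    # Clamp again on receipt so a malformed client cannot exceed the range.
    return tuple(clamp(float(data[stick][axis]))
                 for stick in ("left", "right")
                 for axis in ("x", "y"))


message = encode_joysticks(0.5, -2.0, 0.0, 1.0)
print(decode_joysticks(message))
```

Clamping on both ends is a defensive choice: the flight controller should never act on a value outside the range it was designed for.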

Using the images received from the camera, a Python script running on the server tracks a bright orange ball around the video frame. It filters out every pixel that falls outside an orange range in the HSV color space, then applies a series of erosions and dilations to remove noise. Knowing the actual diameter of the ball and its apparent width in pixels, the script uses triangle similarity to approximate the distance between the ball and the camera.
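The pipeline described above can be sketched with the standard library alone. This is a simplified stand-in rather than the actual tracking script: the HSV thresholds, function names, and calibration numbers are assumptions, and a real implementation would more likely use an image library (in OpenCV, the equivalent steps are `cv2.inRange`, `cv2.erode`, and `cv2.dilate`).

```python
import colorsys


def orange_mask(pixels, h_range=(0.03, 0.11), s_min=0.5, v_min=0.5):
    """pixels: rows of (r, g, b) in 0..255. Returns rows of 0/1.

    colorsys reports hue in 0..1; the thresholds are assumed values.
    """
    mask = []
    for row in pixels:
        out = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            inside = h_range[0] <= h <= h_range[1] and s >= s_min and v >= v_min
            out.append(int(inside))
        mask.append(out)
    return mask


def _morph(mask, keep):
    """Apply a 3x3 neighborhood test; pixels outside the image count as 0."""
    height, width = len(mask), len(mask[0])

    def at(y, x):
        return mask[y][x] if 0 <= y < height and 0 <= x < width else 0

    return [[int(keep(at(y + dy, x + dx)
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1)))
             for x in range(width)] for y in range(height)]


def erode(mask):
    return _morph(mask, all)   # keep a pixel only if all 9 neighbors are set


def dilate(mask):
    return _morph(mask, any)   # set a pixel if any of the 9 neighbors is set


def blob_width(mask):
    """Horizontal extent, in pixels, of everything left in the mask."""
    cols = [x for row in mask for x, v in enumerate(row) if v]
    return max(cols) - min(cols) + 1 if cols else 0


def focal_length_px(known_distance, real_width, pixel_width):
    """Calibrate from one photo of the ball at a known distance."""
    return pixel_width * known_distance / real_width


def distance_to_ball(real_width, focal_px, pixel_width):
    """Triangle similarity: distance = real width * focal length / pixel width."""
    return real_width * focal_px / pixel_width
```

For example, if a 4 cm ball appears 40 px wide at a measured 0.5 m, `focal_length_px(0.5, 0.04, 40)` gives roughly 500 px, and the same ball appearing 20 px wide is then estimated to be about 1 m away. Eroding before dilating removes isolated noise pixels while restoring the ball's outline to roughly its original size.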

I plan...

Joshua Cho

Nathaniel Wong

Theo

Ulrich