Why Did We Build This?

"Prevention is better than cure" sums up one of the most effective ways to limit the spread of COVID-19 and protect people. Meanwhile, many researchers and doctors are working on medication and vaccines for the coronavirus.

COVID-19 spreads mostly by droplet infection: when infected people cough, or when we touch someone who is ill and then touch our face (for example, rubbing our eyes or nose). The ongoing pandemic shows that the virus is highly contagious and spreads fast. Depending on how quickly the infection spreads, there are two cases: a fast pandemic and a slow pandemic.

A fast pandemic will be terrible and will cost many lives. It occurs when the infection rate is high because there are no countermeasures to slow it down. If the number of infected people gets too large, healthcare systems become unable to cope, and we run short of resources such as medical staff and equipment like ventilators.

To avoid this situation, we need to do what we can to turn this into a slow pandemic. A pandemic can be slowed down only by the right responses, mainly in the early phase. In a slow pandemic, everyone who is sick can get treatment and hospitals are not overwhelmed.

In this pandemic, we need to engineer our behavior as a vaccine, that is, "not getting infected" and "not infecting others". The best thing we can do is to wash our hands with soap or use a hand sanitizer. The next best thing is social distancing.

To avoid getting infected or spreading the virus, it is essential to wear a face mask when leaving home, especially in public places such as markets or hospitals.

Source - The Hindu

Idea 💡

The system is designed to detect faces and determine whether a person is wearing a face mask. Using this result, we can decide whether the person should be allowed into a public place such as a market or a hospital. The project can be used in hospitals, markets, bus terminals, restaurants, and other public gatherings where monitoring is needed.

This project consists of a camera that captures images of people entering a public place and detects from their facial features whether each person is wearing a face mask.

To test a real-time scenario, we deployed the system in one of our rooms to see how it could be used, and the results were positive.

Hardware Build

Step 1: Getting Started with NVIDIA Jetson Nano (Content from NVIDIA)

NVIDIA® Jetson Nano™ Developer Kit is a small, powerful computer that lets you run multiple neural networks in parallel for applications like image classification, object detection, segmentation, and speech processing. All in an easy-to-use platform that runs in as little as 5 watts.

Specification - Developer Kit I/Os

- USB: 4x USB 3.0, USB 2.0 Micro-B

- Camera Connector: 1x MIPI CSI-2 DPHY lanes

- Connectivity: Gigabit Ethernet, M.2 Key E

- Storage: microSD (not included)

- Display: HDMI 2.0 and eDP 1.4

- Others: GPIO, I2C, I2S, SPI, UART

You’ll Also Need:

- microSD Memory Card (32GB UHS-I minimum)

- 5V 4A Power Supply with 2.1mm DC barrel connector

- 2-pin Jumper

- USB cable (Micro-B to Type-A)

- USB camera / Pi camera

Step 2: Burn NVIDIA Image to the SD card

We need a microSD card of at least 32GB for the Jetson Nano. Download the NVIDIA DLI AI Jetson Nano SD Card Image v1.1.1 from NVIDIA.

- Format your microSD card using SD Memory Card Formatter from the SD Association and disconnect once done

- Download Etcher and install it.

- Connect an SD card reader with the SD card inside.

- Open Etcher and select from your hard drive the Jetson Nano Developer Kit SD Card Image .img or .zip file you wish to write to the SD card.

- Select the SD card you wish to write your image to.

- Review your selections and click 'Flash!' to begin writing data to the SD card

We can access the NVIDIA Jetson Nano by two methods.

- Directly connecting the Display, Mouse, and Keyboard

- Headless Mode

For headless mode, we need a USB cable to connect the PC and the Jetson Nano. Note that the USB cable must support data transfer, not just charging.

Step 3: Logging Into The JupyterLab Server

- Open the following address in a browser: 192.168.55.1:8888. The JupyterLab server running on the Jetson Nano will open with a login prompt the first time.

- Enter the password dlinano and click the Login button.

Now we have logged in to the JupyterLab Server.
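If the login page does not open in headless mode, it can help to check whether the JupyterLab port is reachable over the USB link at all. The snippet below is a minimal sketch using only the Python standard library on the PC side; the function name jupyter_reachable is a hypothetical helper, and the default host and port are simply the address from the step above.

```python
import socket


def jupyter_reachable(host="192.168.55.1", port=8888, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False
```

Calling jupyter_reachable() with no arguments probes the default address. A False result usually points to the cable (charge-only), the USB network interface on the PC, or a Jetson Nano that has not finished booting.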

Step 4: Block Diagram & Components 🛠️️

The Block Diagram of this circuit is given below.

To make these connections, I've used separate connectors for power, and relay output.

PI-CAMERA

The Pi-camera is connected to the CSI port of the Jetson Nano.

Pi Camera Module V2

Pi-cam to Jetson Nano

RELAY BOARD

We connect the Buzzer and an Emergency light using a relay circuit to the GPIO pins 18 & 23 of the Jetson Nano.

RELAY DRIVE CIRCUIT

The Power Supply is used to deliver the power for the Status Light, Emergency Light, and the Alarm.

Power Supply

The Industrial grade Light Indicators and Buzzer are used to show the status and alert signal to the security (user).

Industrial grade Light Indicators

Relay Board ---> Jetson Nano

Vcc ---> +5V

IN1 (Pass Light)---> 18

IN2 (Alert Light & Buzzer) ---> 23

GND ---> GND

The Industrial grade Light Indicators and Buzzer are connected to the GPIOs of the Jetson Nano.

Sensor Connection

Power Supply Connection

The Overall connection is shown below.

Overall Connection

Step 5: GPIO Integration 🔗

After reading a lot of forums, I tried to interface GPIO on the Jetson Nano. We need to install the Jetson GPIO Python library using the following command:

pip install Jetson.GPIO

If you face any issues importing this package, try installing it with the following command instead.

sudo -H pip install Jetson.GPIO

Installing Jetson.GPIO lib

Import the Jetson GPIO package using the following command.

    # Import the GPIO library
    import Jetson.GPIO as GPIO

To interface Jetson Nano GPIO pins, I've started with a basic LED blink program.

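    import time
    import Jetson.GPIO as GPIO

    output_pin = 18  # BCM pin number

    GPIO.setmode(GPIO.BCM)
    GPIO.setup(output_pin, GPIO.OUT, initial=GPIO.LOW)
    curr_value = GPIO.LOW
    try:
        while True:
            time.sleep(1)
            GPIO.output(output_pin, curr_value)  # write the current level
            curr_value ^= GPIO.HIGH  # toggle between LOW and HIGH
    finally:
        GPIO.cleanup()  # release the pins on exit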

LED Blink GIF

The GPIO pins of the Jetson Nano deliver only 3.3V, but toggling the relay needs a 5V input. To solve this, we use an NPN transistor to shift the output level to 5V. Luckily, the relay breakout board I use has two transistors. The relay module is active-low: it triggers whenever its input is driven low.

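    access_pin = 18  # IN1, pass light
    alert_pin = 23   # IN2, alert light and buzzer

    # The relay is active-low: LOW switches a channel on, HIGH switches it off.
    if prediction_widget.value == 'mask_on':
        GPIO.output(access_pin, GPIO.LOW)
        GPIO.output(alert_pin, GPIO.HIGH)
    if prediction_widget.value == 'mask_off':
        GPIO.output(access_pin, GPIO.HIGH)
        GPIO.output(alert_pin, GPIO.LOW)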
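Because the relay board is active-low, it is easy to mix up "on" and "low". The sketch below separates the two ideas so the logic can be checked on any machine before wiring anything. The names relay_level and indicator_levels are hypothetical helpers, not part of Jetson.GPIO. The pin numbers come from the wiring table, and the constants 0 and 1 stand in for GPIO.LOW and GPIO.HIGH.

```python
# Numeric levels mirror Jetson.GPIO's GPIO.LOW (0) and GPIO.HIGH (1), so this
# sketch runs without the library or the hardware.
LOW, HIGH = 0, 1

ACCESS_PIN = 18  # IN1, pass light (from the wiring table)
ALERT_PIN = 23   # IN2, alert light and buzzer


def relay_level(energize, active_low=True):
    """Return the GPIO level that switches a relay channel on or off."""
    if active_low:
        return LOW if energize else HIGH
    return HIGH if energize else LOW


def indicator_levels(prediction):
    """Map a predicted label to {pin: level} for the two relay channels."""
    return {
        ACCESS_PIN: relay_level(prediction == "mask_on"),
        ALERT_PIN: relay_level(prediction == "mask_off"),
    }
```

On the Jetson Nano, the result could be applied with a loop such as `for pin, level in indicator_levels(prediction_widget.value).items(): GPIO.output(pin, level)`. One design choice here is an assumption rather than something from the original project: any label other than 'mask_on' or 'mask_off' leaves both channels released.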

Indicator Flashes

Step 6: Camera Initialization 📷

Depending on the type of camera you're using (CSI or USB), the initialization is done as follows. First, the full reset of the camera is done by:

    !echo 'dlinano' | sudo -S systemctl restart nvargus-daemon && printf '\n'
    !ls -ltrh /dev/video*  # Check the device number

For the USB camera, the following lines are used. Note that NVIDIA recommends the Logitech C270 webcam, but the Jetson Nano also supports other USB cameras.

    from jetcam.usb_camera import USBCamera

    camera = USBCamera(width=224, height=224, capture_device=0)
    camera.running = True
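The capture_device value is expected to match the N in the /dev/videoN entry reported by the ls command. If a CSI camera is attached as well, the USB camera may not be index 0. As a small sketch, the hypothetical helper below (not part of jetcam) lists the available indices from Python using only the standard library.

```python
import glob
import os
import re


def video_device_indices(dev_dir="/dev"):
    """Return the sorted integer N of every <dev_dir>/videoN entry."""
    indices = []
    for path in glob.glob(os.path.join(dev_dir, "video*")):
        # Keep only names of the exact form "video<digits>".
        match = re.fullmatch(r"video(\d+)", os.path.basename(path))
        if match:
            indices.append(int(match.group(1)))
    return sorted(indices)
```

The returned list can be used to pick the capture_device argument instead of hard-coding 0.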

For the PI camera, the following lines are used. I've used the Raspberry Pi Camera Module V2 in this project.

    from jetcam.csi_camera import CSICamera

    camera = CSICamera(width=224, height=224)
    camera.running = True

Now the camera is initialized. Make sure that only one camera is active as the Jupyter notebook supports one camera at a time.

Step 7: Collecting the Dataset 🗄️

The first step in this project is data collection. The System has to identify the presence of masks and classify whether the person wears a mask or not.

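Although most of the libraries are pre-installed, any missing ones can be installed on the Jetson Nano with the pip install <package_name> command. The project uses the following modules:

torch torchvision dataset ipywidgets traitlets IPython threading time

Note that threading and time are part of the Python standard library and need no installation. In the NVIDIA DLI example, dataset appears to be a local helper module shipped alongside the notebook rather than a pip package.

Thanks to NVIDIA DLI, we only need to make some minor tweaks to the classification example and add the GPIO interface to the project.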

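    import torchvision.transforms as transforms
    from dataset import ImageClassificationDataset  # assumed: helper module from the NVIDIA DLI example

    TASK = 'mask'
    CATEGORIES = ['mask_on', 'mask_off']
    DATASETS = ['A', 'B']

    # Augment, resize to the ResNet input size, and normalize each image
    TRANSFORMS = transforms.Compose([
        transforms.ColorJitter(0.2, 0.2, 0.2, 0.2),
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ])

    datasets = {}
    for name in DATASETS:
        datasets[name] = ImageClassificationDataset(TASK + '_' + name, CATEGORIES, TRANSFORMS)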
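To make the last two transforms concrete: ToTensor scales each 8-bit channel value to the range 0 to 1, and Normalize then subtracts a per-channel mean and divides by a per-channel standard deviation. The sketch below reproduces that arithmetic for a single pixel in plain Python. The function name normalize_pixel is hypothetical and is for illustration only; the real pipeline applies the same operation to whole tensors.

```python
MEAN = (0.485, 0.456, 0.406)  # per-channel mean, as passed to Normalize
STD = (0.229, 0.224, 0.225)   # per-channel standard deviation, as passed to Normalize


def normalize_pixel(rgb):
    """Scale an 8-bit RGB pixel to [0, 1], then apply (x - mean) / std per channel."""
    return tuple((value / 255.0 - m) / s for value, m, s in zip(rgb, MEAN, STD))
```

For a pure white pixel, normalize_pixel((255, 255, 255)) gives roughly (2.25, 2.43, 2.64), so the network sees values centered around zero rather than raw 0 to 255 intensities.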

We have installed all the required dependencies for this project. The dataset is built by collecting images and arranging them under the appropriate label.

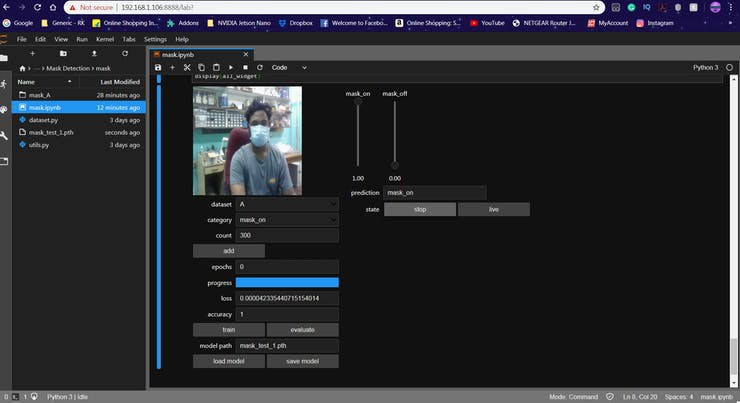

For this project, we classify each image as either 'mask_on' or 'mask_off'. Initially, we created 300 images for each of the two labels. More images were then collected with different people and different backgrounds.
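Since the two labels should stay roughly balanced as more images are added, it is handy to count what has been collected so far. The sketch below assumes the images are stored as one sub-folder per label under a dataset folder such as mask_A. That layout is an assumption about how the dataset helper saves files, and count_images is a hypothetical function name.

```python
from pathlib import Path


def count_images(root, categories, extensions=(".jpg", ".jpeg", ".png")):
    """Count image files in <root>/<category>/ for each category."""
    counts = {}
    for category in categories:
        folder = Path(root) / category
        if folder.is_dir():
            counts[category] = sum(
                1 for p in folder.iterdir()
                if p.is_file() and p.suffix.lower() in extensions
            )
        else:
            counts[category] = 0  # folder not created yet
    return counts
```

For example, count_images('mask_A', ['mask_on', 'mask_off']) returns a dictionary with one count per label, which makes an imbalance easy to spot before training.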

Collecting Dataset for without Mask

Collecting Dataset for with Mask

Images are captured for each label ('mask_on', 'mask_off').

Step 8: Designing a NN & Training the Model ⚒️⚙️

The core of this project is an image classifier that assigns each image to one of two categories, 'mask_on' or 'mask_off'. To build this classifier, we use the pre-trained CNN (Convolutional Neural Network) called ResNet18.

Here we use PyTorch and CUDA to build the ResNet model that identifies the presence of a mask. The images we generated in the previous step are used to train the model. The model is trained on this dataset for the specified number of epochs (cycles).

The model is configured with the hyperparameters as shown below.

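    import torch
    import torchvision

    device = torch.device('cuda')

    # ResNet18 pre-trained on ImageNet; dataset is the one selected for training
    model = torchvision.models.resnet18(pretrained=True)
    model.fc = torch.nn.Linear(512, len(dataset.categories))  # one output per category

    BATCH_SIZE = 8
    optimizer = torch.optim.Adam(model.parameters())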

While the model is training, the loss and accuracy are reported for each epoch, and the accuracy typically improves after a few epochs.
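The per-epoch loss and accuracy are running totals over all batches in that epoch. The sketch below shows one way such figures can be accumulated, in plain Python so it runs without PyTorch. The EpochStats class is a hypothetical illustration and not the code from the NVIDIA DLI notebook.

```python
class EpochStats:
    """Accumulate loss and accuracy over the batches of one epoch."""

    def __init__(self):
        self.loss_sum = 0.0
        self.correct = 0
        self.seen = 0

    def update(self, batch_mean_loss, predicted, targets):
        """Add one batch: its mean loss plus predicted and true class indices."""
        n = len(targets)
        self.loss_sum += batch_mean_loss * n  # weight the batch mean by batch size
        self.correct += sum(int(p == t) for p, t in zip(predicted, targets))
        self.seen += n

    @property
    def loss(self):
        """Mean loss per sample seen so far."""
        return self.loss_sum / self.seen if self.seen else 0.0

    @property
    def accuracy(self):
        """Fraction of samples classified correctly so far."""
        return self.correct / self.seen if self.seen else 0.0
```

Weighting each batch by its size keeps the epoch average correct even when the last batch holds fewer than BATCH_SIZE images.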

Model Training

Once the train button is pressed, the model is trained on the selected dataset for the given number of epochs. I trained the model for 10 epochs and saved it.

It took roughly 10 minutes to generate the model with the highest accuracy after 10 epochs, which is really quick compared to other hardware. Thanks to NVIDIA.

Step 9: Testing the Model ✅

Once the model is generated, it is saved to the output file "my_model.pth". This file is then loaded to test whether the system can identify the presence of masks and distinguish between people with and without them.

The camera captures the test image, which is converted to the required color model and resized to 224 x 224 pixels (the same size used for training). The images that were used for training the model can also be used to test it.

Once the model is loaded and an image is acquired by the camera, the loaded ResNet model predicts whether the captured image shows a mask.
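The network itself outputs one raw score per category. A common way to turn those scores into a label and per-category confidence values is a softmax followed by picking the largest entry. The sketch below shows that step in plain Python. The function names are hypothetical, and the category order is assumed to match the CATEGORIES list from Step 7.

```python
import math

CATEGORIES = ["mask_on", "mask_off"]  # assumed to match the training order


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, categories=CATEGORIES):
    """Return (label, {category: probability}) for one image's scores."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return categories[best], dict(zip(categories, probs))
```

The label can drive the text display and the GPIO logic, while the probabilities are the kind of values a per-category slider would show.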

Test for without Mask

Test for with Mask

The Prediction is displayed in the Text Label and the Slider is changed according to the prediction.

Step 10: Mask Detection Test

A boolean flag records whether a mask is present. The flag is set to '1' when the prediction is mask_on and to '0' when the prediction is mask_off.

A function draws the overlay on the captured image. The overlay text depends on the prediction value from the above step.
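As a rough sketch of that mapping, the function below returns the flag together with an overlay text and color. The wording of the text and the colors are placeholders chosen for illustration, and overlay_for is a hypothetical name; the original project may use different values.

```python
def overlay_for(prediction):
    """Return (mask_flag, text, bgr_color) for a predicted label."""
    mask_flag = 1 if prediction == "mask_on" else 0
    if mask_flag:
        return mask_flag, "Mask detected", (0, 255, 0)  # green in BGR order
    return mask_flag, "No mask detected", (0, 0, 255)   # red in BGR order
```

With OpenCV, the returned text and color could then be drawn on the frame with a call such as cv2.putText(image, text, (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.8, color, 2).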

Some of the example images are shown below.

Overlay - Without Mask

Overlay - Without Mask

Step 11: Working of the Project 🔭

To test a real-time scenario, we deployed the system in one of our rooms to see how it could be used, and the results were positive.

This system is affordable and can be deployed in public places such as hospitals and markets to reduce the unknowing spread of the virus.

The entire world is going through a lot of struggle and pain due to COVID-19. Let's stay strong at home and support our kind to fight against this pandemic.

-----------------------------------------------------------------------------------------------------------------

If you faced any issues in building this project, feel free to ask me. Please do suggest new projects that you want me to do next.

Give a thumbs up if it really helped you and do follow my channel for interesting projects. :)

Share this video if you like.

Blog - https://rahulthelonelyprogrammer.blogspot.com/

Github - https://github.com/Rahul24-06

Instagram - https://www.instagram.com/the_lonely_programmer/

Happy to have you subscribed: https://www.youtube.com/c/rahulkhanna24june?sub_confirmation=1

Thanks for reading!

Rahul Khanna
