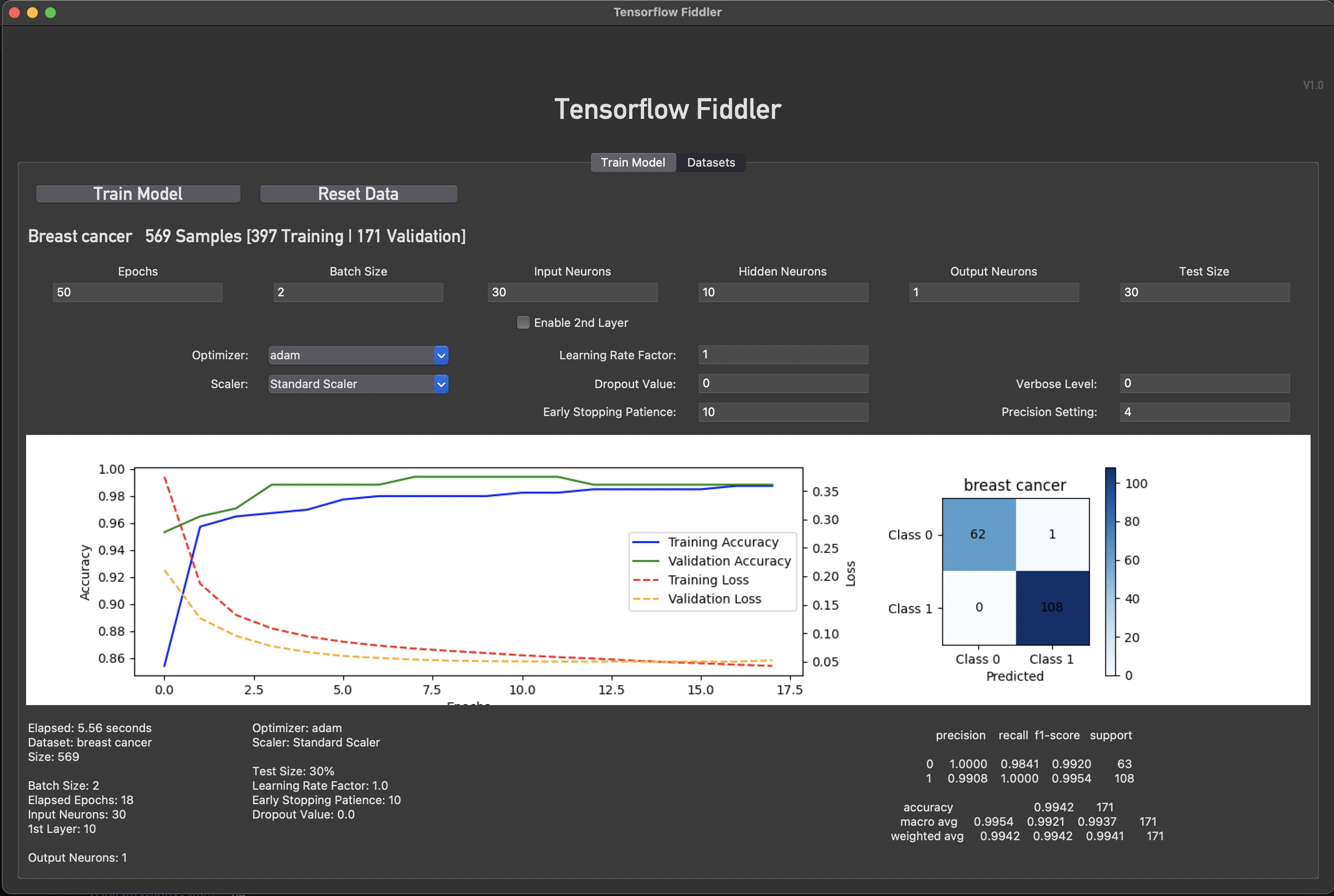

I programmed a first version of my own TensorFlow 'Fiddler'.

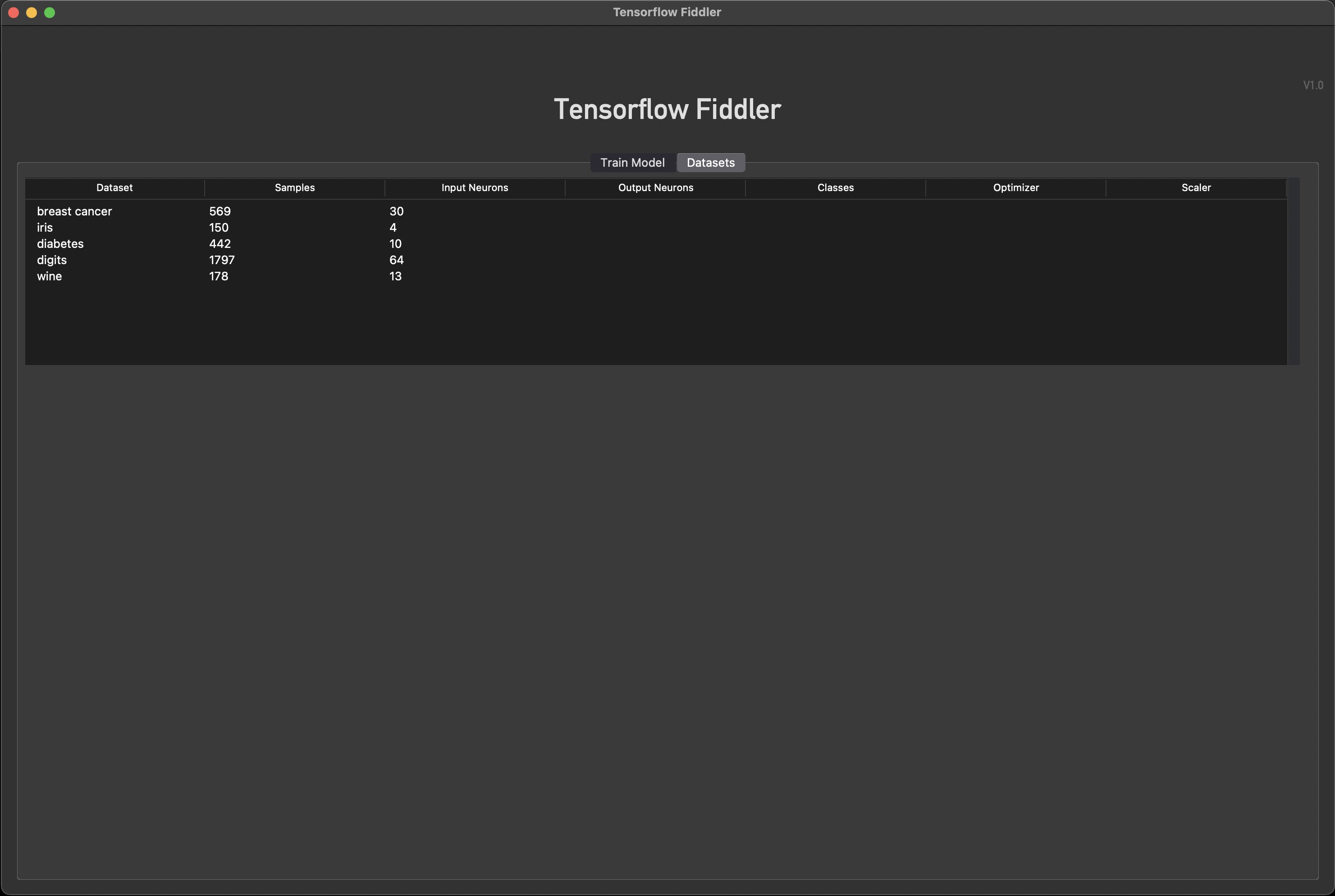

For now it uses provided datasets.

The goal was to get familiar with parameters, scalers, optimizers and training procedures.

This is the first app that I have ever programmed, in a language that I had never written in before (Python).

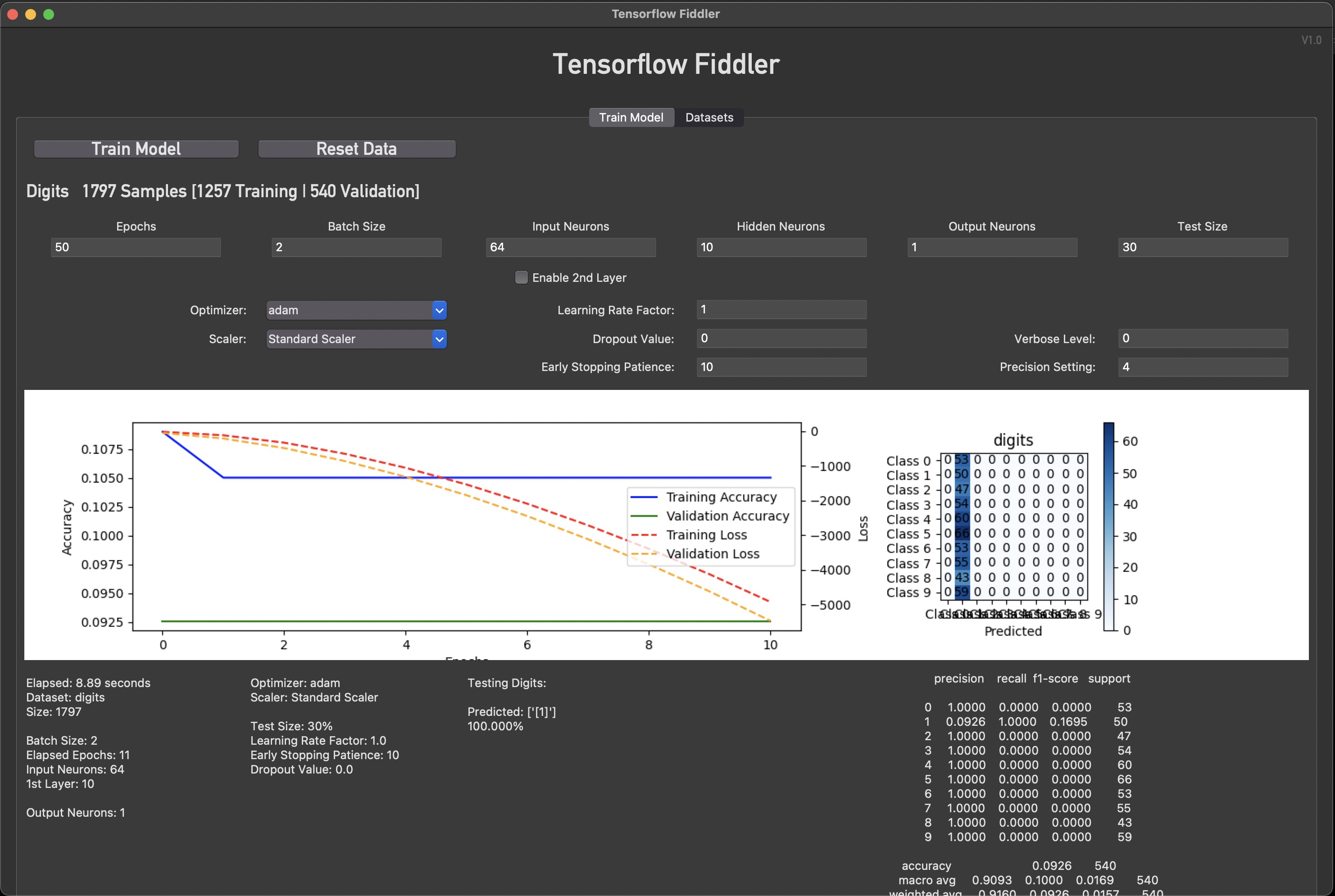

As can be seen in the next pic, I am running into my own knowledge cutoff when a dataset needs a different number of output neurons or a different way to display the result correctly. The app adjusts automatically to the number of input neurons, one extra hidden layer can be activated, and everything that can be adjusted is 'broken out'. Still, more fiddling and research is needed here.

It gets really weird with the 'digits' dataset, obviously, since my Fiddler is not set up to handle this kind of output. Or is it? The output displays a '1'.
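One possible way to deal with varying output sizes, as a rough sketch: derive the output layer settings from the labels of the loaded dataset. I have not wired this into the Fiddler; `output_layer_config` and `predicted_class` are hypothetical helper names, and the rule of thumb (non-integer targets mean regression, two classes mean one sigmoid neuron, more classes mean one softmax neuron per class) is an assumption about what the datasets look like. The strings are the standard Keras identifiers for activations and losses.

```python
def output_layer_config(labels):
    """Suggest output units, activation and loss for a list of targets.

    Hypothetical helper: assumes integer class labels for classification
    and non-integer floats for regression.
    """
    # Any target that is not a whole number is treated as a regression value.
    if any(isinstance(v, float) and not v.is_integer() for v in labels):
        return {"units": 1, "activation": "linear", "loss": "mse"}

    n_classes = len(set(labels))
    if n_classes <= 2:
        # Binary case: a single neuron giving the probability of class 1.
        return {"units": 1, "activation": "sigmoid",
                "loss": "binary_crossentropy"}

    # Multi-class case (e.g. 'digits' with 10 classes): one neuron per class.
    return {"units": n_classes, "activation": "softmax",
            "loss": "sparse_categorical_crossentropy"}


def predicted_class(probabilities):
    """Return the index of the largest output, i.e. the predicted class."""
    return max(range(len(probabilities)), key=probabilities.__getitem__)
```

For a 10-class dataset like 'digits' this suggests 10 softmax outputs, and `predicted_class` turns the 10 probabilities back into a single digit for display. If the current output layer has only one neuron, that could explain why only a single value like '1' shows up, but that is a guess.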

Also, a way to properly test a trained model is missing. Right now, on top of the automatic eval, the model tests itself with one datapoint after it has finished training.
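A common way to close this gap is to hold back part of the dataset before training and only use it for testing afterwards. The following is a minimal sketch in plain Python, not what the Fiddler currently does; `train_test_split` and `accuracy` are hypothetical names, and the 20 % test fraction is just a typical default.

```python
import random


def train_test_split(samples, labels, test_fraction=0.2, seed=42):
    """Shuffle the data and hold back a fraction of it for testing."""
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have the same length")

    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)  # fixed seed keeps the split repeatable

    n_test = max(1, int(len(indices) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    train = ([samples[i] for i in train_idx], [labels[i] for i in train_idx])
    test = ([samples[i] for i in test_idx], [labels[i] for i in test_idx])
    return train, test


def accuracy(predicted, actual):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)
```

The model would be trained on the `train` part only, then asked to predict every sample in `test`, and `accuracy` gives a single number over all of them instead of one datapoint.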

The point of all this is to make a hub that can be used to train my own models with gathered Air Quality Data from the [HEX]POD and then pass on the model to the [HEX]POD for testing detection accuracy.

Do I move on from the existing datasets and start making this app about my own datasets, or do I make it handle all the eventualities? To learn more about how all this works, the latter would make sense. I could then build a completely new version of the app optimized for my own use case.

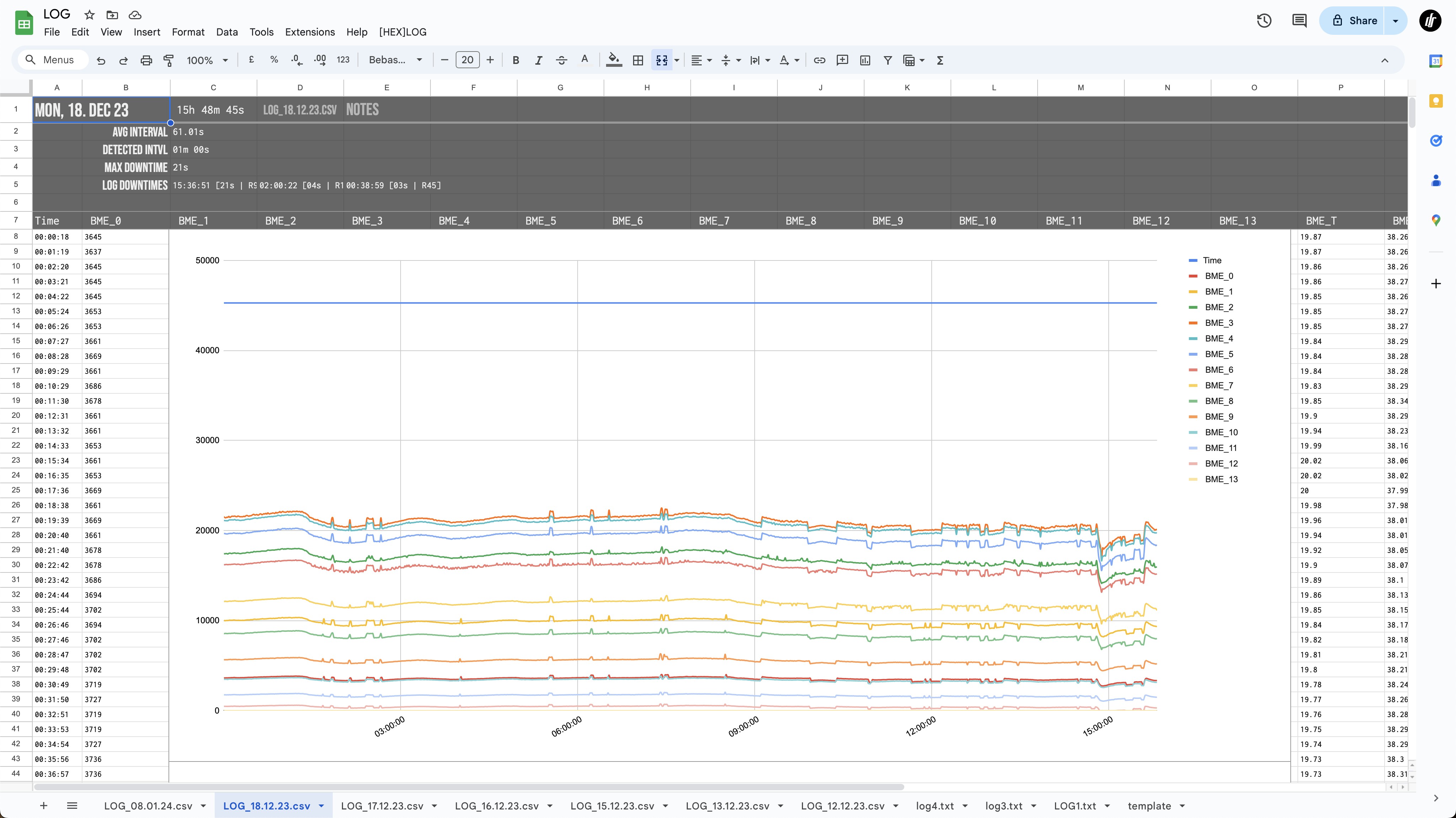

Collecting Sensor Data - Auto-Logs on Google Sheets:

I also programmed an automatic system that imports data logs from the [HEX]POD and detects the sampling interval, any downtime or missed samples, and more. For now this is all detected automatically. I could also embed this metadata into the log, but automatic detection seems more robust, since it adjusts itself to any eventualities and catches errors during sampling.
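The actual system lives in Google Sheets, so the following is only an illustration of the idea in Python: take the median time step as the sampling interval and flag every step that is much longer as downtime. `analyze_log`, the `tolerance` factor of 1.5, and the assumption that timestamps are sorted and given in seconds are all mine.

```python
from statistics import median


def analyze_log(timestamps, tolerance=1.5):
    """Estimate the sampling interval and find gaps in a sorted timestamp list.

    Returns (interval, gaps), where each gap records the last timestamp
    before the gap, the gap duration, and the estimated missed samples.
    """
    if len(timestamps) < 2:
        raise ValueError("need at least two timestamps")

    # Time differences between consecutive samples.
    deltas = [b - a for a, b in zip(timestamps, timestamps[1:])]

    # The median is robust against a few long gaps in the log.
    interval = median(deltas)

    gaps = []
    for start, delta in zip(timestamps, deltas):
        if delta > tolerance * interval:
            missed = round(delta / interval) - 1
            gaps.append({"after": start, "duration": delta,
                         "missed_samples": missed})
    return interval, gaps
```

For a log sampled at `[0, 10, 20, 30, 60, 70]` seconds this reports an interval of 10 s and one gap after the sample at 30 s with two missed samples.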

To burn in the sensors and get a first idea of the collected data, I have run multiple test sessions of 3 h to 21 h duration. The log contains CO2, VOC, and NOx readings from the SGP41 and SCD41, plus multiple redundant humidity, temperature, and pressure datapoints.

For now, notes can only be added during the sampling process via the web interface, for example to mark location or environmental changes.

An overhaul of the OS and Interface running on the [HEX]POD is in order.

I also want to assemble another updated hardware version that utilizes both BME688 sensors plus MPU and Ambient Light data.

BME688 profiles:

For now I am only running one incrementally stepping heater/timing profile on one of the two BME688s. This does not yield any meaningful 'smell' data. A more complex sequence of profiles needs to be implemented. Such sequences can be found in the BoschAI app, and they provide some good documentation. These profiles need to be chosen carefully, as sequences of different lengths and temperatures respond to different compounds / 'smells'.
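To make the idea of a stepping profile concrete, here is a small Python sketch that describes a profile as a list of (temperature, duration) steps. It is not the code running on the [HEX]POD and it does not talk to the sensor; `stepping_profile` and `cycle_duration_ms` are hypothetical names, and the numbers in the usage note are placeholders, not values from the Bosch documentation.

```python
def stepping_profile(start_c, stop_c, steps, step_ms):
    """Build an incrementally stepping heater profile.

    Returns a list of (temperature in deg C, duration in ms) tuples with
    evenly spaced temperatures from start_c to stop_c.
    """
    if steps < 2:
        raise ValueError("a stepping profile needs at least two steps")

    increment = (stop_c - start_c) / (steps - 1)
    return [(round(start_c + i * increment), step_ms) for i in range(steps)]


def cycle_duration_ms(profile):
    """Total time for one pass through all heater steps."""
    return sum(duration for _, duration in profile)
```

For example, `stepping_profile(100, 300, 5, 140)` gives five steps from 100 to 300 in 50-degree increments, and `cycle_duration_ms` of that profile is 700 ms. Keeping profiles as plain data like this would make it easy to store several of them and switch between them later.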

Sampling environment:

Another thought I had: I need to build a clean test environment (maybe a ventilated box with carbon and HEPA filters) to reset the air sensors and provide a baseline for any smells. This would also help with collecting training datasets for only one specific smell, without interference from the environment.

Any pointers are welcome!

eBender
