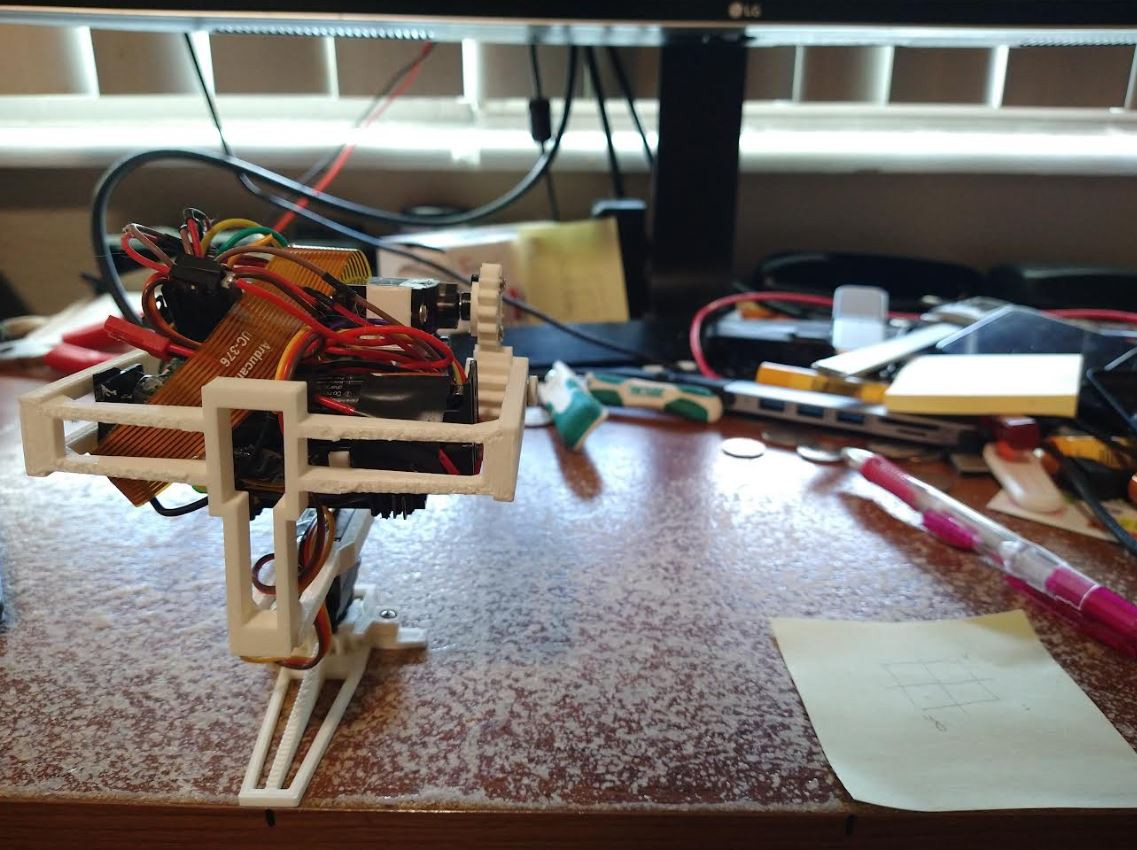

This is a small update. I have to stop working on this for a couple of weeks to learn something else, so I wanted to post what I've worked on so far.

I started to work on the image processing aspect, building on and extending what I began in the past.

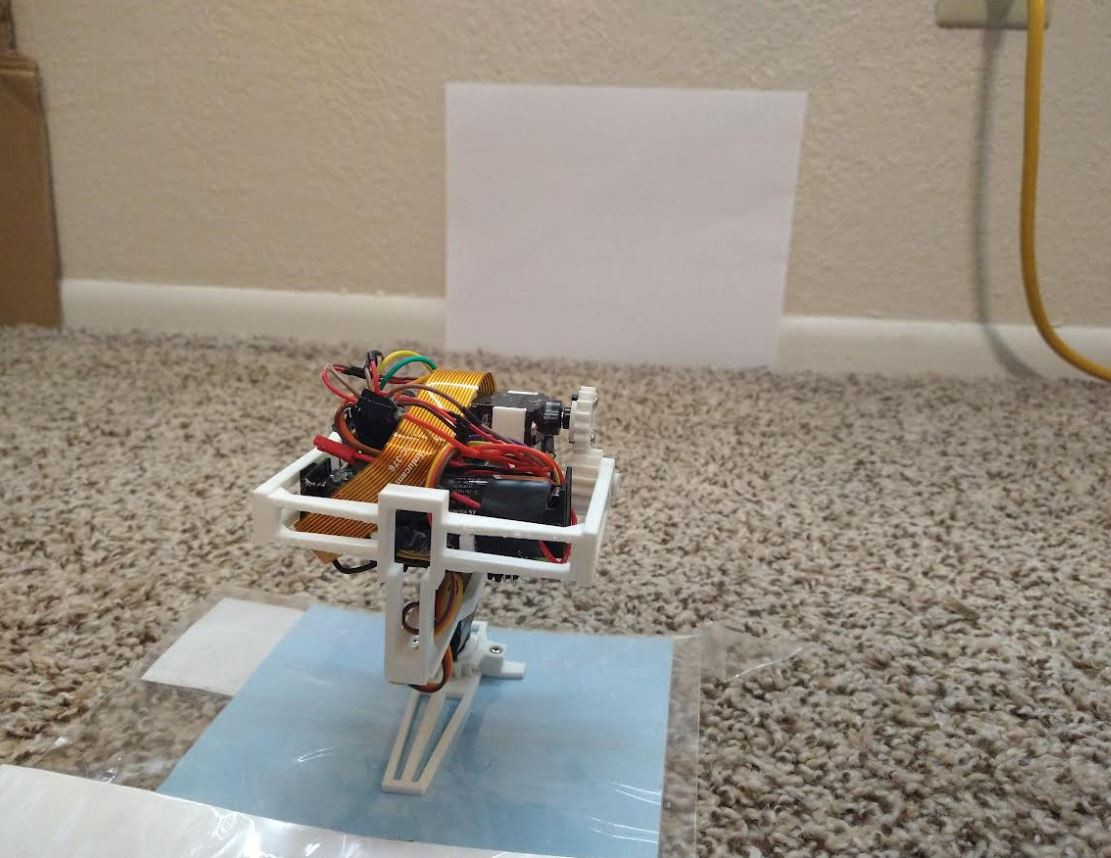

In this case I was just trying to isolate this monitor stand and figure out how far away it was with the depth probes.

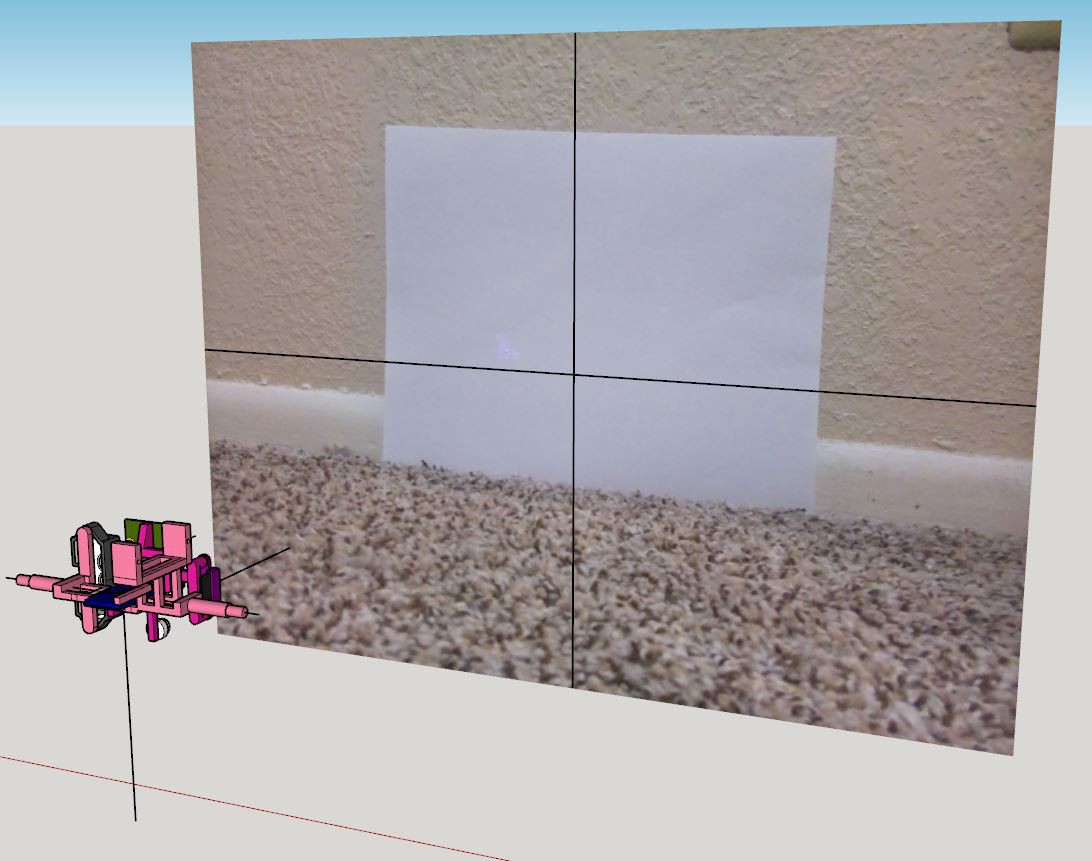

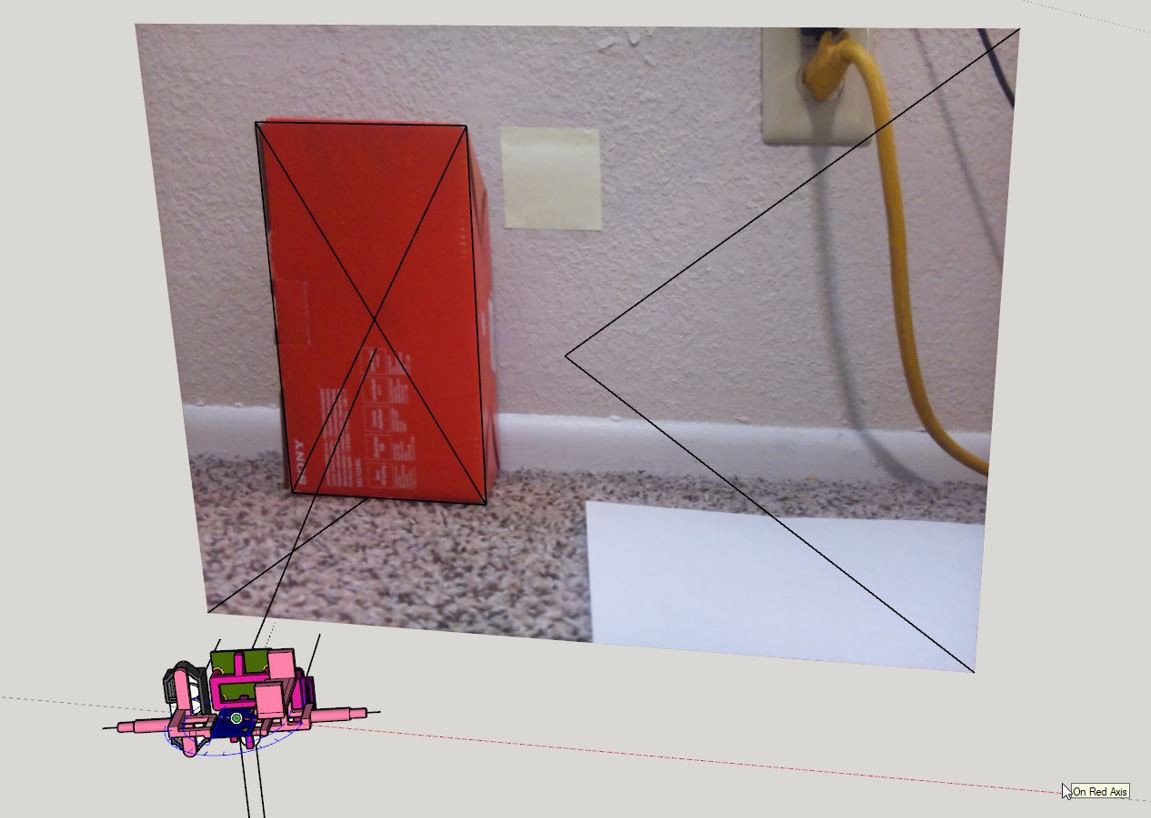

One trick I'm using with regard to FOV/perspective is bringing in physical dimensions into CAD.

I know that the sheet of paper is 8.5" x 11" in reality, so I scale the imported image to those dimensions in SketchUp, and it then matches the scale of the modeled sensors.
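The same proportion can be worked out as plain arithmetic. This is a minimal sketch, not what SketchUp does internally: the function names and the pixel measurements are made up for illustration, and the result only holds for things at roughly the same depth as the paper, ignoring perspective foreshortening.

```python
# Known physical size of the reference object (US Letter paper, from the post).
PAPER_WIDTH_IN = 8.5
PAPER_HEIGHT_IN = 11.0


def pixels_per_inch(paper_width_px: float, paper_height_px: float) -> float:
    """Average image scale in pixels per inch, from the paper's size in pixels."""
    scale_w = paper_width_px / PAPER_WIDTH_IN
    scale_h = paper_height_px / PAPER_HEIGHT_IN
    return (scale_w + scale_h) / 2.0


def pixels_to_inches(length_px: float, ppi: float) -> float:
    """Convert a length measured in the image to inches at the paper's depth."""
    return length_px / ppi


# Hypothetical measurement: the paper spans 170 x 220 px in the photo.
ppi = pixels_per_inch(170, 220)
print(ppi)                         # 20.0
print(pixels_to_inches(100, ppi))  # 5.0
```

If the two per-axis scales disagree by a lot, that is a hint the paper is being viewed at an angle.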

It is hard to figure out what angle something is at because of perspective, but I should be able to come up with some proportion.

Anyway, what I'm doing is, I believe, "image segmentation": I'm using blobs of color to find items of interest, then determining where each group is in 3D space.

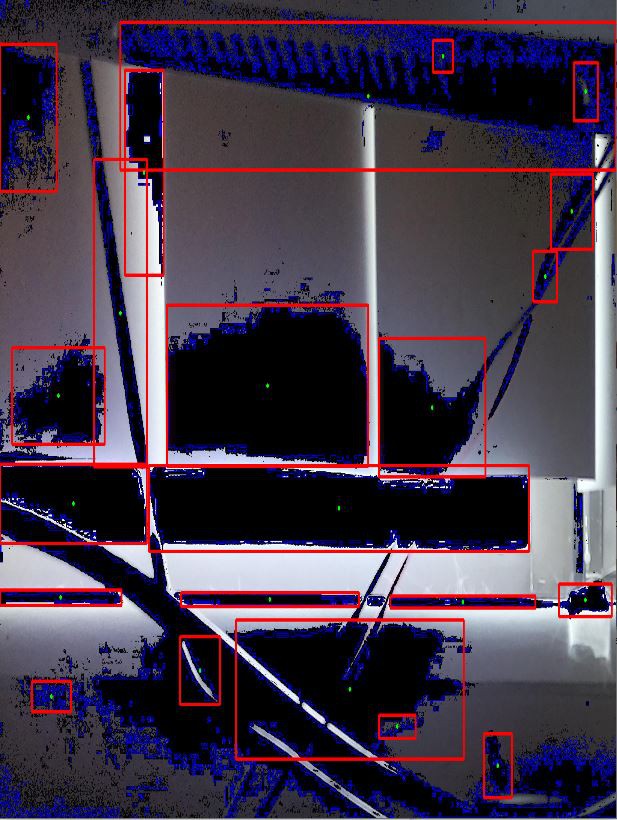

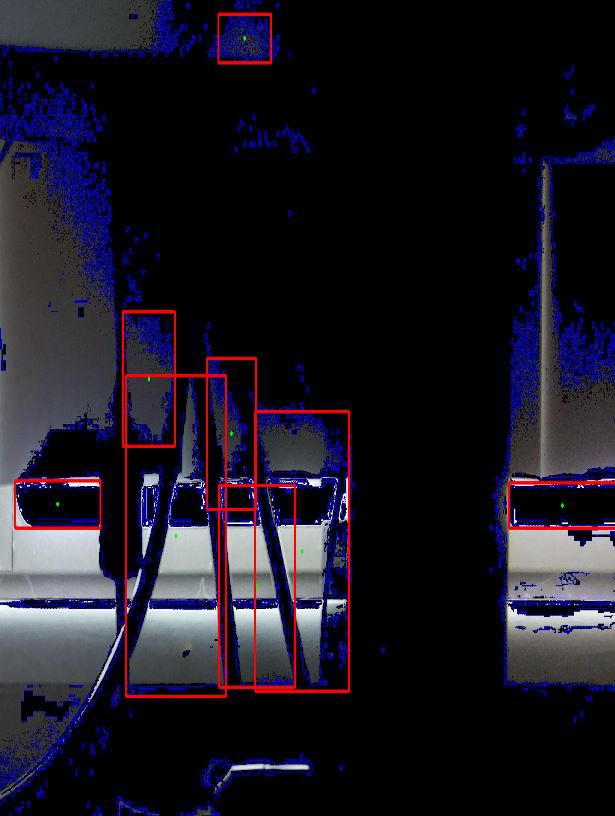

So here is a mask applied to try to find all the groups of black pixels. Note that this photo was taken by the onboard camera, unlike the one above, which was taken with a phone. A mask has also been applied below.
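A black-pixel mask of this kind can be sketched in a few lines. This is only an illustration under assumptions, not the code used for the images here: the image is taken to be rows of `(r, g, b)` tuples, and the `threshold` value is a hypothetical cutoff that would need tuning for the camera and lighting.

```python
def dark_mask(image, threshold=60):
    """Return a 2D list of booleans: True where a pixel counts as 'black'.

    image: rows of (r, g, b) tuples with channels in 0..255.
    threshold: hypothetical cutoff on the brightest channel; tune per camera.
    """
    return [[max(pixel) < threshold for pixel in row] for row in image]


# Tiny 2x3 example image: dark pixels on the left, bright on the right.
image = [
    [(10, 12, 8), (30, 25, 40), (200, 210, 190)],
    [(5, 5, 5), (120, 40, 30), (255, 255, 255)],
]
mask = dark_mask(image)
print(mask)  # [[True, True, False], [True, False, False]]
```

Thresholding on the brightest channel keeps dark saturated colors (like the dark red pixel above) out of the mask.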

Then I'm trying to group those black patches of pixels. My method is not good right now so I need to work on it some more.

Here is a quadrant that was sampled well (green is the centroid).

Then here is one quadrant that was not sampled well. This is using contours (largest closed grouping of blue).

It missed the entire massive black area. So I'll improve this part.
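One possible alternative to picking the largest closed contour is to group mask pixels by connectivity and keep the group with the most pixels. The sketch below is a swapped-in technique (4-connected flood fill), not the contour method described above, and the function names are made up for illustration. It assumes a boolean mask where `True` marks a black pixel.

```python
from collections import deque


def find_blobs(mask):
    """Group True pixels into 4-connected blobs; return a list of pixel lists."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill from this unvisited dark pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            blob = []
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(blob)
    return blobs


def centroid(blob):
    """Mean (row, col) of a blob's pixels."""
    n = len(blob)
    return (sum(y for y, _ in blob) / n, sum(x for _, x in blob) / n)


mask = [
    [True,  True,  False, False],
    [True,  True,  False, True],
    [False, False, False, False],
]
largest = max(find_blobs(mask), key=len)
print(len(largest), centroid(largest))  # 4 (0.5, 0.5)
```

Ranking by pixel count rather than by outline means a big filled region should win over a small but cleanly outlined one, though pure Python like this would be slow on full-size frames.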

Once I can accurately find the depth of things and it matches reality (this needs lots of testing), it should be pretty easy to navigate around them. The onboard IMU will then track the robot's location, and the robot will store the positions of the objects it found. It's all crude, but that's part of learning.
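Storing an object's position comes down to combining the robot's pose with a distance reading. Here is a rough 2D sketch, assuming the pose (position and heading) has already been estimated somehow; the function name, the angle convention, and the numbers are all hypothetical.

```python
import math


def object_world_position(robot_x, robot_y, heading_deg, distance, bearing_deg=0.0):
    """World (x, y) of an object seen at `distance` from the robot.

    heading_deg: robot heading, counterclockwise from the world +x axis.
    bearing_deg: sensor pan angle relative to the robot's heading.
    """
    angle = math.radians(heading_deg + bearing_deg)
    return (robot_x + distance * math.cos(angle),
            robot_y + distance * math.sin(angle))


# Hypothetical pose and reading: robot at (1, 2) facing +y, object 0.5 ahead.
x, y = object_world_position(1.0, 2.0, 90.0, 0.5)
print(round(x, 6), round(y, 6))  # 1.0 2.5
```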

Pointing servos

At this time I have not worked out a function that takes an angle in degrees and points the sensor plane. It's possible that the value supplied to the pulse_width function (probably microseconds rather than milliseconds, since 1500 µs is the commonly quoted servo center) is equal... no, it's not. I read somewhere that 1500 is the center of a servo, and I had to rotate 16 degrees, which turned out to be around 1640 in my case, though my center is 1460. I think it's just a coincidence that the numbers almost match, e.g. 16 -> 1640.
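A first guess at that function is a straight line through the two known points. This is a sketch under assumptions, not a calibrated result: it assumes the servo responds linearly, uses only the 1460 and 1640 values mentioned above, and the clamp limits are hypothetical placeholders.

```python
# Assumed calibration from the two data points in the post:
# center at 1460 and a 16 degree rotation at about 1640.
CENTER_US = 1460
US_PER_DEGREE = (1640 - 1460) / 16  # 11.25, assuming a linear response


def angle_to_pulse(angle_deg, min_us=500, max_us=2500):
    """Pulse width for an angle relative to center, clamped to a safe range.

    min_us / max_us are hypothetical limits; check the servo's datasheet.
    """
    pulse = CENTER_US + angle_deg * US_PER_DEGREE
    return int(round(max(min_us, min(max_us, pulse))))


print(angle_to_pulse(0))    # 1460
print(angle_to_pulse(16))   # 1640
print(angle_to_pulse(-16))  # 1280
```

Measuring a few more angles on each side of center would show whether the linear assumption actually holds for this servo.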

But I have to figure that out. The other thing I realized is that the two sensors (ToF/Lidar) are parallel to each other but offset, so when they point off to one side, the one closest to that direction will have a shorter path. I'll have to offset those measurements.
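For a flat target, the size of that offset follows from simple trigonometry: two parallel beams a distance `baseline` apart, hitting a surface tilted by some angle away from square-on, differ in path length by `baseline * tan(angle)`. The sketch below assumes a flat surface and ideal beams; the function names and the 40 mm spacing are made up for illustration.

```python
import math


def path_difference(baseline, tilt_deg):
    """Extra distance the farther sensor measures on a flat surface.

    baseline: spacing between the two parallel beams (same unit as readings).
    tilt_deg: tilt of the surface away from square-on to the beams.
    """
    return baseline * math.tan(math.radians(tilt_deg))


def estimate_tilt_deg(reading_a, reading_b, baseline):
    """Inverse: estimate the surface tilt from the two distance readings."""
    return math.degrees(math.atan2(reading_b - reading_a, baseline))


# Hypothetical numbers: beams 40 mm apart, surface tilted 45 degrees.
diff = path_difference(40.0, 45.0)
print(round(diff, 6))                                          # 40.0
print(round(estimate_tilt_deg(200.0, 200.0 + diff, 40.0), 6))  # 45.0
```

The inverse function hints that the gap between the two readings could also help estimate what angle a surface is at.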

TL;DR: there's still a lot of work to do.

Jacob David C Cunningham
