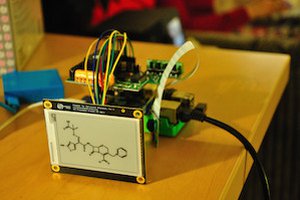

Dodo has a monochrome 128x64 pixel display which just so happens to represent 1kb of uncompressed bitmap data. I thought it would be fun to see if I can take a 1kb image, compress it, and take that left over space and try to fit in the decompression code and the display code. For example if I achieve 50% compression, then that leaves 512 bytes for code.

Here are the tasks that need to be done:

- Experiment with different compression types, for instance RLE vs Huffman to weigh the tradeoff between the compression % and the left over space available for software.

- Update my web IDE to allow assembly programming in addition to C. Here is the web IDE displaying the image I intend to compress: https://play.dodolabs.io/?code=f308275e

- Prototype the software in the web IDE

- Take the code and massage it so that the system firmware for Dodo can be abandoned and replaced with just this binary.

- Pray that this idea fits in 1kb!

Peter Noyes

Peter Noyes

mircemk

mircemk

rawe

rawe

Mike Szczys

Mike Szczys

Michał Nowotka

Michał Nowotka

I haven't tried to compress your image, but my lz77 (I think - I made it up and then found lz) but my decompressort is 77 bytes and needs 5 bytes of ZP, so it wouldn't need to compress much ;)