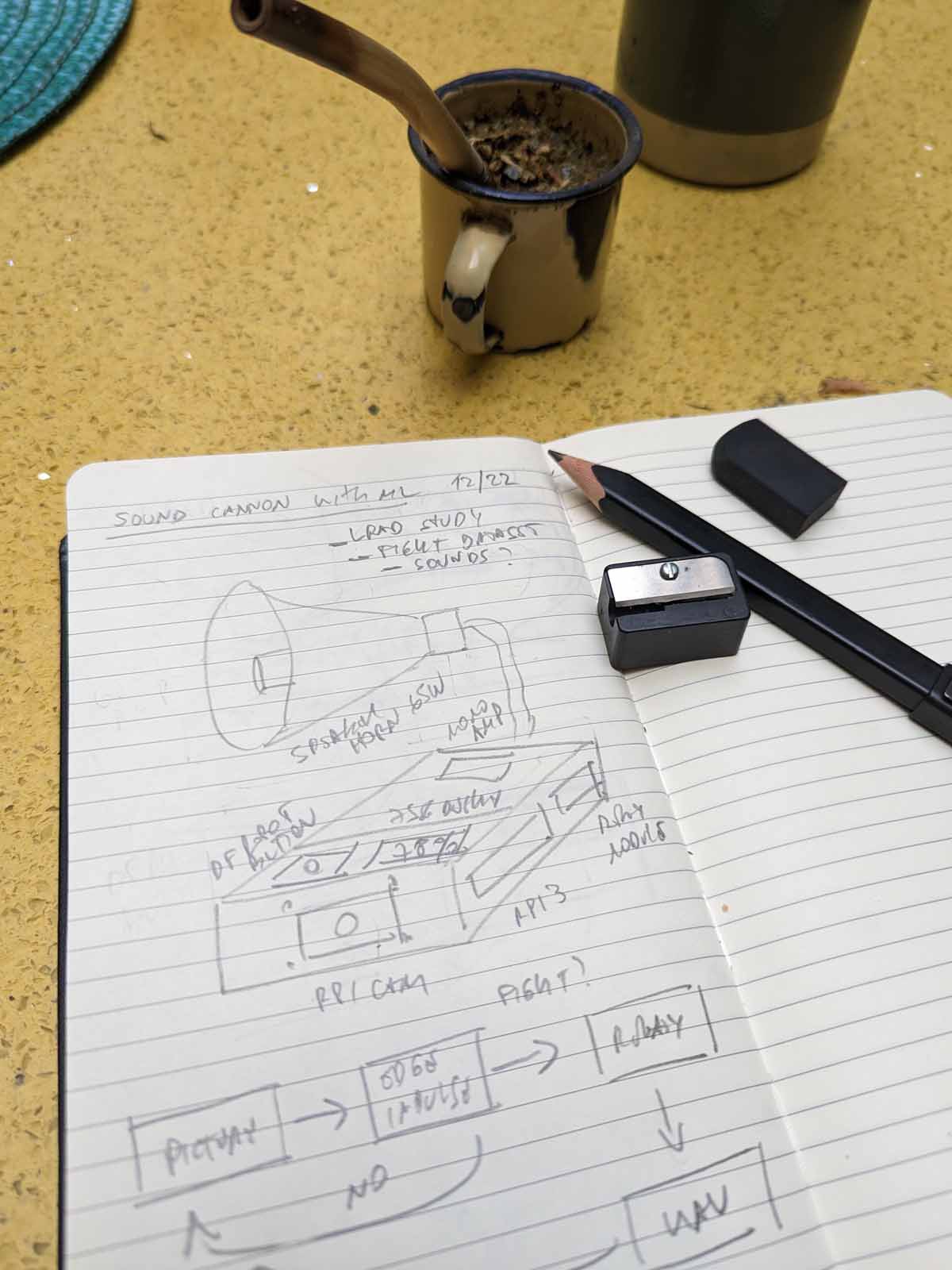

Hardware

LRADs are proprietary Genasys devices, so their circuits and internal details are not publicly available. However, there is a YouTube video in which an audio specialist bought one of these devices to study it. What did he find? Basically, a speaker horn array in a robust frame, good amplification, and audio specifically designed to cause ear discomfort.

On the local market, and at reasonable prices, I was able to get an outdoor speaker horn and a matching mono amplifier board. For detection and audio playback I decided to use a Raspberry Pi, since it has a decent camera, an audio output, and easy integration with the machine learning platform Edge Impulse.

The complete list of materials is:

- Raspberry Pi 3

- Raspberry Pi cam

- Outdoor speaker horn

- TPA3118 mono amp

- 12 V power supply for the amp

- 5 V power supply for the Raspberry Pi

- DFRobot LED button

- Miniplug cable

- 7 segment display TM1637

- 1 channel relay module

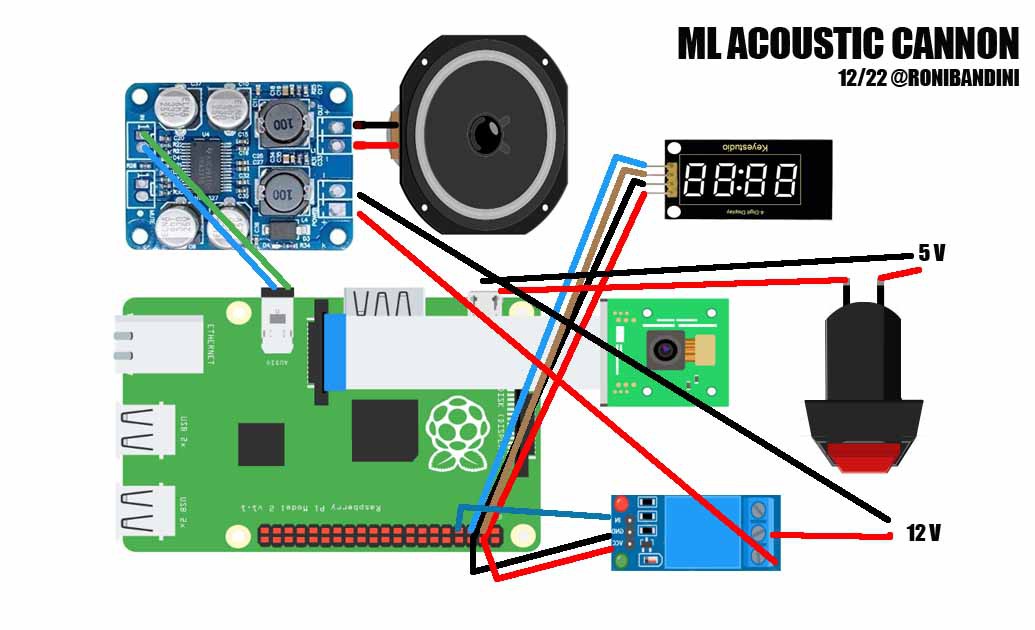

LRAD circuit

- TM1637 display CLK pin to GPIO 3, DIO pin to GPIO 2, GND to GND, VCC to VCC.

- Relay pin to GPIO 4, GND to GND and VCC to VCC. The relay’s NC and Signal terminals sit in the middle of the positive line, between the 12 V supply + and the amp power +. The negative of the 12 V supply goes to the GND of the amp power input.

- 5 V power supply to the microUSB port of the Raspberry Pi, with the + line interrupted by the DFRobot LED button.

- Speaker to amp audio output.

- Raspberry Pi 3.5 mm audio output to amp input. Note that only two of the connector’s contacts are used for audio, since the same connector also carries video. In my case, it worked to use a stereo miniplug that was not fully inserted.

Connect audio to X segments of the 3.5 mm jack.

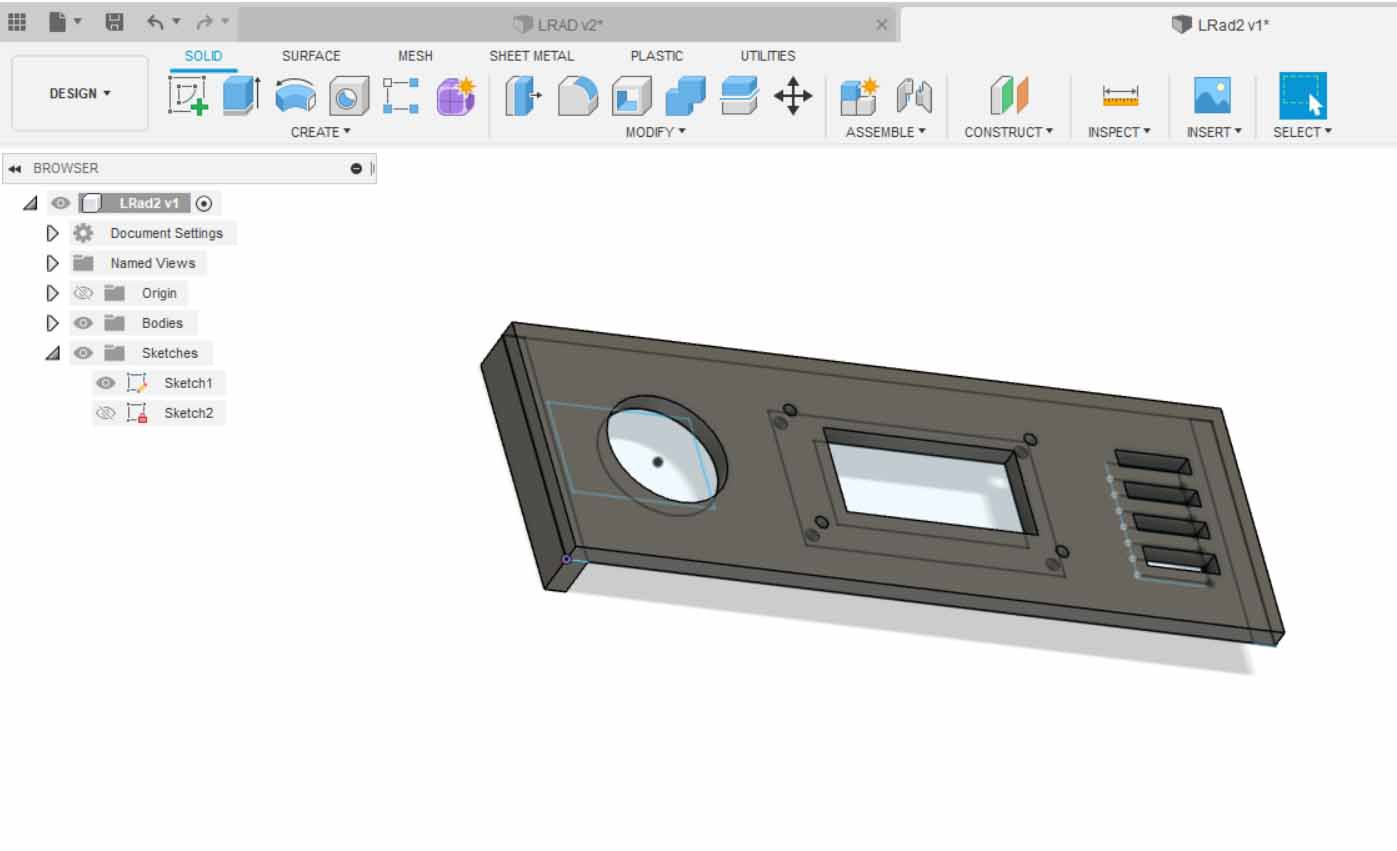

LRAD Enclosure

I found an old removable PC hard drive tray big enough for all the components of this project. Then I designed a small 3D-printed part to hold the 7-segment display and the DFRobot LED button.

You can download this part at https://cults3d.com/en/3d-model/gadget/lrad-panel

Machine learning human detection

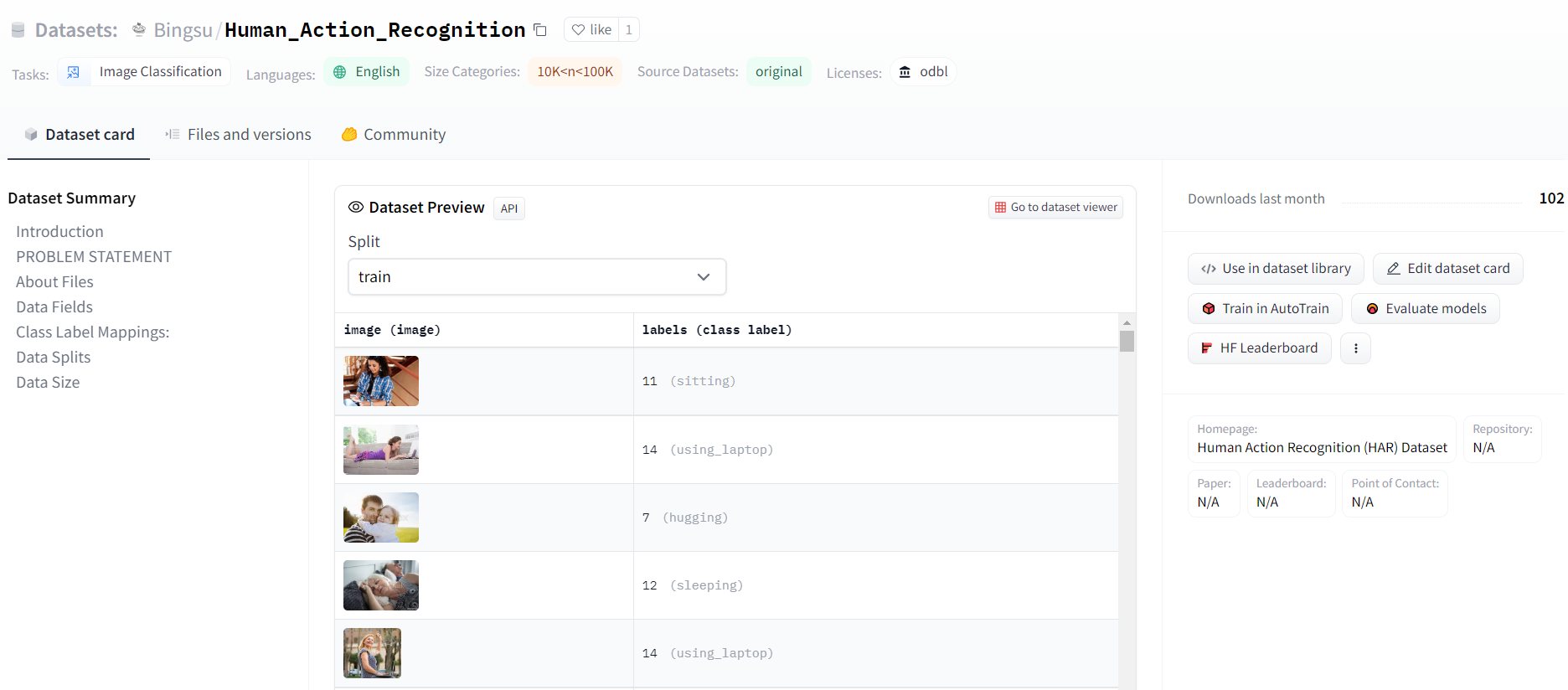

Lots of people pass by, and it would be bad to shock a neighbor walking the dog.

So if the sound cannon should not react to the mere presence of people, could it detect certain actions instead? Fights, for example, which actually happen almost every day. The interesting part is that I don’t even need to take hundreds of pictures, since there is a dataset of human actions in the AI community Hugging Face.

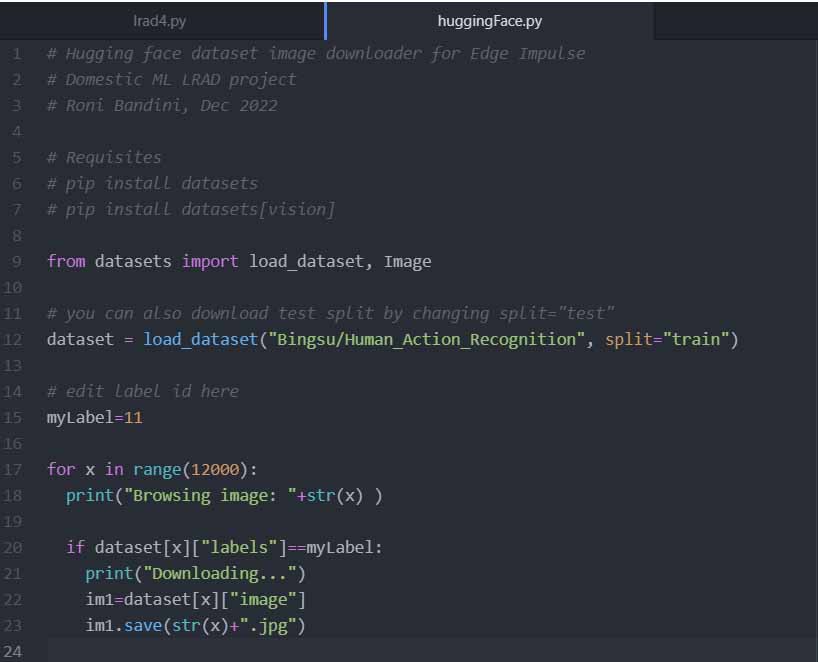

I wrote a Python script to download two actions from this dataset: "people sitting" and "people fighting".
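The selection step of such a script could be sketched as follows. This is not the original script: the record layout (pairs of label and raw image bytes), the label strings and the one-folder-per-label output are assumptions made for illustration, and fetching the dataset from Hugging Face is left out.

```python
from pathlib import Path

# Assumed label names; the actual dataset may spell them differently.
WANTED = {"sitting", "fighting"}


def save_selected(records, out_dir, wanted=WANTED):
    """Write images whose label is in `wanted` to out_dir/<label>/NNNN.jpg.

    `records` is any iterable of (label, image_bytes) pairs (hypothetical
    layout). Returns a dict with the number of files saved per label.
    """
    counts = {}
    for label, image_bytes in records:
        if label not in wanted:
            continue  # skip every other action in the dataset
        folder = Path(out_dir) / label
        folder.mkdir(parents=True, exist_ok=True)
        index = counts.get(label, 0)
        (folder / f"{index:04d}.jpg").write_bytes(image_bytes)
        counts[label] = index + 1
    return counts
```

Keeping one folder per action should make it straightforward to assign the label when the pictures are uploaded for training.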

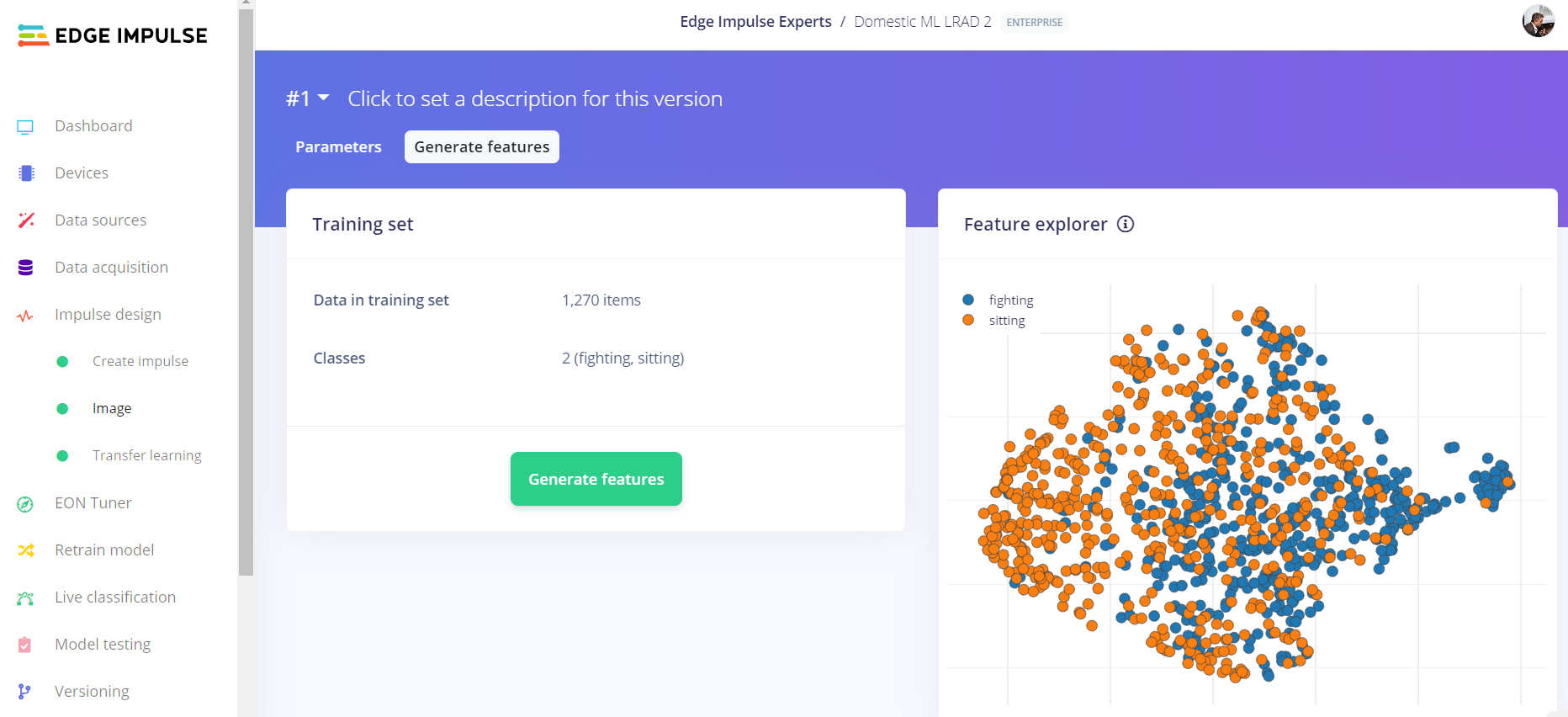

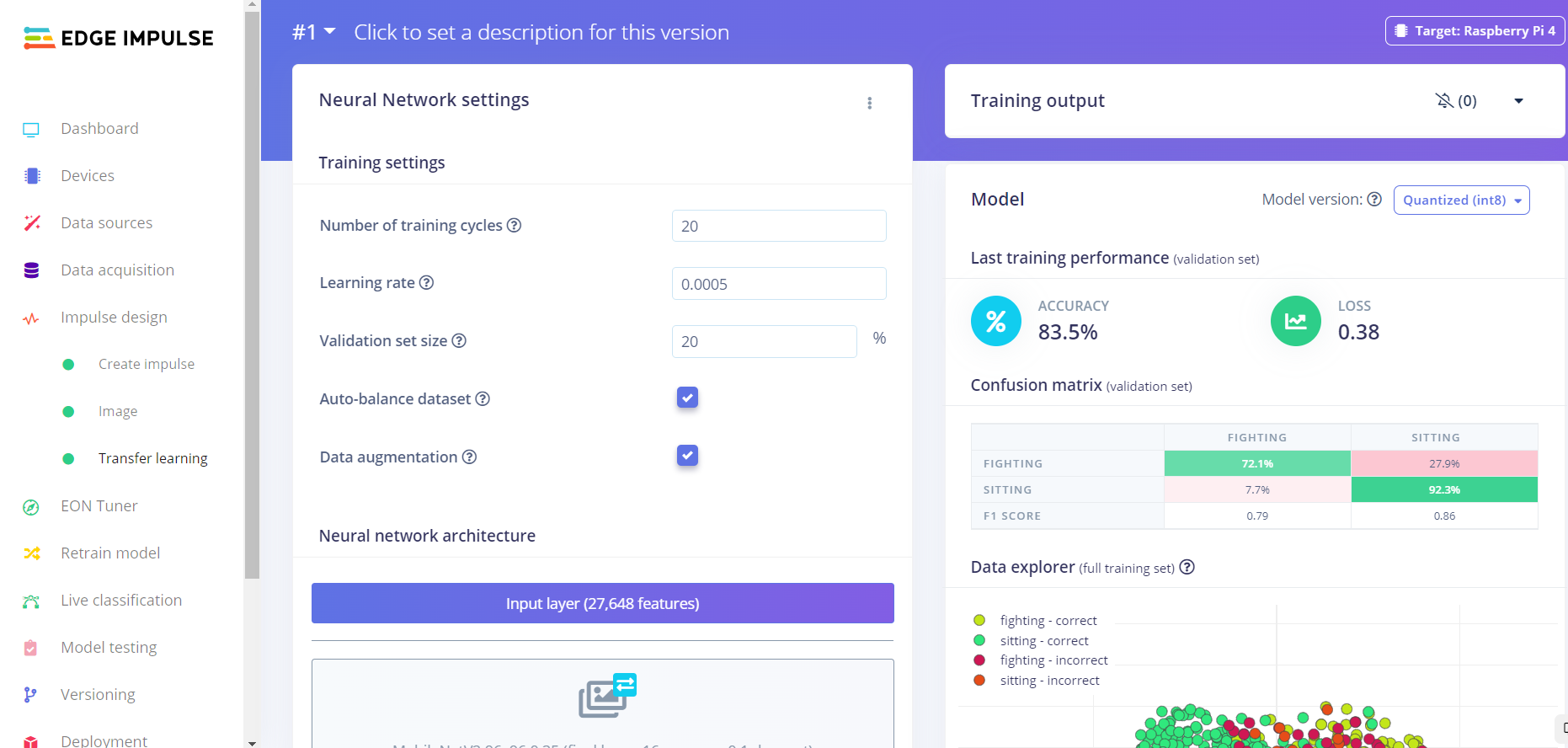

I created a new project on the Edge Impulse platform (free for developers), uploaded the pictures, trained a model and exported it to an EIM file.

Audio files

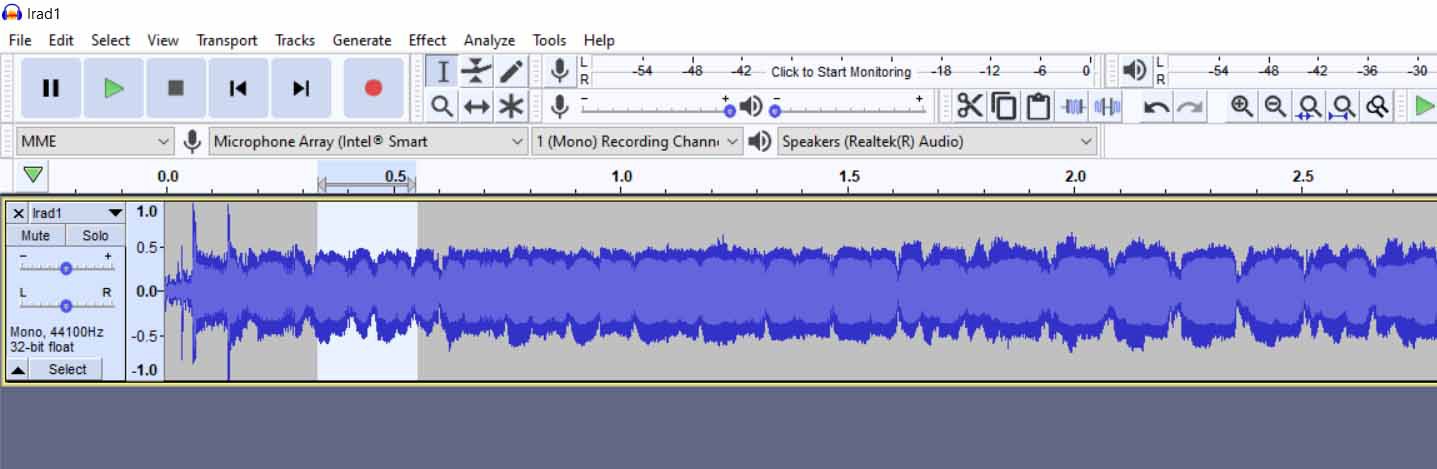

A brave man confronted a police-grade LRAD and uploaded the video to YouTube. I downloaded the file, extracted the audio, processed it with the Audacity app, repeated it to extend its duration and exported the file as .WAV.

Raspberry Pi Configuration

For the Raspberry Pi, I ran these commands after installing the non-desktop version of Raspberry Pi OS.

sudo apt-get install python3-pip

sudo apt install git

pip install picamera

sudo apt install python3-opencv

sudo apt-get install libatlas-base-dev libportaudio0 libportaudio2 libportaudiocpp0 portaudio19-dev

git clone https://github.com/edgeimpulse/linux-sdk-python

sudo python3 -m pip install edge_impulse_linux -i https://pypi.python.org/simple

sudo python3 -m pip install numpy

sudo python3 -m pip install pyaudio

I uploaded the LRAD Python script, the WAV file and the EIM machine learning model (LRAD software).

I set the permissions of the EIM file to 744, so that it is executable, and added the script to the crontab.

crontab -e

@reboot sudo python /home/pi/lrad4.py > /home/pi/lradlog.txt

Then reboot with sudo reboot.

The LRAD script now runs automatically at boot. The 7-segment display shows a greeting, then the detection percentage and the trigger notice.

The console output of the LRAD script is logged to the lradlog.txt file. Note that > captures standard output only; append 2>&1 to the cron line to log error messages as well.

Some code settings to modify:

# EIM file name in case you use a new version

model = "domestic-ml-lrad-2-linux-armv7-v8.eim"

# fighting percentage

detectionLimit=0.75

# GPIO pin for the relay

relayPin=4

# seconds to play the crowd dispersion sound

playSeconds=5
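To illustrate how detectionLimit could be applied, here is a minimal sketch. It assumes the classification result is a dictionary of label to score under result.classification, as in the Edge Impulse Linux Python SDK examples. The label name "fighting", the helper names and the use of >= for the comparison are assumptions, not taken from the LRAD script.

```python
detectionLimit = 0.75  # same threshold as in the settings above


def fight_score(result, label="fighting"):
    """Return the score for `label` from a classification result.

    Assumes the layout {"result": {"classification": {label: score}}};
    a missing label counts as 0.0. The label name is hypothetical.
    """
    return result.get("result", {}).get("classification", {}).get(label, 0.0)


def should_trigger(result, limit=detectionLimit):
    """True when the fight score reaches the configured limit."""
    return fight_score(result) >= limit


def display_percent(score):
    """Integer percentage for the 7-segment display, clamped to 0..100."""
    return max(0, min(100, round(score * 100)))
```

For example, a result with a "fighting" score of 0.82 would trigger with the default limit, while a score of 0.4 would not.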

This script takes a picture every X seconds and sends it to the model to get an inference. The model returns a percentage for each class, answering the questions “is this a fight?” and “are there people sitting?” If the percentage for the first question is high enough, the relay enables amplification and the annoying sound is played through a subprocess call:

process = subprocess.Popen("aplay lrad1.wav", shell=True, stdout=subprocess.PIPE, preexec_fn=os.setsid)
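Starting the player in its own session makes it possible to stop the shell and aplay together once the playSeconds interval has elapsed. The sketch below shows one way this could be done. The play_for helper and its amp_on / amp_off hooks (standing in for the relay switching) are hypothetical, and start_new_session=True is used as the equivalent of preexec_fn=os.setsid.

```python
import os
import signal
import subprocess


def play_for(command, seconds, amp_on=None, amp_off=None):
    """Run `command` for at most `seconds`, then stop its process group.

    amp_on / amp_off are optional callables (hypothetical hooks) that
    would switch the relay on relayPin. Returns the process exit code.
    """
    if amp_on:
        amp_on()
    # A new session puts the shell and its children in their own process
    # group, so a single killpg call reaches all of them.
    process = subprocess.Popen(
        command,
        shell=True,
        stdout=subprocess.DEVNULL,  # output is discarded in this sketch
        start_new_session=True,
    )
    try:
        process.wait(timeout=seconds)
    except subprocess.TimeoutExpired:
        os.killpg(os.getpgid(process.pid), signal.SIGTERM)
        process.wait()
    finally:
        if amp_off:
            amp_off()
    return process.returncode
```

With the settings above, a call could look like play_for("aplay lrad1.wav", playSeconds), optionally passing the two relay hooks.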

TikTok

https://www.tiktok.com/@ronibandini/video/7331372161736805637

Demo and more details

Final notes

Last but not least, this device is not limited to crowd dispersion. LRADs could also be used to keep birds away from airports, repel predators on farms, etc.

Roni Bandini