More testing with pretrained efficientdet-lite0. It's already known that this model hits trees & light posts.

More testing with pretrained efficientdet-lite0. It's already known that this model hits trees & light posts.Finally made a script to go from checkpoint to trt engine in truckcam/det2trt.sh

It takes 46 minutes on the jetson, but there's no way to cross compile a trt engine.

Decided to try just 1 epoch of training.

root@gpu:/root/nn/automl-master/efficientdet% python3 main.py --mode=train --train_file_pattern=../../train_lion/pascal*.tfrecord --model_name=efficientdet-lite0 --ckpt=../../efficientdet-lite0 --model_dir=../../efficientlion-lite0 --train_batch_size=1 --num_examples_per_epoch=1000 --num_epochs=1 --hparams=config.yaml

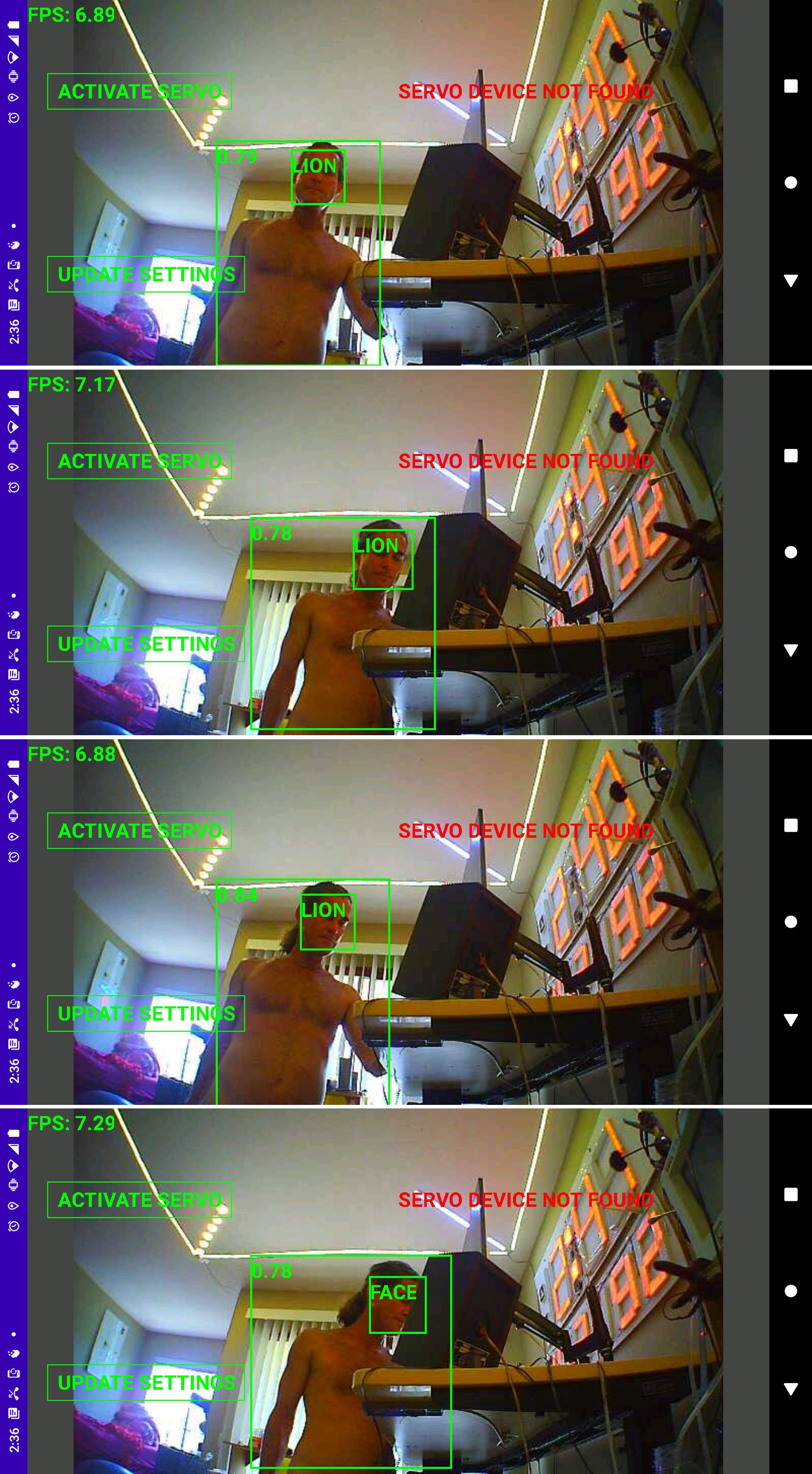

A most unexpected result where the original efficientdet-lite0 hit was still hitting while 2 more hits appeared, corresponding to the failed efficientlion-lite0. Ran the checkpoint with model_inspect.py

root@antiope:/root/automl/efficientdet% OPENBLAS_CORETYPE=CORTEXA57 python3 model_inspect.py --runmode=infer --model_name=efficientdet-lite0 --ckpt_path=../../efficientlion-lite0.1/ --hparams=../../efficientlion-lite0.1/config.yaml --input_image=../../truckcam/lion320.jpg --output_image_dir=.

This was the 1st time model_inspect.py showed the same evolution of failures as tensorrt. There's an evolution of the weights where they 1st deviate & eventually converge on the new data set.

Passing an efficientdet-lite0 checkpoint as the starting checkpoint shouldn't work because the num_classes changed. The next idea was training with the same num_classes so the onnx files would be easier to compare.

Right away, the pretrained efficientdet-lite0 had 90 classes instead of any previous number. The efficientdet-lite0 example was trained on the COCO dataset, but they didn't provide the config.yaml or dataset for that training.

python3 main.py --mode=train --train_file_pattern=../../train_lion/pascal*.tfrecord --model_name=efficientdet-lite0 --ckpt=../../efficientdet-lite0 --model_dir=../../efficientlion-lite0.21 --train_batch_size=1 --num_examples_per_epoch=1000 --hparams=config.yaml

config.yaml:

num_classes: 90 num_epochs: 300

The 2 models now had the same dimensions, same number of symbols, but just different symbol names.

Epoch 1 was less degraded, but epoch 33 was as bad as every other conversion on tensorrt

-----------------------------------------------

Mean subtracting the input images improved the results but dividing by stddev_rgb degraded results in truckcam. Noted it was mean subtracting & stddev dividing in model_inspect.py but this wasn't the reason it worked.

---------------------------------

Another change was in TensorRT/samples/python/efficientdet/create_onnx.py

# tensorrt doesn't support

# shape_corrected = np.asarray([-1, volume, shape_out[2]], dtype=np.int64)

shape_corrected = np.asarray([-1, volume, shape_out[2]], dtype=np.int32)

This got rid of the dreaded Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64

Sadly this was not the cause of the malfunction.

--------------------------------------------

Gave tf2onnx another try instead of TensorRT/samples/python/efficientdet/create_onnx.py.

python3 -m tf2onnx.convert --saved-model=efficientlion-lite0.out/ --output=efficientlion-lite0.out/efficientlion-lite0.onnx --opset=11

The lion kingdom's x86 box got trashed by a failed pip install. It now failed with the dreaded

AttributeError: module 'numpy' has no attribute 'object'.

or

KeyError: dtype('O')

A 20 minute conversion on the jetson yielded

Unsupported ONNX data type: UINT8 (2)

or

Assertion weights.type() == DataType::kINT32 failed.

tf2onnx seems to use too many data types unsupported by tensorrt. That's why there's an onnx converter in TensorRT/samples/python/efficientdet. You can sort of replace the data types with graphsurgeon.

graph = gs.import_onnx(onnx.load(IN_MODEL))

# convert the input layer from int8 to float32

for i in graph.inputs:

i.dtype = np.float32

onnx.save(gs.export_onnx(graph), OUT_MODEL)

But that isn't going to convert the weight values.

-------------------------------------------------------------

There's another conversion script in automl/efficientdet which goes directly from frozen graph to tensorrt. There's also an alternative way to freeze the graph.

/root/automl/efficientdet% time OPENBLAS_CORETYPE=CORTEXA57 python3 model_inspect.py --runmode=freeze --model_name=efficientdet-lite0 --logdir=/root/efficientlion-lite0.out2 --ckpt_path=/root/efficientlion-lite0 --hparams=/root/efficientlion-lite0/config.yaml

/root/automl/efficientdet% time OPENBLAS_CORETYPE=CORTEXA57 python3 tensorrt.py --tf_savedmodel_dir=/root/efficientlion-lite0.out2/savedmodel --trt_savedmodel_dir=/root/efficientlion-lite0.out2/efficientlion-lite0.engine

If the graph was frozen by inspector.py, it fails after 46 minutes with

ValueError: Input 1 of node StatefulPartitionedCall_1 was passed float from efficientnet-lite0/stem/conv2d/kernel:0 incompatible with expected resource.

If it was frozen by model_inspect.py, python just crashes.

If it's frozen by model_inspect.py & converted to ONNX by create_onnx.py, it crashes on an out of bounds error.

File "/usr/local/lib/python3.6/dist-packages/onnx_graphsurgeon/ir/node.py", line 92, in o

return self.outputs[tensor_idx].outputs[consumer_idx]

IndexError: list index out of range

-----------------------------------------------------------------

There's a list of models on

https://mmdetection.readthedocs.io/en/v2.17.0/tutorials/onnx2tensorrt.html

which are known to work in tensorrt. The problem isn't converting the model as much as converting a trained version of the model.

These models are much slower than efficientdet. Efficientdet has 3.2 million weights. SSD mobilenet has 5.2 million.

---------------------------------------------------------------

model_inspect.py can be run in benchmarking mode

root@antiope:/root/automl/efficientdet% OPENBLAS_CORETYPE=CORTEXA57 python3 model_inspect.py --runmode=bm --model_name=efficientdet-lite0 --ckpt_path=../../efficientlion-lite0/ --hparams=../../efficientlion-lite0/config.yaml --input_image=../../truckcam/lion320.jpg --output_image_dir=.

This gives 9fps using float32 libcudnn. It should be directly proportional to the size of the data type.

------------------------------------------------------------------------

https://github.com/google/automl/blob/master/efficientdet/README.md

had another command for directly exporting tensorrt from the checkpoint in section 3. They mention the highest scoring checkpoint is in the archive subdirectory. It was actually epoch 66.

time OPENBLAS_CORETYPE=CORTEXA57 python3 model_inspect.py --runmode=saved_model --model_name=efficientdet-lite0 --ckpt_path=/root/efficientlion-lite0/archive --saved_model_dir=/root/efficientlion-lite0.out2 --tensorrt=FP16 --hparams=/root/efficientlion-lite0/config.yaml

The session_config argument had to be commented out for create_inference_graph.

This crashed in the same place tensorrt.py did.

-------------------------------------------------------------------------

The training method seemed to be the right focus. Did another training run with some changes to config.yaml

nms_configs:

method: hard

iou_thresh: 0.35

This made no difference.

-------------------------------------------------------------------------

All these tensorrt exports have to be done on the jetson nano & they all seem to require a newer version of tensorrt. The jetson nano is a complete ecosystem locked into what was current in 2019.

One idea now is not trying to train efficientdet but going ahead with the pretrained one.

Software support for the jetson nano is definitely over. It's not going to run any more models besides the lucky few from years ago so investing in it is like retro computing.

Face tracking with the existing tracking camera sux. Another idea is to abandon face tracking & just run the body_25 model which was already ported to the jetson. If body_25 ran without face tracking, it would go at 9fps. It's possibly a more robust way of detecting animals than efficientdet.

Of course, if efficientdet ran without the face tracker, it would go at 20fps. It's not clear if the servo mechanics would be fast enough to justify the frame rate.

If body_25 falls over, another idea would be to just use the jetson for the XY tracker & build an updated enclosure for the original raspberry pi. The raspberry pi also took a lot less space.

Tracking without face recognition really sux. Another way would have to be found for classifying animals.

There are ways to strip down newer gopros & use them as the tracking camera. Face recognition would work well with that.

lion mclionhead

lion mclionhead

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.