Overview

This project is made up of 3 main parts:

- Power supply

- LED controller

- the LED strings themselves

The LED strings used are WS2812B strings, commonly found on Amazon. The WS2812B RGB LEDs are individually addressable through a simple pulse-width-modulated one-wire serial protocol. Each LED is configured with 24 bits of color information: 8 bits each of red, green, and blue. The ones I purchased came in 5 meter strings of 150 LEDs.
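As a rough illustration of that 24-bit format, the sketch below packs one color into the word an LED expects. WS2812B parts are generally documented as taking green first, then red, then blue, most significant bit first. The function names `pack_grb` and `to_bits` are made up for this example and are not taken from the project code.

```python
def pack_grb(red: int, green: int, blue: int) -> int:
    """Pack three 8-bit channels into one 24-bit word in G, R, B order."""
    for value in (red, green, blue):
        if not 0 <= value <= 255:
            raise ValueError("each channel must fit in 8 bits")
    return (green << 16) | (red << 8) | blue


def to_bits(word: int) -> list:
    """List the 24 bits in transmit order, most significant bit first."""
    return [(word >> shift) & 1 for shift in range(23, -1, -1)]


if __name__ == "__main__":
    # Pure red: the red byte lands in the middle of the word.
    print(hex(pack_grb(255, 0, 0)))
```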

The WS2812B strings I used have a +5 V power rail, so I had to deal with getting a relatively low voltage out to a physically large array of LEDs. Each LED can pull about 40 mA at full power (though it may pull more at peak; this is a typical figure rather than a true maximum). For this project I used several LRS-350-5 60 A 5 V supplies. These supplies can be powered from 120 or 240 VAC. Distributing such a low voltage over a large area required thicker copper cables. I would have liked to use 24 or 48 VDC distribution with a 5 V step-down to power every couple of strings, but I couldn't find supplies cheap enough to offset the cost of the copper cabling, especially when the 60 A LRS-350-5 was already extremely cheap.

The LED controller is made up of a BeagleWire iCE40 FPGA shield connected to a BeagleBone Black. The BeagleWire contains an iCE40HX4K with 16 kB of block RAM and 4 PMOD connectors (each with 3.3 V, ground, and 8 I/O for a total of 32 easily accessible I/O). There are also Grove connectors, though I don't use them in this project as the I/O density is low. Of particular note, the BeagleWire has access to the BeagleBone's GPMC bus, which can be used to memory-map the FPGA into the ARM's memory space and efficiently transfer pixel data from the ARM to the FPGA.

The frame buffer is generated by a Rust application running on the BeagleBone. When generation is complete, the application transfers control of the buffer to the kernel, where a custom kernel module DMAs the data over the TI GPMC bus to the FPGA. All of the block RAM on the FPGA is allocated to a memory-mapped FIFO that holds the frame buffer. Once a full frame buffer is transmitted to the FIFO, custom Verilog code automatically shifts that data out to each LED string in parallel.

Planning

My office is roughly 11 by 13 ft or 3.5 by 4 m (I know, I mix standard and metric measurements. I'm trying to wean myself off of standard and convert fully to metric, but it's a work in progress). The LED strings have a fixed density of 30 LEDs per meter. The LEDs come in 5 m strips, and to minimize the amount of cutting and splicing I needed to do, I decided to run the LEDs along the longer 4 m length of the room. If I call each 4 m string a "row", the only remaining question is how many rows to install.

It would have been nice to have a square array with the same 30 LEDs per meter resolution in both directions, but I was limited by the amount of RAM in the iCE40. I decided that I wanted to be able to store a single frame buffer in block RAM. This isn't technically a requirement: I can pretty easily stream the data over the GPMC bus as the bus is significantly faster than the serial protocol used to configure the LEDs. I also didn't have an infinite budget for this project though, and setting the requirement that a full framebuffer fit in FPGA RAM ended up being a pretty reasonable constraint.

It turned out that each row could fit about 118 LEDs. Each LED has 24 bits of color data, so a row needs 2832 bits (about 2.8 kbit). The iCE40 HX4K theoretically has 20 4-kbit block RAMs for a total of 80 kbit. However, yosys and nextpnr both report 32 4-kbit block RAMs, and I can confirm that the total of 128 kbit of block RAM appears to work. Maybe the BeagleWire is actually using an HX8K; I don't know. Anyway, if we go with the 128 kbit of frame buffer, that gives us up to 46 rows of 118 LEDs each, or about 14 rows per meter. That was close enough for me.
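The sizing arithmetic can be sketched in a few lines. The constants come from the figures in this section; the function name `max_rows` is just for illustration.

```python
BITS_PER_LED = 24
LEDS_PER_ROW = 118
BLOCK_RAM_BITS = 4096  # one 4-kbit block RAM


def max_rows(block_ram_count: int) -> int:
    """Number of whole rows whose color data fits in the given block RAMs."""
    bits_per_row = LEDS_PER_ROW * BITS_PER_LED  # 2832 bits
    return (block_ram_count * BLOCK_RAM_BITS) // bits_per_row


if __name__ == "__main__":
    print("rows with 20 block RAMs:", max_rows(20))
    print("rows with 32 block RAMs:", max_rows(32))
```

With the 32 block RAMs the tools report, 46 rows fit with 800 bits to spare; with the nominal 20, only 28 rows would fit.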

I wanted the full matrix to update as quickly as possible, which means programming as many strings as possible in parallel. Unfortunately, the BeagleWire doesn't have 46 easily accessible I/O. As a compromise, adjacent rows are daisy-chained in pairs, effectively making 23 chains of 236 LEDs. The FPGA programs all 23 chains in parallel, and I use 23 I/O spread across the 4 PMOD connectors as data lines.

Speaking of updating as quickly as possible, it's worth determining theoretically how quickly this matrix can update. Each color bit transmitted to the WS2812B string is nominally a 1.25 us symbol with a high time and a low time. Essentially, the duty cycle (technically only the positive pulse width, but to keep the symbol rate constant let's stick with duty cycle) determines whether a bit is a 1 or a 0. With 236 LEDs in a chain and 24 bits per LED, that's 5664 symbols, or 7.08 ms to shift out the data. In addition there's a 50 us "refresh" period. I haven't experimented with exactly when this is needed, but I stuck one in between each update and haven't noticed any problems. That gives us a minimum of about 7.13 ms per update, or about 140 Hz. That's still faster than a typical 60 Hz display refresh, so the string layout and control shouldn't be the limiting factor.
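Here is a small sketch of the timing arithmetic, counting all 24 bits for every LED in a chain. The 1.25 us symbol time and 50 us gap are the nominal values quoted above; the function names are illustrative only.

```python
SYMBOL_US = 1.25    # nominal time per transmitted bit
RESET_US = 50.0     # idle gap between updates
BITS_PER_LED = 24


def frame_time_us(leds_in_chain: int) -> float:
    """Time to shift one full update through a chain, including the gap."""
    return leds_in_chain * BITS_PER_LED * SYMBOL_US + RESET_US


def max_refresh_hz(leds_in_chain: int) -> float:
    """Upper bound on update rate for a chain of the given length."""
    return 1e6 / frame_time_us(leds_in_chain)


if __name__ == "__main__":
    print(frame_time_us(236), "us per update")
    print(round(max_refresh_hz(236), 1), "Hz")
```

Because every chain is driven in parallel, the longest chain alone sets this bound; adding more chains of the same length does not slow the update.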

Critical parameters:

- 118 LEDs per row and 46 rows

Power

With each LED consuming about 35 mA at max (measured by lighting a full 150-LED string at maximum brightness and measuring the current) and with 5428 LEDs, I have a maximum current consumption of... about 190 A. As an electrical engineer by trade, I recognize that that's a lot of current, at least for me. Even at 5 V, that's more than enough to start a fire if I'm not careful, and it's certainly enough to melt power distribution cables if I use cabling that's too thin. Getting power to this array without burning down the house was my next major concern.

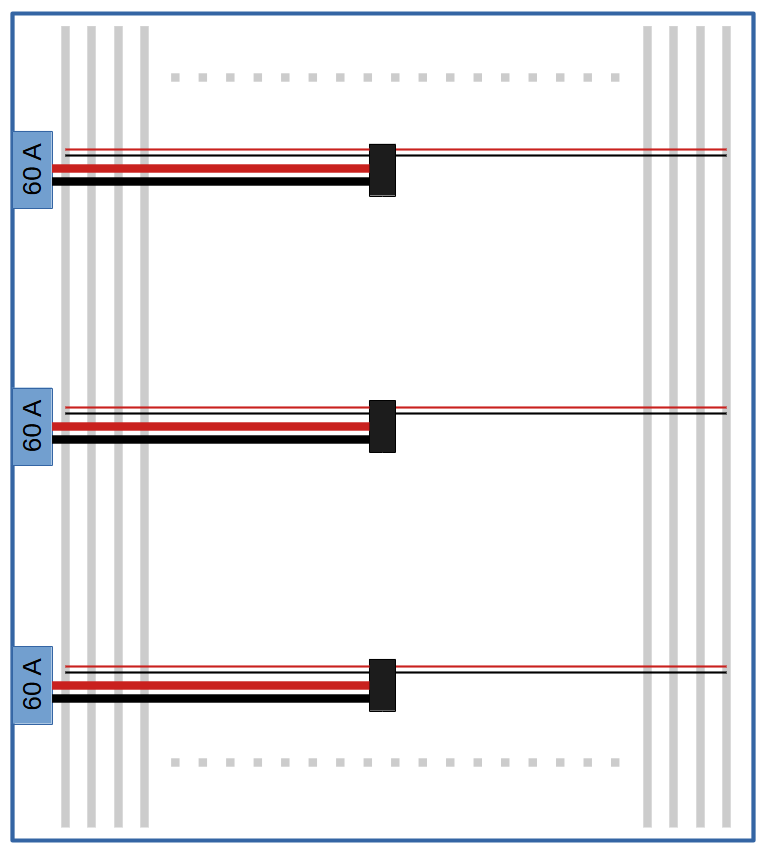

I started by determining how I was going to source all this current. The LRS-350-5 from Mean Well (sourced from DigiKey) seemed to be the sweet spot in terms of current capability versus price. Each of these supplies provides up to 60 A of current. Technically the full display at peak brightness could pull a total of 190 A, but I have no intention of ever running the entire array at full brightness. It's blinding! I felt that getting 3 supplies each providing 60 A gave me the power I needed with margin for power distribution loss.
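The current budget can be sketched as below. The 35 mA figure is the measured per-LED value from above. Scaling current linearly with brightness is an assumption made only for this estimate, and the function names are hypothetical.

```python
LED_COUNT = 118 * 46          # 5428 LEDs in the full array
MEASURED_A_PER_LED = 0.035    # measured at full brightness on one string
SUPPLY_A = 60.0               # rated output of one LRS-350-5
SUPPLY_COUNT = 3


def worst_case_current_a() -> float:
    """Current drawn if every LED runs at full brightness."""
    return LED_COUNT * MEASURED_A_PER_LED


def max_brightness_fraction() -> float:
    """Fraction of full brightness the supplies can cover.

    Assumes current scales linearly with brightness, which is only
    an approximation.
    """
    return min(1.0, SUPPLY_COUNT * SUPPLY_A / worst_case_current_a())


if __name__ == "__main__":
    print(round(worst_case_current_a(), 1), "A worst case")
    print(round(max_brightness_fraction() * 100), "% of full brightness")
```

Under these assumptions the three supplies cover roughly 95% of the all-white worst case, before accounting for any distribution loss.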

Next I had to figure out how to get that power from moderately chunky power supplies to the entire ceiling with tolerable voltage drop (and again, without even a chance of burning down the house). My original plan was to locate all three supplies together on one wall, wire them up in parallel, and then run whatever cable would sustain 180 A without overheating to the center of the array and then fan out. That idea wasn't very good for a couple of reasons:

- These supplies do have an adjustment potentiometer, but are not designed to run in parallel. They have no sync capabilities at all so hooking them up in parallel is unlikely to work as expected.

- The amount of copper I'd need to run 190 A half the length of the room was cost prohibitive.

Instead of trying to keep everything neat and uncluttered, I decided to try to minimize the cable length for power distribution so I could reduce my overall cost. As I had three supplies, I decided to install these at regular intervals along the 4 m wall. I then ran one cable from each supply to its own distribution point on the ceiling, so each cable only needs to carry up to 60 A and there are three distribution points in total.

The smaller cables illustrated above are actually two tiers: one set of cables that carries power along the horizontal run, and four sets of even smaller cables that branch off and attach to the strings. This ensures more balanced power distribution without the voltage dropping off too much towards the edge of the room.

The larger cables would need to carry up to 60 A half the width of the room, or about 1.75 m. I set a limit of the total amount of power I was willing to dissipate in the power distribution network of about 50 W; I figured that much heat would be easy to dissipate over these distances. My calculations showed that 12 or even 14 AWG would have done the job, but I bumped up to 10 AWG just to be super sure (and to avoid wasting power as this would potentially be on for long periods of time).
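A rough way to sanity-check a gauge choice like this is to estimate the resistive loss from the standard AWG diameter formula and the resistivity of copper. This is a sketch and not the original calculation: it assumes room-temperature annealed copper, counts both the supply and return conductors, and assumes the full 60 A flows continuously, so it is a pessimistic bound. The function names are illustrative.

```python
import math

COPPER_RESISTIVITY = 1.724e-8  # ohm*m, annealed copper near 20 C


def awg_diameter_m(awg: int) -> float:
    """Conductor diameter from the standard AWG definition."""
    return 0.127e-3 * 92 ** ((36 - awg) / 39)


def resistance_per_m(awg: int) -> float:
    """Resistance of one meter of solid copper conductor, in ohms."""
    area = math.pi / 4 * awg_diameter_m(awg) ** 2
    return COPPER_RESISTIVITY / area


def loss_w(awg: int, one_way_m: float, current_a: float) -> float:
    """I^2 * R loss over the run, counting supply and return conductors."""
    return current_a ** 2 * resistance_per_m(awg) * 2 * one_way_m


if __name__ == "__main__":
    for gauge in (10, 12, 14):
        print(gauge, "AWG:", round(loss_w(gauge, 1.75, 60.0), 1), "W")
```

The result is very sensitive to the assumed current, since loss grows with the square of it. Running the array well below full brightness reduces the dissipation sharply.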

The smaller cables would only need to carry at most 30 A, but each time the smallest set of cables branched off of these, the required current would drop by 7.5 A. The first set of the smallest cables branches off only a few inches away from the center point, so these cables are only carrying 30 A for a very short distance. I ended up going with 14 AWG and 22 AWG for the horizontal runs and for the smallest "last-mile" cables, respectively.

To connect to the actual strips, I used 3-pin right-angle SMD headers. This made it easy to install the LED strips and power harness separately since I could plug and unplug each strip independently.

Critical parameters:

- 3 sets of cables

- 10 AWG from the supply to the center of the ceiling

- 14 AWG horizontal runs

- 22 AWG from the horizontal run to the LED strip itself

FPGA Control

The FPGA implements the serial protocol to shift out the color data to each string in parallel. Currently, the entire frame buffer must be written to the FIFO before shifting begins. This makes things a little simpler because I don't have to deal with how to handle data underflows. The datasheets for these LEDs are... sparse, to say the least, and I didn't want to have to do all of the investigation to determine how the LEDs handle an idle data signal.

TI's GPMC bus is the primary interface between the ARM Cortex-A8 on the BeagleBone and the iCE40 FPGA on the BeagleWire. The GPMC bus does not have a free-running clock. Instead, the clock is only active while transactions are occurring. I implemented all of the memory-mapped functionality on the GPMC clock domain, which works well for everything but the FIFO. My FIFO implementation takes an additional clock cycle to flush the write pointer to the read side of the FIFO, meaning that at the end of a burst write to the FIFO, the FIFO fill count is incorrect unless we get an extra clock cycle. This could be solved in a variety of ways, but I'm deferring that work for a rainy day. For now I simply read any register on the FPGA when the DMA transfer is complete to give that logic a few more clock cycles. This has minimal impact on performance from what I've seen so far.

Kernel Work

I wanted to use the DMA capabilities of the AM335x on the BeagleBone to move data to the FPGA. Fortunately, the DMA engine already has drivers present in the existing pre-built kernel and provides access using the DMAEngine API. I'm not an experienced kernel developer, but this made it easy enough for me to get DMA working in a custom kernel module pretty quickly.

The kernel module that supports all of this circuitry does several things:

- It allocates the frame buffer from kernel memory and maps it for DMA access.

- It calls the DMAEngine API to DMA the data from that buffer to the fixed memory location where the FPGA is mapped (based on the GPMC settings in the device tree).

- It creates a character device "ledfb" that can be mmap()ed to map the DMA buffer into userspace and implements an ioctl that userspace can call to DMA the buffer to the FPGA.

In addition to the custom kernel module, I needed to adjust the GPMC settings in the BeagleWire device tree overlay. The BeagleWire device tree overlays can be found in bb.org-overlays or under the BeagleWire project in BeagleBoard-DeviceTrees. I recommend using the bb.org-overlays project as a starting point as it's been maintained more actively.

Of particular note, the onboard eMMC on the BeagleBone Black conflicts with some of the GPMC lines. To use the GPMC bus, the onboard eMMC has to be disabled by adding `disable_uboot_overlay_emmc=1` to /boot/uEnv.txt. Without this step, the GPMC driver will unfortunately still load, but operations will not work correctly because the pins are in the wrong mode.

Application Software

Initially I prototyped and tested the kernel interface using Python. The mmap library gave me access to the framebuffer using the OS's mmap, and numpy's frombuffer provided relatively efficient access to the packed data. This worked well enough for prototyping, but was too slow for rendering everything I wanted. I did manage to use ImageMagick's Wand library for much faster rendering, but ultimately I ended up rewriting the application in Rust for native speed.
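A minimal sketch of what such a prototype might look like, using only the standard library, is shown below. Several details are assumptions and not the real interface: the ioctl request number `LEDFB_IOC_FLUSH` is a placeholder, the device path `/dev/ledfb` is guessed from the device name, and the buffer layout (three packed bytes per LED in G, R, B order) is hypothetical. The real values are defined by the kernel module and the FPGA design.

```python
import fcntl
import mmap
import os

ROWS, COLS, BYTES_PER_LED = 46, 118, 3
FRAME_BYTES = ROWS * COLS * BYTES_PER_LED

# Placeholder request number; the real one comes from the kernel module.
LEDFB_IOC_FLUSH = 0x4C00


def fill_solid(buf, green: int, red: int, blue: int) -> None:
    """Write one color to every LED, assuming packed G, R, B bytes."""
    buf[:FRAME_BYTES] = bytes((green, red, blue)) * (ROWS * COLS)


def show_solid(path: str = "/dev/ledfb", color=(0, 16, 0)) -> None:
    """Map the DMA buffer, fill it, and ask the kernel to send it."""
    fd = os.open(path, os.O_RDWR)
    try:
        # Round the mapping up to a whole number of pages.
        pages = -(-FRAME_BYTES // mmap.PAGESIZE)
        with mmap.mmap(fd, pages * mmap.PAGESIZE) as buf:
            fill_solid(buf, *color)
            fcntl.ioctl(fd, LEDFB_IOC_FLUSH)
    finally:
        os.close(fd)
```

Keeping `fill_solid` separate from the device handling means the rendering code can be exercised against an ordinary `bytearray` on a machine without the hardware.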

Right now the application is fairly minimal, supporting only a few basic visualizations and test patterns. In the future I plan to implement a sort of digital modular synth with various DSP and linear algebra operations to produce the framebuffer. From there I'll be adding RPC to support controlling the whole thing from a GUI front panel. My whole goal is to be able to enjoy the visualizations on the ceiling without having to log in to a computer and run commands, or even needing to be technical at all.

Dane Wagner